2. 福州墨尔本理工职业学院 信息工程系,福建 福州 350121;

3. 福建农业职业技术学院 教学科研处,福建 福州 350181;

4. 福建工程学院 信息科学与工程学院,福建 福州 350118;

5. 福州墨尔本理工职业学院 现代教育技术中心,福建 福州 350121

2. Department of Information Engineering, Fuzhou Melbourne Polytechnic, Fuzhou 350121, China;

3. Teaching and Research Division,, Fujian Vocational College of Agriculture, Fuzhou 350181, China;

4. Information Science and Engineering College, Fujian University of Technology, Fuzhou 350118, China;

5. Modern Education Technical Center, Fuzhou Melbourne Polytechnic, Fuzhou 350121, China

知识库(knowledge base, KB)[1]以三元组的形式编码事实,三元组由实体和关系组成。主流知识库(如NELL[2]、YAGO[3]、Freebase[4]等)在语义搜索[5]和问题解答[6]等领域[7]应用广泛。

然而,现有知识库缺失大量事实,即三元组不完整,缺少实体或关系[8]。知识图谱补全(knowledge graph completion, KGC)[9]旨在解决该问题,通过提取局部模式或语义特征,用已知信息生成新的有效事实[10-11],许多学者对KGC的核心概念、关键问题、主流技术和未来方向进行了分析、总结和展望[12-13]。模式提取借助贝叶斯扩展或张量/矩阵分解[14]增强表达能力,但往往忽略了路径携带的语义信息,经典模型如RESCAL[15]、TransE[16]、DistMult[17]和ConvE[18]。上述模型大多忽略路径携带的语义信息。

在知识推理中,实体对间的多条关系路径所携带的语义信息有助于判定三元组的有效性[19-20]。Neelakantan等[21]和Das等[22]使用循环神经网络(recurrent neural networks, RNN)进行关系路径嵌入以减小计算开销。由于常规RNN无法学习到长序列的语义依赖,Hochreiter等[23]提出了LSTM(long short-term memory),引入门控结构计算遗忘和更新的信息。Xu等[24]将注意力机制引入图像物体识别;目前该机制已应用到机器翻译和知识补全。Xiong等[25]结合嵌入模型和路径模型的优点,使用强化学习框架,在TransE的基础上将智能体编码至连续空间中,通过最优关系采样和路径扩展进行推理,同时设计了自定义的奖励函数, 兼顾局部模式提取和语义关联识别[26-28]。

本文设计了PKICLA方案(path-based knowledge inference with CNN, LSTM and attention mechanism),结合卷积神经网络(convolutional neural networks, CNN)和双向LSTM实现基于关系路径嵌入的局部特征提取和向量序列合并,同时借助注意力机制实现多路径权重分配,集成关系语义评分,在NELL995和FB15k-237数据集上进行链路预测,比较PKICLA与其他主流模型的性能。

1 相关研究 1.1 嵌入模型KGC中,嵌入模型的基本思路是学习节点和关系的低维矢量表示,保留原有结构信息和知识约束,如TransE将关系映射为平移向量,认为若三元组成立,则平移后的头部向量应靠近尾部向量,即

近年来,在自然语言处理(natural language processing, NLP)领域,最初用于计算机视觉的CNN大放光彩[31],其参数规模和计算开销远少于全连接神经网络。ConvE在ComplEx[32]的基础上引入CNN,将

上述模型大多只考虑直接关联,忽略了关系路径蕴含的语义信息[34-35]。Zhang等[36]认为,在复杂现实场景中进行推理,集成关系路径的丰富语义信息很有必要;Xiong等[37]则认为知识库的持续动态增长和稀疏性决定了few-shot、one-shot甚至是zero-shot的推理需求,而语义信息等辅助知识有助于实现这类推理。Lao等[19-20]验证了关系路径对知识补全的辅助作用:使用深度优先的随机游走算法生成路径,使用逻辑回归或决策树等二分类方法训练并预测链路。关系路径后续也有许多改进研究[38-39],如Das等[40]提出MINERVA方案,在知识图遍历中使用历史路径信息,Lin等[41]在其基础上改进了奖励函数。此外,Lin等[42]和Luo等[43]将关系路径与TransE结合,进一步提升知识表达能力。然而,多数相关研究将路径视为原子性特征,导致特征矩阵的规模庞大,计算开销高[44-45]。

1.4 融合模型RNN原本用于处理序列数据,在语音识别、NLP和连续图像处理等领域取得成功,因此Neelakantan等[21]提出Path-RNN,将路径分解为关系序列,用作RNN的输入,通过层内的参数共享降低计算开销,选择得分最高的路径(Max运算)以补全缺失三元组。然而,单一路径可能无法提供足够的语义参照,因此Das等[22]使用Mean和LogSumExp等指标集成多路径信息,但忽略了不同路径与候选关系的语义关联程度存在差异。

由于常规RNN存在梯度消失问题,难以学习到长距离的语义依赖关系,因此LSTM模型[23]引入门控结构计算遗忘和更新的信息,后续产生了许多变种[46]。

近来用于调整资源分配的注意力机制也在NLP领域得到应用[47],Bahdanau等[48]和Vaswani等[49]将之用于机器翻译的解码器设计;Jiang等[27]提出了基于注意力机制的知识推理方案,根据路径的语义匹配程度为其分配不同的权重。Nathani等[34]使用注意力机制提取知识图中的近邻信息,用于发现近似关系簇,以及同一实体的角色差异。

Wang等[50]和Zhang等[36]认为,长距离的多跳推理有助于发掘实体关联,从而提高知识推理模型在现实场景中的性能,但注意力机制在长序列上的分配机制有待优化,有研究尝试集成上述框架以取长补短,Zhou等[26]提出Att-BLSTM用于关系分类,词级嵌入后使用双向LSTM[51]合并句级信息并结合注意力机制评分;Chiu等[52]使用LSTM和CNN的混合模型识别命名实体,降低特征工程的计算量。

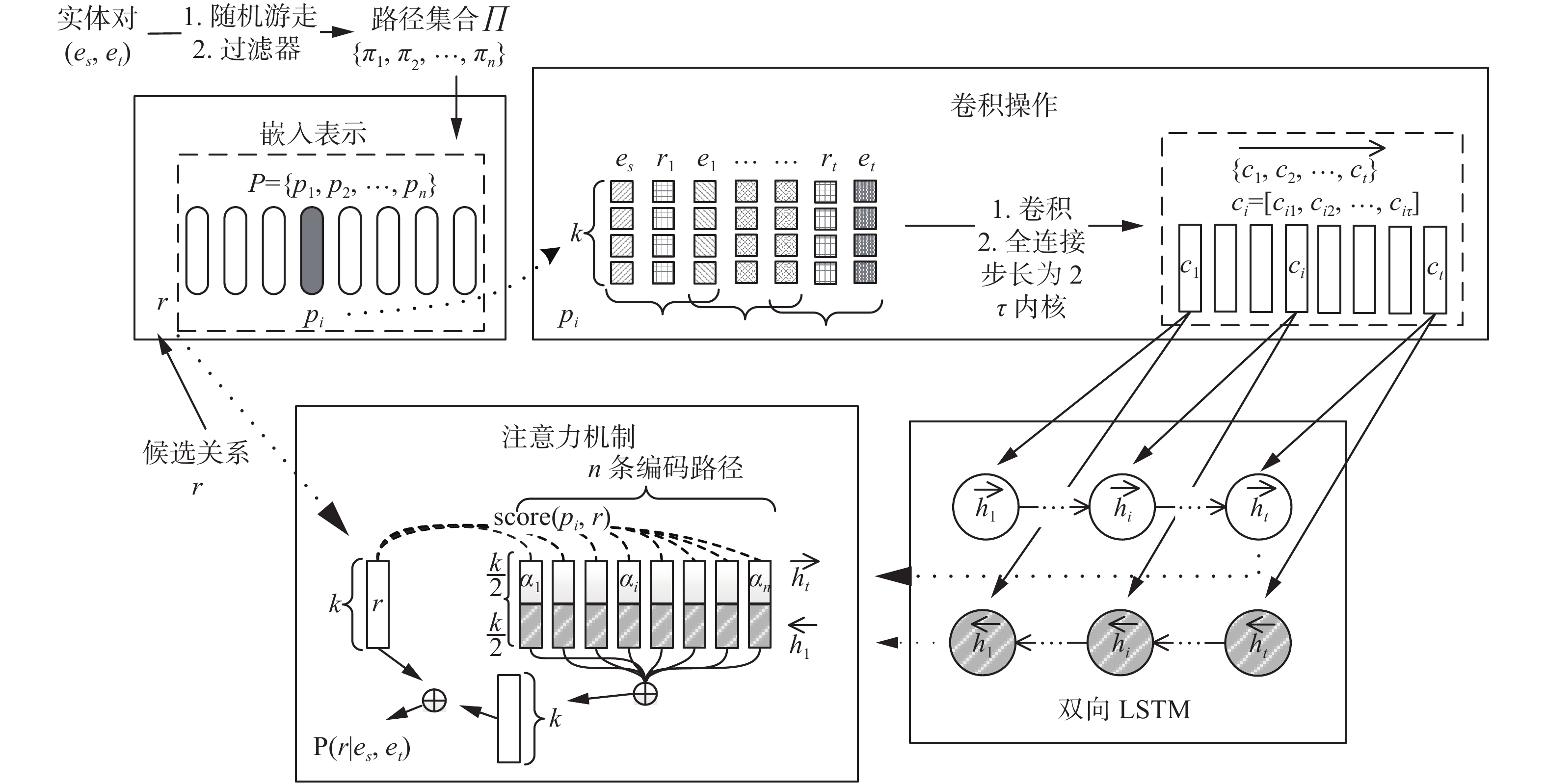

由于基于嵌入特征提取的模型和基于关系路径语义的模型各有优点,因此本文在前人工作基础上将嵌入表示与语义提取结合,提出PKICLA模型,首先使用自定义的CNN框架编码完整路径;其次将前、后向LSTM的隐藏状态拼接,合并关系序列特征,实体对的多条路径相当于在多个整句级别上并行映射;最后使用基于注意力机制的方法集成不同路径与候选关系的语义关联信息,计算关系与实体对的概率得分,用于判定三元组是否成立。

2 PKICLAPKICLA模型框架如图1所示。在给定实体对和候选关系的前提下,利用CNN将通过随机游走得到的实体间多条路径分别依据其关系序列编码为低维表示,将变长路径映射到定长的向量序列,保留其局部结构;使用双向LSTM将路径的特征序列合并为单一向量,减少计算开销;由于不同路径与候选关系的语义关联程度不同,结合注意力机制计算各路径的相关性并分配权重,加权计算关系的状态向量,通过该关系与相应实体对的概率得分判定三元组是否有效。

|

Download:

|

| 图 1 PKICLA模型框架 Fig. 1 Model framework of PKICLA | |

给定KG包括实体集E和关系集R。三元组

本文使用实体类型对应的向量表示[22],进一步减小参数规模,同时解决测试集中部分实体在训练集中未出现的问题。将头/尾实体对和候选关系通过嵌入矩阵转化为

常规RNN存在梯度消失问题,难以学习长序列的语义信息;Zhou等[26]使用双向LSTM(BLSTM)并通过peephole connections查看当前神经节点/细胞的状态,增加CEC(constant error carousel)到各门的双向关联;Lu等[53]使用的双向GRU(bidirectional gated recurrent unit)则通过类似耦合门控的设计简化了细胞结构和参数规模,保留了近似性能[54];其中重置门

将卷积层输出序列的每个向量视作LSTM中的一个时间步,每个时间步将一个

主流PRA常使用Max或Mean运算,忽略了不同路径提供的推理证据存在差异,因此本文使用Bahdanau等[48]提出的基于累加性注意力机制(additive attention)的路径信息集成,该方法对于不同区间数值的适应能力优于简单的点积计算语义相关度得分[22, 49]。将候选关系的向量表示

| $ {\rm{s}}{\rm{c}}{\rm{o}}{\rm{r}}{\rm{e}}\left({\boldsymbol{p}}_{\boldsymbol{i}},{\boldsymbol{r}}\right)={\rm{t}}{\rm{a}}{\rm{n}}{\rm{h}}\left({\boldsymbol{p}}_{\boldsymbol{i}}{\boldsymbol{W}}_{\boldsymbol{s}}\right){\boldsymbol{r}} $ | (1) |

| $ {\alpha }_{i}=\frac{{\rm{e}}{\rm{x}}{\rm{p}}\left({\rm{s}}{\rm{c}}{\rm{o}}{\rm{r}}{\rm{e}}\left({\boldsymbol{p}}_{\boldsymbol{i}},{\boldsymbol{r}}\right)\right)}{ \displaystyle\sum _{i=1}^{n}{\rm{e}}{\rm{x}}{\rm{p}}\left({\rm{s}}{\rm{c}}{\rm{o}}{\rm{r}}{\rm{e}}\left({\boldsymbol{p}}_{\boldsymbol{i}},{\boldsymbol{r}}\right)\right)} $ | (2) |

| $ {\boldsymbol{c}}=\sum_{i=1}^{n}{\alpha }_{i}{\boldsymbol{p}}_{\boldsymbol{i}} $ | (3) |

| $ {{P}}\left({\boldsymbol{r}}|{\boldsymbol{e}}_{\boldsymbol{s}},{\boldsymbol{e}}_{\boldsymbol{t}}\right)=f\left({\boldsymbol{W}}_{\boldsymbol{p}}\right({\boldsymbol{c}}+{\boldsymbol{r}}\left)\right) $ | (4) |

式中:

本文使用Adam优化器[55]训练PKICLA以优化结果,损失函数定义如式(5)所示:

| $ \begin{array}{l} \quad\quad\quad\quad\quad\quad\quad\quad L\left(\Theta \right)= -\dfrac{1}{N}\\ \left( \displaystyle\sum _{\left({\boldsymbol{e}}_{\boldsymbol{s}},{\boldsymbol{r}},{\boldsymbol{e}}_{\boldsymbol{t}}\right)\in {T}^+}{\rm{log}}{P}\left({\boldsymbol{r}}|{\boldsymbol{e}}_{\boldsymbol{s}},{\boldsymbol{e}}_{\boldsymbol{t}}\right)+ \displaystyle\sum _{\left({\hat{\boldsymbol{e}}_{\boldsymbol{s}},}{\hat{\boldsymbol{r}}},{\hat{\boldsymbol{e}}_{\boldsymbol{t}}}\right)\in {T}^-}{\rm{log}}(1-{{P}}\left(\hat{\boldsymbol{r}}|{\hat{{\boldsymbol{e}}}_{\boldsymbol{s}}},{\hat{{\boldsymbol{e}}}_{\boldsymbol{t}}}\right))\right)+\\ \quad\quad\quad\quad\quad\quad\quad\quad\quad{\rm{\lambda }}{\left|\right|\Theta \left|\right|}_{2}^{2} \end{array}$ | (5) |

目标函数中

链路预测从已知信息中推理新的事实,用于知识补全,方法是计算给定头/尾实体与特定关系连接的概率得分,判定三元组是否有效。度量指标通常为原始正确实体在所有候选项中的排序,如:查询(Joe Biden, isPresidentOf,?),期望实验结果中,“the U.S.”或“America”应得分较高,或排序较前。

实验使用平均精度均值(mean average precision, MAP)、正确三元组的平均倒数排名(mean reciprocal rank, MRR)、Hits@1(排名在第1位的有效实体的比例)、Hits@3(取前3位)、Hits@5(取前5位)和F1等指标进行评估。MAP取头/尾实体预测排序的均值;Hits@1和Hits@3将头/尾预测视为同一任务,合并计算hit rate。

3.2 数据集本文在FB15k-237和NELL995这两个常规数据集,FC17大型数据集(模拟现实复杂场景)[27, 36]和NELL-One稀疏数据集[37]上进行链路预测,其统计信息如表1所示。从NELL995中删除无推理价值的generalizations和haswikipediaurl关系,保留频率最高的Top 200关系的三元组。Toutanova等[39]在FB15k基础上删除可逆三元组,生成FB15k-237,防止算法高分漏洞。FC17的主要数据来自Freebase,同时集成了ClueWeb中对应实体链接;选择其中频率最高的46个关系用于实验。NELL-One是NELL数据集中三元组实例数

| 表 1 数据集统计信息 Tab.1 Dataset Statistics |

对于上述数据集中的每一个关系,确保Train Set和Dev. Set/Test Set中无重复三元组(含反向)。将无对应关系路径的实体对删除。将路径长度限制设定为4,相应的元素个数最多为9(含中间实体,含填充)。将Random Walk的概率阈值设置为0.1。

参考Bordes等[16]的实验,使用Bernoulli方法[29]随机替换实体创建无效三元组,具体如下:给定有效三元组

实验中用于比较的基准模型包括:

1)DistMult[17] (2015),使用对角矩阵表示目标关系;

2)DeepPath[25] (2017),基于TransE[16]的强化学习(reinforcement learning, RL)框架;

3)Single-Model[22] (2017),使用RNN处理关系序列,本文采用原方案推荐的LogSumExp;

4)Att-Model+Type[27] (2017),可视作基于注意力机制的Single-Model,本文重新实现;

5)ConvE[18] (2018),使用2维卷积处理实体/关系嵌入;

6)G-GAT[34](2019),使用注意力机制提取近邻特征,该模型侧重复杂数据集上的关系预测;

7)M-walk[28](2018),同样使用RL和RNN框架,结合随机抽样搜索路径空间;

8)GMH[36] (2020),多跳知识推理框架,结合局部特征和图结构整体特征,针对复杂现实场景设计;该模型在FC17数据集上进行预测;

9)Gmatching[37](2018),针对few-shot的近似度量推理框架。

GMH使用ConvE作为预训练模型,距离上限设置为6时性能最优;其他基准模型均使用原方案的最优性能建议参数。

实验在Dev. Set上验证,若最后10轮(epoch)对应准确率提升<10−2则停止训练并确定最优参数。超参数池设置如下:minibatch size=64,学习率

常规数据集上实验结果如表2所示,最优表现设置为粗体,次优设置为斜体+下划线。PKICLA相比Single-Model和Att-Model+Type这两个相似模型有一定的提升,在较大数据集,特别是关系类型分布相比NELL995复杂得多的FB15k-237数据集上,优势明显;在NELL995上,由于部分实体对的路径较少,基于关系路径语义的模型的Hits@1和Hits@3得分受到影响,但PKICLA的表现仍较稳定。基于双线性乘法运算的DistMult模型擅长提取实体相似性特征,尽管没有考虑路径语义,在两个数据集上的MRR得分都较高。在稠密数据集NELL995上,DeepPath结合强化学习的路径扩展有效弥补了平移模型表达能力不足的缺陷,各项指标表现较稳定。ConvE在NELL995上的表现出色,但在FB15k-237上性能下滑,可能是因为忽略平移特性导致部分全局特征丢失。G-GAT针对复杂数据集进行设计,性能表现整体优于DeepPath,略逊于同样结合注意力机制的Att-Model+Type,可见多跳路径能提供比单跳近邻更多的语义信息。M-Walk在NELL995数据集上取得了最高的hits@1得分;但模型受到无效路径的干扰,在FB15k-237数据集上性能不算突出。G-GAT 原文没有给出具体超参设置,本文实现与原文结果差异较大,因此引用原文在 FB15k-237 上的实验数据。

| 表 2 NELL995和FB15k-237上的性能比较 Tab.2 Performance comparison on NELL995 and FB15k-237 |

进一步选取部分整体表现较好的模型,比较其在NELL995数据集不同任务/关系上的MAP得分,如图2所示。DeepPath仅考虑了局部特征,Single-Model则缺少对不同语义关联的关系路径的权重分配,PKICLA弥补了这两种模型的不足,在10种主要关系上的表现都有所提升。相比Att-Model+Type,PKICLA在7种关系上也具有优势,特别在athletePlaysForTeam和bornLocation复杂关系上,PKICLA有较明显提升(约2.7%),说明卷积特征提取+双向LSTM的路径合并有助于提取局部模式。

|

Download:

|

| 图 2 NELL995不同关系上的MAP得分 Fig. 2 Comparison of MAP scores on various relations of NELL995 | |

FC17数据集上的实验结果如表3所示,相比Att-Model+Type,GMH结合了图结构的整体特征,一定程度上缓解了长距离推理带来的无效关系偏离效应;但对循环次数依赖较重。在3个指标上,PKICLA都取得了最高分,但PKICLA的路径长度是手动设置的,而GMH框架可自适应的调整,在路径长度可变的推理任务中可能表现较好。

| 表 3 FC17上的性能比较 Tab.3 Performance comparison on FC17 |

NELL-One数据集上的实验结果如表4所示,在没有应用Gmatching框架时,PKICLA的few-shot预测能力明显强于TransE和DistMult。应用框架后,3个模型的性能都有所上升,PKICLA的性能仍然是最优,但相对TransE(94.0%)和DistMult(65.7%),PKICLA的提升较小(6.2%)。

此外,本文比较了不同实体类型覆盖率、不同路径长度和不同LSTM模型对PKICLA在NELL995上性能表现的影响,如表5所示。数据集中绝大多数实体携带类型信息,反之实验中使用实体自身的嵌入表达,因此比较不同实体类型覆盖率对模型性能的影响,发现差异极小;当覆盖率较低时,性能有轻微下降,因为测试集中含有训练集中未出现的实体。当路径长度设置为4时,性能表现有一定上升,可能是因为阈值较小时,测试数据中部分实体对无法生成足够的路径;但差异不大,说明短路径提供了大部分的推理信息。与实体类型覆盖率类似,不同LSTM模型对性能造成的影响微弱。

| 表 4 NELL-One上的性能比较 Tab.4 Performance comparison on NELL-One |

| 表 5 NELL995数据集上不同实体类型覆盖率、路径长度和LSTM模型的比较 Tab.5 Comparison between different coverages, path lengths, and LSTM models on NELL995 |

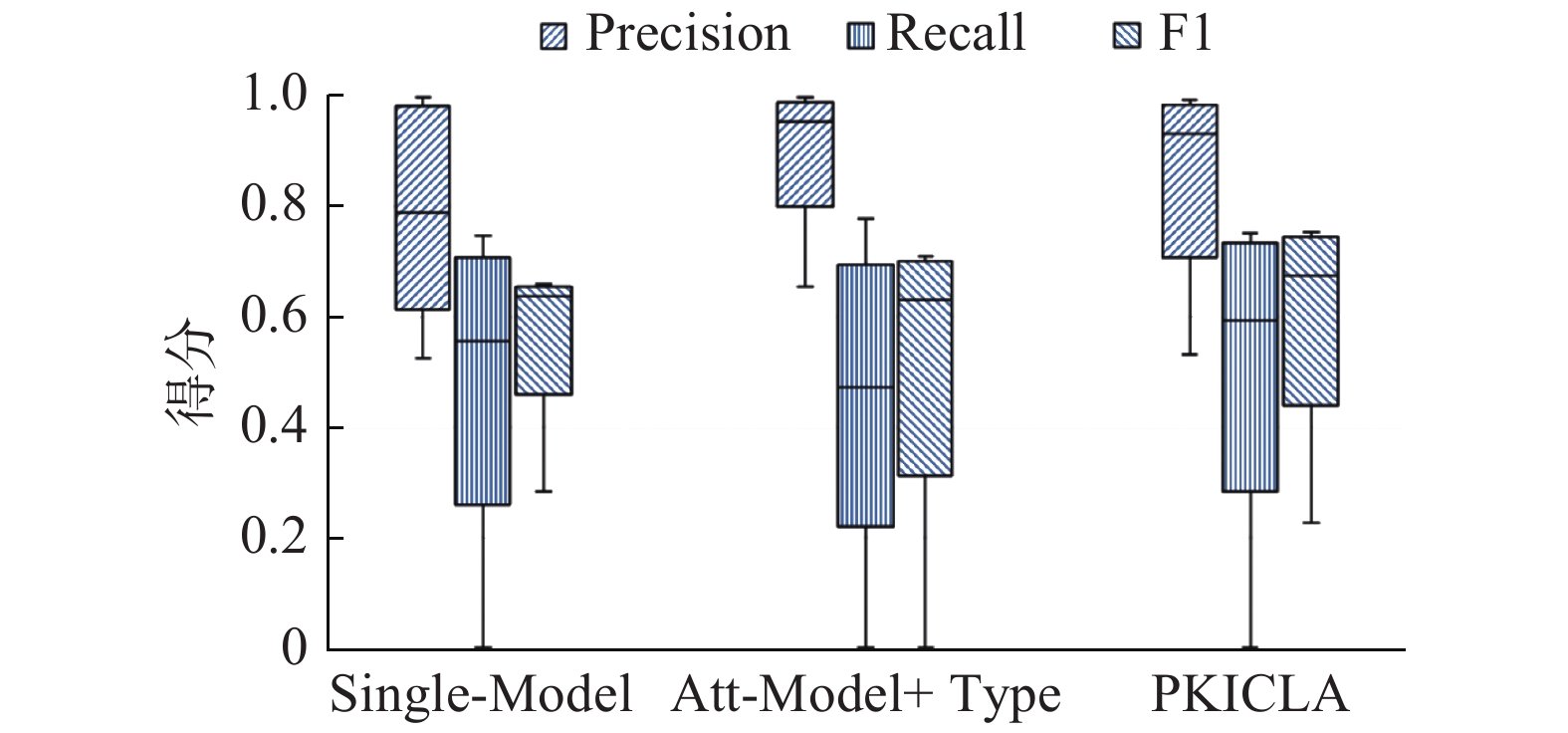

最后,选取表现较好的Single-Model和Att-Model+Type模型为基准,比较其与PKICLA在NELL995上的Precision、Recall和F1得分,结果如图3、图4所示,PKICLA较为平衡,F1得分高于另外两个模型;随着Recall率增长,Precision下滑也较平缓,说明基于注意力机制的语义集成能更好地匹配候选关系,以及卷积操作在提取局部特征上的优势。

|

Download:

|

| 图 3 NELL995上的Precision/Recall/F1比较 Fig. 3 Comparison of Precision/Recall/F1 on NELL995 | |

|

Download:

|

| 图 4 NELL995上的Precision-Recall曲线比较 Fig. 4 Comparison of Precision-Recall Curve on NELL995 | |

本文通过自定义的CNN框架和双向LSTM提取三元组局部特征,合并关系序列为单一向量,并使用基于注意力机制的方法集成多条路径的语义信息,用于计算候选三元组的概率得分。链路预测结果证明本文模型可在常规和大型数据集上进行知识推理,复杂关系的学习能力较强,Precision、Recall和F1指标的整体表现也高于主流模型。PKICLA亦可用于few-shot的推理任务,但在无法生成足够路径的数据集上仍有提升空间,因此未来工作考虑引入强化学习框架、带置信度的规则体系、知识层次结构或多源信息融合模型以扩大方案的适用范围。此外,本文使用单一实体类型进行嵌入表达,但实体往往具有多类型[22],因此计划优化嵌入方案。最后,为适应现实场景任务,针对知识的不确定性建模[56],以及重塑特征维度以优化信息提取也是工作方向。

| [1] |

LEHMANN J, ISELE R, JAKOB M, et al. DBpedia–a large-scale, multilingual knowledge base extracted from Wikipedia[J]. Semantic web, 2015, 6(2): 167-195. DOI:10.3233/SW-140134 ( 0) 0)

|

| [2] |

MITCHELL T, COHEN W, HRUSCHKA E, et al. Never-ending learning[J]. Communications of the ACM, 2018, 61(5): 103-115. DOI:10.1145/3191513 ( 0) 0)

|

| [3] |

REBELE T, SUCHANEK F, HOFFART J, et al. YAGO: a multilingual knowledge base from Wikipedia, Wordnet, and Geonames[C]//Proceedings of the 15th International Semantic Web Conference on the Semantic Web. Kobe, Japan, 2016: 177−185.

( 0) 0)

|

| [4] |

BOLLACKER K, EVANS C, PARITOSH P, et al. Freebase: a collaboratively created graph database for structuring human knowledge[C]//Proceedings of 2008 ACM SIGMOD International Conference on Management of Data. Vancouver, Canada, 2008: 1247−1250.

( 0) 0)

|

| [5] |

XIONG Chenyan, POWER R, CALLAN J. Explicit semantic ranking for academic search via knowledge graph embedding[C]//Proceedings of the 26th International Conference on World Wide Web. Perth, Australia, 2017: 1271−1279.

( 0) 0)

|

| [6] |

HAO Yanchao, ZHANG Yuanzhe, LIU Kang, et al. An end-to-end model for question answering over knowledge base with cross-attention combining global knowledge[C]//Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics. Vancouver, Canada, 2017: 221−231.

( 0) 0)

|

| [7] |

刘知远, 孙茂松, 林衍凯, 等. 知识表示学习研究进展[J]. 计算机研究与发展, 2016, 53(2): 247-261. LIU Zhiyuan, SUN Maosong, LIN Yankai, et al. Knowledge representation learning: a review[J]. Computer research and development, 2016, 53(2): 247-261. DOI:10.7544/issn1000-1239.2016.20160020 (  0) 0)

|

| [8] |

WEST R, GABRILOVICH E, MURPHY K, et al. Knowledge base completion via search-based question answering[C]//Proceedings of the 23rd International Conference on World Wide Web. Seoul, Korea, 2014: 515−526.

( 0) 0)

|

| [9] |

刘峤, 李杨, 段宏, 等. 知识图谱构建技术综述[J]. 计算机研究与发展, 2016, 53(3): 582-600. LIU Qiao, LI Yang, DUAN Hong, et al. Knowledge graph construction techniques[J]. Journal of computer research and development, 2016, 53(3): 582-600. DOI:10.7544/issn1000-1239.2016.20148228 (  0) 0)

|

| [10] |

徐增林, 盛泳潘, 贺丽荣, 等. 知识图谱技术综述[J]. 电子科技大学学报, 2016, 45(4): 589-606. XU Zenglin, SHENG Yongpan, HE Lirong, et al. Review on knowledge graph techniques[J]. Journal of University of Electronic Science and Technology of China, 2016, 45(4): 589-606. DOI:10.3969/j.issn.1001-0548.2016.04.012 (  0) 0)

|

| [11] |

CHEN Xiaojun, JIA Shengbin, XIANG Yang. A review: knowledge reasoning over knowledge graph[J]. Expert systems with applications, 2020, 141: 112948. DOI:10.1016/j.eswa.2019.112948 ( 0) 0)

|

| [12] |

官赛萍, 靳小龙, 贾岩涛, 等. 面向知识图谱的知识推理研究进展[J]. 软件学报, 2018, 29(10): 2966-2994. GUAN Saiping, JIN Xiaolong, JIA Yantao, et al. Knowledge reasoning over knowledge graph: a survey[J]. Journal of software, 2018, 29(10): 2966-2994. (  0) 0)

|

| [13] |

CAI Hongyun, ZHENG V W, CHANG K C C. A comprehensive survey of graph embedding: problems, techniques, and applications[J]. IEEE transactions on knowledge and data engineering, 2018, 30(9): 1616-1637. DOI:10.1109/TKDE.2018.2807452 ( 0) 0)

|

| [14] |

WANG Quan, MAO Zhendong, WANG Bin, et al. Knowledge graph embedding: a survey of approaches and applications[J]. IEEE transactions on knowledge and data engineering, 2017, 29(12): 2724-2743. DOI:10.1109/TKDE.2017.2754499 ( 0) 0)

|

| [15] |

NICKEL M, MURPHY K, TRESP V, et al. A review of relational machine learning for knowledge graphs[J]. Proceedings of the IEEE, 2016, 104(1): 11-33. DOI:10.1109/JPROC.2015.2483592 ( 0) 0)

|

| [16] |

Bordes A, Usunier N, Garcia-Durán A, et al. Translating embeddings for modeling multi-relational data[C]//Proceedings of the 26th International Conference on Neural Information Processing Systems. Lake Tahoe, United States, 2013: 2787−2795.

( 0) 0)

|

| [17] |

YANG Bishan, YIH W T, HE Xiaodong, et al. Embedding entities and relations for learning and inference in knowledge bases[J/OL].(2020-01-01)[2020-05-01] https://arxiv.org/abs/1412.6575.

( 0) 0)

|

| [18] |

DETTMERS T, MINERVINI P, STENETORP P, et al. Convolutional 2D knowledge graph embeddings[C]//Proceedings of the 32nd AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18). New Orleans, USA, 2017: 1811−1818.

( 0) 0)

|

| [19] |

LAO Ni, COHEN W W. Relational retrieval using a combination of path-constrained random walks[J]. Machine learning, 2010, 81(1): 53-67. DOI:10.1007/s10994-010-5205-8 ( 0) 0)

|

| [20] |

LAO Ni, MITCHELL T, COHEN W W. Random walk inference and learning in a large scale knowledge base[C]//Proceedings of 2011 Conference on Empirical Methods in Natural Language Processing. Edinburgh, United Kingdom, 2011: 529−539.

( 0) 0)

|

| [21] |

NEELAKANTAN A, ROTH B, MCCALLUM A. Compositional vector space models for knowledge base completion[C]//Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. Beijing, China, 2015: 156−166.

( 0) 0)

|

| [22] |

DAS R, NEELAKANTAN A, BELANGER D, et al. Chains of reasoning over entities, relations, and text using recurrent neural networks[C]//Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics. Valencia, Spain, 2017: 132−141.

( 0) 0)

|

| [23] |

HOCHREITER S, SCHMIDHUBER J. Long short-term memory[J]. Neural computation, 1997, 9(8): 1735-1780. DOI:10.1162/neco.1997.9.8.1735 ( 0) 0)

|

| [24] |

XU K, BA J L, KIROS R, et al. Show, attend and tell: neural image caption generation with visual attention[C]//Proceedings of the 32nd International Conference on Machine Learning. Lille, France, 2015: 2048−2057.

( 0) 0)

|

| [25] |

XIONG Wenhan, HOANG T, WANG W Y. Deeppath: a reinforcement learning method for knowledge graph reasoning[C]//Proceedings of 2017 Conference on Empirical Methods in Natural Language Processing. Copenhagen, Denmark, 2017: 564−573.

( 0) 0)

|

| [26] |

ZHOU Peng, SHI Wei, TIAN Jun, et al. Attention-based bidirectional long short-term memory networks for relation classification[C]//Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin, Germany, 2016: 207−212.

( 0) 0)

|

| [27] |

JIANG Xiaotian, WANG Quan, QI Baoyuan, et al. Attentive path combination for knowledge graph completion[C]//Proceedings of the 9th Asian Conference on Machine Learning. Seoul, Korea, 2017: 590−605.

( 0) 0)

|

| [28] |

SHEN Yelong, CHEN Jianshu, HUANG Posen, et al. M-walk: learning to walk over graphs using monte Carlo tree search[C]//Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada, 2018: 6787−6798.

( 0) 0)

|

| [29] |

WANG Zhen, ZHANG Jianwen, FENG Jianlin, et al. Knowledge graph embedding by translating on hyperplanes[C]//Proceedings of the 28th AAAI Conference on Artificial Intelligence. Montréal, Canada, 2014: 1112−1119.

( 0) 0)

|

| [30] |

LIN Yankai, LIU Zhiyuan, SUN Maosong, et al. Learning entity and relation embeddings for knowledge graph completion[C]// Proceedings of the 29th AAAI Conference on Artificial Intelligence. Austin, USA, 2015: 2181−2187.

( 0) 0)

|

| [31] |

KIM Y. Convolutional neural networks for sentence classification[C]//Proceedings of 2014 Conference on Empirical Methods in Natural Language Processing. Doha, Qatar, 2014: 1746−1751.

( 0) 0)

|

| [32] |

TROUILLON T, WELBL J, RIEDEL S, et al. Complex embeddings for simple link prediction[C]//Proceedings of the 33rd International Conference on Machine Learning. New York, USA, 2016: 2071−2080.

( 0) 0)

|

| [33] |

BALAŽEVIĆ I, ALLEN C, HOSPEDALES T M. Hypernetwork knowledge graph embeddings[C]//Proceedings of the 28th International Conference on Artificial Neural Networks and Machine Learning. Munich, Germany, 2019: 553−565.

( 0) 0)

|

| [34] |

NATHANI D, CHAUHAN J, SHARMA C, et al. Learning attention-based embeddings for relation prediction in knowledge graphs[C]//Proceedings of the 57th Conference of the Association for Computational Linguistics. Florence, Italy, 2019: 4710−4723.

( 0) 0)

|

| [35] |

TAKAHASHI R, TIAN R, INUI K. Interpretable and compositional relation learning by joint training with an autoencoder[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics. Melbourne, Australia, 2018: 2148−2159.

( 0) 0)

|

| [36] |

ZHANG Yao, ZHANG Xu, WANG Jun, et al. GMH: a general multi-hop reasoning model for KG completion[J]. (2020-01-01)[2020-05-01] https://arxiv.org/abs/2010.07620.

( 0) 0)

|

| [37] |

XIONG Wenhan, YU Mo, CHANG Shiyu, et al. One-shot relational learning for knowledge graphs[C]//Proceedings of 2018 Conference on Empirical Methods in Natural Language Processing. Brussels, Belgium, 2018: 1980−1990.

( 0) 0)

|

| [38] |

NGUYEN D Q, SIRTS K, QU Lizhen, et al. Neighborhood mixture model for knowledge base completion[C]//Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning. Berlin, Germany, 2016: 40−50.

( 0) 0)

|

| [39] |

TOUTANOVA K, LIN X V, YIH W T, et al. Compositional learning of embeddings for relation paths in knowledge base and text[C]//Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin, Germany, 2016: 1434−1444.

( 0) 0)

|

| [40] |

DAS R, DHULIAWALA S, ZAHEER M, et al. Go for a walk and arrive at the answer: reasoning over paths in knowledge bases using reinforcement learning[C]//Proceedings of the 6th International Conference on Learning Representations. Vancouver, Canada, 2017.

( 0) 0)

|

| [41] |

LIN X V, SOCHER R, XIONG Caiming. Multi-hop knowledge graph reasoning with reward shaping[C]//Proceedings of 2018 Conference on Empirical Methods in Natural Language Processing. Brussels, Belgium, 2018: 3243−3253.

( 0) 0)

|

| [42] |

LIN Yankai, LIU Zhiyuan, LUAN Huabo, et al. Modeling relation paths for representation learning of knowledge bases[C]//Proceedings of 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal, 2015: 705−714.

( 0) 0)

|

| [43] |

LUO Yuanfei, WANG Quan, WANG Bin, et al. Context-dependent knowledge graph embedding[C]//Proceedings of 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal, 2015: 1656−1661.

( 0) 0)

|

| [44] |

SHANG Chao, TANG Yun, HUANG Jing, et al. End-to-end structure-aware convolutional networks for knowledge base completion[C]//Proceedings of the 33rd AAAI Conference on Artificial Intelligence, AAAI 2019, the 31st Innovative Applications of Artificial Intelligence Conference, IAAI 2019, the 9th AAAI Symposium on Educational Advances in Artificial Intelligence. Honolulu, United States, 2019: 3060−3067.

( 0) 0)

|

| [45] |

TUAN Yilin, CHEN Y N, LEE H Y. DyKgChat: Benchmarking dialogue generation grounding on dynamic knowledge graphs[C]//Proceedings of 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing. Hong Kong, China, 2019: 1855−1865.

( 0) 0)

|

| [46] |

GREFF K, SRIVASTAVA R K, KOUTNÍK J, et al. LSTM: A search space odyssey[J]. IEEE transactions on neural networks and learning systems, 2017, 28(10): 2222-2232. DOI:10.1109/TNNLS.2016.2582924 ( 0) 0)

|

| [47] |

XIE Qizhe, MA Xuezhe, DAI Zihang, et al. An interpretable knowledge transfer model for knowledge base completion[C]//Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics. Vancouver, Canada, 2017: 950−962.

( 0) 0)

|

| [48] |

BAHDANAU D, CHO K, BENGIO Y. Neural machine translation by jointly learning to align and translate[C]//Proceedings of the 3rd International Conference on Learning Representations. San Diego, USA, 2014.

( 0) 0)

|

| [49] |

VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[C]//Advances in Neural Information Processing Systems 30. Long Beach, USA, 2017: 5998−6008.

( 0) 0)

|

| [50] |

WANG Xiang, WANG Dingxian, XU Canran, et al. Explainable reasoning over knowledge graphs for recommendation[C]//Proceedings of the 33rd AAAI Conference on Artificial Intelligence, AAAI 2019, the 31st Innovative Applications of Artificial Intelligence Conference, IAAI 2019, the 9th AAAI Symposium on Educational Advances in Artificial Intelligence. New York, United States, 2019: 5329−5336.

( 0) 0)

|

| [51] |

GRAVES A, MOHAMED A R, HINTON G. Speech recognition with deep recurrent neural networks[C]//Proceedings of 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. Vancouver, Canada, 2013: 6645−6649.

( 0) 0)

|

| [52] |

CHIU J P C, NICHOLS E. Named entity recognition with bidirectional LSTM-CNNs[J]. Transactions of the association for computational linguistics, 2016, 4: 357-370. DOI:10.1162/tacl_a_00104 ( 0) 0)

|

| [53] |

LU R, DUAN Z. Bidirectional GRU for sound event detection[C]//Detection and Classification of Acoustic Scenes and Events. [S. l.]. 2017: 17−20.

( 0) 0)

|

| [54] |

CHUNG J, GULCEHRE C, CHO K H, et al. Empirical evaluation of gated recurrent neural networks on sequence modeling[J]. (2020-01-01)[2020-05-01] https://arxiv.org/abs/1412.3555.

( 0) 0)

|

| [55] |

KINGMA D P, BA J. Adam: a method for stochastic optimization[C]//Proceedings of the 3rd International Conference on Learning Representations. San Diego, USA, 2014: 604−612

( 0) 0)

|

| [56] |

JIANG Tianwen, ZHAO Tong, QIN Bing, et al. The role of "Condition": a novel scientific knowledge graph representation and construction model[C]//Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. Anchorage, United States, 2019: 1634−1642.

( 0) 0)

|

2021, Vol. 16

2021, Vol. 16