降维[1]是数据分类中的一个重要问题,其目的是学习一个变换矩阵,将高维数据投影到低维子空间中,使数据在低维子空间中得到有效地分类。经典的降维方法包括主成分分析(principal component analysis, PCA)[2-3]和线性判别分析(linear discriminant analysis, LDA)[4-5],其中PCA是一种将数据信息投影到正交线性空间的无监督方法,原始PCA在对数据降维时,以平方欧氏距离来表示损失,而欧氏距离对噪声及异常值敏感。于是提出许多PCA变体来降低异常值的影响,例如 Nie等[6-7]提出非贪婪

以上方法的每一个样本对应一个损失值,损失值的大小决定了样本对模型的贡献,这就表明了样本之间存在差异性,而这些方法均同等地对待所有训练样本,没有考虑样本之间的差异性。受人类/动物学习过程的启发,文献[10-11]提出了自定步长(或课程) (self-pace learning, SPL)学习理论,其主要思想是以自定步长方式来实现从“简单”到“复杂”样本的训练,最终学习得到一个成熟的模型。此后,Lu等[12]提出自步课程学习(self-paced curriculum learning, SPCL)同时考虑训练前已知的先验知识和训练过程中的学习进度。Yu等[13]提出了自步学习K均值聚类(self-paced learning for k-means clustering algorithm, SPKC),将自步学习机制用于聚类方法。Hu等[14]提出自步核联合稀疏表示高光谱图像分类(kernel joint sparse representation based on self-paced learning for hyperspectral image classification, SPKJSR),将自步学习机制用于高光谱图像分类。

本文为充分考虑样本之间的差异性,利用SPL思想提出自步稀疏最优均值主成分分析(sparse optimal mean principal component analysis based on self-paced learning, SPL-OMSPCA),该模型放宽了对投影矩阵的正交约束,通过对训练样本由“简单”到“复杂”的渐进学习过程,得到最优投影矩阵和最优样本均值。该方法能更好地获得样本中重要的特征信息,避免异常值的影响,提高了算法的鲁棒性。在6个不同数据集上的实验表明,SPL-OMSPCA算法优于目前最先进的主成分分析方法。

1 相关工作给定训练样本为

假设训练样本

| $ \mathop {{\rm{min}}}\limits_{{W}} ||{{X}} - {{W}}{{{W}}^{\rm{T}}}{{X}}||_F^2,{\rm{s}}.{\rm{t}}.,{{{W}}^{\rm{T}}}{{W}} = {{I}} $ | (1) |

式中

基于

| $ \mathop {\min }\limits_{{{b}},{{{W}}^{\rm{T}}}{{W}}} ||{{X}} - {{b}}{{\bf{1}}^{\rm{T}}} - {{W}}{{{W}}^{\rm{T}}}\left( {{{X}} - {{b}}{{\bf{1}}^{\rm{T}}}} \right)||_F^2 $ |

式中:

若式(1)中的投影矩阵与恢复矩阵不相同,并以

| $ \mathop {\min }\limits_{{{Q}},{{P}}} ||{{X}} - {{P}}{{{Q}}^{\rm{T}}}{{X}}|{|_{2,1}} + \lambda ||{{Q}}|{|_{2,1}} $ |

式中:

受人类/动物学习过程的启发,SPL以自定步长的方式实现从“简单样本”到“复杂样本”迭代地训练模型。其优化模型为

| $\begin{split} & \min \displaystyle\sum\limits_{i = 1}^n {{v_i}} L({{{y}}_i},g({{{x}}_i},{{w}})) + \alpha \phi \left( {{w}} \right) + \displaystyle\sum\limits_{i = 1}^n {f({{{v}}_i},k)} \\ & {\rm{s}}{\rm{.t}}{\rm{.}}{v_i} \in [0,1],i = 1,2, \cdots ,n \end{split} $ |

式中:

SPL-OMSPCA的基本思想是:以

| $ \begin{aligned} \mathop {\min }\limits_{{{v}},{{b}},{{P}},{{Q}}} \displaystyle\sum\limits_{i = 1}^n {{{{v}}_i}} ||{{{x}}_i} - {{b}} - {{P}}{{{Q}}^{\rm{T}}}\left( {{{{x}}_i} - {{b}}} \right)|{|_2} + \alpha ||{{Q}}|{|_{2,1}} +\\ \displaystyle\sum\limits_{i = 1}^n {f({{\rm{v}}_i},k)} \quad\quad\quad\quad\quad\quad\quad\\ {\rm{s}}{\rm{.t}}{\rm{.}}{{{P}}^{\rm{T}}}{{P}} = {{I}},{{{v}}_i} \in [0,1],i = 1,2, \cdots ,n \quad\quad\quad\end{aligned} $ | (2) |

式中:

自步正则化函数

上述方法是硬性阈值权值选择方法,通过给每个样本分配二元权值

| $ \begin{aligned} \mathop {\min }\limits_{{{v}},{{b}},{{P}},{{Q}}} \displaystyle\sum\limits_{i = 1}^n {{{{v}}_i}} ||{{{x}}_i} - {{b}} - {{P}}{{{Q}}^{\rm{T}}}\left( {{{{x}}_i} - {{b}}} \right)|{|_2} + \alpha ||{{Q}}|{|_{2,1}} + \\ \displaystyle\sum\limits_{i = 1}^n {\frac{{{\beta ^2}}}{{{v_i} + \beta k}}}\quad\quad\quad\quad\quad\quad\quad\quad \\ {\rm{s}}{\rm{.t}}{\rm{.}}{{{P}}^{\rm{T}}}{{P}} = {{I}},{v_i} \in [0,1],i = 1,2, \cdots ,n\quad\quad\quad \end{aligned} $ | (3) |

式中

| $ \begin{aligned} \mathop {\min }\limits_{{{v}},{{b}},{{P}},{{Q}}} ||({{X}} - {{b1}^{\rm{T}}} - {{P}}{{{Q}}^{\rm{T}}}({{X}} - {{b1}^{\rm{T}}}))\sqrt {{V}} \sqrt {{D}} ||_F^2 + \\ \alpha ||\sqrt {{H}} {{Q}}||_F^2 + \displaystyle\sum\limits_{i = 1}^n {\frac{{{\beta ^2}}}{{{v_i} + \beta k}}}\quad\quad\quad\quad\quad \\ {\rm{s}}{\rm{.t}}{\rm{.}}{{{P}}^{\rm{T}}}{{P}} = {{I}},{v_i} \in [0,1],i = 1,2, \cdots ,n\quad\quad\quad \end{aligned} $ | (4) |

式中:

| $ {d_{ii}} = \left\{ {\begin{split} &{{1 / {||{{A}} - {{P}}{{{Q}}^{\rm{T}}}{{A}}{)^i}|{|_2}}}},\quad{||{{({{A}} - {{P}}{{{Q}}^{\rm{T}}}{{A}})}^i}|{|_2} \ne 0} \\ &{1 / \delta },\quad{\text{其他}}\end{split}} \right. $ | (5) |

式中:

| $ {h_{ii}} = \left\{ {\begin{split} &{{1 / {||{{{Q}}^j}|{|_2}}}},\quad{||{{{Q}}^j}|{|_2} \ne 0}\\ &{1 / \delta },\quad{\text{其他}} \end{split}} \right. $ | (6) |

式中:

因为式(4)含有

当

| $ \begin{split} \mathop {\min }\limits_{{{v}},{{b}},{{P}},{{Q}}} \displaystyle\sum\limits_{i = 1}^n {{{{v}}_i}} ||{{{x}}_i} - {{b}} - {{P}}{{{Q}}^{\rm{T}}}\left( {{{{x}}_i} - {{b}}} \right)|{|_2} + \displaystyle\sum\limits_{i = 1}^n {\frac{{{\beta ^2}}}{{{v_i} + \beta k}}} \\ {\rm{s}}{\rm{.t}}{\rm{.}}{{{P}}^{\rm{T}}}{{P}} = {{I}},{v_i} \in [0,1],i = 1,2, \cdots ,n \quad\quad\end{split} $ |

令

| $ \begin{aligned} & \mathop {\min }\limits_{{v}} \displaystyle\sum\limits_{i = 1}^n {{v_i}} {L_i} + \displaystyle\sum\limits_{i = 1}^n {\frac{{{\beta ^2}}}{{{v_i} + \beta k}}} \\ & {\rm{s}}{\rm{.t}}{\rm{.}}{{{v}}_i} \in [0,1],i = 1,2,\cdots,n \end{aligned} $ | (7) |

式(7)的目标函数关于

| $ {{{v}}_i} = \left\{ {\begin{split} &{\rm{1}},\quad{{L_i} \leqslant 1/{{\left( {k + 1/\beta } \right)}^2}}\\ &{\rm{0}},\quad{{L_i} \geqslant 1/{k^2}}\\ &{\beta \left( {\frac{{\rm{1}}}{\sqrt {{L_i}} }- k} \right)},\quad{\text{其他}} \end{split}}\right. $ | (8) |

当固定

| $ \mathop {\min }\limits_{{b}} \left\| {({{X}} - {{b1}^{\rm{T}}} - {{P}}{{{Q}}^{\rm{T}}}({{X}} - {{b1}^{\rm{T}}}))\sqrt {{V}} \sqrt {{D}} } \right\|_F^2 $ | (9) |

式(9)的目标函数关于

| $ ({{I}} - {{P}}{{{Q}}^{\rm{T}}} - {{Q}}{{{P}}^{\rm{T}}} + {{Q}}{{{Q}}^{\rm{T}}})({{b1}^{\rm{T}}} - {{X}}){{VD1}} = 0 $ | (10) |

令

| $ ({{I}} - {{P}}{{{Q}}^{\rm{T}}} - {{Q}}{{{P}}^{\rm{T}}} + {{Q}}{{{Q}}^{\rm{T}}}){{c}} = 0 $ | (11) |

若

| $ {{b}} = \frac{{{{XVD1}}}}{{{{\bf{1}}^{\rm{T}}}{{VD1}}}} $ | (12) |

若

| $ {{c}} = {{{U}}_{\rm{1}}}{{z}} $ |

其中

| $ {{b}} = \frac{{{{({{{U}}_{\rm{1}}})}_{{{s}} + {\rm{1}}}} + {{XVD1}}}}{{{{\bf{1}}^{\rm{T}}}{{VD1}}}} $ | (13) |

固定

| $ \begin{aligned} \mathop {\min }\limits_{{Q}} \left\| {({{X}} - {{b1}^{\rm{T}}} - {{P}}{{{Q}}^{\rm{T}}}({{X}} - {{b1}^{\rm{T}}}))\sqrt {{V}} \sqrt {{D}} } \right\|_F^2 + \\ \alpha ||\sqrt {{H}} {{Q}}||_F^2\quad \quad \quad \quad \quad \quad \quad \end{aligned} $ | (14) |

令

| $ \mathop {\min }\limits_{{Q}} ||{{G}} - {{P}}{{{Q}}^{\rm{T}}}{{G}}||_F^2 + \alpha ||\sqrt {{H}} {{Q}}||_F^2 $ | (15) |

式(15)的目标函数关于

| $ \left( {{{G}}{{{G}}^{\rm{T}}} + \alpha {{H}}} \right){{Q}} = {{G}}{{{G}}^{\rm{T}}}{{P}} $ |

从而有

| $ {{Q}} = {\left( {{{G}}{{{G}}^{\rm{T}}} + \alpha {{H}}} \right)^{ - 1}}{{G}}{{{G}}^{\rm{T}}}{{P}} $ | (16) |

固定

| $ \begin{split} \mathop {\min }\limits_{{P}} \left\|\left( {\left( {{{X}} - {{b1}^{\rm{T}}}} \right) - {{P}}{{{Q}}^{\rm{T}}}\left( {{{X}} - {{b1}^{\rm{T}}}} \right)} \right)\sqrt {{V}} \sqrt {{D}} \right\|_F^2 \\ {\rm{s.t.}}{{{P}}^{\rm{T}}}{{P}} = {{I}}\quad\quad\quad\quad\quad\quad\quad \end{split} $ |

或等价形式为

| $ \begin{aligned} \mathop {\min }\limits_{{P}} \left\| \sqrt {{D}} \sqrt {{V}} {({{X}} - {{b1}^{\rm{T}}})^{\rm{T}}} - \sqrt {{D}} \sqrt {{V}} {({{X}} - {{b1}^{\rm{T}}})^{\rm{T}}}{{Q}}{{{P}}^{\rm{T}}}\right\|_F^2\\ {\rm{s.t.}}{{{P}}^{\rm{T}}}{{P}} = {{I}} \quad\quad\quad\quad\quad\quad\quad\quad\quad \end{aligned} $ | (17) |

约束条件

| $ {{P}} = {{{E}}_2}{{{U}}_2^{\rm{T}}} $ | (18) |

以上过程不断迭代,直到收敛条件满足为止,具体算法如算法1。

算法1 SPL-OMSPCA

输入 样本

输出 投影矩阵

迭代执行以下操作,直到模型收敛:

1)通过式(8)计算

2)通过式(12)、(13)计算

3)通过式(16)计算

4)通过式(18)计算

5)通过式(5)计算

6)通过式(6)计算

7) 更新

得到投影矩阵

| $ c = {{\rm{argmin}} _{{c_i}}}{\left(\displaystyle\sum\limits_{j = 1}^m {{{(X_i^j - {y_j})}^2}} \right)^{1/2}} $ | (19) |

式中:

在算法1中,主要的计算复杂度为奇异值分解和矩阵求逆,故本算法时间复杂度最高为

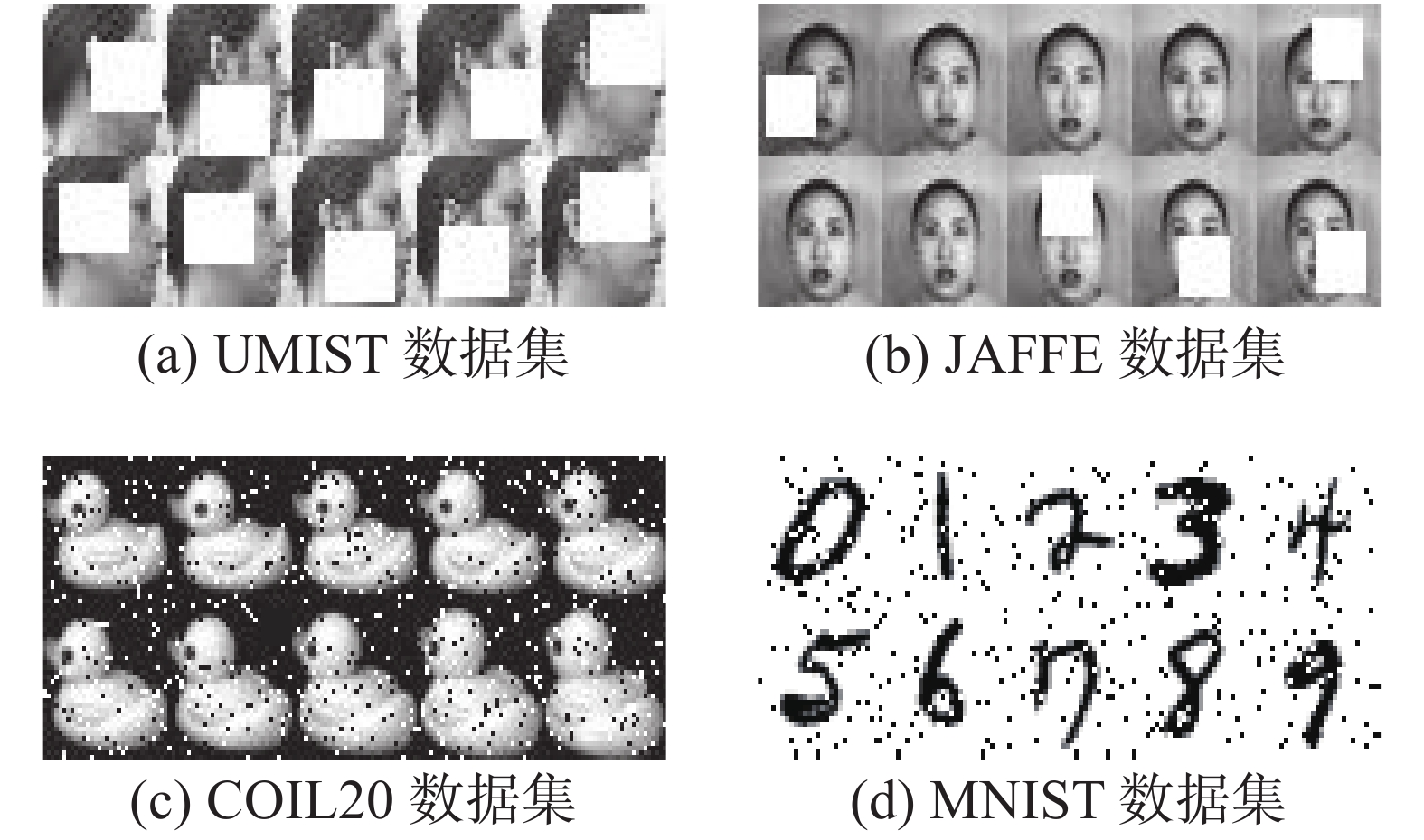

为评估SPL-OMSPCA算法的有效性,本文将在UMIST[16]、JAFFE[17]、AR[18]、BIO[19]、COIL20[20]及MNIST[21]数据集上进行测试,每次实验独立随机,重复20次取平均识别率和标准差作为最后实验结果,并与PCA、SPCA、OMRPCA和JSPCA算法作对比。

3.1 数据集为测试SPL-OMSPCA对异常值的鲁棒性,将UMIST数据集的每个图像随机添加

| 表 1 不同数据集的属性及特点 Tab.1 Attributes and characteristics of different datasets |

|

Download:

|

| 图 1 部分含噪数据集的可视化 Fig. 1 Visualization of some datasets with noise | |

在各数据集的每类中随机选取

|

Download:

|

| 图 2 不同算法在6个数据集上不同子空间维度的分类精度 Fig. 2 Classification accuracies of different algorithm in different subspace dimensions on six datasets | |

| 表 2 在6个不同噪声数据集上各种PCA算法的分类精度及其标准差 Tab.2 Average classification accuracy and standard deviation of various PCA algorithm on six data sets with different noise training samples |

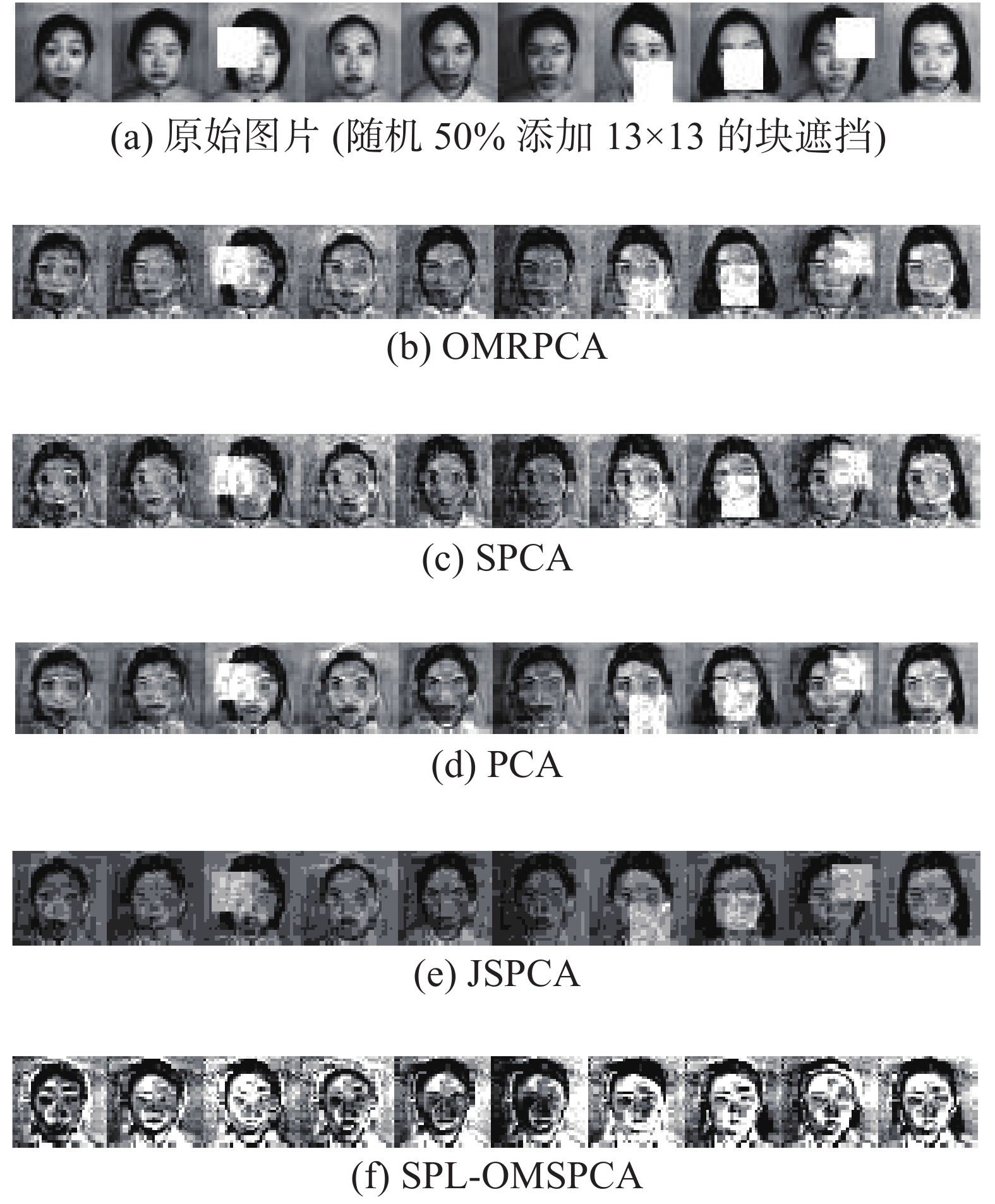

为进一步证明实验的合理性,选取JAFFE和COIL20数据集,L分别取10和9,子空间维度取10。SPL-OMSPCA模型中,每一次迭代中更新均值,图3展示了2个数据集从1024个特征中随机抽取的10个特征的均值变化,由图3可看出均值随迭代次数增加逐渐趋于平稳。模型考虑样本自身差异性,引入自步学习机制,利用损失值判断样本的难易程度,赋予样本软权重

综上所述,SPL-OMSPCA模型对噪声样本具有更高的鲁棒性,且能取得比其他算法更高的识别率。

|

Download:

|

| 图 3 均值变化图 Fig. 3 Graph of the mean value | |

|

Download:

|

| 图 4 不同算法对部分JAFFE数据集的重构图像 Fig. 4 Reconstructed images of partial JAFFE datasets by different algorithms | |

| 表 3 自步软权重

|

|

Download:

|

| 图 5 目标函数收敛图 Fig. 5 Convergence graph of objective value | |

由式(3)提出的问题可知,参数

| $ {L_m} = 1/{\left( {k + 1/\beta } \right)^2} $ | (20) |

为简化计算,令

| $ k=1/{2}\sqrt{L_{m}},\quad \beta ={2}\sqrt{{{L}}_{m}} $ | (21) |

对步长参数

|

Download:

|

| 图 6 不同参数上的分类精度 Fig. 6 Classification accuracy on different parameters | |

本文提出了一种新的无监督降维算法——自步稀疏最优均值主成分分析(SPL-OMSPCA)算法。基于对样本自身差异性的考虑,引入自步学习的思想,实现样本从“简单”到“复杂”的训练,且在迭代过程中,自动更新均值,而后通过对投影矩阵添加

| [1] |

OU Weihua, YOU Xinge, TAO Dacheng, et al. Robust face recognition via occlusion dictionary learning[J]. Pattern recognition, 2014, 47(4): 1559-1572. DOI:10.1016/j.patcog.2013.10.017 ( 0) 0)

|

| [2] |

WEI Lai, ZHOU Rigui, YIN Jun, et al. Latent graph-regularized inductive robust principal component analysis[J]. Knowledge-based systems, 2019, 177: 68-81. DOI:10.1016/j.knosys.2019.04.007 ( 0) 0)

|

| [3] |

HE Jinrong, BI Yingzhou, LIU Bin, et al. Graph-dual Laplacian principal component analysis[J]. Journal of ambient intelligence and humanized computing, 2019, 10(8): 3249-3262. DOI:10.1007/s12652-018-1096-5 ( 0) 0)

|

| [4] |

ZHAO Haifeng, WANG Zheng, NIE Feiping. A new formulation of linear discriminant analysis for robust dimensionality reduction[J]. IEEE transactions on knowledge and data engineering, 2018, 31(4): 629-640. DOI:10.1109/TKDE.2018.2842023 ( 0) 0)

|

| [5] |

朱换荣, 郑智超, 孙怀江. 面向局部线性回归分类器的判别分析方法[J]. 智能系统学报, 2019, 14(5): 959-965. ZHU Huanrong, ZHENG Zhichao, SUN Huaijiang. Locality-regularized linear regression classification-based discriminant analysis[J]. CAAI transactions on intelligent systems, 2019, 14(5): 959-965. (  0) 0)

|

| [6] |

NIE Feiping, HUANG Heng, DING C, et al. Robust principal component analysis with non-greedy ℓ1-norm maximization[C]//Proceedings of the 22nd International Joint Conference on Artificial Intelligence. Barcelona, Catalonia, Spain, 2011: 1433−1438.

( 0) 0)

|

| [7] |

NIE Feiping, YUAN Jianjun, HUANG Heng. Optimal mean robust principal component analysis[C]//Proceedings of the 31st International Conference on Machine Learning. Beijing, China, 2014: 1062−1070.

( 0) 0)

|

| [8] |

ZOU Hui, HASTIE T, TIBSHIRANI R. Sparse principal component analysis[J]. Journal of computational and graphical statistics, 2006, 15(2): 265-286. DOI:10.1198/106186006X113430 ( 0) 0)

|

| [9] |

YI Shuangyan, LAI Zhihui, HE Zhenyu, et al. Joint sparse principal component analysis[J]. Pattern recognition, 2017, 61: 524-536. DOI:10.1016/j.patcog.2016.08.025 ( 0) 0)

|

| [10] |

BENGIO Y, LOURADOUR J, COLLOBERT R, et al. Curriculum learning[C]//Proceedings of the 26th Annual International Conference on Machine Learning. Montreal, Quebec, Canada, 2009: 41−48.

( 0) 0)

|

| [11] |

KUMAR M P, PACKER B, KOLLER D. Self-paced learning for latent variable models[C]//Proceedings of the 23rd International Conference on Neural Information Processing Systems. Vancouver, British Columbia, Canada, 2010: 1189−1197.

( 0) 0)

|

| [12] |

JIANG Lu, MENG Deyu, ZHAO Qian, et al. Self-paced curriculum learning[C]//Proceedings of the 29th AAAI Conference on Artificial Intelligence. Austin, Texas, USA, 2015: 2694−2700.

( 0) 0)

|

| [13] |

YU Hao, WEN Guoqiu, GAN Jiangzhang, et al. Self-paced learning for K-means clustering algorithm

[J]. Pattern recognition letters, 2020, 132: 69-75. DOI:10.1016/j.patrec.2018.08.028 ( 0) 0)

|

| [14] |

HU Sixiu, PENG Jiangtao, FU Yingxiong, et al. Kernel joint sparse representation based on self-paced learning for hyperspectral image classification[J]. Remote sensing, 2019, 11(9): 1114-1132. DOI:10.3390/rs11091114 ( 0) 0)

|

| [15] |

HAJINEZHAD D, SHI Qingjiang. Alternating direction method of multipliers for a class of nonconvex bilinear optimization: convergence analysis and applications[J]. Journal of global optimization, 2018, 70(1): 261-288. DOI:10.1007/s10898-017-0594-x ( 0) 0)

|

| [16] |

HOU Chenping, NIE Feiping, YI Dongyun, et al. Feature selection via joint embedding learning and sparse regression[C]//Proceedings of the 22nd International Joint Conference on Artificial Intelligence. Barcelona, Spain, 2011: 1324−1329.

( 0) 0)

|

| [17] |

LYONS M J, BUDYNEK J, AKAMATSU S. Automatic classification of single facial images[J]. IEEE transactions on pattern analysis and machine intelligence, 1999, 21(12): 1357-1362. DOI:10.1109/34.817413 ( 0) 0)

|

| [18] |

WRIGHT J, YANG A Y, GANESH A, et al. Robust face recognition via sparse representation.[J]. IEEE transactions on pattern analysis and machine intelligence, 2009, 31(2):210-227.

( 0) 0)

|

| [19] |

JESORSKY O, KIRCHBERG K J, FRISCHHOLZ R W. Robust face detection using the hausdorff distance[C]//Proceedings of the 3rd International Conference on Audio- and Video-Based Biometric Person Authentication. Halmstad, Sweden, 2001: 90−95.

( 0) 0)

|

| [20] |

ZHENG Miao, BU Jiajun, CHEN C, et al. Graph regularized sparse coding for image representation[J]. IEEE transactions on image processing, 2011, 20(5): 1327-1336. DOI:10.1109/TIP.2010.2090535 ( 0) 0)

|

| [21] |

KUSSUL E, BAIDYK T. Improved method of handwritten digit recognition tested on MNIST database[J]. Image and vision computing, 2004, 22(12): 971-981. DOI:10.1016/j.imavis.2004.03.008 ( 0) 0)

|

2021, Vol. 16

2021, Vol. 16