b Key Laboratory of East China Sea Fishery Resources Exploitation, Ministry of Agriculture and Rural Affairs, East China Sea Fisheries Research Institute, Chinese Academy of Fishery Sciences, Shanghai 200090, China;

c Department of Laboratory Medicine, Renji Hospital, School of Medicine, Shanghai Jiao Tong University, Shanghai 200137, China

Digital microfluidics (DMF), a branch of lab-on-a-chip technology, is becoming increasingly popular owing to its unique capability to control discrete droplets with high flexibility [1-3]. It can automatically dispense, move, merge and split pL- to mL-sized droplets [4, 5] on the surface of an electrode array, and offers the advantages of miniaturization, programmability, parallelization and low power consumption [6-8]. By integrating DMF with analytical techniques, it can be used for a wide range of applications such as point-of-care testing [9-11], programmable chemical synthesis [12] and single-cell analysis [13-16]. In addition, because DMF devices do not require pumps, valves or syringes for fluid control, DMF is also an ideal tool for the portable and automatic pre-treatment of samples for single-molecule techniques in aqueous solution, such as nanopores [17, 18] and optical/magnetic tweezers [19]. Generally, a DMF control system is interfaced with the DMF chip and manipulates the droplets by regulating the voltage applied to the electrodes. In practice, however, reconfiguring the applied voltage in a multi-step process does not always translate into droplet movement, owing to the charge inverting effect [20] or unpredictable surface heterogeneity [21]. Hence, it is important to develop efficient approaches for monitoring the state and position of droplets in DMF systems; such monitoring not only increases the robustness of droplet control but also provides an additional way to obtain dynamic information on the droplets [22-24].

Capacitance measurement at each electrode, using an impedance measuring system, has been developed to detect the presence of droplets and obtain their locations [25-28]. Image processing is an alternative method for monitoring droplets and realizing feedback control. Shin et al. introduced machine vision for localizing droplets by detecting edges and fitting circles in HSI colour space [29]. Similarly, Vo et al. developed a background subtraction method for droplet recognition based on edge detection and Hough circle detection (HCD) [30]. Willsey et al. proposed a method based on a colour filter for searching for droplets in the acquired image [31].

Although image processing methods can obtain more detailed information on the droplets than capacitance measurement, such as size, shape and colour, their performance may be unsatisfactory because of interference in the image, such as background patterns or inhomogeneous illumination [30]. To the best of our knowledge, the existing image processing methods for DMF are all based on object detection that searches for droplets in the global image space, such as colour filtering, Hough circle detection and connected-component detection. Furthermore, their performance depends strongly on the parameter configuration chosen for different conditions. Therefore, methods that provide robust, accurate and fast recognition of droplets in digital microfluidics are still needed.

Object tracking algorithms are widely used for target localization and recognition because of their high performance and efficiency. Among them, correlation filter trackers (CFTs) have been widely studied in recent years [32-35]. CFTs exploit the circulant structure of cyclically shifted patches to augment the training samples and simplify computation, which improves both the speed and the accuracy of tracking. In this study, we developed an intelligent droplet tracking method for the DMF system based on a correlation filter model that is updated by online learning of the target features in each frame of the video stream. The proposed method can recognize both coloured and colourless droplets moving on the DMF chip with high accuracy and measure their velocity in real time. We also demonstrated the capability of the method for online monitoring of droplet colour changes in a colorimetric assay and for the study of reaction kinetics. Furthermore, the source code of the method and the dataset are publicly available [36]. We believe it will be a useful tool for enhancing the reliability of DMF systems and promoting applications that require automatic control of droplets.

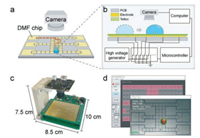

A custom-designed DMF system (Fig. 1), consisting of the DMF chip, a miniature digital camera (USB4KHDR01, f = 3.24 mm, Rervision Technology, China), a control board and a computer [37], was used to manipulate the droplets. The digital camera is mounted vertically above the DMF chip. As a compromise between the accuracy and the efficiency of droplet tracking, images with a resolution of 800 × 600 pixels are captured by the camera and transferred to the computer for further processing. The DMF chips were custom-designed and fabricated using standard printed circuit board techniques (FASTPCB Technology, China). The structure of our single-plate DMF chip is depicted in the detailed cross-sectional schematic in Fig. 1b. The electrodes on the chip were fabricated by gold electrodeposition. The electrode array comprised 51 actuator electrodes (2.25 mm × 2.25 mm) and 6 reservoir electrodes (9.72 mm × 6.95 mm). To form a robust and ultrathin hydrophobic dielectric layer on the electrodes, a PTFE film (10 µm, Hongfu Material, China) was carefully and tightly attached to the DMF chip.


Fig. 1. Setup of the DMF platform with the droplet tracking system. (a) Online monitoring of the droplet on the DMF chip with a camera. (b) Setup of the DMF platform integrated with a droplet tracking system. (c) Photograph of the DMF platform, with a size of 10.0 cm × 8.5 cm × 7.5 cm. (d) User interface of the DMF software with online droplet tracking.

As previously reported, the control board, which consists of a high-voltage generator, a microcontroller and a high-voltage multiplexing chip (HV507, Microchip Technology Inc., USA), was used to actuate the droplets. Droplet actuation was achieved by applying a DC voltage (300 V) to the electrodes selected through the multiplexing chip.

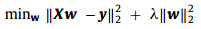

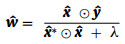

The goal of the CFT is to learn a correlation filter by ridge regression, which can then be used to determine the position of the droplet in the search window (the testing patch). The position of the droplet corresponds to the maximum response of the correlation filter. The ridge regression problem can be expressed as (Eq. 1):

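$$\min_{\mathbf{w}} \left\| X\mathbf{w} - \mathbf{y} \right\|^2 + \lambda \left\| \mathbf{w} \right\|^2 \tag{1}$$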

where the vector w is the learned correlation filter, X is the data matrix, y is the regression target and λ is a regularization parameter that controls overfitting. Solving this problem gives the closed-form solution (Eq. 2):

$$\mathbf{w} = \left( X^{\mathsf{T}} X + \lambda I \right)^{-1} X^{\mathsf{T}} \mathbf{y} \tag{2}$$

where I is the identity matrix. To augment the samples, the data matrix X can be generated by cyclic shifts of the raw image or of the extracted features. Owing to the special properties of the circulant matrix X, the matrix multiplication and inversion in Eq. 2 can be computed efficiently in the Fourier domain (Eq. 3) [34]:

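$$\hat{\mathbf{w}} = \frac{\hat{\mathbf{x}}^{*} \odot \hat{\mathbf{y}}}{\hat{\mathbf{x}}^{*} \odot \hat{\mathbf{x}} + \lambda} \tag{3}$$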

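where x can be either the raw image patch or the extracted features of the search window, the symbol ˆ denotes the discrete Fourier transform of a vector, * denotes the complex conjugate, ⊙ denotes element-wise multiplication, and the division is also performed element-wise. The response map of a testing patch z is then computed in the Fourier domain (Eq. 4) [38]: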

$$f(\mathbf{z}) = \mathcal{F}^{-1}\left( \hat{\mathbf{w}} \odot \hat{\mathbf{z}} \right) \tag{4}$$

Subsequently, the filter w learned in the current frame is convolved with the testing patch z (search window) in the next frame to generate the response map according to Eq. 4, and the location of the maximum response is taken as the new target location [39].
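The equivalence between the explicit solution (Eq. 2) and its Fourier-domain form (Eq. 3), and the use of Eq. 4 for localization, can be checked numerically. The NumPy sketch below uses a 1D signal for brevity (one row of a grey patch stands in for the full patch); n, λ and the width of the Gaussian label are illustrative assumptions, and the data matrix is built so that its columns are cyclic shifts of x, the arrangement under which Eq. 3 takes exactly this form.

```python
import numpy as np

def closed_form_filter(x, y, lam):
    """Eq. 2: explicit ridge regression with a circulant data matrix."""
    n = x.size
    # Column j holds x cyclically shifted by j samples, i.e. X[i, j] = x[(i - j) % n].
    X = np.stack([np.roll(x, j) for j in range(n)], axis=1)
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)

def fourier_filter(x, y, lam):
    """Eq. 3: the same filter, returned in the Fourier domain (w_hat)."""
    x_hat, y_hat = np.fft.fft(x), np.fft.fft(y)
    return np.conj(x_hat) * y_hat / (np.conj(x_hat) * x_hat + lam)

def response_map(w_hat, z):
    """Eq. 4: response of the learned filter to a testing signal z."""
    return np.real(np.fft.ifft(w_hat * np.fft.fft(z)))

rng = np.random.default_rng(0)
n, lam = 64, 1e-2
x = rng.standard_normal(n)                              # training sample
dist = np.minimum(np.arange(n), n - np.arange(n))       # cyclic distance from index 0
y = np.exp(-0.5 * (dist / 2.0) ** 2)                    # Gaussian label peaked at index 0

w_hat = fourier_filter(x, y, lam)
print(np.allclose(np.fft.ifft(w_hat).real, closed_form_filter(x, y, lam)))  # True
z = np.roll(x, 5)                                       # target moved by 5 samples
print(int(np.argmax(response_map(w_hat, z))))           # 5
```

The FFT route replaces an O(n³) linear solve with O(n log n) transforms, which is what makes per-frame retraining affordable.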

The CFT-based droplet tracking method was integrated into our custom-designed software, DropletFactory, which controls our DMF platform. To identify droplets on the DMF chip, the method comprises three stages: (a) pre-processing, (b) initialization of the CFT and (c) tracking.

Pre-processing: In this step, the tilt and distortion of the raw image are corrected by perspective transformation, and the electrode regions are recognized by adaptive threshold segmentation and connected-component detection so that the droplet position can subsequently be located on the DMF chip. More details are given in part 1 of the Supporting information.
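A perspective correction of this kind maps four reference points in the raw image, for example the detected chip corners, onto the corners of an upright rectangle. The NumPy sketch below solves the standard eight-unknown linear system for the 3 × 3 homography (the same kind of system that OpenCV's `cv2.getPerspectiveTransform` solves); the corner coordinates are hypothetical, and the adaptive thresholding and connected-component steps are not shown.

```python
import numpy as np

def perspective_matrix(src, dst):
    """3x3 homography H (with H[2, 2] = 1) mapping four src points onto four dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def warp_points(H, pts):
    """Apply H to an (N, 2) array of pixel coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Hypothetical chip corners seen by a slightly tilted camera (x, y in pixels)
src = [(112, 58), (701, 75), (690, 540), (98, 522)]
# Upright target rectangle; its size fixes the pixel scale of the corrected image
dst = [(0, 0), (600, 0), (600, 460), (0, 460)]
H = perspective_matrix(src, dst)
corrected = warp_points(H, src)   # equals dst up to floating-point rounding
```

Choosing the target rectangle from the known chip dimensions gives every corrected frame a fixed physical pixel size.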

Initialization of CFT: The position of the droplet in the first frame is obtained by Hough circle detection, which provides a training patch for model initialization; the patch size is set to 3 times the detected droplet size. The procedure for detecting the initial position of the droplet is given in part 2 of the Supporting information.

Tracking: The trained CFT model is used to predict the position of the droplet in each frame. The workflow of tracking-based droplet localization is shown in Fig. 2. In the first frame, the initial position of the droplet is located by HCD, which provides an image patch for model training. Multiple visual features can then be extracted to better describe the input, and the correlation filter is trained for initialization. In frame t (t ≥ 2), the testing patch is cropped at the previous position, and new multi-features are extracted as the current input. The correlation between this input and the learned filter is then computed in the frequency domain, where FFT and IFFT denote the fast Fourier transform and its inverse, respectively. The output response map represents the spatial confidence, and its peak gives the estimated new location of the droplet. Finally, multi-features at the predicted position are extracted to train and update the model. The pseudocode of the droplet tracking method is given in part 3 of the Supporting information.
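The train-detect-update loop of Fig. 2 can be sketched for a single grey-scale channel as follows. This is a simplified, hypothetical illustration rather than the DropletFactory implementation: it uses only an assumed Gray feature (standardized intensity with a Hann window), and it updates the numerator and denominator of Eq. 3 by linear interpolation with a learning rate `lr`, a common CFT update rule that the text does not specify. The values of `lam`, `lr` and `sigma` are assumptions, and the CN feature and the Gray + CN combination are not shown.

```python
import numpy as np

def gaussian_label(shape, sigma=2.0):
    """Desired response y: a Gaussian peaked at zero shift (wrapped around the corners)."""
    h, w = shape
    dy = np.minimum(np.arange(h), h - np.arange(h))[:, None]
    dx = np.minimum(np.arange(w), w - np.arange(w))[None, :]
    return np.exp(-0.5 * (dx ** 2 + dy ** 2) / sigma ** 2)

def gray_feature(patch):
    """Assumed 'Gray' feature: standardized intensity multiplied by a 2D Hann window."""
    p = patch.astype(float)
    p = (p - p.mean()) / (p.std() + 1e-8)
    return p * np.outer(np.hanning(p.shape[0]), np.hanning(p.shape[1]))

class GrayCFTracker:
    def __init__(self, patch, lam=0.1, lr=0.02, sigma=2.0):
        self.lam, self.lr = lam, lr
        self.y_hat = np.fft.fft2(gaussian_label(patch.shape, sigma))
        x_hat = np.fft.fft2(gray_feature(patch))
        self.num = np.conj(x_hat) * self.y_hat   # numerator of Eq. 3
        self.den = np.conj(x_hat) * x_hat        # denominator of Eq. 3 without lambda

    def detect(self, patch):
        """Displacement (dy, dx) of the droplet in a testing patch cropped at the old position."""
        w_hat = self.num / (self.den + self.lam)                                    # Eq. 3
        resp = np.real(np.fft.ifft2(w_hat * np.fft.fft2(gray_feature(patch))))   # Eq. 4
        dy, dx = np.unravel_index(np.argmax(resp), resp.shape)
        h, w = resp.shape
        # Peaks past the middle of the map correspond to negative (wrapped) shifts.
        return (int(dy) - h if dy > h // 2 else int(dy),
                int(dx) - w if dx > w // 2 else int(dx))

    def update(self, patch):
        """Online learning: blend the sample at the predicted position into the model."""
        x_hat = np.fft.fft2(gray_feature(patch))
        self.num = (1 - self.lr) * self.num + self.lr * np.conj(x_hat) * self.y_hat
        self.den = (1 - self.lr) * self.den + self.lr * np.conj(x_hat) * x_hat

# Synthetic 64 x 64 patches: a smooth bright "droplet" that moves by (+3, -4) pixels
yy, xx = np.mgrid[0:64, 0:64]

def droplet(cy, cx):
    return 255 * np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * 4.0 ** 2))

tracker = GrayCFTracker(droplet(32, 32))
print(tracker.detect(droplet(35, 28)))   # estimated displacement; the true shift is (3, -4)
tracker.update(droplet(32, 32))          # patch re-cropped around the new centre
```

Because the filter is retrained from every confirmed position, no colour range or circle radius has to be tuned by hand, which is the property the comparison with object detection relies on.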


Fig. 2. Workflow of droplet positioning based on the correlation filter tracker.

To evaluate the performance of the droplet tracking method, we collected videos of droplets moving on the DMF chip at different speeds and with different colours to build a dataset called SingleDroplet (part 4 of Supporting information). Each video has 1000 frames with manually labelled bounding boxes of the droplet indicating the target position. The droplet speed was adjusted by using different control intervals (T) of the electrode array, ranging from 100 ms to 500 ms. Videos of coloured droplets (20 µL) and colourless droplets (20 µL) were recorded. Because each frame of the video is corrected for tilt and distortion, each pixel corresponds to 0.05 mm.

Since the features extracted from the testing patch can strongly affect the recognition results, we first examined the performance of the droplet tracking method with different features, including FHOG [34], ColorName (CN) [33], Gray [32] and RGB (raw data), which are commonly used in CFT models. The mean location error and the mean FPS (frames per second) were used to evaluate performance. The location error was defined as the Euclidean distance between the estimated centre and the ground truth in the dataset. A location-error threshold (half of the electrode width, 1.125 mm) was introduced to determine whether the target was tracked or lost: if the location error exceeded the threshold, the frame was regarded as a loss of target. Here, the colourless and coloured droplet samples in the dataset with a control interval of 100 ms were used for tracking.
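The two metrics defined above can be computed from per-frame centre estimates as in the following sketch. The pixel size (0.05 mm) and the loss threshold (1.125 mm) come from the text; averaging the error over all frames, including lost ones, is an assumption, since the text does not state whether lost frames are excluded from the mean.

```python
import numpy as np

PIXEL_MM = 0.05            # size of one pixel after tilt/distortion correction
LOSS_THRESHOLD_MM = 1.125  # half of the 2.25 mm electrode width

def evaluate_tracking(pred_px, truth_px, pixel_mm=PIXEL_MM, threshold_mm=LOSS_THRESHOLD_MM):
    """Return (mean location error in mm, target-loss rate) for (N, 2) centre arrays in pixels."""
    pred = np.asarray(pred_px, dtype=float)
    truth = np.asarray(truth_px, dtype=float)
    error_mm = np.linalg.norm(pred - truth, axis=1) * pixel_mm  # Euclidean centre distance
    lost = error_mm > threshold_mm                              # frames counted as loss of target
    return float(error_mm.mean()), float(lost.mean())

# Three hypothetical frames with errors of 5, 0 and 50 pixels (0.25, 0 and 2.5 mm)
mean_err, loss_rate = evaluate_tracking([[103, 104], [200, 50], [330, 440]],
                                        [[100, 100], [200, 50], [300, 400]])
print(round(mean_err, 4), round(loss_rate, 4))   # 0.9167 0.3333
```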

To demonstrate the capability of the proposed method in practical use, all tests were carried out on an ordinary computer (Windows 10, Intel Core CPU, i5-9300H, 2.4 GHz, 8 GB RAM). The results indicated that the proposed droplet tracking method performed well with all features except FHOG, with a very low rate of target loss (part 5 of Supporting information, Video S1 in Supporting information). Compared with the other features, the Gray feature has an advantage in processing speed, with a mean FPS of 250 Hz. Although the CN feature has a lower processing speed (135 Hz) owing to its high data dimension, it yields relatively low location errors for both colourless and coloured droplets. Owing to the flexible framework of the CFT, we also tested a multi-feature configuration combining the Gray and CN features. This multi-feature configuration improved the recognition accuracy for both coloured and colourless droplets at an acceptable processing speed (135 Hz), with a target-loss rate of only 0.1% (Table S1 in Supporting information); the losses occurred occasionally when the droplet turned a corner. In addition, in all cases of target loss, the droplet was recaptured in the next frame.

The influence of droplet speed on recognition performance was studied for the multi-feature configuration (Gray + CN) by analysing samples with control intervals ranging from 100 ms to 500 ms. The results are shown in Fig. S5 (Supporting information). No significant difference in the mean location error was observed as the droplet speed decreased. Meanwhile, the mean FPS was higher than 135 Hz in all tests, which is sufficient for online use. Therefore, the proposed multi-feature configuration is suitable for droplet tracking.

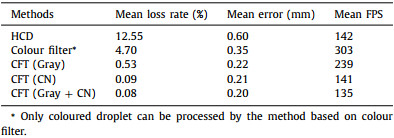

To compare performance, the proposed method with different features (Gray, CN, Gray + CN) and two conventional methods based on object detection (Hough circle detection [30] and colour filter [31]) were used to process the videos in the dataset. The proposed method with the Gray + CN feature achieved the lowest mean loss rate (0.08%) and the minimum error (0.20 mm) for both coloured and colourless droplets (Table 1). Although the colour-filter method has the fastest processing speed, it cannot recognize colourless droplets, and both its mean loss rate (4.70%) and its mean error (0.35 mm) are higher than those of the proposed method. The Hough circle detection method can be used for both coloured and colourless droplets, but it has a relatively high loss rate (12.55%). Notably, the results of both object detection algorithms depend strongly on the parameter configuration, such as the colour range and the radius range of the detected circles. The failures of droplet localization in the two conventional methods are mainly caused by image noise and light variations. In contrast, the proposed method does not require parameter tuning because of its self-training ability. The processing time per frame of the CFT-based method is within 8 ms, which is sufficient for real-time operation. Therefore, the CFT-based tracking method is promising for various DMF applications.


Table 1. Performance of the CFT-based method and the object detection methods (HCD and colour filter).

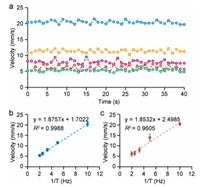

The droplet tracking method with the Gray + CN feature was used to monitor the velocity of both coloured and colourless droplets. The velocity was calculated from the droplet displacement measured by the proposed method every 25 frames. Owing to the discrete nature of digital microfluidics, which actuates droplets by activating electrodes in sequence, the lateral force applied to the droplets is not constant. Using the tracking method, the resulting fluctuation of droplet velocity was quantified (Fig. 3a). Good linearity was obtained between the mean velocity and the control frequency (1/T) for both coloured and colourless droplets (Figs. 3b and c), suggesting that the tracking method can be used to measure droplet velocity.
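The velocity measurement and the linearity check can be sketched as below. The camera frame rate is not stated in the text, so `fps` is an assumed parameter; the 25-frame step and the 0.05 mm pixel size come from the text, and the track and calibration points in the example are synthetic.

```python
import numpy as np

def droplet_velocity(centres_px, fps, step=25, pixel_mm=0.05):
    """Speed (mm/s) from displacements between frames that are `step` frames apart."""
    c = np.asarray(centres_px, dtype=float)
    disp_mm = np.linalg.norm(c[step::step] - c[:-step:step], axis=1) * pixel_mm
    return disp_mm / (step / fps)

def linear_fit(x, y):
    """Least-squares line y = slope * x + intercept and its coefficient of determination R^2."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residual = y - (slope * x + intercept)
    r2 = 1.0 - np.sum(residual ** 2) / np.sum((y - y.mean()) ** 2)
    return slope, intercept, r2

# Synthetic track: 4 px per frame at an assumed 50 fps, i.e. 0.2 mm per frame = 10 mm/s
track = [(4 * i, 0) for i in range(101)]
print(droplet_velocity(track, fps=50))          # about 10 mm/s for every 25-frame window

# Synthetic, perfectly linear calibration points: mean velocity versus control frequency 1/T
freq_hz = [2, 2.5, 3.3, 5, 10]
mean_v = [2.0 * f for f in freq_hz]
print(linear_fit(freq_hz, mean_v))
```

Averaging over 25 frames smooths the step-wise motion between electrodes, while the per-window values still expose the fluctuation shown in Fig. 3a.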


Fig. 3. Monitoring of droplet velocity by the CFT-based method with multi-features (Gray + CN). (a) Velocity fluctuation of a colourless droplet at control frequencies of 2 Hz (green), 2.5 Hz (red), 3.3 Hz (purple), 5 Hz (yellow) and 10 Hz (blue). Calibration curves of velocity versus actuation frequency for (b) a colourless droplet and (c) a coloured droplet.

One of the most common problems in digital microfluidics is the failure of droplet control caused by an incorrect droplet position (the edge of the droplet does not overlap the next electrode) [6]. Although improvements in the materials and fabrication techniques of DMF chips can reduce the occurrence of such problems, a feedback system is still necessary to ensure stable control. Unlike feedback systems that adjust the driving voltage, the proposed CFT-based feedback system relies on a self-correcting process that adjusts the droplet position with a back-and-forward approach. The feedback control is implemented as a finite state machine that handles droplet movement through the states of initialization, running, fault and recovery (part 6 of the Supporting information). In experiments with a 15 µL droplet on the single-plate DMF chip, the proposed feedback system successfully recovered droplet movement from incorrect positions (Video S2 in Supporting information). Furthermore, the feedback system was also used for parallel control of multiple droplets by instantiating a CF tracker for each droplet (Video S3 in Supporting information).
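The back-and-forward recovery can be pictured as a small finite state machine. The sketch below is a hypothetical illustration of the four states named in the text, not the implementation described in part 6 of the Supporting information: the route representation, the `max_retries` limit and the rule "step back to the previous electrode, then retry the forward move" are assumptions, and the electrode occupied by the droplet is assumed to be reported by the tracker after each control interval.

```python
from enum import Enum, auto

class State(Enum):
    INITIALIZATION = auto()
    RUNNING = auto()
    FAULT = auto()
    RECOVERY = auto()

class DropletController:
    """Hypothetical feedback controller for one droplet following a route of electrode indices."""

    def __init__(self, route, max_retries=3):
        self.route = list(route)
        self.max_retries = max_retries
        self.state = State.INITIALIZATION
        self.index = 0      # position in the route the droplet should currently occupy
        self.retries = 0

    def step(self, observed):
        """`observed`: electrode on which the tracker located the droplet.
        Returns the electrode to actuate next, or None when finished or aborted."""
        if self.state is State.INITIALIZATION:
            if observed != self.route[0]:
                return None                      # droplet is not at the start of the route
            self.state = State.RUNNING
            return self._advance()
        if self.state is State.RECOVERY:
            if observed == self.route[self.index - 1]:
                self.state = State.RUNNING       # "back" succeeded: retry the forward move
                return self.route[self.index]
            self.state = State.FAULT
        elif self.state is State.RUNNING:
            if observed == self.route[self.index]:
                self.retries = 0
                return self._advance()
            self.state = State.FAULT             # droplet did not reach the target electrode
        # FAULT: step back to the previous electrode unless the retry budget is used up
        self.retries += 1
        if self.retries > self.max_retries or self.index == 0:
            return None                          # give up and report the fault
        self.state = State.RECOVERY
        return self.route[self.index - 1]

    def _advance(self):
        if self.index == len(self.route) - 1:
            return None                          # route completed
        self.index += 1
        return self.route[self.index]

# Simulated run: the first move onto electrode 1 fails once, then succeeds after recovery
ctrl, position, commands = DropletController([0, 1, 2, 3]), 0, []
failures = {1}
command = ctrl.step(position)
while command is not None:
    commands.append(command)
    if command in failures:
        failures.discard(command)                # droplet stays where it was
    else:
        position = command
    command = ctrl.step(position)
print(commands)                                  # [1, 0, 1, 2, 3]
```

For parallel control, one controller (and one tracker) per droplet can be stepped in the same loop.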

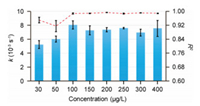

As a proof of principle, the proposed droplet tracking method was applied to monitor the Griess reaction performed on the DMF platform (Fig. 4a). The Griess reaction is widely used for the detection of nitrite in foods and water and relies on the formation of an azo dye: nitrite diazotizes sulfanilic acid, and the resulting diazonium salt couples with N-(1-naphthyl)ethylenediamine dihydrochloride. The detailed experimental procedure and data processing for the DMF-based Griess reaction are described in part 7 of the Supporting information. Owing to online learning of the target droplet features, the proposed method can recognize moving droplets in the camera images even when the droplet colour changes gradually during the reaction (Fig. 4b, Video S4 in Supporting information), which can hardly be achieved with a colour filtering method. In addition, we developed a pixel filtering method to extract the pixels of the recognized droplet whose colour differs from that of the droplet before the reaction. Based on the extracted pixels, the droplet saturation ($S_\mathrm{d}$) was calculated as $S_\mathrm{d} = S_\mathrm{e}/N_\mathrm{d}$, where $S_\mathrm{e}$ is the sum of the saturation of the extracted pixels and $N_\mathrm{d}$ is the total number of pixels in the recognized droplet. Because the pixel filtering method excludes background elements, the influence of background patterns is largely eliminated. As shown in Fig. 4c, the droplet saturation gradually increased from zero to a plateau with increasing reaction time. The final saturation of a reaction is defined as the average droplet saturation over the last 10 s. The calibration curve of final saturation versus nitrite concentration showed satisfactory linearity (Fig. 4d, R² = 0.9939), suggesting that the method can be applied to quantify the nitrite concentration of samples.

Moreover, the LOD was found to be approximately 20 µg/L for nitrite, which is sufficiently low for most field applications such as environmental sensing and food safety. Because our method monitors the reaction in a moving droplet, the colour information is acquired by a camera with limited resolution for each RGB channel (8-bit); the LOD could be further improved by integrating a photodiode or photomultiplier tube as an auxiliary sensor.
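The saturation metric $S_\mathrm{d} = S_\mathrm{e}/N_\mathrm{d}$ and the final-saturation average can be sketched as below. Saturation is taken here as the HSV saturation (max − min)/max of the RGB values; the criterion for deciding that a pixel's colour has changed (a Euclidean RGB distance from a pre-reaction reference colour larger than a tolerance `tol`) and the value of `tol` are assumptions, since the exact pixel filter is described only in the Supporting information.

```python
import numpy as np

def hsv_saturation(rgb):
    """HSV saturation (max - min) / max for an (..., 3) array; 0 for black pixels."""
    rgb = np.asarray(rgb, dtype=float)
    mx, mn = rgb.max(axis=-1), rgb.min(axis=-1)
    return np.where(mx > 0, (mx - mn) / np.where(mx > 0, mx, 1.0), 0.0)

def droplet_saturation(image, mask, ref_rgb, tol=30.0):
    """S_d = S_e / N_d over the droplet mask; only colour-changed pixels contribute to S_e."""
    pixels = image[mask].astype(float)                          # (N_d, 3) droplet pixels
    changed = np.linalg.norm(pixels - np.asarray(ref_rgb, dtype=float), axis=1) > tol
    s_e = hsv_saturation(pixels[changed]).sum()                 # sum over extracted pixels
    return s_e / len(pixels)                                    # divide by all droplet pixels N_d

def final_saturation(times_s, s_d, window_s=10.0):
    """Average droplet saturation over the last `window_s` seconds of the recording."""
    t, s = np.asarray(times_s, dtype=float), np.asarray(s_d, dtype=float)
    return float(s[t >= t[-1] - window_s].mean())

# Hypothetical 4 x 4 droplet: grey before the reaction, one row of pixels turned red-pink
img = np.full((4, 4, 3), 100, dtype=np.uint8)
img[0, :] = (200, 100, 100)                                     # saturation 0.5 each
mask = np.ones((4, 4), dtype=bool)
print(droplet_saturation(img, mask, ref_rgb=(100, 100, 100)))   # 4 * 0.5 / 16 = 0.125
print(final_saturation([0, 5, 10, 15, 20], [0.0, 0.1, 0.2, 0.3, 0.4]))
```

Dividing by all droplet pixels rather than only the extracted ones makes $S_\mathrm{d}$ grow with the fraction of the droplet that has changed colour, not only with the colour intensity.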


Fig. 4. Quantification of nitrite concentration based on the developed CF tracker for DMF. (a) Route of the droplet for the colorimetric assay based on the Griess method. (b) Colour development of the Griess reaction in droplets with nitrite concentrations of 0, 30, 50, 100, 150, 200, 250, 300 and 400 µg/L over 10 min. (c) Dynamic change of the droplet saturation for the droplets in (b), sampled at 5 s intervals. (d) Calibration curve of droplet saturation versus nitrite concentration. Each data point is the mean of three measurements, and error bars show the standard deviation.

The Griess reaction is a two-step consecutive first-order reaction. Its kinetics can be simplified to a first-order reaction [40] with rate constant k, $\mathrm{d}C_\mathrm{P}/\mathrm{d}t = kC_\mathrm{N}$, where $C_\mathrm{P}$ and $C_\mathrm{N}$ are the concentrations of the product and nitrite, respectively. With $C_\mathrm{N} = C_{\mathrm{N},0} - C_\mathrm{P}$ (1:1 conversion of nitrite into product, where $C_{\mathrm{N},0}$ is the initial nitrite concentration) and $C_\mathrm{P}(0) = 0$, integration gives $C_\mathrm{P} = C_{\mathrm{N},0}(1 - e^{-kt})$. The reaction kinetics can therefore be studied by fitting the droplet saturation curves to this equation. In this way, we obtained k of the Griess reaction for droplets with different nitrite concentrations (30-400 µg/L). The fitted rate constants were close to each other (~7.00 × 10⁻³ s⁻¹) for droplets with nitrite concentrations of at least 100 µg/L (Fig. 5). In addition, the saturation curves fitted the equation well (R² > 0.98), indicating that the trends of the droplet saturation are consistent with the reaction kinetics. On the other hand, the fitted rate constants decreased to 5.22 × 10⁻³ s⁻¹ and 6.02 × 10⁻³ s⁻¹ for droplets with the lower concentrations of 30 µg/L and 50 µg/L, respectively. Since the rate constant of the Griess reaction is theoretically independent of concentration, the decrease could be caused by the low signal-to-noise ratio and the delayed response of the saturation curve, because the product concentration in the early part of the reaction is below the LOD. The fitting performance supported this hypothesis, as R² decreased markedly when the nitrite concentration in the droplets was lower than 100 µg/L. Therefore, we suggest that the proposed method can evaluate the rate constant in droplets with concentrations higher than 5-fold the LOD.
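Fitting a saturation curve to the integrated rate law can be sketched without an external optimizer: for each candidate k on a logarithmic grid, the best plateau value $S_\infty$ follows from linear least squares, and the k with the smallest residual is kept. This assumes that droplet saturation is proportional to $C_\mathrm{P}$, so that $S(t) = S_\infty(1 - e^{-kt})$; the grid range and resolution are illustrative, a nonlinear least-squares routine such as `scipy.optimize.curve_fit` would be the usual refinement, and the example data are synthetic.

```python
import numpy as np

def fit_first_order(t, s, k_grid=None):
    """Fit s(t) = s_inf * (1 - exp(-k t)); return (k, s_inf, R^2)."""
    t, s = np.asarray(t, dtype=float), np.asarray(s, dtype=float)
    if k_grid is None:
        k_grid = np.logspace(-5, -1, 4001)                 # candidate rate constants in s^-1
    g = 1.0 - np.exp(-np.outer(k_grid, t))                 # basis curves, one row per k
    s_inf = (g @ s) / np.einsum("ij,ij->i", g, g)          # least-squares plateau for each k
    sse = np.sum((s - s_inf[:, None] * g) ** 2, axis=1)    # residual sum of squares for each k
    best = int(np.argmin(sse))
    r2 = 1.0 - sse[best] / np.sum((s - s.mean()) ** 2)
    return float(k_grid[best]), float(s_inf[best]), float(r2)

# Synthetic curve sampled every 5 s for 10 min with k = 7.0e-3 s^-1 and small noise
t = np.arange(0, 601, 5)
rng = np.random.default_rng(1)
s = 0.4 * (1 - np.exp(-7.0e-3 * t)) + rng.normal(0, 0.005, t.size)
print(fit_first_order(t, s))                               # k close to 7.0e-3
```

When the early part of the curve is buried in noise, as described above for concentrations below 100 µg/L, the initial rise is poorly constrained and the fitted k and R² both degrade.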


Fig. 5. Rate constants (blue bars) estimated by the fitting method for nitrite concentrations ranging from 30 µg/L to 400 µg/L, and the corresponding R² of the fitting (red dashed line). Each data point is the mean of three measurements, and error bars show the standard deviation.

This model experiment demonstrated the advantages of the proposed droplet tracking method for monitoring the reaction state in a moving droplet on the DMF chip, for both colorimetric assays and kinetic studies. In the future, it could also be applied to reactions without colour development by using other imaging techniques such as infrared imaging [41], fluorescence imaging [42] and chemiluminescence imaging [43].

In summary, we developed a droplet tracking method for the automatic recognition and feedback control of droplets on a DMF chip, which achieves higher precision than conventional methods based on object detection. A standard dataset containing droplets of different colours and velocities was established to evaluate the proposed method. Both the source code and the dataset are publicly available [36]. The results showed that the proposed method with the Gray + CN feature could locate both coloured and colourless droplets in 99.92% of frames at high speed (< 8 ms per frame) with a location error as low as 0.2 mm, and the developed system can be used for parallel control of multiple droplets. We also demonstrated the capability of the proposed method to obtain dynamic information on a reaction on a DMF platform. In a model experiment based on the Griess reaction, it was used for both the colorimetric assay of nitrite and the study of reaction kinetics. We believe the proposed method is promising for a broad range of applications, including point-of-care testing, sample pre-treatment, cell culture [44, 45] and chemical synthesis in microfluidics [46].

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors gratefully acknowledge financial support from the National Natural Science Foundation of China (Nos. 31701698, 81972017), the Shanghai Key Laboratory of Forensic Medicine, Academy of Forensic Science (No. KF1910) and the Shanghai Shenkang Hospital Development Center to promote clinical skills and clinical innovation ability in municipal hospitals of the Three-year Action Plan Project (No. SHDC2020CR3006A).

Supplementary materials

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.cclet.2021.05.002.

[1] J. Zhong, J. Riordon, T.C. Wu, et al., Lab Chip 20 (2020) 709-716. DOI:10.1039/C9LC01042D

[2] W. Wang, X. Rui, W. Sheng, et al., Sens. Actuators B: Chem. 324 (2020) 128763. DOI:10.1016/j.snb.2020.128763

[3] J. Guo, L. Lin, K. Zhao, et al., Lab Chip 20 (2020) 1577-1585. DOI:10.1039/D0LC00024H

[4] A.T. Jafry, H. Lee, A.P. Tenggara, et al., Sens. Actuators B: Chem. 282 (2019) 831-837. DOI:10.1016/j.snb.2018.11.135

[5] X. Min, C. Bao, W.S. Kim, ACS Sens. 4 (2019) 918-923. DOI:10.1021/acssensors.8b01689

[6] T. Loveless, J. Ott, P. Brisk, CGO 2020: Proceedings of the 18th ACM/IEEE International Symposium on Code Generation and Optimization, 2020, pp. 171-184.

[7] M. Alistar, U. Gaudenz, Bioeng. 4 (2017) 45. DOI:10.3390/bioengineering4020045

[8] G. Sathyanarayanan, M. Haapala, C. Dixon, A.R. Wheeler, T.M. Sikanen, Adv. Mater. Technol. 5 (2020) 2000451. DOI:10.1002/admt.202000451

[9] C. Dixon, J. Lamanna, A.R. Wheeler, Lab Chip 20 (2020) 1845-1855. DOI:10.1039/D0LC00302F

[10] R.S. Sista, R. Ng, M. Nuffer, et al., Diagnostics 10 (2020) 21. DOI:10.3390/diagnostics10010021

[11] M.S. Lee, W. Hsu, H.Y. Huang, et al., Biosens. Bioelectron. 150 (2020) 111851. DOI:10.1016/j.bios.2019.111851

[12] P.Y. Keng, S. Chen, H. Ding, et al., Proc. Natl. Acad. Sci. USA 109 (2012) 690-695. DOI:10.1073/pnas.1117566109

[13] J. Lamanna, E.Y. Scott, H.S. Edwards, et al., Nat. Commun. 11 (2020) 5632. DOI:10.1038/s41467-020-19394-5

[14] X. Xu, Q. Zhang, J. Song, et al., Anal. Chem. 92 (2020) 8599-8606. DOI:10.1021/acs.analchem.0c01613

[15] A.H.C. Ng, M.D. Chamberlain, H. Situ, V. Lee, A.R. Wheeler, Nat. Commun. 6 (2015) 7513. DOI:10.1038/ncomms8513

[16] J. Zhai, H. Li, A.H.H. Wong, et al., Microsyst. Nanoeng. 6 (2020) 1-10. DOI:10.1038/s41378-019-0121-y

[17] S.M. Lu, Y.Y. Peng, Y.L. Ying, Y.T. Long, Anal. Chem. 92 (2020) 5621-5644. DOI:10.1021/acs.analchem.0c00931

[18] K. Sun, C. Zhao, X. Zeng, et al., Nat. Commun. 10 (2019) 5083. DOI:10.1038/s41467-019-13047-y

[19] K.C. Neuman, A. Nagy, Nat. Methods 5 (2008) 491-505. DOI:10.1038/nmeth.1218

[20] N. Yang, X. Liu, X. Zhang, et al., Sens. Actuators A 219 (2014) 6-12. DOI:10.1016/j.sna.2014.06.004

[21] S. Mukherjee, I. Pan, T. Samanta, Algorithm for fault localization on a digital microfluidic biochip using particle swarm optimization technique, in: 2016 IEEE International Symposium on Circuits and Systems (ISCAS), 2016, pp. 602-605.

[22] I. Swyer, R. Fobel, A.R. Wheeler, Langmuir 35 (2019) 5342-5352. DOI:10.1021/acs.langmuir.9b00220

[23] A.D. Ruvalcaba-Cardenas, P. Thurgood, S. Chen, K. Khoshmanesh, F.J. Tovar-Lopez, ACS Appl. Mater. Interfaces 11 (2019) 39283-39291. DOI:10.1021/acsami.9b10796

[24] M. Li, C. Dong, M.K. Law, et al., Sens. Actuators B: Chem. 287 (2019) 390-397. DOI:10.1016/j.snb.2019.02.021

[25] R. Fobel, C. Fobel, A.R. Wheeler, Appl. Phys. Lett. 102 (2013) 193513. DOI:10.1063/1.4807118

[26] C. Li, K. Zhang, X. Wang, et al., Sens. Actuators B: Chem. 255 (2018) 3616-3622. DOI:10.1016/j.snb.2017.09.071

[27] S. Han, X. Liu, L. Wang, Y. Wang, G. Zheng, MethodsX 6 (2019) 1443-1453. DOI:10.1016/j.mex.2019.06.006

[28] Q. Zhu, Y. Lu, S. Xie, et al., Microfluid. Nanofluid. 24 (2020) 1-9. DOI:10.1007/s10404-019-2306-y

[29] Y.J. Shin, J.B. Lee, Rev. Sci. Instrum. 81 (2010) 014302. DOI:10.1063/1.3274673

[30] P.Q.N. Vo, M.C. Husser, F. Ahmadi, H. Sinha, S.C.C. Shih, Lab Chip 17 (2017) 3437-3446. DOI:10.1039/C7LC00826K

[31] M. Willsey, K. Strauss, L. Ceze, et al., Puddle: a dynamic, error-correcting, full-stack microfluidics platform, in: ASPLOS '19: Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, April 2019, pp. 183-197.

[32] D.S. Bolme, J.R. Beveridge, B.A. Draper, Y.M. Lui, Visual object tracking using adaptive correlation filters, in: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2010, pp. 2544-2550.

[33] M. Danelljan, F. Shahbaz Khan, M. Felsberg, J. van de Weijer, Adaptive color attributes for real-time visual tracking, in: 2014 IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 1090-1097.

[34] J.F. Henriques, R. Caseiro, P. Martins, J. Batista, IEEE Trans. Pattern Anal. Mach. Intell. 37 (2015) 583-596. DOI:10.1109/TPAMI.2014.2345390

[35] Y. Li, J. Zhu, A scale adaptive kernel correlation filter tracker with feature integration, in: Computer Vision-ECCV 2014 Workshops, 2015, pp. 254-265.

[36] The source code is available at https://github.com/ecustdmf/MV4DMF.

[37] Z. Gu, M.L. Wu, B.Y. Yan, H.F. Wang, C. Kong, ACS Omega 5 (2020) 11196-11201. DOI:10.1021/acsomega.0c01274

[38] S. Liu, D. Liu, G. Srivastava, D. Polap, M. Wozniak, Complex Intell. Syst. 7 (2021) 1895-1917. DOI:10.1007/s40747-020-00161-4

[39] M. Mueller, N. Smith, B. Ghanem, Context-aware correlation filter tracking, in: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 1387-1395.

[40] B. Lin, J. Xu, K. Lin, M. Li, M. Lu, ACS Sens. 3 (2018) 2541-2549. DOI:10.1021/acssensors.8b00781

[41] E. Polshin, B. Verbruggen, D. Witters, et al., Sens. Actuators B: Chem. 196 (2014) 175-182. DOI:10.1016/j.snb.2014.01.105

[42] S. Han, Q. Zhang, X. Zhang, et al., Biosens. Bioelectron. 143 (2019) 111597. DOI:10.1016/j.bios.2019.111597

[43] M.H. Shamsi, K. Choi, A.H.C. Ng, M.D. Chamberlain, A.R. Wheeler, Biosens. Bioelectron. 77 (2016) 845-852. DOI:10.1016/j.bios.2015.10.036

[44] Y. Zheng, Z. Wu, J.M. Lin, L. Lin, Chin. Chem. Lett. 31 (2020) 451-454. DOI:10.1016/j.cclet.2019.07.036

[45] Y. Zheng, Z. Wu, M. Khan, et al., Anal. Chem. 91 (2019) 12283-12289. DOI:10.1021/acs.analchem.9b02434

[46] Z. Wu, Y. Zheng, L. Lin, et al., Angew. Chem. Int. Ed. 59 (2020) 2225-2229. DOI:10.1002/anie.201911252

2021, Vol. 32
