The Chinese Meteorological Society

Article Information

- ZHAO, Bin, and Bo ZHANG, 2018.

- Assessing Hourly Precipitation Forecast Skill with the Fractions Skill Score.

- J. Meteor. Res., 32(1): 135-145

- http://dx.doi.org/10.1007/s13351-018-7058-1

Article History

- Received April 27, 2017

- in final form September 18, 2017

2. China Meteorological Administration Numerical Weather Prediction Center, Beijing 100081

Continuous improvements in computing power have led to increasingly high resolution in operational numerical models, allowing small-scale local weather events such as heavy regional rainfall to be predicted. These models can now provide accurate forecast information on the distribution of precipitation, with increasingly realistic structures (Atger, 2001; Weisman et al., 2008). However, some important mesoscale phenomena, such as squall lines, remain very difficult to forecast. Forecast errors grow more rapidly at smaller spatial scales (Lorenz, 1969), which limits predictability.

Some researchers still use traditional categorical verification scores based on the hit rate for precipitation events, such as the threat score and frequency bias (Murphy, 1971; Chen et al., 2013). However, it is difficult to demonstrate the value of high-resolution forecasts with these traditional statistics (Ebert, 2008) because they compare precipitation at matching grid points, so false alarms and missed events increase at finer spatial resolutions. A higher spatial resolution introduces faster-growing errors because smaller-scale details are represented, and these details are difficult to match precisely with the observed small-scale features. This makes precipitation features at some scales unpredictable and not credible.

Mass et al. (2002) concluded that traditional categorical scores for a high-resolution model readily expose the precipitation forecast to a “double-penalty” problem caused by slight displacements in either space or time: a correctly predicted but displaced rain feature is penalized once as a miss where rain was observed and again as a false alarm where it was forecast. These traditional methods therefore provide insufficient assessment information and cannot objectively reflect the real ability of numerical models to forecast precipitation (Baldwin and Kain, 2006; Brill and Mesinger, 2009; Davis et al., 2009).
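The double penalty can be illustrated with a toy calculation. The sketch below is not from the paper: it assumes a hypothetical 3 × 4 grid in which an observed rain band is forecast one grid column too far east, and counts hits, misses, and false alarms for the threat score, TS = hits / (hits + misses + false alarms).

```python
# Toy illustration (hypothetical fields, not from the paper) of the
# "double penalty" in grid-point categorical verification.

def contingency(fcst, obs, threshold):
    """Count (hits, misses, false_alarms) for events >= threshold."""
    hits = misses = false_alarms = 0
    for f_row, o_row in zip(fcst, obs):
        for f, o in zip(f_row, o_row):
            f_event, o_event = f >= threshold, o >= threshold
            if f_event and o_event:
                hits += 1
            elif o_event:
                misses += 1          # rain observed but not forecast here
            elif f_event:
                false_alarms += 1    # rain forecast but not observed here
    return hits, misses, false_alarms


def threat_score(hits, misses, false_alarms):
    """TS = hits / (hits + misses + false alarms); NaN if there are no events."""
    total = hits + misses + false_alarms
    return hits / total if total else float("nan")


# Observed band (5 mm) in column 1; forecast band displaced to column 2.
obs = [[0, 5, 0, 0] for _ in range(3)]
fcst = [[0, 0, 5, 0] for _ in range(3)]

h, m, fa = contingency(fcst, obs, threshold=1.0)
print(h, m, fa, threat_score(h, m, fa))  # 0 3 3 0.0
```

Each of the three displaced grid boxes is counted twice, once as a miss and once as a false alarm, so the near-perfect band receives the same TS of zero as a forecast with no rain at all.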

Murphy (1993) described the general characteristics of a good forecast in terms of consistency, quality, and value. For hourly forecasts in particular, precipitation amounts are small, which makes a good forecast difficult to achieve. A mismatch between a precipitation forecast and observations may indicate that the traditional skill score is not performing as it should, making it difficult to identify the real performance of the model forecast. Lower percentile thresholds are sensitive to larger-scale features, whereas higher percentile thresholds isolate small, peaked features (Roberts, 2008). For precipitation forecasts on short (hourly) timescales, spatial and temporal errors are penalized twice, once as misses and once as false alarms. It is therefore necessary to choose a method that considers multiple scales and to determine a suitable scale at which to analyze the precipitation in detail, so that the differences between the forecasts and observations can be distinguished.

Spatial verification techniques can compensate for deficiencies in the traditional skill score (Ahijevych et al., 2009; Gilleland, 2016). The main spatial methods include the neighborhood verification method (Ebert, 2009), scale decomposition (Casati et al., 2004; Casati, 2010; Mittermaier and Roberts, 2010), and object-based methods (Casati et al., 2008). Object-based methods focus on attributes such as the position, shape, and intensity of objects and use the degree of fit as the criterion for evaluation. Ebert and Gallus (2009) described the contiguous rain area (CRA) spatial verification method, which is based on object attributes. This method decomposes the overall error in the precipitation forecast into components, such as the error due to displacement, to identify the sources of systematic error. Scientists at NCAR developed the Method for Object-Based Diagnostic Evaluation (MODE) and included it in their Model Evaluation Tools (MET) (Davis et al., 2009). The method calculates matching characteristics such as the center of mass, the area ratio, the orientation angle, and the overlap ratio as separate factors to determine similarity scores. However, the method is affected by a variety of factors, such as the smoothing radius and filter threshold, and inconsistent results obtained with different smoothing radii can give a misleading evaluation.

The neighborhood (fuzzy) method upscales the high-resolution forecast and the observations to larger scales and measures their similarity through spatial smoothing or statistical probability distributions. Simple upscaling can provide precipitation skill scores at different scales (Zepeda-Arce et al., 2000; Yates et al., 2006; Zhao and Zhang, 2017), but it cannot avoid excessive smoothing of the precipitation field. Roberts and Lean (2008) proposed an improved neighborhood method that, based on a mean squared error (MSE) skill score, obtains comprehensive assessment information by comparing the forecast and observed fractional coverage of grid-box events in spatial windows of increasing size. Its purpose is to identify the scales at which the forecast has a fractional coverage similar to that of the observations and is therefore useful. They named it the fractions skill score (FSS). The score is simple to construct, is not subject to complex factors (e.g., a filter threshold or smoothing radius), and gives a consistent evaluation; it has thus become one of the most widely used spatial techniques for high-resolution verification.

We assessed the performance of the FSS at high spatial and temporal resolutions using products of the Global/Regional Assimilation and Prediction System regional forecasting model (GRAPES_MESO V4.0), developed and operated by the China Meteorological Administration Numerical Weather Prediction Center. Using hourly precipitation verification results, we studied the similarities and differences between the neighborhood spatial method and the traditional precipitation skill score. Through this analysis and comparison, we aimed to propose an evaluation scheme for precipitation forecasts that combines the spatial technique and the traditional categorical score.

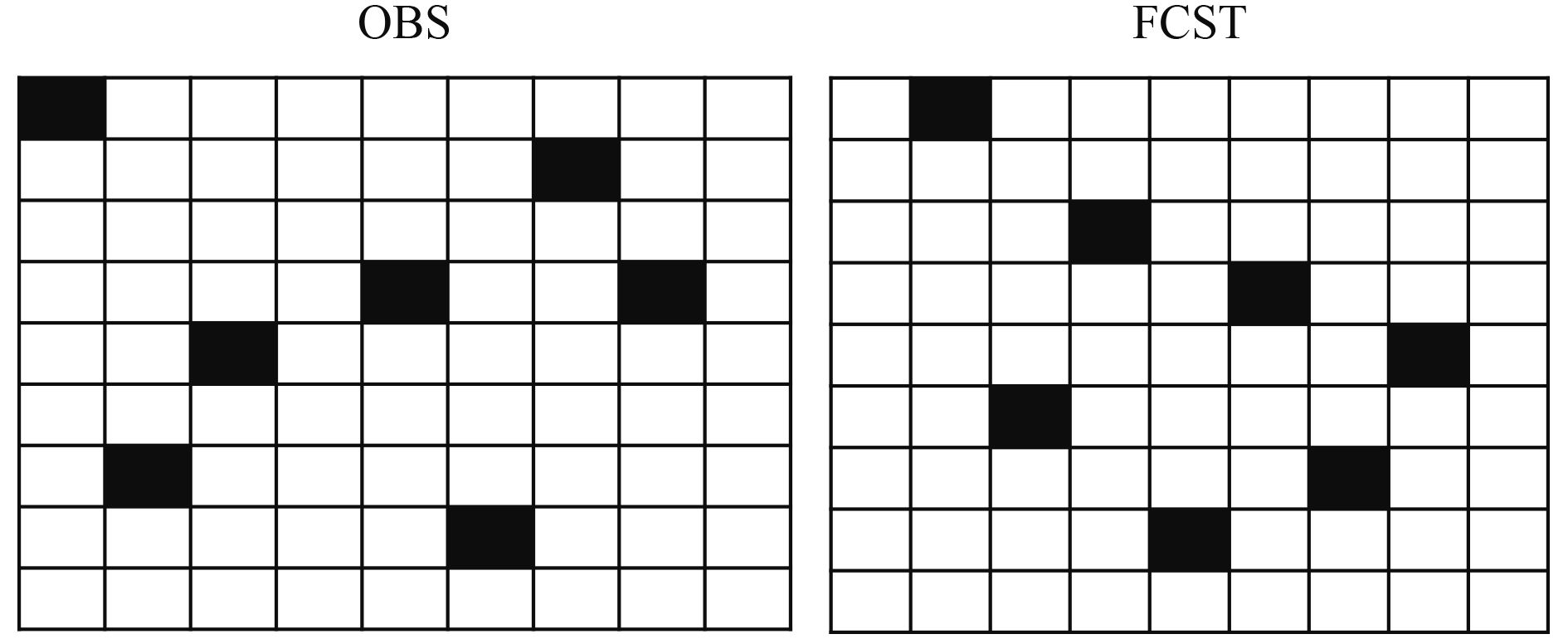

2 Data and methods

2.1 Fractions skill score

The FSS, introduced by Roberts and Lean (2008), differs from traditional categorical skill scores, which verify forecasts against observations grid point by grid point. Instead, the FSS is based on the fraction of grid boxes in which precipitation occurs within spatial windows of different sizes. In the 7 × 7 grid box in Fig. 1, the forecast and observed events each occupy 7 grid boxes, but their locations do not coincide at any point. A traditional categorical score such as the threat score would therefore be 0, indicating no forecasting skill. However, in terms of the fraction of the analysis domain covered by precipitation (the number of precipitation grid boxes divided by the number of analysis grid boxes), both the forecast and the observations have a fraction of 7/49; that is, the forecast precipitation coverage is the same as the observed coverage. The FSS computes such grid-box fractions for a given precipitation threshold within spatial windows of a given size. It first defines a precipitation threshold that is applied to both the forecast and the verifying observation field. A neighborhood scale is then selected, and the forecast and observed fractions of events in each neighborhood are compared using the MSE. This quantity is called the fractions Brier score (FBS) and is defined as:

$$\mathrm{FBS} = \left\langle \left(P_{\mathrm{fcst}} - P_{\mathrm{obs}}\right)^2 \right\rangle \qquad (1)$$

| Figure 1 Schematic example of observations (OBS) and forecast (FCST) fractions. |

The FBS directly compares the fractional coverage of rainfall events in the windows surrounding each grid box in the forecast and observed fields. $P_{\mathrm{fcst}}$ and $P_{\mathrm{obs}}$ are the fractions of forecast and observed events, respectively, in the neighborhood of each grid box, and the angle brackets denote a mean over all grid boxes. The FBS is similar to the Brier score, except that the observed values are fractions that can take any value between 0 and 1 rather than only 0 or 1. The fractions skill score (FSS) is then defined as follows:

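$$\mathrm{FSS} = 1 - \frac{\mathrm{FBS}}{\left\langle P_{\mathrm{fcst}}^2 \right\rangle + \left\langle P_{\mathrm{obs}}^2 \right\rangle} \qquad (2)$$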

The denominator in the second term is the FBS of the worst possible forecast, in which there is no overlap between the forecast and observed fractions. The FSS ranges from 0 (complete mismatch) to 1 (perfect forecast). In general, the FSS increases gradually as the neighborhood scale increases. If the forecast is biased such that $P_{\mathrm{fcst}} = bP_{\mathrm{obs}}$, the FSS asymptotes to $2b/(b^2 + 1)$ (Mittermaier and Roberts, 2010). Roberts and Lean (2008) defined a threshold for the FSS above which the forecast is considered useful (skillful):

$$\mathrm{FSS}_{\mathrm{useful}} \geq 0.5 + \frac{f}{2} \qquad (3)$$

where $f$ is the observed fractional rainfall coverage over the domain (the wet-area ratio). If $f$ is small (as is typical over a large domain), 0.5 can be used as a lower limit, and the useful FSS can be approximated as $\mathrm{FSS}_{\mathrm{useful}} \geq 0.5$ (Mittermaier and Roberts, 2010).
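A minimal sketch of Eqs. (1)–(3) in plain Python follows. It is an illustration under stated assumptions, not the authors' code: windows are assumed to be square, n × n grid boxes with n odd and centered on each grid box, and grid boxes outside the domain are assumed to contain no event (zero padding). The usage example builds a hypothetical 7 × 7 field in the spirit of Fig. 1, with 7 forecast and 7 observed events that never coincide.

```python
# Sketch of the fractions skill score (Roberts and Lean, 2008), Eqs. (1)-(3).
# Assumptions (illustrative, not from the paper): n x n windows with n odd,
# centred on each grid box; points outside the domain count as "no event".

def fractions(field, threshold, n):
    """Fraction of grid boxes with value >= threshold in each n x n window."""
    ny, nx, half = len(field), len(field[0]), n // 2
    out = []
    for j in range(ny):
        for i in range(nx):
            count = sum(
                1
                for jj in range(j - half, j + half + 1)
                for ii in range(i - half, i + half + 1)
                if 0 <= jj < ny and 0 <= ii < nx and field[jj][ii] >= threshold
            )
            out.append(count / (n * n))
    return out  # flattened list, one fraction per grid box


def mean(values):
    return sum(values) / len(values)


def fbs_fss(p_fcst, p_obs):
    """Eq. (1) FBS and Eq. (2) FSS from matching lists of fractions."""
    fbs = mean([(f - o) ** 2 for f, o in zip(p_fcst, p_obs)])
    fbs_worst = mean([f * f for f in p_fcst]) + mean([o * o for o in p_obs])
    return fbs, (1.0 - fbs / fbs_worst if fbs_worst > 0 else float("nan"))


def fss_useful(f):
    """Eq. (3): useful-skill target for an observed wet-area ratio f."""
    return 0.5 + f / 2.0


# Hypothetical 7 x 7 example: 7 observed events on the diagonal, 7 forecast
# events shifted one column east (wrapping at the edge), so none coincide.
obs = [[1.0 if i == j else 0.0 for i in range(7)] for j in range(7)]
fcst = [[1.0 if i == (j + 1) % 7 else 0.0 for i in range(7)] for j in range(7)]

for n in (1, 3, 5, 13):
    _, score = fbs_fss(fractions(fcst, 0.1, n), fractions(obs, 0.1, n))
    print(n, round(score, 3))
```

With n = 1 the FSS is 0, matching the zero threat score; once the window is wide enough (here n = 13) to contain the whole domain from every grid box, both fractions are identical everywhere and the FSS reaches 1. The domain wet-area ratio is f = 7/49, so `fss_useful(7 / 49)` is about 0.571. Passing fractions that satisfy P_fcst = 2 P_obs to `fbs_fss` returns 0.8, the asymptote 2b/(b² + 1) for b = 2.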

2.2 Data

The quantitative precipitation estimation products developed by the China National Meteorological Information Center (NMIC) were selected as the observational data in our analysis. This dataset combined data from regional observation gauges (> 30,000 in China), high-resolution radar estimates, and satellite products. The spatial resolution was 5 km and the temporal resolution was 1 h.

The products of the GRAPES_MESO V4.0 regional model, the operational model of the China Meteorological Administration Numerical Weather Prediction Center, were selected as the forecast data. The model resolution was 10 km, and the domain covered the same area of China and surrounding regions as the observational dataset (15°–65°N, 70°–145°E).

We used hourly precipitation forecasts from July 1 to August 31, 2016, initialized at 0000 UTC. For the analysis, both the forecasts and the observations were interpolated onto the same 10-km grid so that they could be evaluated at the same resolution. This paper focuses on assessing the neighborhood spatial method over this two-month period and on how the FSS differs from the traditional precipitation skill score, with the aim of developing an effective analysis scheme for hourly precipitation forecasts that combines the two verification methods.

3 Verification of hourly precipitation

A region (15°–65°N, 70°–145°E) was selected as the analysis area, and two months of hourly precipitation products were used to assess the performance of the model. Eight neighborhood scales (10, 30, 50, 90, 130, 170, 330, and 650 km) and four 1-h precipitation thresholds (0.1, 1.0, 3.0, and 5.0 mm) were used. We first analyzed the ability of the model to forecast precipitation at different spatial scales. Figure 2 shows the two-month mean FSS for the different precipitation thresholds. The x-axis is the neighborhood scale in kilometers and the y-axis is the forecast lead time in hours. The black line marks the useful scale (FSS = 0.5), at which the forecast becomes credible.


| Figure 2 Mean FSS for hourly precipitation thresholds of (a) 0.1, (b) 1.0, (c) 3.0, and (d) 5.0 mm h–1, based on the first 24-h forecasts of the GRAPES_MESO during July and August 2016. The black line in each panel is the useful scale. |

Traditional categorical verification of gridded forecasts matches each forecast with the observed value in the same grid box, so a forecast is credited only if it corresponds to the observation in both space and time. For precipitation forecasts with high spatiotemporal resolution, particularly hourly evaluations, it is difficult to match the forecast and observations perfectly, and even a slight displacement affects the skill score. Fuzzy verification assumes that a slightly displaced forecast can still be useful, with the allowable displacement defined by the neighborhood scale. Figure 2 shows large, unstable variations in the FSS during the first 6 h of the forecast, which we attribute to the model spin-up period. After that, the results behave as would be expected meteorologically. At the low precipitation threshold (0.1 mm h–1), the score mainly reflects the ability of the model to forecast rain belts and gives a useful scale of about 130 km over the last 18 h. At higher precipitation thresholds, the useful scale varies strongly with time because of uncertainty in the location of the rainfall. As the threshold increases, the events become rarer, so the useful scale increases to 170 km for 1 mm h–1 and to > 330 km for 3 mm h–1, and for the largest threshold (5 mm h–1) it exceeds 500 km. It is usually hard to predict the correct location of rain for such low-probability events. The FSS for large precipitation thresholds is always < 0.5 at smaller spatial scales. This does not mean that the model has no skill in forecasting such precipitation: FSS values below the useful level still measure relative skill, and the level that counts as useful may depend on the application of the forecast (Mittermaier and Roberts, 2010).

To obtain a unified measure, we adopted the useful scale for the 1 mm h–1 threshold (moderate rain), 170 km, as the analysis scale for calculating the skill scores and assessing the difference between the traditional categorical score and the FSS spatial method.
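The choice of a useful scale can be sketched as a simple search. The helper below is hypothetical, not the authors' code: it converts the neighborhood scales listed above into window widths on the common 10-km grid, and returns the smallest scale at which a given FSS curve reaches the target, 0.5 by default or 0.5 + f/2 from Eq. (3). The FSS values in the example are invented for illustration only and are not results from the paper.

```python
# Hypothetical useful-scale search; the FSS values below are invented.

GRID_KM = 10  # common verification grid spacing (Section 2.2)
SCALES_KM = [10, 30, 50, 90, 130, 170, 330, 650]


def window_width(scale_km, grid_km=GRID_KM):
    """Neighbourhood scale in km -> odd window width in grid boxes."""
    n = round(scale_km / grid_km)
    if n < 1 or n % 2 == 0:
        raise ValueError(f"{scale_km} km is not an odd number of grid boxes")
    return n


def useful_scale(fss_by_scale, target=0.5):
    """Smallest scale (km) whose FSS >= target, or None if never reached."""
    for scale in sorted(fss_by_scale):
        if fss_by_scale[scale] >= target:
            return scale
    return None


print([window_width(s) for s in SCALES_KM])  # [1, 3, 5, 9, 13, 17, 33, 65]

# Invented FSS curve for one threshold and lead time (illustration only).
example = dict(zip(SCALES_KM, [0.21, 0.27, 0.31, 0.38, 0.44, 0.52, 0.63, 0.74]))
print(useful_scale(example))  # 170
```

Returning `None` when the target is never reached mirrors the case in Fig. 2 where the useful scale lies beyond the largest scale tested.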

The similarity and error distribution characteristics of different scales were analyzed. Equation (1) can be decomposed as follows:

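$$\mathrm{FBS} = \sigma_{\mathrm{fcst}}^2 + \sigma_{\mathrm{obs}}^2 - 2\sigma_{\mathrm{fcst}}\sigma_{\mathrm{obs}}R + \left(\langle P_{\mathrm{fcst}}\rangle - \langle P_{\mathrm{obs}}\rangle\right)^2 \qquad (4)$$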

where σ is the standard deviation and R is the correlation coefficient between the forecast and observed fractions:

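$$\sigma_{\mathrm{fcst}}^2 = \langle P_{\mathrm{fcst}}^2\rangle - \langle P_{\mathrm{fcst}}\rangle^2 \qquad (5)$$

$$\sigma_{\mathrm{obs}}^2 = \langle P_{\mathrm{obs}}^2\rangle - \langle P_{\mathrm{obs}}\rangle^2 \qquad (6)$$

$$R = \frac{\langle P_{\mathrm{fcst}}P_{\mathrm{obs}}\rangle - \langle P_{\mathrm{fcst}}\rangle\langle P_{\mathrm{obs}}\rangle}{\sigma_{\mathrm{fcst}}\sigma_{\mathrm{obs}}} \qquad (7)$$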

The first three terms on the right-hand side of Eq. (4) reflect pattern (displacement) errors, and the last term is related to the systematic error (overall bias).

Equation (2) can therefore be rewritten as:

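$$1 - \mathrm{FSS} = \frac{\sigma_{\mathrm{fcst}}^2}{\mathrm{FBS}_{\mathrm{ref}}} + \frac{\sigma_{\mathrm{obs}}^2}{\mathrm{FBS}_{\mathrm{ref}}} - \frac{2\sigma_{\mathrm{fcst}}\sigma_{\mathrm{obs}}R}{\mathrm{FBS}_{\mathrm{ref}}} + \frac{\left(\langle P_{\mathrm{fcst}}\rangle - \langle P_{\mathrm{obs}}\rangle\right)^2}{\mathrm{FBS}_{\mathrm{ref}}}, \qquad \mathrm{FBS}_{\mathrm{ref}} = \langle P_{\mathrm{fcst}}^2\rangle + \langle P_{\mathrm{obs}}^2\rangle \qquad (8)$$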

The denominator is the reference FBS value that can be thought of as the maximal FBS value (worst possible forecast), in which there is no overlap between forecasted and observed events.
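Because Eqs. (4)–(8) are exact algebraic identities, they can be checked numerically. The short sketch below uses arbitrary, made-up fraction values (not data from the paper), computes σ with the population (divide-by-N) convention implied by the angle-bracket means, and confirms that the four terms, each divided by the reference FBS, add up to 1 − FSS. The term names follow Fig. 3; carrying the minus sign inside TERM-R is an assumption of this sketch.

```python
import math


def mean(xs):
    return sum(xs) / len(xs)


def fss_terms(p_fcst, p_obs):
    """Return the four terms of Eq. (8), each divided by the reference FBS."""
    mf, mo = mean(p_fcst), mean(p_obs)
    sf = math.sqrt(mean([p * p for p in p_fcst]) - mf * mf)   # Eq. (5)
    so = math.sqrt(mean([p * p for p in p_obs]) - mo * mo)    # Eq. (6)
    r = (mean([f * o for f, o in zip(p_fcst, p_obs)]) - mf * mo) / (sf * so)  # Eq. (7)
    fbs_ref = mean([p * p for p in p_fcst]) + mean([p * p for p in p_obs])
    return {
        "TERM-FCST": sf ** 2 / fbs_ref,
        "TERM-OBS": so ** 2 / fbs_ref,
        "TERM-R": -2.0 * sf * so * r / fbs_ref,
        "TERM-SYS": (mf - mo) ** 2 / fbs_ref,
    }


p_fcst = [0.0, 0.2, 0.5, 0.9, 0.4]   # made-up neighbourhood fractions
p_obs = [0.1, 0.3, 0.3, 0.7, 0.0]

fbs = mean([(f - o) ** 2 for f, o in zip(p_fcst, p_obs)])                          # Eq. (1)
fss = 1.0 - fbs / (mean([f * f for f in p_fcst]) + mean([o * o for o in p_obs]))  # Eq. (2)
terms = fss_terms(p_fcst, p_obs)
print(math.isclose(1.0 - fss, sum(terms.values())))  # True
```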

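Figure 3 shows the five terms of Eq. (8): 1 – FSS, the term related to the standard deviation of the forecast (TERM-FCST), the term related to the standard deviation of the observations (TERM-OBS), the correlation term (TERM-R), and the systematic-error term (TERM-SYS).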


| Figure 3 Distributions of (a) 1 – FSS, (b) TERM-FCST, (c) TERM-OBS, (d) TERM-R, and (e) TERM-SYS, with the four precipitation thresholds (0.1, 1.0, 3.0, and 5.0 mm h–1). See text for detailed expressions of the five terms. |

To further examine the reasons for this distribution, Fig. 4 shows the distributions of $\sigma_{\mathrm{fcst}}$, $\sigma_{\mathrm{obs}}$, $R$, and the maximum (reference) FBS.


| Figure 4 Distributions of the standard deviation of (a) forecast and (b) observation, (c) correlation coefficient, and (d) maximum FBS, with four thresholds (0.1, 1.0, 3.0, and 5.0 mm h–1) for hourly precipitation forecasts during July and August 2016. |


| Figure 5 Distribution of 2-month averaged values of FSS with 4 thresholds (0.1, 1.0, 3.0, and 5.0 mm h–1) in July and August 2016. |

As this analysis shows, forecast stability is low during the initial stage of the forecast (the spin-up period), which should therefore be given little weight in the analysis. Because the systematic error contributes little to the FBS, the bias-corrected FBS can be shown to follow:

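$$\mathrm{FBS} - \left(\langle P_{\mathrm{fcst}}\rangle - \langle P_{\mathrm{obs}}\rangle\right)^2 = \sigma_{\mathrm{fcst}}^2 + \sigma_{\mathrm{obs}}^2 - 2\sigma_{\mathrm{fcst}}\sigma_{\mathrm{obs}}R \qquad (9)$$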

Taylor diagrams (Taylor, 2001) graphically summarize how closely a pattern (or a set of patterns) matches the observations and can be used in FBS analysis, because the bias-corrected FBS has the same form as the law of cosines on which the diagram is built. The similarity between two patterns is quantified in terms of their correlation, their centered root-mean-square difference, and the amplitude of their variations (represented by their standard deviations).
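The link between Eq. (9) and the Taylor diagram can be made concrete with a short sketch using made-up fraction values (not data from the paper). On the diagram, the forecast is plotted at radius σ_fcst and angle arccos R, and the observations at radius σ_obs on the horizontal axis; by the law of cosines, the distance between the two points is the centered root-mean-square difference, whose square equals the bias-corrected FBS.

```python
import math


def mean(xs):
    return sum(xs) / len(xs)


def taylor_point(p_fcst, p_obs):
    """Return (sigma_fcst, sigma_obs, R, centred RMS difference)."""
    mf, mo = mean(p_fcst), mean(p_obs)
    sf = math.sqrt(mean([(p - mf) ** 2 for p in p_fcst]))
    so = math.sqrt(mean([(p - mo) ** 2 for p in p_obs]))
    r = mean([(f - mf) * (o - mo) for f, o in zip(p_fcst, p_obs)]) / (sf * so)
    e = math.sqrt(mean([((f - mf) - (o - mo)) ** 2 for f, o in zip(p_fcst, p_obs)]))
    return sf, so, r, e


p_fcst = [0.0, 0.2, 0.5, 0.9, 0.4]   # made-up neighbourhood fractions
p_obs = [0.1, 0.3, 0.3, 0.7, 0.0]
sf, so, r, e = taylor_point(p_fcst, p_obs)

# Forecast point on the diagram: radius sf at angle arccos(R).
# Observation reference point: (so, 0). Clamp R against rounding.
theta = math.acos(max(-1.0, min(1.0, r)))
x, y = sf * math.cos(theta), sf * math.sin(theta)
distance = math.hypot(x - so, y)

# Bias-corrected FBS, Eq. (9): FBS minus the squared mean difference.
fbs = mean([(f - o) ** 2 for f, o in zip(p_fcst, p_obs)])
fbs_corrected = fbs - (mean(p_fcst) - mean(p_obs)) ** 2

print(math.isclose(distance, e), math.isclose(e ** 2, fbs_corrected))  # True True
```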

Figure 6 shows the pattern statistics of the two-month averaged precipitation skill for the four thresholds. Up to the 12-h forecast, all thresholds showed a similar trend: the FBS gradually decreased with forecast time while the correlation remained about the same. Over the last 12 h of the forecast, there was essentially no change in the forecast dispersion or correlation, and the statistics remained at a relatively low level, indicating that the model forecast was relatively stable.


| Figure 6 Pattern statistics describing the forecast skill of four precipitation thresholds: (a) 0.1, (b) 1.0, (c) 3.0, and (d) 5.0 mm h–1 from the 7–24-h forecasts in July and August 2016. |

We selected an example with good skill (8 h) in the early forecast period and a stable example (24 h) in the later forecast period to compare the FSS with the traditional categorical score. Table 1 gives the mean FSS and TS (threat score) for the 8- and 24-h forecasts at different precipitation thresholds. The spatial verification method clearly shows higher skill than the traditional skill score. The TS stays below 0.1 at thresholds of 3.0 mm h–1 and above, whereas the FSS is about 0.3 at 5 mm h–1. In other words, the traditional skill score has difficulty discriminating forecast skill at higher precipitation thresholds.

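Table 1 Mean FSS and TS for the 8- and 24-h forecasts at different precipitation thresholds

| Precipitation threshold (mm h–1) | FSS (8 h) | TS (8 h) | FSS (24 h) | TS (24 h) |
| --- | --- | --- | --- | --- |
| 0.1 | 0.55 | 0.18 | 0.54 | 0.17 |
| 1.0 | 0.57 | 0.13 | 0.49 | 0.13 |
| 3.0 | 0.44 | 0.07 | 0.37 | 0.07 |
| 5.0 | 0.35 | 0.05 | 0.29 | 0.05 |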

A higher mean skill generally means higher daily skill values in the time series, which makes day-to-day differences in precipitation forecast quality easier to distinguish. Figure 7 shows time series of the FSS and TS for two forecast lead times (8 and 24 h) to further examine the difference between the spatial verification method and the traditional categorical technique. The overall skill of the TS is lower than that of the FSS. The traditional scores vary through the time series for the smaller precipitation thresholds (< 3 mm h–1); however, for relatively heavy precipitation (5 mm h–1), the TS stays low (close to or at zero) and cannot reflect differences between forecast times. This makes it impossible to assess effectively how forecast performance differs between precipitation periods. The reason is that the traditional skill score is based on point-to-point verification. Low-threshold precipitation mainly represents widespread precipitation and still shows some skill at high spatial and temporal resolutions. High-threshold precipitation is increasingly representative of localized (point) rainfall, so the traditional skill score has difficulty matching forecast and observed events; false alarms and missed events dominate, resulting in a low hit rate.


| Figure 7 Temporal evolutions of (a, c) FSS and (b, d) TS for (a, b) 8- and (c, d) 24-h forecast hourly rainfall with different thresholds (colored lines) during July and August 2016. |

The FSS time series varied markedly, even during periods of poor simulation (TS close to 0), so the FSS is useful for distinguishing even slight differences in model performance. The FSS is therefore better than traditional skill scores (TS and others) at identifying precipitation forecast skill, especially for heavy rainfall. The statistics for different precipitation thresholds also show that the FSS evolves consistently across thresholds, with the score decreasing as the threshold increases.
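Why the FSS can still separate forecasts when the TS is zero can be seen with a hypothetical example (not from the paper): a single heavy-rain grid box (8 mm, above a 5 mm h–1 threshold) is forecast either 2 or 8 grid boxes east of where it was observed on a 15 × 15 grid. Both forecasts get TS = 0, but with a 5 × 5 neighborhood the near miss earns credit while the far miss does not. The sketch assumes odd-width windows centered on each grid box, with points outside the domain treated as containing no event.

```python
# Hypothetical near-miss vs far-miss example for a heavy-rain threshold.

def window_counts(field, threshold, n):
    """Number of events (value >= threshold) in the n x n window (n odd)
    centred on each grid box; points outside the domain count as no event."""
    ny, nx, half = len(field), len(field[0]), n // 2
    return [
        sum(
            1
            for jj in range(j - half, j + half + 1)
            for ii in range(i - half, i + half + 1)
            if 0 <= jj < ny and 0 <= ii < nx and field[jj][ii] >= threshold
        )
        for j in range(ny)
        for i in range(nx)
    ]


def fss(fcst, obs, threshold, n):
    """FSS from window counts: dividing every count by n*n (to get fractions)
    and every sum by the number of grid boxes (to get means) scales the FBS
    and its worst-case value equally, so both factors cancel in the ratio."""
    cf, co = window_counts(fcst, threshold, n), window_counts(obs, threshold, n)
    fbs = sum((f - o) ** 2 for f, o in zip(cf, co))
    worst = sum(f * f for f in cf) + sum(o * o for o in co)
    return 1.0 - fbs / worst if worst else float("nan")


def threat_score(fcst, obs, threshold):
    pairs = [(f >= threshold, o >= threshold)
             for f_row, o_row in zip(fcst, obs) for f, o in zip(f_row, o_row)]
    hits = sum(f and o for f, o in pairs)
    events = sum(f or o for f, o in pairs)   # hits + misses + false alarms
    return hits / events if events else float("nan")


def single_point(j, i, size=15, value=8.0):
    """Hypothetical field with one rainy grid box at row j, column i."""
    return [[value if (jj, ii) == (j, i) else 0.0 for ii in range(size)]
            for jj in range(size)]


obs = single_point(7, 4)
near, far = single_point(7, 6), single_point(7, 12)
for name, fc in (("near", near), ("far", far)):
    print(name, threat_score(fc, obs, 5.0), round(fss(fc, obs, 5.0, 5), 3))
# near 0.0 0.6
# far 0.0 0.0
```

Working with integer counts rather than fractions is only a convenience here; it gives the same FSS as Eqs. (1) and (2) while avoiding rounding noise in the ratio.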

Figure 8 shows scatter plots of the FSS against the TS for the 8- and 24-h hourly precipitation forecasts and summarizes the difference in performance between the two assessment methods. At moderate precipitation thresholds, the points are tightly clustered, indicating that the two methods assess the forecasts fairly consistently. As the precipitation threshold increases, the scatter increases markedly, especially for heavy rainfall. The traditional technique describes precipitation of this magnitude so poorly that it can hardly reflect differences between daily forecasts and thus cannot provide an effective statistical assessment. In contrast, the FSS spatial verification method readily provides an effective statistical analysis of the overall performance of daily precipitation forecasts, even at the heavy-rainfall threshold.


| Figure 8 Scatter distributions of FSS and TS for (a) 8- and (b) 24-h forecast hourly rainfall with different thresholds (colored dots) during July and August 2016. |

The traditional categorical precipitation skill score emphasizes point-to-point statistical assessment and suffers from the “double-penalty” problem caused by slight spatial and temporal displacements of precipitation in high-resolution models; it therefore cannot objectively reflect the actual ability of a model to forecast precipitation. Spatial verification methods are a current focus of international research and can overcome this key limitation of the traditional skill score. The FSS neighborhood spatial technique examines forecast skill at different spatial scales to determine a useful scale. Rather than relying on point-to-point statistics to determine forecast skill, it compares the fractions of precipitation events within a given scale to measure the error between the forecast and observed events.

We used hourly forecast products from the GRAPES regional model and quantitative precipitation estimation products from the National Meteorological Information Center of China for July and August 2016 to study the performance of the FSS in verifying hourly precipitation. We first determined a useful scale in the FSS analysis and then assessed the similarities and differences between the FSS spatial technique and the traditional skill score at this scale. The results were as follows.

(1) The precipitation forecast in the regional model performs poorly during the model spin-up period, but the assessment is stable and consistent after the first 6 h.

(2) The role of the systematic error is insignificant with respect to the MSE of the fraction (FBS) and can be ignored. The dispersion of the observations has a diurnal cycle and the standard deviation of the forecast is similar to the pattern of the reference maximum value of the FBS.

(3) The FSS maintains a high degree of consistency with the correlation coefficient between the forecasted and observed fractions. The FSS can be used as a characteristic function to express the correlation, in a similar manner to the Murphy skill score.

(4) The FSS has clear advantages in the assessment of precipitation, especially for heavy rainfall forecasts, and can effectively distinguish differences in precipitation time series. Under high precipitation thresholds, the traditional skill score discriminates between forecasts much less than the FSS does.

Research on spatial techniques for assessing precipitation forecasts is still at a preliminary stage. Many aspects need further exploration, such as how the FSS depends on the neighborhood scale. If the scale is too small to satisfy the useful-scale criterion for the FSS, poor skill may make it difficult to obtain effective assessment information; if the scale is too large, it may mislead the evaluation of different precipitation systems. Choosing an effective analysis scale is therefore the first consideration. In this work, we analyzed a period (July and August) with frequent rainfall; further work is planned to determine the skill score for a period or season with less precipitation.

| Ahijevych, D., E. Gilleland, B. G. Brown, et al., 2009: Application of spatial verification methods to idealized and NWP-gridded precipitation forecasts. Wea. Forecasting, 24, 1485–1497. DOI:10.1175/2009WAF2222298.1 |

| Atger, F., 2001: Verification of intense precipitation forecasts from single models and ensemble prediction systems. Nonlin. Proc. Geophys., 8, 401–417. DOI:10.5194/npg-8-401-2001 |

| Baldwin, M. E., and J. S. Kain, 2006: Sensitivity of several performance measures to displacement error, bias, and event frequency. Wea. Forecasting, 21, 636–648. DOI:10.1175/WAF933.1 |

| Brill, K. F., and F. Mesinger, 2009: Applying a general analytic method for assessing bias sensitivity to bias-adjusted threat and equitable threat scores. Wea. Forecasting, 24, 1748–1754. DOI:10.1175/2009WAF2222272.1 |

| Casati, B., 2010: New developments of the intensity-scale technique within the Spatial Verification Methods Intercomparison Project. Wea. Forecasting, 25, 113–143. DOI:10.1175/2009WAF2222257.1 |

| Casati, B., G. Ross, and D. B. Stephenson, 2004: A new intensity-scale approach for the verification of spatial precipitation forecasts. Meteor. Appl., 11, 141–154. DOI:10.1017/S1350482704001239 |

| Casati, B., L. J. Wilson, D. B. Stephenson, et al., 2008: Forecast verification: Current status and future directions. Meteor. Appl., 15, 3–18. |

| Chen, J., Y. Wang, L. Li, et al., 2013: A unified verification system for operational models from Regional Meteorological Centres of China Meteorological Administration. Meteor. Appl., 20, 140–149. DOI:10.1002/met.1406 |

| Davis, C. A., B. G. Brown, R. Bullock, et al., 2009: The method for Object-Based Diagnostic Evaluation (MODE) applied to numerical forecasts from the 2005 NSSL/SPC Spring Program. Wea. Forecasting, 24, 1252–1267. DOI:10.1175/2009WAF2222241.1 |

| Ebert, E. E., 2008: Fuzzy verification of high-resolution gridded forecasts: A review and proposed framework. Meteor. Appl., 15, 51–64. |

| Ebert, E. E., 2009: Neighborhood verification: A strategy for rewarding close forecasts. Wea. Forecasting, 24, 1498–1510. DOI:10.1175/2009WAF2222251.1 |

| Ebert, E. E., and W. A. Gallus Jr., 2009: Toward better understanding of the contiguous rain area (CRA) method for spatial forecast verification. Wea. Forecasting, 24, 1401–1415. DOI:10.1175/2009WAF2222252.1 |

| Gilleland, E., 2016: A new characterization in the spatial verification framework for false alarms, misses, and overall patterns. Wea. Forecasting, 32, 187–198. DOI:10.1175/WAF-D-16-0134.1 |

| Lorenz, E. N., 1969: Atmospheric predictability as revealed by naturally occurring analogues. J. Atmos. Sci., 26, 636–646. DOI:10.1175/1520-0469(1969)26<636:APARBN>2.0.CO;2 |

| Mass, C. F., D. Ovens, K. Westrick, et al., 2002: Does increasing horizontal resolution produce more skillful forecasts? Bull. Amer. Meteor. Soc., 83, 407–430. DOI:10.1175/1520-0477(2002)083<0407:DIHRPM>2.3.CO;2 |

| Mittermaier, M., and N. Roberts, 2010: Intercomparison of spatial forecast verification methods: Identifying skillful spatial scales using the fractions skill score. Wea. Forecasting, 25, 343–354. DOI:10.1175/2009WAF2222260.1 |

| Murphy, A. H., 1971: A note on the ranked probability score. J. Appl. Meteor., 10, 155–156. DOI:10.1175/1520-0450(1971)010<0155:ANOTRP>2.0.CO;2 |

| Murphy, A. H., 1993: What is a good forecast? An essay on the nature of goodness in weather forecasting. Wea. Forecasting, 8, 281–293. DOI:10.1175/1520-0434(1993)008<0281:WIAGFA>2.0.CO;2 |

| Roberts, N., 2008: Assessing the spatial and temporal variation in the skill of precipitation forecasts from an NWP model. Meteor. Appl., 15, 163–169. |

| Roberts, N., and H. W. Lean, 2008: Scale-selective verification of rainfall accumulations from high-resolution forecasts of convective events. Mon. Wea. Rev., 136, 78–97. DOI:10.1175/2007MWR2123.1 |

| Taylor, K. E., 2001: Summarizing multiple aspects of model performance in a single diagram. J. Geophys. Res., 106, 7183–7192. DOI:10.1029/2000JD900719 |

| Weisman, M. L., C. Davis, W. Wang, et al., 2008: Experiences with 0-36-h explicit convective forecasts with the WRF-ARW model. Wea. Forecasting, 23, 407–437. DOI:10.1175/2007WAF2007005.1 |

| Yates, E., S. Anquetin, V. Ducrocq, et al., 2006: Point and areal validation of forecast precipitation fields. Meteor. Appl., 13, 1–20. |

| Zepeda-Arce, J., E. Foufoula-Georgiou, and K. K. Droegemeier, 2000: Space-time rainfall organization and its role in validating quantitative precipitation forecasts. J. Geophys. Res., 105, 10129–10146. DOI:10.1029/1999JD901087 |

| Zhao, B., and B. Zhang, 2017: Application of neighborhood spatial verification method on precipitation evaluation. Torrential Rain and Disasters, 36, 497–504. DOI:10.3969/j.issn.1004-045.2017.06.004 |

2018, Vol. 32
