Active Online Learning in the Binary Perceptron Problem

Supported by the National Natural Science Foundation of China under Grant Nos. 11421063 and 11747601 and the Chinese Academy of Sciences under Grant No. QYZDJ-SSW-SYS018.

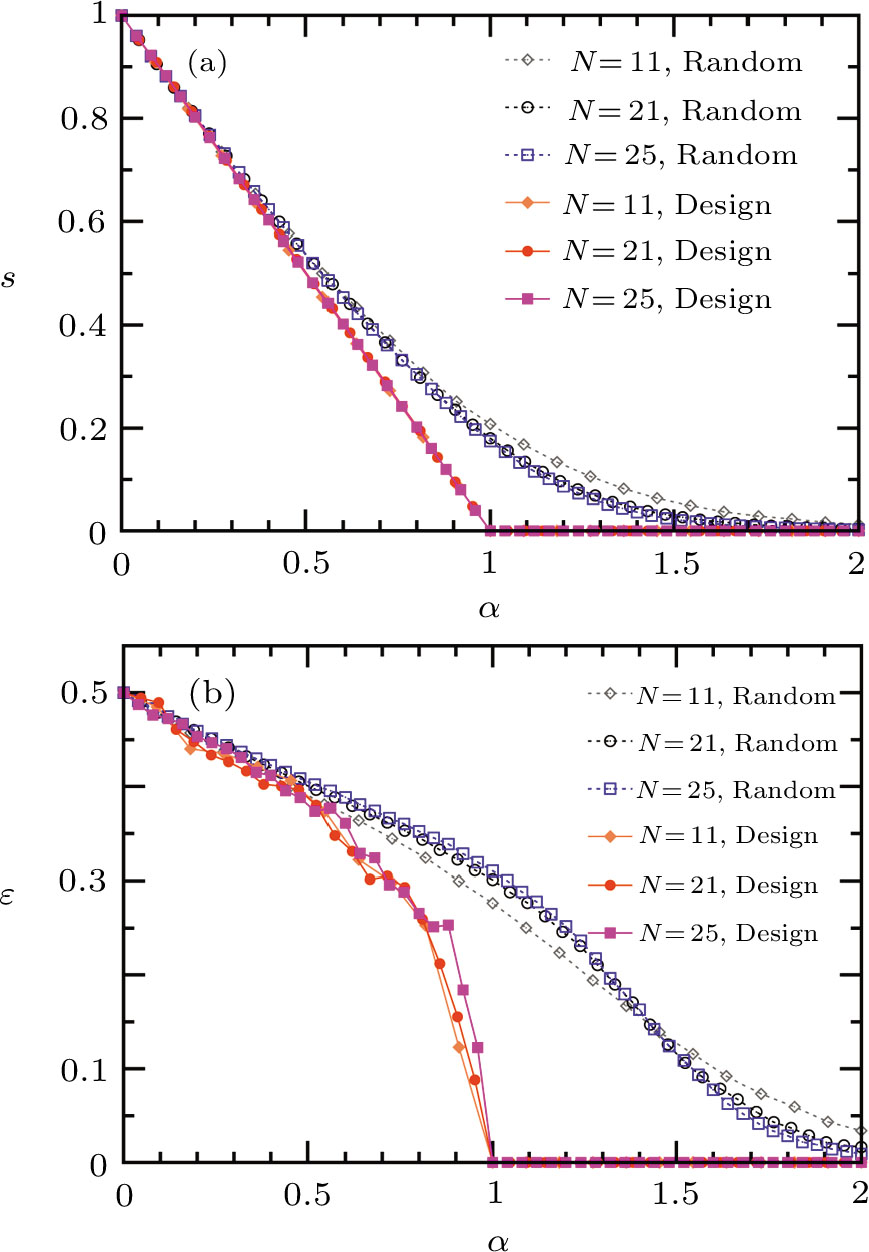

The active learning strategy (6) outperforms passive learning on small Ising perceptrons: (a) entropy density s (in bits); (b) generalization error ε. Training patterns are added one at a time, and α = P/N is the instantaneous pattern density, where N is the number of input neurons and P is the number of training patterns added so far. Each data point is an average over 1,000 independent runs of the passive (Random) or the active (Design) learning algorithm.
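To make the plotted quantities concrete, the following is a minimal sketch of how a single passive (Random) run could be simulated for a small system by exhaustive enumeration. It rests on several assumptions that are not stated in this caption: a teacher-student setup in which labels come from a hidden ±1 weight vector, an entropy density defined as log2 of the number of weight vectors consistent with all patterns seen so far divided by N, and a generalization error averaged uniformly over those consistent weight vectors. The function name `passive_learning_curve` and its parameters are hypothetical, and the active strategy (6) is not implemented here.

```python
import itertools

import numpy as np


def passive_learning_curve(n=9, p_max=18, seed=0):
    """Return a list of (alpha, s, eps) after each random training pattern.

    Assumed setup (illustrative only): a hidden teacher labels uniformly
    random +/-1 patterns, and the student keeps every +/-1 weight vector
    that reproduces all labels seen so far.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so that w . xi is never zero")
    rng = np.random.default_rng(seed)

    # All 2^n vectors in {-1, +1}^n; used both as weights and as patterns.
    configs = np.array(list(itertools.product((-1, 1), repeat=n)))
    # outputs[w, x] = sign(w . x) for every weight vector and every pattern.
    outputs = np.sign(configs @ configs.T)

    teacher = rng.integers(len(configs))
    # Exact error of each candidate student: fraction of all 2^n patterns
    # on which it disagrees with the teacher.
    gen_error = np.mean(outputs != outputs[teacher], axis=1)

    alive = np.ones(len(configs), dtype=bool)  # weights still consistent
    curve = []
    for p in range(1, p_max + 1):
        x = rng.integers(len(configs))  # passive mode: pattern drawn at random
        alive &= outputs[:, x] == outputs[teacher, x]
        s = np.log2(alive.sum()) / n    # entropy density in bits
        eps = gen_error[alive].mean()   # average over consistent students
        curve.append((p / n, s, eps))
    return curve


if __name__ == "__main__":
    for alpha, s, eps in passive_learning_curve():
        print(f"alpha={alpha:.3f}  s={s:.3f}  eps={eps:.3f}")
```

Averaging such curves over many seeds would give the kind of data points described for the Random algorithm. Enumeration costs on the order of 4^N memory for the output table, so this sketch is only practical for small N.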