As a powerful tool for ocean exploration and the development of subsea infrastructure, the autonomous underwater vehicle (AUV) plays an increasingly important role in military and scientific research. However, because of its limited endurance, an AUV must be recharged and relaunched frequently, and the complex and hostile underwater environment makes this process difficult. Reliable homing and docking technology is therefore crucial if AUVs are to complete their missions.

To determine its position and attitude relative to the docking station (DS), an AUV can be equipped with various sensors, e.g., acoustic, electromagnetic, and optical. Compared with the other technologies, optical guidance offers good directional accuracy, low vulnerability to external detection, and a diverse range of uses (Deltheil et al., 2002). Cowen et al. (1997) reported that an optical docking system achieved a targeting accuracy on the order of 1 cm in real-life conditions, even in turbid bay waters. However, the operating distance of optical guidance is limited by the attenuation of light in water. Yu et al. (2001) proposed an AUV navigation method based on artificial underwater landmarks (AULs) that are recognized by a vision system installed in the AUV. Lee et al. (2003) presented a docking system that enables AUVs to dock at an underwater station with the aid of a camera mounted at the center of the AUV nose. Park et al. (2009) proposed a docking method based on image processing for cruising-type AUVs, and Kondo et al. (2012) proposed a similar method for hovering-type AUVs. Maki et al. (2013) proposed a docking method for hovering-type AUVs based on both acoustic and visual positioning, and Yahya and Arshad (2015) proposed a tracking method based on colored markers placed in a cluttered environment. Vallicrosa et al. (2016) developed a light beacon navigation system for estimating the position of the DS with respect to the AUV.

All of the above-mentioned optical guidance systems for AUV homing and docking have shown that optical guidance is highly feasible and precise, especially at short distances. However, underwater imaging at extended ranges is far more challenging than imaging over comparable distances above the ocean surface. Even in the clearest ocean waters, the visibility range is at best on the order of 10 m owing to the absorption and scattering of light by particles of various origins, including algal cells, detritus, sediments, plankton, and bubbles (Hou, 2009). Therefore, extending the operating range while maintaining precision is the main technological challenge for AUV optical guidance. As an information carrier, polarization characteristics such as the degree of polarization (DOP) are more robust than light intensity (Xu et al., 2015). More than 70 underwater species are polarization-sensitive (Horváth and Varjú, 2004), and some of them navigate, hunt, camouflage themselves, and communicate using polarization vision (Shashar et al., 2000; Waterman, 2006; Cartron et al., 2013). Polarization has been found to increase the contrast and detection range in scattering media both above and under water (Schechner et al., 2003; Schechner and Karpel, 2005). In addition, bionic polarization navigation technology has potential applications under water (Cheng et al., 2020).

In this paper, we propose a real-time method for AUV homing and docking that determines the position and attitude of the AUV relative to polarized AULs on the DS, which are recognized by a vision system installed in the AUV. We first introduce the theory of polarization imaging and the concept of polarized optical guidance. We then acquire intensity and DOP images in air (the atmospheric experiment) and simulate the corresponding underwater intensity and DOP images at various distances. The resulting pixel-recognition errors are fed into the pose model to calculate the pose errors. Underwater experiments are conducted to verify the practicability of the proposed method, and the results indicate that polarized optical guidance extends the operating distance while maintaining the precision of traditional optical guidance. This biologically inspired mechanism provides a novel approach to AUV homing and docking that enables AUVs to operate at much greater distances.

2 Methods

2.1 Theory of Polarization Imaging

Conventional vision systems recognize the intensity of AULs, whereas the proposed method recognizes the DOP of polarized AULs. The DOP is the ratio of the intensity of the polarized component of the light to its total intensity, and it ranges from 0 (completely unpolarized light) to 100% (completely polarized light). To obtain a DOP image, the DOP is calculated for every pixel of the image. A polarized light beam is described by the Stokes vector S, whose four components contain all information about its polarization state:

| $ S={[I, Q, U, V]}^{\rm{T}}, $ | (1) |

where I is the total intensity of the light; Q is the difference between the intensities of the components linearly polarized parallel and perpendicular to a reference plane; U is the corresponding difference for linear polarization at +45° and −45° to the reference plane; and V is the difference between the right- and left-handed circularly polarized components. Only linear polarization is used to form the image. When incident light with polarization state S = [I, Q, U, V]ᵀ passes through a linear polarizing film, its polarization state changes according to the Mueller matrix of the film:

| $ \left[\begin{array}{c}{I}^{\prime }\\ {Q}^{\prime }\\ {U}^{\prime }\\ {V}^{\prime }\end{array}\right]=\frac{1}{2}\left[\begin{array}{cccc}1& \mathrm{cos}2\psi & \mathrm{sin}2\psi & 0\\ \mathrm{cos}2\psi & {\mathrm{cos}}^{2}2\psi & \mathrm{cos}2\psi \mathrm{sin}2\psi & 0\\ \mathrm{sin}2\psi & \mathrm{cos}2\psi \mathrm{sin}2\psi & {\mathrm{sin}}^{2}2\psi & 0\\ 0& 0& 0& 0\end{array}\right]\times \left[\begin{array}{c}I\\ Q\\ U\\ V\end{array}\right], $ | (2) |

where ψ is the angle between the transmission axis of the polarizing film and the zero reference direction, and S' = [I', Q', U', V']ᵀ is the polarization state of the emergent light. Because the camera measures only intensity, only the first row of the Mueller matrix is needed:

| $ {I}^{\prime }(\psi)=\frac{1}{2}(I+Q\mathrm{cos}2\psi +U\mathrm{sin}2\psi).$ | (3) |

Therefore, if the intensities of the emergent light are measured at three different values of ψ, the I, Q, and U components of the incident beam can be calculated. Setting ψ to 0°, 45°, and 90° gives the following set of equations:

| $ \left\{ \begin{array}{l}{I}^{\prime }({0}^{°})=(I+Q)/2\\ {I}^{\prime }({45}^{°})=(I+U)/2\\ {I}^{\prime }({90}^{°})=(I-Q)/2\end{array} \right. .$ | (4) |

The equation set is then transformed as follows:

| $ \left\{ \begin{array}{l} I={I}^{\prime }({0}^{°})+{I}^{\prime }({90}^{°})\\ Q={I}^{\prime }({0}^{°})-{I}^{\prime }({90}^{°})\\ U=2{I}^{\prime }({45}^{°})-{I}^{\prime }({0}^{°})-{I}^{\prime }({90}^{°})\end{array} \right. .$ | (5) |

Then, the linear DOP is calculated and a DOP image is obtained:

| $ P=\frac{\sqrt{{Q}^{2}+{U}^{2}}}{I}.$ | (6) |
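As an illustration of Eqs. (3), (5), and (6), the Python/NumPy sketch below simulates the three polarizer images of a small synthetic scene with Eq. (3), recovers I, Q, and U with Eq. (5), and computes the per-pixel linear DOP with Eq. (6). It assumes an ideal polarizer and noise-free images; the function names and the small epsilon that guards against division by zero are illustrative choices, not part of the original method.

```python
import numpy as np

def polarizer_intensity(I, Q, U, psi_deg):
    """Eq. (3): intensity behind an ideal linear polarizer at angle psi (degrees)."""
    psi = np.deg2rad(psi_deg)
    return 0.5 * (I + Q * np.cos(2 * psi) + U * np.sin(2 * psi))

def stokes_from_three(i0, i45, i90):
    """Eq. (5): recover I, Q, U from images taken at 0, 45 and 90 degrees."""
    I = i0 + i90
    Q = i0 - i90
    U = 2 * i45 - i0 - i90
    return I, Q, U

def dop(I, Q, U, eps=1e-12):
    """Eq. (6): linear degree of polarization, guarded against I == 0."""
    return np.sqrt(Q**2 + U**2) / np.maximum(I, eps)

# Synthetic 2x2 scene: unpolarized background plus one 60 % polarized pixel.
I_true = np.array([[1.0, 1.0], [1.0, 2.0]])
Q_true = np.array([[0.0, 0.0], [0.0, 1.2]])   # pixel (1, 1): Q = 0.6 * I
U_true = np.zeros((2, 2))

images = [polarizer_intensity(I_true, Q_true, U_true, a) for a in (0, 45, 90)]
I, Q, U = stokes_from_three(*images)
P = dop(I, Q, U)   # 0 for the background, 0.6 for the polarized pixel
print(np.round(P, 3))
```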

The precision of the homing and docking process depends on the image quality. To better evaluate the image quality, we introduce the parameter K:

| $ K=\frac{R_{\rm t}-R_{\rm b}}{R_{\rm b}}, $ | (7) |

where Rt is the intensity or DOP of the target and Rb is the intensity or DOP of the background in the image.
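A minimal sketch of Eq. (7) follows. It assumes that Rt and Rb are taken as the mean pixel values inside and outside a target mask, because the text does not specify how they are measured. The synthetic numbers are illustrative only and are not the values in Tables 1 and 2: a target 20% brighter than its surroundings gives K ≈ 0.2 in intensity, whereas a target with DOP 0.6 on a 0.05 background gives K ≈ 11.

```python
import numpy as np

def image_quality_k(img, target_mask):
    """Eq. (7): K = (R_t - R_b) / R_b, with R_t and R_b taken as mean pixel values."""
    img = np.asarray(img, dtype=float)
    r_t = img[target_mask].mean()    # target: pixels inside the mask
    r_b = img[~target_mask].mean()   # background: all remaining pixels
    return (r_t - r_b) / r_b

# Illustrative 5x5 images with a single-pixel target at the centre.
mask = np.zeros((5, 5), dtype=bool)
mask[2, 2] = True
intensity = np.full((5, 5), 1.0)
intensity[2, 2] = 1.2
dop_img = np.full((5, 5), 0.05)
dop_img[2, 2] = 0.6

k_intensity = image_quality_k(intensity, mask)
k_dop = image_quality_k(dop_img, mask)
print(k_intensity, k_dop)
```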

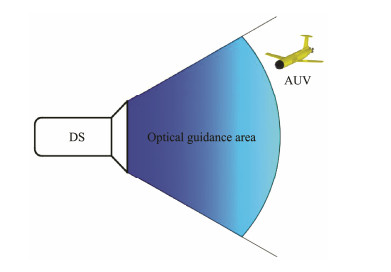

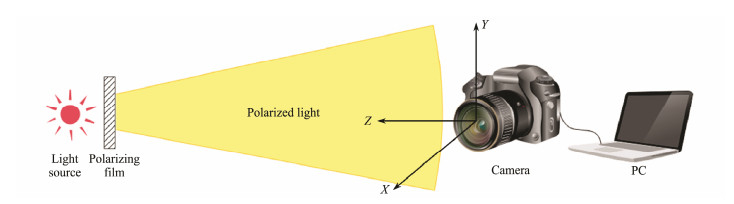

2.2 Polarized Optical Guidance

Polarization imaging increases the contrast of target images and reduces both illumination effects and backscattering. It is therefore an ideal technique for underwater target detection, because it is simple to implement, requires little power, and can be used in real-time applications (Dubreuil et al., 2013). In addition, there is no daylight in the deep sea, so point-like bioluminescence is the only light source (Warrant and Locket, 2004). To survive in this dark environment, marine creatures have evolved polarization sensitivity, which they use for navigation, predation, disguise, and communication, much as humans use intensity information. Inspired by these creatures, we design a method for determining the position and attitude of the AUV based on polarized AULs that are recognized by a camera installed in the AUV, as shown in Fig. 1. A light source with a wavelength of 532 nm is employed because light at this wavelength propagates farther in seawater. We use a conventional light source rather than a laser: although laser light propagates farther, it is highly directional, which makes it difficult for the AUV to detect.

Fig. 1 Schematic of optical guidance.

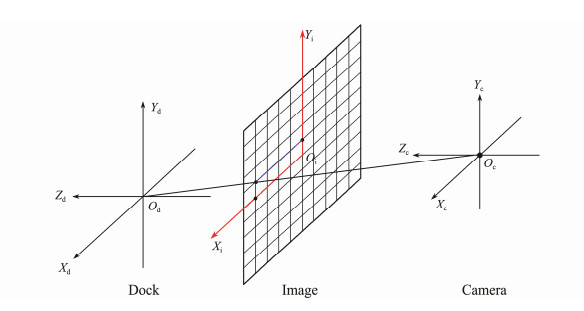

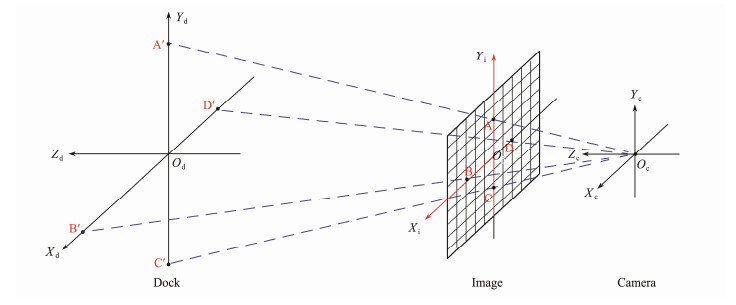

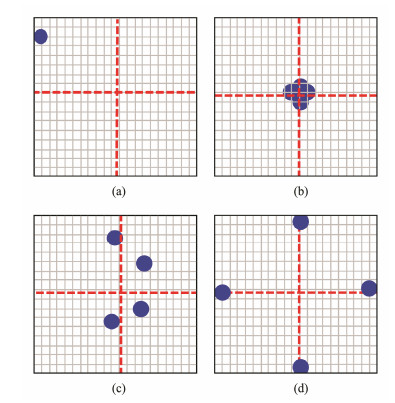

The proposed system consists of a camera and four AULs, which are polarized light beacons mounted on the DS. Because the light sources are polarized, their DOP is greater than that of the background, so the pixel center of each AUL can be identified by finding the point of maximum DOP in the image, which gives its pixel coordinates. Figs. 2 and 3 show the DS, the image, and the camera coordinate system at long and short distances, respectively, and Fig. 4 shows the positions of the four light beacons in the image during the docking process. When the AUV is far from the DS, the four light beacons appear as a single spot at the edge of the AUV vision range, as shown in Figs. 2 and 4(a). In this case, the rotation angles θ and φ about the X- and Y-axes, respectively, can be calculated with Eq. (8):

| $ \left\{ \begin{array}{l}\theta =\mathrm{arctan}({y}_{0}/f)\\ \phi =\mathrm{arctan}({x}_{0}/f)\end{array} \right., $ | (8) |

Fig. 2 Coordinate system of polarized optical guidance at a large distance.

Fig. 3 Coordinate system of polarized optical guidance at a close distance.

Fig. 4 Positions of the four light beacons in the image during the docking process. (a), (b), (c), and (d) represent different stages of the docking process.

where x0 and y0 are the horizontal and vertical coordinates of the beacon in the camera coordinate system, calculated from its image (pixel) coordinates and the physical pixel size of the camera; the pixel coordinates of the light beacons are obtained by image processing; and f is the focal length of the camera.
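The detection step and Eq. (8) can be sketched as follows. The sketch assumes an undistorted pinhole camera with the principal point at the image center, rows increasing downward, and the camera parameters given in Section 2.3. The greedy peak picker (take the maximum-DOP pixel, blank a window around it, repeat) and its `radius` parameter are hypothetical stand-ins for the unspecified image-processing step. At long range, where the beacons merge into one spot, it would be called with `n=1`.

```python
import numpy as np

PIXEL_MM = 3.45e-3          # physical pixel size (3.45 um), Section 2.3
FOCAL_MM = 10.5             # lens focal length, Section 2.3
WIDTH, HEIGHT = 2448, 2048  # image size in pixels, Section 2.3

def find_beacons(dop_img, n=4, radius=5):
    """Hypothetical greedy peak picker: take the max-DOP pixel, blank a window, repeat."""
    img = np.asarray(dop_img, dtype=float).copy()
    peaks = []
    for _ in range(n):
        r, c = np.unravel_index(np.argmax(img), img.shape)
        r, c = int(r), int(c)
        peaks.append((r, c))
        # Suppress the neighbourhood so the next argmax finds a different beacon.
        img[max(r - radius, 0):r + radius + 1, max(c - radius, 0):c + radius + 1] = -np.inf
    return peaks

def pixel_to_angles(row, col):
    """Eq. (8): pixel -> camera-plane coordinates (mm) -> angles in degrees.

    Assumes the principal point is at the image centre and no lens distortion.
    """
    x0 = (col - (WIDTH - 1) / 2) * PIXEL_MM    # horizontal, mm
    y0 = (row - (HEIGHT - 1) / 2) * PIXEL_MM   # vertical (rows grow downward), mm
    theta = np.degrees(np.arctan(y0 / FOCAL_MM))   # rotation about the X-axis
    phi = np.degrees(np.arctan(x0 / FOCAL_MM))     # rotation about the Y-axis
    return theta, phi

# Far range, beacons merged into one spot:
# theta, phi = pixel_to_angles(*find_beacons(dop_image, n=1)[0])
```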

At long range, the AUV approaches the DS using these two angles alone. As the AUV nears the DS, the four polarized light beacons appear separately in the AUV vision system, as shown in Figs. 3, 4(b), and 4(c). Safe and accurate homing and docking depend on the precise determination of the position and attitude of the AUV relative to the DS. In computer vision, this is the perspective-n-point (PNP) problem: determining the position and attitude of the camera from the correspondence between the positions of n known points (here, the polarized AULs) in the image and in the real world. Fischler and Bolles (1987) showed that at least four coplanar feature points are required to obtain a unique solution. For three of the points, the problem reduces to a system of three quadratic equations in three unknowns:

| $ \left\{ \begin{array}{l}{x}^{2}+{y}^{2}-2xy\mathrm{cos}\alpha ={c}^{2}\\ {x}^{2}+{z}^{2}-2xz\mathrm{cos}\beta ={b}^{2}\\ {y}^{2}+{z}^{2}-2yz\mathrm{cos}\gamma ={a}^{2}\end{array} \right. , $ | (9) |

where α, β, and γ are the angles between OcA' and OcB', between OcA' and OcC', and between OcB' and OcC', respectively, and a, b, and c are the distances B'C', A'C', and A'B', which are known beforehand. Solving Eq. (9) yields x, y, and z, the lengths of A'Oc, B'Oc, and C'Oc. However, the system has multiple solutions, so a fourth point is needed to select the unique one. Combining these lengths with the coordinates of the image points A, B, and C in the camera coordinate system gives the coordinates of A', B', and C' in the camera coordinate system. Finally, a coordinate transformation matrix is used to solve for the six position and attitude parameters of the camera relative to the AULs:

| $ \left[\begin{array}{l}X\\ Y\\ Z\\ 1\end{array}\right]=\left(\begin{array}{cccc}\rm{cos}\phi \rm{cos}\mu & \rm{cos}\phi \rm{sin}\mu & -\rm{sin}\phi & {T}_{X}\\ \rm{cos}\mu \rm{sin}\theta \rm{sin}\phi -\rm{cos}\theta \rm{sin}\mu & \rm{cos}\theta \rm{cos}\mu \rm{+sin}\theta \rm{sin}\phi \rm{sin}\mu & \rm{cos}\phi \rm{sin}\theta & {T}_{Y}\\ \rm{sin}\theta \rm{sin}\mu \rm{+cos}\theta \rm{cos}\mu \rm{sin}\phi & \rm{cos}\theta \rm{sin}\phi \rm{sin}\mu -\rm{cos}\mu \rm{sin}\theta & \rm{cos}\theta \rm{cos}\phi & {T}_{Z}\\ 0& 0& 0& 1\end{array}\right)\left[\begin{array}{l}{X}^{\prime }\\ {Y}^{\prime }\\ {Z}^{\prime }\\ 1\end{array}\right], $ | (10) |

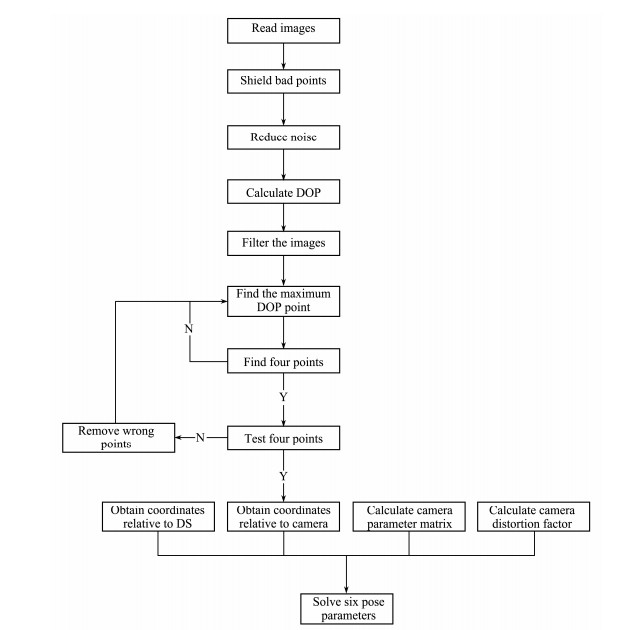

where TX, TY, and TZ are the position of the camera relative to the AULs and θ, φ, and µ are its attitude angles; (X, Y, Z) and (X', Y', Z') are the coordinates of the AULs in the DS coordinate system and in the camera coordinate system, respectively. Fig. 5 shows the complete solution process, for which the total computing time does not exceed 10 ms. When the AUV is very close to the DS, the four light beacons fall outside the image, as shown in Fig. 4(d). At this distance there is no room left to adjust the attitude of the AUV, so it simply maintains its current heading and completes the approach.
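To make Eq. (10) concrete, the sketch below builds the rotation block of Eq. (10) from (θ, φ, µ), then recovers R, T, and the three angles from matched AUL coordinates. This is a swapped-in technique: it uses the closed-form SVD (Kabsch) least-squares fit instead of the authors' solver, which the text describes as iterative. It also assumes that the camera-frame coordinates (X', Y', Z') of the AULs have already been obtained from Eq. (9). The 0.15-m square comes from Section 2.3; the pose values in the usage example are arbitrary.

```python
import numpy as np

def rotation_eq10(theta, phi, mu):
    """Rotation block of Eq. (10); angles in radians."""
    ct, st = np.cos(theta), np.sin(theta)
    cp, sp = np.cos(phi), np.sin(phi)
    cm, sm = np.cos(mu), np.sin(mu)
    return np.array([
        [cp * cm,                cp * sm,                -sp],
        [cm * st * sp - ct * sm, ct * cm + st * sp * sm, cp * st],
        [st * sm + ct * cm * sp, ct * sp * sm - cm * st, ct * cp],
    ])

def fit_rigid_transform(cam_pts, ds_pts):
    """Least-squares R, T with ds = R @ cam + T (Kabsch/SVD method)."""
    cam_c, ds_c = cam_pts.mean(axis=0), ds_pts.mean(axis=0)
    H = (cam_pts - cam_c).T @ (ds_pts - ds_c)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    T = ds_c - R @ cam_c
    return R, T

def angles_from_rotation(R):
    """Invert the Eq. (10) parameterisation (valid for |phi| < 90 degrees)."""
    phi = -np.arcsin(R[0, 2])
    theta = np.arctan2(R[1, 2], R[2, 2])
    mu = np.arctan2(R[0, 1], R[0, 0])
    return theta, phi, mu

# Four coplanar AULs on a 0.15 m square in the DS frame (z = 0).
s = 0.15 / 2
ds_pts = np.array([[-s, -s, 0.0], [s, -s, 0.0], [s, s, 0.0], [-s, s, 0.0]])
R_true = rotation_eq10(np.radians(2.0), np.radians(-3.0), np.radians(5.0))
T_true = np.array([0.10, -0.05, -4.0])
cam_pts = (ds_pts - T_true) @ R_true     # invert ds = R @ cam + T, row by row

R, T = fit_rigid_transform(cam_pts, ds_pts)
print(np.degrees(angles_from_rotation(R)), T)
```

With noise-free inputs the fit returns the pose used to generate the data; with real detections, the SVD fit gives the least-squares rotation and translation over all four AULs.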

Fig. 5 Flow chart of the solution process for the six pose parameters.

The proposed real-time algorithm for polarized optical guidance is based mainly on the PNP problem and is fast because of its low computational complexity. Because it is iterative, the algorithm is robust and precise. It requires no prior information other than the relative positions of the AULs. However, the pose estimate is sensitive to discrepancies between the actual and estimated centers of the AULs.
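This sensitivity to the estimated AUL centers can be illustrated with Eq. (9). The sketch below forms cos α, cos β, and cos γ from the viewing rays through three image points. It assumes a pinhole model with the focal length and pixel size from Section 2.3, with image coordinates in millimeters relative to the principal point. It then evaluates the residuals of Eq. (9). With the true ranges the residuals vanish, but moving one image point by a single pixel already makes them non-zero. The beacon positions are arbitrary illustrative values, and the sketch does not solve Eq. (9) itself.

```python
import numpy as np

FOCAL_MM, PIXEL_MM = 10.5, 3.45e-3   # camera parameters from Section 2.3

def viewing_ray(x_mm, y_mm, f=FOCAL_MM):
    """Unit vector from the optical centre Oc through image point (x, y)."""
    v = np.array([x_mm, y_mm, f])
    return v / np.linalg.norm(v)

def p3p_residuals(dists, rays, sides):
    """Left minus right side of Eq. (9); zero when (x, y, z) are the true ranges."""
    x, y, z = dists
    ra, rb, rc = rays
    cos_a, cos_b, cos_g = ra @ rb, ra @ rc, rb @ rc   # alpha, beta, gamma
    a, b, c = sides                                   # |B'C'|, |A'C'|, |A'B'|
    return np.array([
        x**2 + y**2 - 2 * x * y * cos_a - c**2,
        x**2 + z**2 - 2 * x * z * cos_b - b**2,
        y**2 + z**2 - 2 * y * z * cos_g - a**2,
    ])

# Three AULs (A', B', C') in the camera frame, metres: 0.15 m apart, 5 m ahead.
pts = np.array([[0.0, 0.0, 5.0], [0.15, 0.0, 5.0], [0.0, 0.15, 5.0]])
img_mm = FOCAL_MM * pts[:, :2] / pts[:, 2:3]      # pinhole projection: A, B, C
rays = [viewing_ray(u, v) for u, v in img_mm]
dists = np.linalg.norm(pts, axis=1)               # true x, y, z
A, B, C = pts
sides = (np.linalg.norm(B - C), np.linalg.norm(A - C), np.linalg.norm(A - B))

exact = p3p_residuals(dists, rays, sides)         # zero up to rounding
shifted_rays = [viewing_ray(img_mm[0, 0] + PIXEL_MM, img_mm[0, 1])] + rays[1:]
shifted = p3p_residuals(dists, shifted_rays, sides)  # A detected one pixel off
print(exact, shifted)
```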

2.3 Experiment to Demonstrate the Superiority

To demonstrate the superior performance of the proposed method, we must show that polarized optical guidance extends the operating distance while maintaining the precision of traditional optical guidance. In a field experiment conducted on the roof of a building at night, we used a camera to acquire intensity and DOP images at 1-m intervals for distances below 30 m and at 5-m intervals for distances beyond 30 m. The exposure time was kept constant at 50 ms for every acquisition. The camera had 2448 × 2048 pixels with a physical pixel size of 3.45 μm, and the lens focal length was 10.5 mm. The AULs consisted of four LEDs with linear polarizing films; the power of each AUL was 5 mW, and the docking target was a square with 0.15-m sides. In the experiment, the camera represented the AUV and the AULs represented the DS. Fig. 6 shows a sketch of the optics: the unpolarized light from each source was polarized by the polarizing film, the intensity data were captured by the camera, and the polarization was calculated on a PC. Light pollution in the experimental environment was negligible.
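A quick pinhole calculation with these camera parameters helps explain why the four beacons merge into a single spot at long range. Assuming an ideal, distortion-free pinhole camera, each pixel subtends 3.45 μm / 10.5 mm ≈ 0.33 mrad, so the 0.15-m square spans only about 10 pixels at 45 m and fewer than 5 pixels at 100 m. The sketch below reproduces this arithmetic. It is a geometric estimate only and ignores blur and the size of each LED spot.

```python
import math

PIXEL_M = 3.45e-6          # physical pixel size, m
FOCAL_M = 10.5e-3          # lens focal length, m
SENSOR_PX = (2448, 2048)   # width, height in pixels
TARGET_SIDE_M = 0.15       # side of the square docking target, m

def target_size_px(distance_m):
    """Image size (pixels) of the docking square at a given range, pinhole model."""
    return FOCAL_M * TARGET_SIDE_M / (distance_m * PIXEL_M)

ifov_mrad = 1e3 * PIXEL_M / FOCAL_M   # angle subtended by one pixel
half_fov_deg = [math.degrees(math.atan(n * PIXEL_M / 2 / FOCAL_M)) for n in SENSOR_PX]

print(f"IFOV {ifov_mrad:.3f} mrad, half FOV {half_fov_deg[0]:.1f} x {half_fov_deg[1]:.1f} deg")
for d in (3, 25, 45, 100):
    print(f"{d:>3} m: square spans about {target_size_px(d):.1f} px")
```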

Fig. 6 Schematic of the optics of the experiment.

First, we obtained a series of images of the AULs at distances up to 45 m. We then corrected the image distortion and simulated the effects of water using an image degradation model (Hufnagel and Stanley, 1964) for oceanic water (Mobley, 1994) with a low concentration of chlorophyll and a moderate concentration of hydrosols. The water type was coastal ocean, with an absorption coefficient of 0.18 m−1 and a scattering coefficient of 0.22 m−1 (Petzold, 1972). Next, the algorithm obtained the pixel-recognition error of the center of every AUL, i.e., the error between the actual and identified pixel centers. The averaged pixel-recognition errors were entered into the pose model, which calculated the resulting errors in the position and attitude of the camera relative to the AULs. Finally, to demonstrate the feasibility of the proposed method under water, we waterproofed all the equipment and placed the experimental setup in seawater at a depth of 0.5 m.
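To give a feel for the attenuation implied by these coefficients, the sketch below applies the Beer–Lambert law with the beam attenuation coefficient c = a + b = 0.40 m−1. This is a deliberate simplification, not the MTF-based degradation model used in the paper. It estimates only the fraction of beacon light that reaches the camera without being absorbed or scattered, and it ignores geometric spreading and forward-scattered light.

```python
import math

A_ABS = 0.18    # absorption coefficient a, 1/m (coastal water, Section 2.3)
B_SCAT = 0.22   # scattering coefficient b, 1/m

def direct_transmittance(distance_m, a=A_ABS, b=B_SCAT):
    """Beer-Lambert: fraction of light neither absorbed nor scattered over distance_m."""
    return math.exp(-(a + b) * distance_m)

for d in (2, 5, 10, 25):
    print(f"{d:>2} m: {direct_transmittance(d):.2e}")
```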

3 Results and Discussion

In this section, we describe the simulations and experiments conducted to compare polarized with unpolarized optical guidance. The DOP images were of higher quality than the intensity images both above and under water, which indicates that polarization imaging extends the operating distance of underwater optical guidance. To simulate the total pose errors of the proposed method, we obtained the pixel-recognition errors of the AUL centers in the images and showed that underwater polarized optical guidance maintains the precision of traditional optical guidance. Finally, we conducted underwater experiments to evaluate the performance of the system and the feasibility of the proposed method.

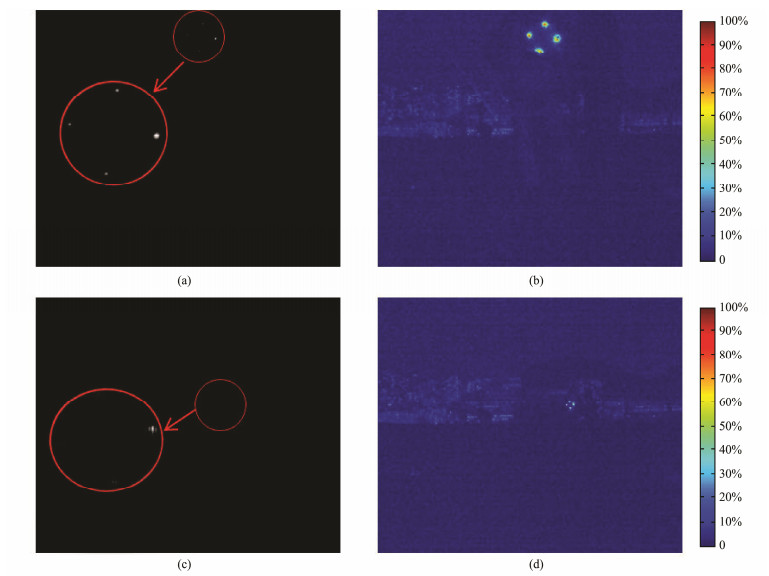

3.1 Quality of Intensity and DOP Images

Fig. 7 shows the intensity and DOP images from the atmospheric experiment. At a distance of 5 m, the AULs were barely visible in the intensity image (Fig. 7(a)) but clearly visible in the DOP image (Fig. 7(b)). At 20 m, the AULs were invisible in the intensity image (Fig. 7(c)) but still visible in the DOP image (Fig. 7(d)). In the intensity images, the small red circles on the right enclose the light sources, and the large circles on the left show enlarged views of the same regions. Table 1 lists the quality of the intensity and DOP images from the atmospheric experiment, calculated using Eq. (7). The same images were then degraded to simulate underwater conditions, and their quality is listed in Table 2. Both tables show that the DOP images are of higher quality than the intensity images; the image quality and its improvement, obtained from Eq. (7), are dimensionless. These results show that polarization imaging clearly improves image quality, especially under water and at large distances, and hence that polarization imaging offers a longer operating distance than traditional optical guidance.

Fig. 7 Atmospheric experiment. (a) Intensity image at a distance of 5 m; (b) DOP image at 5 m; (c) intensity image at 20 m; and (d) DOP image at 20 m.

Table 1 Image quality of intensity and DOP images above water

Table 2 Image quality of intensity and DOP images under water

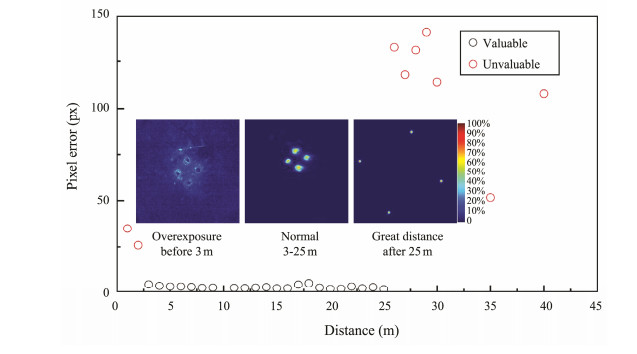

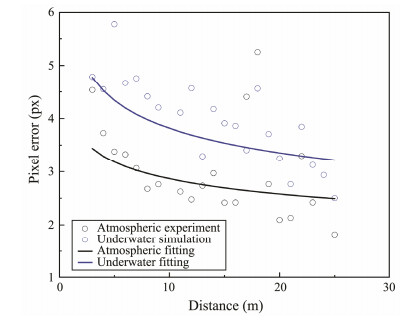

Using the algorithm, we processed the atmospheric images and obtained the pixel-recognition errors of the AUL centers. For every test position, we acquired 10 images, giving 40 pixel-recognition errors (four AULs per image), and then calculated the mean error. Fig. 8 shows the relationship between distance and error. The pixel errors are large below 3 m because of overexposure and above 25 m because of the limits of camera performance. Therefore, only the errors obtained between 3 and 25 m are considered meaningful; these are shown in Fig. 9. Next, we simulated the atmospheric images under water and obtained the corresponding errors, which are also shown in Fig. 9. Both sets of errors show some scatter, but the underwater errors were the larger of the two, because scattering and absorption by particles in water make it more difficult to recognize the AUL centers. We found the pixel-recognition error to be inversely proportional to the distance between the beacon and the camera: the closer the beacon was to the camera, the larger its projected spot in the image, which led to larger errors than for distant beacons. These results are consistent with those of previous work (Vallicrosa et al., 2016). A light source with higher power or a larger luminous area would have given better results at greater distances but worse results at shorter distances, owing to overexposure and the large imaging area. Conversely, with lower power or a smaller luminous area, the camera would struggle to detect the beacons at large distances.
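The averaging step described above can be sketched as follows. The sketch assumes that each pixel-recognition error is the Euclidean distance between a detected AUL center and its reference (actual) center, and that the results are stored as an array of 10 images × 4 AULs × 2 coordinates. The function name and data layout are illustrative, and the reference centers in the usage example are synthetic.

```python
import numpy as np

def mean_pixel_error(detected, reference):
    """Mean Euclidean distance (px) between detected and reference AUL centres.

    detected:  array of shape (n_images, n_auls, 2)
    reference: array of shape (n_auls, 2), broadcast over all images
    """
    detected = np.asarray(detected, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(np.linalg.norm(detected - reference, axis=-1).mean())

# Synthetic check: 10 images x 4 AULs, every centre off by (3, 4) px.
reference = np.array([[100, 100], [150, 100], [150, 150], [100, 150]], dtype=float)
detected = np.broadcast_to(reference + [3.0, 4.0], (10, 4, 2))
print(mean_pixel_error(detected, reference))  # 5.0
```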

Fig. 8 Mean pixel errors within 45 m.

Fig. 9 Pixel errors of the atmospheric experiment and underwater simulation between 3 and 25 m, with their fitted curves.

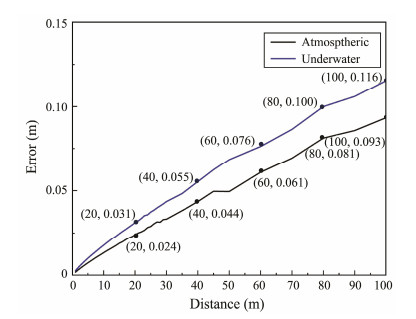

Fig. 9 also shows the fitted atmospheric and underwater errors; because of water degradation, the underwater pixel errors were larger than those in air. Finally, we input the fitted pixel errors at different distances into the AUV pose model to calculate the six position and attitude parameters. Owing to the iterative nature of the algorithm, the angle errors and the Z-direction distance error were small, so the total errors depended mainly on the X- and Y-direction errors. Fig. 10 shows the simulated relationship between distance and the total errors of the AUV: the position errors for both the atmospheric and underwater images increased with the distance between the AUV and the DS. Within 100 m, the atmospheric errors were no greater than 0.093 m and the underwater errors no greater than 0.116 m. This precision is consistent with that reported in previous work using unpolarized optical guidance (Hong et al., 2003).
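A first-order, small-angle estimate shows why the X and Y errors dominate and why they grow with distance. A center error of e pixels corresponds to an angular error of e·s/f, where s is the pixel size and f is the focal length, and hence to a lateral offset of roughly d·e·s/f at range d. The sketch below encodes this approximation with the camera parameters from Section 2.3. It is not the pose model used to produce Fig. 10, and it ignores the distance dependence of the pixel error itself.

```python
PIXEL_M = 3.45e-6   # physical pixel size, m
FOCAL_M = 10.5e-3   # lens focal length, m

def lateral_error_m(distance_m, pixel_error_px):
    """Small-angle estimate of the X/Y position error caused by a centre error."""
    angular_error_rad = pixel_error_px * PIXEL_M / FOCAL_M
    return distance_m * angular_error_rad

for d in (10, 50, 100):
    print(f"{d:>3} m, 1 px error: {lateral_error_m(d, 1.0):.3f} m")
```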

Fig. 10 Total pose errors of the AUV within 100 m.

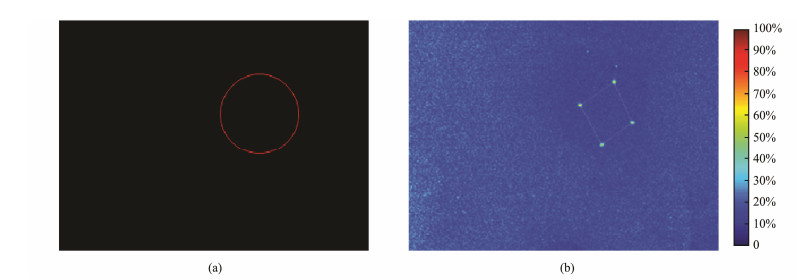

The underwater experiment was conducted in the coastal ocean in the afternoon, consistent with the conditions used in the simulation. The underwater light field was partially polarized by refracted skylight, which is itself polarized by atmospheric scattering. However, its DOP was significantly lower than that of the polarized AULs, so it had little impact on the experiment. Although there was no interference from fish, seaweed, or other marine creatures, the underwater environment in the experiment was complex, much like a real AUV working environment. The water was semi-turbid, and visibility was poor owing to the absorption and scattering of light by various particles. Long-term underwater operation would seriously shorten the life of the equipment because of seawater corrosion and pressure. Ocean waves, the shoreline, the seabed, and the waterproof housing also degraded the experiment through their effects on the light. Apart from the imaging method, the experimental conditions for the intensity and DOP images were identical. Fig. 11 shows the intensity and DOP images of the polarized AULs in the underwater experiment, with the beacons 2 m from the camera. In the intensity image, the light source was invisible; its position is indicated by a red circle. In the DOP image, the light source was clearly visible and was successfully recognized: the polarized AULs appear as four bright points with higher DOP values than the background. The underwater DOP image contained more noise owing to scattering by various particles, and the calculation accuracy was lower than for the atmospheric images because of the complex underwater optical characteristics. Other bright points above the AULs, dimmer than the polarized AULs, were caused by reflections from the water surface. The total underwater pose error was 0.011 m, which is consistent with the simulation. This result verifies the superior performance of the proposed system and the feasibility of the method under water.

Fig. 11 Underwater experiment. (a) Intensity image and (b) DOP image.

In this paper, we proposed a real-time and bioinspired method that uses polarized optical guidance to determine the position and attitude of an AUV relative to a DS for homing and docking. This method, based on polarization imaging technology, extends the operating distance while maintaining the precision of traditional optical guidance. The simulation and experimental results obtained in this research serve as a feasibility study and provide the necessary evidence that such a method is warranted. The obtained angle errors were negligible, and the position errors were no greater than 0.116 m within 100 m in the coastal ocean. A fast calculation speed enables real-time solutions for the AUV position and attitude. Furthermore, this method is simple and inexpensive, requiring only the relative position of each AUL. Future work will focus on accelerating the calculation speed, developing a dynamic model, and conducting long-distance underwater experiments.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NSFC) (Nos. 51675076, 51505062), the Science Fund for Creative Research Groups of NSFC (No. 51621064), and the Pre-Research Foundation of China (No. 61405180102).

Cartron, L., Josef, N., Lerner, A., Mccusker, S. D., Darmaillacq, A. S., Dickel, L. and Shashar, N., 2013. Polarization vision can improve object detection in turbid waters by cuttlefish. Journal of Experimental Marine Biology and Ecology, 447(3): 80-85.

Cheng, H. Y., Chu, J. K., Zhang, R., Tian, L. B. and Gui, X. Y., 2020. Underwater polarization patterns considering single Rayleigh scattering of water molecules. International Journal of Remote Sensing, 41(13): 4947-4962. DOI:10.1080/01431161.2019.1685725

Cowen, S., Briest, S. and Dombrowski, J., 1997. Underwater docking of autonomous undersea vehicles using optical terminal guidance. Oceans'97 MTS/IEEE Conference Proceedings. Halifax, 2: 1143-1147. DOI:10.1109/OCEANS.1997.624153

Deltheil, C., Didier, L., Hospital, E. and Brutzman, D. P., 2002. Simulating an optical guidance system for the recovery of an unmanned underwater vehicle. IEEE Journal of Oceanic Engineering, 25(4): 568-574.

Dubreuil, M., Delrot, P., Leonard, I., Alfalou, A., Brosseau, C. and Dogariu, A., 2013. Exploring underwater target detection by imaging polarimetry and correlation techniques. Applied Optics, 52(5): 997-1005. DOI:10.1364/AO.52.000997

Fischler, M. A. and Bolles, R. C., 1987. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 24(6): 381-395.

Hong, Y. H., Kim, J. Y., Lee, P. M., Jeon, B. H., Oh, K. H. and Oh, J. H., 2003. Development of the homing and docking algorithm for AUV. International Offshore and Polar Engineering Conference Proceedings. Honolulu, 205-212.

Horváth, G. and Varjú, D., 2004. Polarized Light in Animal Vision. Springer, Berlin, 470pp.

Hou, W., 2009. A simple underwater imaging model. Optics Letters, 34(17): 2688-2690. DOI:10.1364/OL.34.002688

Hufnagel, R. E. and Stanley, N. R., 1964. Modulation transfer function associated with image transmission through turbulent media. Journal of the Optical Society of America, 54(1): 52. DOI:10.1364/JOSA.54.000052

Kondo, H., Okayama, K., Choi, J. K., Hotta, T., Kondo, M., Okazaki, T., Singh, H., Chao, Z., Nitadori, K., Igarashi, M. and Fukuchi, T., 2012. Passive acoustic and optical guidance for underwater vehicles. 2012 Oceans-Yeosu. IEEE, Yeosu, 1: 1-6.

Lee, P. M., Jeon, B. H. and Kim, S. M., 2003. Visual servoing for underwater docking of an autonomous underwater vehicle with one camera. IEEE Oceans 2003. Celebrating the Past... Teaming Toward the Future (IEEE Cat. No. 03CH37492). San Diego, 2: 677-682.

Maki, T., Shiroku, R., Sato, Y., Matsuda, T., Sakamaki, T. and Ura, T., 2013. Docking method for hovering type AUVs by acoustic and visual positioning. International Underwater Technology Symposium, Tokyo, 1-6.

Mobley, C. D., 1994. Light and Water: Radiative Transfer in Natural Waters. Academic, San Diego, 592pp.

Park, J. Y., Jun, B. H., Lee, P. M. and Oh, J., 2009. Experiments on vision guided docking of an autonomous underwater vehicle using one camera. Ocean Engineering, 36(1): 48-61.

Petzold, T. J., 1972. Volume Scattering Functions for Selected Ocean Waters. Scripps Institution of Oceanography La Jolla Ca Visibility Lab, La Jolla, 79pp.

Schechner, Y. Y. and Karpel, N., 2005. Recovery of underwater visibility and structure by polarization analysis. IEEE Journal of Oceanic Engineering, 30(3): 570-587. DOI:10.1109/JOE.2005.850871

Schechner, Y. Y., Narasimhan, S. G. and Nayar, S. K., 2003. Polarization-based vision through haze. Applied Optics, 42(3): 511-525. DOI:10.1364/AO.42.000511

Shashar, N., Hagan, R., Boal, J. G. and Hanlon, R. T., 2000. Cuttlefish use polarization sensitivity in predation on silvery fish. Vision Research, 40(1): 71-75. DOI:10.1016/S0042-6989(99)00158-3

Vallicrosa, G., Bosch, J., Palomeras, N., Ridao, P., Carreras, M. and Gracias, N., 2016. Autonomous homing and docking for AUVs using range-only localization and light beacons. IFAC-PapersOnLine, 49(23): 54-60. DOI:10.1016/j.ifacol.2016.10.321

Warrant, E. J. and Locket, N. A., 2004. Vision in the deep sea. Biological Reviews of the Cambridge Philosophical Society, 79(3): 671-712. DOI:10.1017/S1464793103006420

Waterman, T. H., 2006. Reviving a neglected celestial underwater polarization compass for aquatic animals. Biological Reviews of the Cambridge Philosophical Society, 81(1): 111-115.

Xu, Q., Guo, Z. Y., Tao, Q. Q., Jiao, W. Y., Wang, X. S., Qu, S. L. and Gao, J., 2015. Transmitting characteristics of polarization information under seawater. Applied Optics, 54(21): 6584. DOI:10.1364/AO.54.006584

Yahya, M. F. and Arshad, M. R., 2015. Tracking of multiple markers based on color for visual servo control in underwater docking. International Conference on Control System, Computing and Engineering. George Town, 482-487.

Yu, S. C., Ura, T., Fujii, T. and Kondo, H., 2001. Navigation of autonomous underwater vehicles based on artificial underwater landmarks. MTS/IEEE Oceans 2001. An Ocean Odyssey. Conference Proceedings. Honolulu, 1: 409-416. DOI:10.1109/OCEANS.2001.968760

2020, Vol. 19
