The ocean covers roughly twice the area occupied by land; thus, it contributes substantially to the balance of Earth's ecosystem and provides people with abundant resources. Currently, three main methods are used to explore oceans: deep diving, deep netting, and deep drilling. These methods have given us deep insights into oceans. With the development of automation technology, underwater robots have gradually become the primary means of underwater operations (Xie et al., 2018; Zhuang et al., 2021; Chen et al., 2022; Duan et al., 2022). Compared with manual operations, underwater robots offer high efficiency and low cost. Combined with various sensors and computer vision, they can be used to study aquatic organisms in offshore waters, transmit information through seabed observation networks in deep seas, and monitor underwater organisms and environmental changes through videos and images, which greatly accelerates the development of marine science.

The challenges in underwater image classification include the following: 1) light loses energy as it propagates, which reduces its intensity, and uneven spectral propagation distorts colors as the distance changes (Mittal et al., 2022); 2) the complex underwater environment makes underwater images susceptible to high noise, low visibility, edge blurring, low contrast, and color deviation. These challenges reduce the visibility of underwater targets and result in blurred, low-contrast images. In addition, differences in water quality further reduce the accuracy of underwater image classification.

Before the advent of deep learning, underwater image classification tasks often relied on image annotation by human experts and on handcrafted feature descriptors to characterize underwater objects; the features were then categorized using classifiers, such as Naive Bayes, ensemble learning, and histograms. Shihavuddin et al. (2013) combined five feature descriptors and four classifiers to investigate six datasets and applied them to the Red Sea dataset to classify coral reef biomes. Mehta et al. (2007) extracted texture features of images, classified coral reef images using support vector machines (SVM), and obtained 95% classification accuracy on a low-quality and small-sample image dataset. Beijbom et al. (2012) proposed a benchmark dataset of coral reef images in which image features were described by texture and color; training was conducted on the 2008 and 2009 datasets, and an accuracy of 83.1% was achieved on the 2010 dataset, which provided a new solution for the automated ecological analysis of coral reefs. Nadeem et al. (2019) achieved a classification accuracy of 93.59% on the Eilat Fluorescence Coral dataset by fusing the training results of fluorescence and reflection images. Traditional feature descriptors, such as texture, color, and shape features, process images efficiently. However, they exhibit poor accuracy because of the variety of underwater image targets, complex environments, and insufficient lighting.

Given the excellent performance of convolutional neural networks (CNNs) in image classification tasks (Simonyan and Zisserman, 2015; Szegedy et al., 2016; Krizhevsky et al., 2017), researchers have quickly applied them to the underwater domain (Wang et al., 2019; An et al., 2020; Lu et al., 2020; Salman et al., 2020; Irfan et al., 2021). Mahmood et al. (2016) combined features extracted by a VGG (Visual Geometry Group) network with handcrafted features and obtained a classification accuracy of 84.5%. Mahmood et al. (2018) used VGGnet and ResNet to extract common features of coral and noncoral images and realized the automatic annotation of coral population distribution. Compared with manual annotation, the computational cost was greatly reduced and the efficiency was improved, benefiting the long-term monitoring of marine ecosystems. Prasetyo et al. (2022) combined the low-level features of images with the high-level ones through depthwise separable convolution and improved the fish image classification performance, achieving 98.46% and 97.09% accuracies on the Fish-gres and Fish4Knowledge datasets, respectively. Zhou et al. (2023) proposed a novel ResNet network for the improved classification of fish and invertebrates. Lopez-Vazquez et al. (2023) proposed a convolutional residual network for the classification of marine organisms. Ma et al. (2023) proposed a deep CNN for the classification of underwater sonar images.

Despite the considerable potential of deep learning, end-to-end models make it difficult to understand the network training process and to improve the network for specific tasks and model performance. Drawing on the way traditional classification methods refine each part of a classification task separately, we retained the excellent feature learning capability of a CNN for the image classification task while selecting a better classifier to process the features.

Traditional classifiers, such as the k-nearest neighbor, SVM, and decision tree, suffer from problems such as the numerous model structure parameters that must be adjusted, which leads to slow fitting. Pao et al. (1994) proposed a random vector functional link (RVFL) that randomly generates the hidden layer biases and input weights of a neural network and uses the least squares method to solve the output weights of the hidden layer, which substantially improved the training speed compared with previous feedforward neural networks. Building on the single hidden layer, Tamura and Tateishi (1997) proved that a feedforward neural network with two hidden layers can use (N / 2) + 3 hidden layer nodes to learn the features of N training samples; notably, a single hidden layer feedforward neural network requires N − 1 nodes. Huang (2003) further reduced the number of required nodes. Meanwhile, Tang et al. (2015) put forward a multilayer coding extreme learning machine (ELM) for the extraction of image features, which performed better than the existing multilayer perceptron on multiple classification datasets. Prompted by the multilayer ELM, Shi et al. (2019) proposed a deep RVFL network that adds direct connections from each layer of the network to the original single hidden layer network and includes denoising in autoencoding to obtain better and higher-level feature representations. Experiments by Shi et al. (2019) showed that the deep RVFL exhibits an improved performance while requiring fewer hidden layer nodes than the ELM. Subsequently, Shi et al. (2019) combined ensemble and deep learning, put forward a general framework for the ensemble deep RVFL, and verified its effectiveness using sparse datasets.

This paper proposes a novel underwater image classification model that combines the advantages of RVFLs and deep learning and uses an EfficientnetB0 CNN to extract features from underwater images. The RVFL was adopted as the classifier, and its network structure was improved. The resulting EfficientnetB0 – two-hidden-layer RVFL (TRVFL) network was used to classify underwater image datasets.

2 Related Work

2.1 Random Vector Functional Link Network

The RVFL is a relatively simple neural network. Suppose there are N arbitrary samples $(x_i, y_i)$, where $x_i = [x_{i1}, x_{i2}, \cdots, x_{in}]^{\text{T}} \in \mathbb{R}^n$, $y_i = [y_{i1}, y_{i2}, \cdots, y_{im}]^{\text{T}} \in \mathbb{R}^m$, and n and m represent the dimensions of the two vectors. The formula for the RVFL network is as follows:

| $ \sum\nolimits_{j = 1}^L {{\beta _j}} g({w_j}{X_i} + {b_j}) + \sum\nolimits_{j = L + 1}^{L + d} {{\beta _j}} {X_i}_j = {o_i}, i = 1, 2, \cdot \cdot \cdot, N, $ | (1) |

where L represents the number of hidden neurons, g(x) denotes the activation function, wj indicates the input weight, bj is the bias of the j-th neuron, d corresponds to the input data dimension, and βj is the output weight. wj and bj are randomly generated parameters.

For the minimization of the output error,

| $ \sum\nolimits_{i = 1}^N {\left\| {{o_i} - {y_i}} \right\|} = 0. $ | (2) |

The above equation can be directly expressed as follows:

| $ \sum\nolimits_{j = 1}^L {{\beta _j}} g({w_j}{X_i} + {b_j}) + \sum\nolimits_{j = L + 1}^{L + d} {{\beta _j}} {X_i}_j = {y_i}, i = 1, 2, \cdot \cdot \cdot, N, $ | (3) |

Then

| $ \boldsymbol H \boldsymbol \beta =\boldsymbol Y, $ | (4) |

where H represents the hidden layer's output, β denotes the output weight, and Y indicates the output. The detailed expressions are as follows:

| $\boldsymbol H = {\left[ {\begin{array}{*{20}{c}} {g({w_1}{X_1} + {b_1})}& \cdots &{g({w_L}{X_1} + {b_L})}&{{x_{11}}}& \cdots &{{x_{1n}}} \\ \vdots & \ddots & \vdots & \vdots & \ddots & \vdots \\ {g({w_1}{X_N} + {b_1})}& \cdots &{g({w_L}{X_N} + {b_L})}&{{x_{N1}}}& \cdots &{{x_{Nn}}} \end{array}} \right]_{N \times (L + n)}}, $ | (5) |

| $\boldsymbol \beta = {\left[ {\begin{array}{*{20}{c}} {\beta _1^{\text{T}}} \\ \vdots \\ {\beta _L^{\text{T}}} \\ {\beta _{L + 1}^{\text{T}}} \\ \vdots \\ {\beta _{L + n}^{\text{T}}} \end{array}} \right]_{(L + n) \times m}}, $ | (6) |

| $\boldsymbol Y = \left[ {\begin{array}{*{20}{c}} {y_1^{\text{T}}} \\ \vdots \\ {y_N^{\text{T}}} \end{array}} \right]. $ | (7) |

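The output weight is calculated as $\boldsymbol \beta = \boldsymbol H^{\dagger} \boldsymbol Y$, where $\boldsymbol H^{\dagger}$ denotes the Moore-Penrose generalized inverse of $\boldsymbol H$.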
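As a rough illustration of Eqs. (4) and (5), the following pure-Python sketch builds H from randomly drawn input weights and biases and then solves for the output weights in the least-squares sense. The sigmoid activation, the uniform sampling range, the small ridge term (added only for numerical stability), the toy data, and all function names are assumptions made for this example; they are not taken from the method described above.

```python
import math
import random


def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(row_a) + list(row_b) for row_a, row_b in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def hidden_matrix(xs, weights, biases):
    """Build H = [g(w_j x_i + b_j) | x_i] row by row (assumed sigmoid g)."""
    rows = []
    for x in xs:
        enhanced = []
        for w, b in zip(weights, biases):
            z = sum(w_k * x_k for w_k, x_k in zip(w, x)) + b
            enhanced.append(1.0 / (1.0 + math.exp(-z)))
        rows.append(enhanced + list(x))  # direct link: append the raw input
    return rows


def train_rvfl(xs, ys, n_hidden=8, ridge=1e-6, seed=0):
    """Draw random hidden parameters, then solve for the output weights."""
    rng = random.Random(seed)
    n_in = len(xs[0])
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    biases = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    h = hidden_matrix(xs, weights, biases)
    cols = len(h[0])
    # Regularized normal equations: (H^T H + ridge * I) beta = H^T Y.
    hth = [[sum(row[i] * row[j] for row in h) + (ridge if i == j else 0.0)
            for j in range(cols)] for i in range(cols)]
    hty = [[sum(row[i] * y[k] for row, y in zip(h, ys))
            for k in range(len(ys[0]))] for i in range(cols)]
    return weights, biases, solve_linear(hth, hty)


def predict_rvfl(xs, weights, biases, beta):
    """Return H @ beta for new inputs."""
    h = hidden_matrix(xs, weights, biases)
    return [[sum(h_i * b_row[k] for h_i, b_row in zip(row, beta))
             for k in range(len(beta[0]))] for row in h]


# Hypothetical toy target y = 2*x0 - x1 on a small grid.
xs = [[i / 4, j / 4] for i in range(5) for j in range(5)]
ys = [[2 * x0 - x1] for x0, x1 in xs]
model = train_rvfl(xs, ys)
predictions = predict_rvfl(xs, *model)
max_error = max(abs(p[0] - y[0]) for p, y in zip(predictions, ys))
print(f"max abs error on the toy data: {max_error:.2e}")
```

Only the output weights are fitted here; the hidden parameters stay at their random values, which is what makes RVFL training a single linear solve. A production implementation would normally use a numerical library's pseudoinverse instead of the hand-written solver.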

The backbone network of Efficientnet, EfficientnetB0, was obtained through automatic tuning. The relationship among the width, depth, and resolution of the network was studied, and the best scaling ratio was determined so that the network attains the highest performance under a given resource condition (Tan and Le, 2019). In the experimental results on ImageNet, Efficientnet exhibited a higher accuracy while requiring fewer training parameters than other CNNs.

EfficientnetB0 comprises 16 mobile inverted bottleneck convolutions (MBConv), which randomly change the depth of the network with the expansion ratio during the convolution process. As a result, the training speed of the network increases. The Squeeze-and-Excitation Network (SENet) introduced therein features an attention mechanism that helps in the extraction of the main feature information.

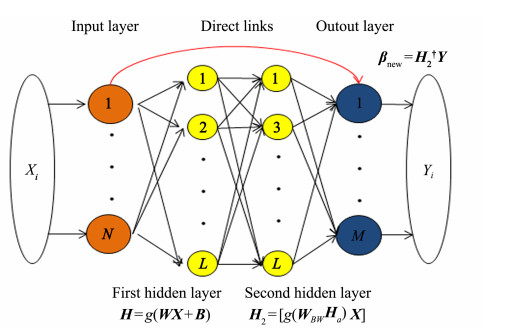

3 Proposed Underwater Image Classification Model

3.1 Two-Hidden-Layer Random Vector Functional Link

The RVFL uses random assignment to determine input weights and hidden layer biases. In this study, an RVFL network with two hidden layers was proposed. The parameters of the second hidden layer were calculated using a novel method, which resulted in more stable and accurate model outputs. Fig. 1 illustrates the model structure. All parameters other than those of the second layer were generated in the same way as in the original method, and no changes were made.

Fig. 1 Network structure of two-hidden-layer random vector functional link.

The original TRVFL has weight W and bias B at the first hidden layer, and the output of this hidden layer is H = g(WX + B). The weight W1 and bias B1 at the second layer are randomly generated, and the corresponding hidden layer output is H1 = [g(W1X + B1)X]. Finally, the corresponding output weight is obtained by solving the equation

The output weight was calculated based on the first hidden layer's output β = [HX]†Y and the output of the second hidden layer is [HsX] = Yβ†. Then, a new augmented matrix WBW = [B1 W1], Ha = [1 H]T is defined. WBW is calculated using the following formula:

| $ \boldsymbol{W}_{B W}=g^{-1}\left(\boldsymbol{H}_s\right) \boldsymbol{H}_a^{\dagger} . $ | (8) |

The new second hidden layer output is H2 = [g(WBWHa) X], and its corresponding output weight is

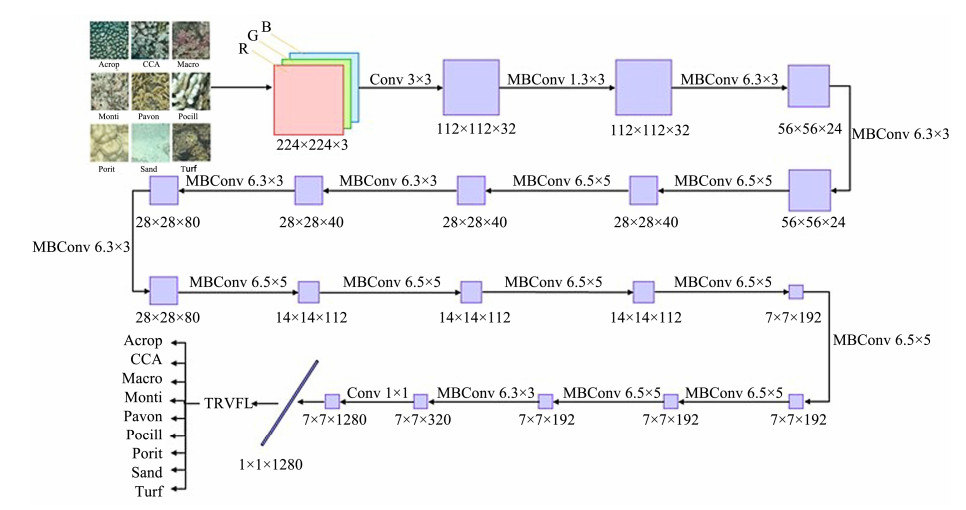

The steps for image classification using the EfficientnetB0-TRVFL algorithm are as follows:

1) For an RGB image, the EfficientnetB0 network requires resizing the image to 224 × 224. After convolution with 32 kernels of size 3 × 3, the output size is 112 × 112 × 32.

2) A depthwise convolution with a kernel size of 3 × 3 is applied to the 112 × 112 × 32 feature output of step 1. An MBConv with an expansion ratio of 1 is used, and the size after convolution becomes 112 × 112 × 16.

3) An MBConv with a depthwise convolution kernel size of 3 × 3 is applied twice to the 112 × 112 × 16 feature output of step 2. The expansion ratio is 6, and the size after convolution is 56 × 56 × 24.

4) An MBConv with a depthwise convolution kernel size of 5 × 5 is applied twice to the 56 × 56 × 24 feature output of step 3. The expansion ratio is 6, and the size after convolution is 28 × 28 × 40.

5) An MBConv with a depthwise convolution kernel size of 5 × 5 is applied three times to the 28 × 28 × 40 feature output of step 4. The expansion ratio is 6, and the size after convolution is 14 × 14 × 80.

6) An MBConv with a depthwise convolution kernel size of 5 × 5 is applied three times to the 14 × 14 × 80 feature output of step 5. The expansion ratio is 6, and the size after convolution is 14 × 14 × 112.

7) An MBConv with a depthwise convolution kernel size of 5 × 5 is applied four times to the 14 × 14 × 112 feature output of step 6. The expansion ratio is 6, and the size after convolution is 7 × 7 × 192.

8) An MBConv with a depthwise convolution kernel size of 3 × 3 is applied to the 7 × 7 × 192 feature output of step 7. The expansion ratio is 6, and the size after convolution is 7 × 7 × 320.

9) The 7 × 7 × 320 feature map is convolved using 1280 convolution kernels with a size of 1 × 1 to obtain a feature map with dimensions of 7 × 7 × 1280. After the batch normalization layer, Swish activation function, and global average pooling layer, 1 × 1 × 1280 features are output.

10) The activations() method is used to obtain the output feature matrix, with each sample corresponding to a row with 1280-dimensional feature attributes.

11) The TRVFL classifier replaces the softmax classifier to classify the features and obtain the related results.
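The feature-map sizes listed in steps 1) to 9) can be checked with a short shape trace. The sketch below is illustrative only: the per-stage strides are not stated in the steps and are inferred here from the reported size changes, "same" padding is assumed, and the names `STAGES` and `trace_shapes` are hypothetical.

```python
import math

# (stage description, output channels, number of blocks, assumed stride of first block)
STAGES = [
    ("step 1: 3x3 convolution", 32, 1, 2),
    ("step 2: MBConv, expansion 1", 16, 1, 1),
    ("step 3: MBConv, expansion 6", 24, 2, 2),
    ("step 4: MBConv, expansion 6", 40, 2, 2),
    ("step 5: MBConv, expansion 6", 80, 3, 2),
    ("step 6: MBConv, expansion 6", 112, 3, 1),
    ("step 7: MBConv, expansion 6", 192, 4, 2),
    ("step 8: MBConv, expansion 6", 320, 1, 1),
    ("step 9: 1x1 convolution", 1280, 1, 1),
]


def trace_shapes(input_size=224):
    """Return (description, height, width, channels) after each stage."""
    size = input_size
    shapes = []
    for name, channels, _blocks, stride in STAGES:
        # With "same" padding, only the stride changes the spatial size.
        size = math.ceil(size / stride)
        shapes.append((name, size, size, channels))
    return shapes


shapes = trace_shapes()
for name, height, width, channels in shapes:
    print(f"{name:30s} -> {height} x {width} x {channels}")

# Global average pooling keeps one value per channel of the last feature map.
feature_length = shapes[-1][3]
print("feature vector length:", feature_length)
```

The trace reproduces the sizes given in the steps and shows why each image ends up as one 1280-dimensional row of the feature matrix passed to the classifier.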

Fig. 2 shows the specific process of the EfficientnetB0-TRVFL algorithm model. Deep-learning-based feature extraction was used to process underwater images, and EfficientnetB0 was selected as the CNN structure to obtain a high-performance underwater image classification model. The EfficientnetB0 CNN uses a unified scaling of depth, width, and image resolution to achieve high efficiency. Subsequently, the RVFL neural network was improved to serve as the model classifier: the TRVFL neural network adds a hidden layer to the network structure. In addition, to improve the stability of network performance, we proposed a new weight calculation method that replaces the original random generation of parameters. Therefore, classification accuracy can be improved by using the TRVFL classifier instead of the softmax classifier to classify features.

Fig. 2 Flowchart of EfficientnetB0-two-hidden-layer random vector functional link.

In this study, six algorithms were compared with the proposed EfficientnetB0-TRVFL algorithm model: Resnet50, VGG16, AlexNet, Googlenet, EfficientnetB0, and EfficientnetB0-RVFL. The experiments were run on a computer with the Windows 10 operating system using MATLAB R2021a.

4.2 Algorithm Parameter Settings

1) Patch size

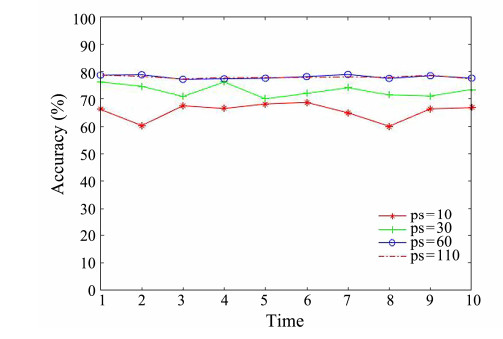

In this study, four different patch sizes were set to extract the feature information around labeled points, and their classification accuracies were compared. With an extremely large patch size, the surrounding complex environment may disturb the main feature information of the labeled point. Conversely, a small patch size is inadequate to express the feature information. Moreover, when a labeled point was located at the boundary of two adjacent target types to be classified, the classification results were incorrect for any patch size. Therefore, the four patch sizes were compared on the MLC2008 dataset. The overall classification performance was good and very stable when the patch size was 60 pixels (Fig. 3), so we selected a patch size of 60 pixels for the experiments.

Fig. 3 Classification accuracies for patch sizes (ps) of 10, 30, 60, and 110.

2) Quantity of hidden layer nodes

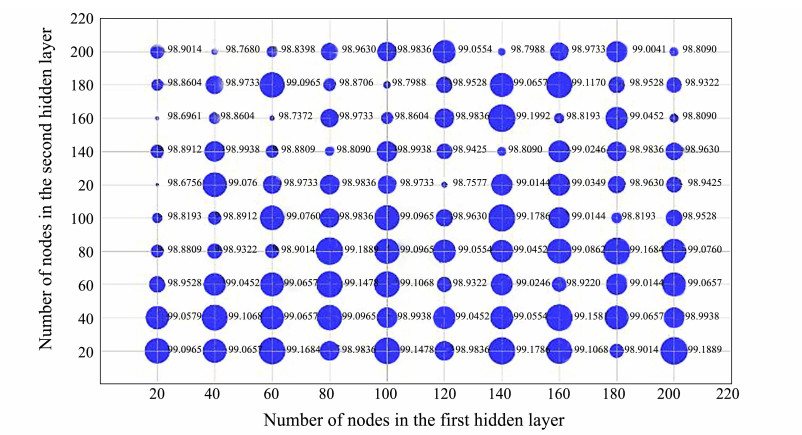

The number of hidden layer nodes is a key factor in neural networks. Too few nodes prevent the neural network from fitting the data, resulting in a suboptimal model classification performance. Meanwhile, too many nodes lead to model overfitting, reduce the network classification performance, and waste computing resources. As this study proposed a TRVFL, we set 20 – 200 hidden layer nodes for each layer in increments of 20, which gave 100 groups of node combinations to test on the Fish-gres dataset. Each group of experiments was run ten times, and the average results were obtained. As shown in Fig. 4, the best classification performance was observed when the number of nodes in the second hidden layer was similar to that in the first hidden layer. In addition, considering the computing resources, both the first and second hidden layers were set to 80 nodes.

Fig. 4 Classification performance of two-hidden-layer random vector functional link with different node numbers.
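The 100 groups follow directly from pairing 10 candidate node counts for the first layer with 10 for the second. A minimal sketch of that search grid is given below; the variable names are illustrative, and the training and evaluation of each configuration are omitted.

```python
from itertools import product

# Candidate node counts per hidden layer: 20, 40, ..., 200.
node_counts = list(range(20, 201, 20))

# Every (first-layer, second-layer) combination to be evaluated.
grid = list(product(node_counts, repeat=2))

runs_per_group = 10  # each group is repeated and the results averaged
print(len(node_counts), "values per layer")
print(len(grid), "groups,", len(grid) * runs_per_group, "training runs in total")
```

Under these settings, the grid search costs 1000 training runs, which is one reason a moderate setting such as 80 nodes per layer is attractive when several settings perform similarly.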

3) Activation function

In addition to data processing, activation functions can be used to enhance the capability of linear classifiers, such as RVFL networks, to obtain better feature representations. The number of hidden nodes was set to 80, and cross-validation of models with different activation functions was used to verify the classification performance. The same type of activation function was used for the two hidden layers. Table 1 shows the outcomes of 10 tests conducted using the EfficientnetB0-TRVFL classification model on the Fish-gres dataset with six activation functions. The differences in classification accuracy among the six activation functions were small. In general, the best classification performance was attained when Radbas was used as the activation function, whereas the average classification result of the Sin activation function was the lowest and significantly lower than those of the other five activation functions. Therefore, Radbas was selected as the activation function of EfficientnetB0-TRVFL for the experiments.

Table 1 Comparison of classification performances of different activation functions (the best value is displayed in bold)
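For reference, the two activation functions named explicitly above can be written as follows. This sketch assumes that Radbas refers to the radial basis transfer function exp(−n²), as in MATLAB's `radbas`; the remaining four functions are not listed in this section and are therefore not reproduced.

```python
import math


def radbas(n):
    """Radial basis activation exp(-n^2); peaks at 1 for n = 0 and decays to 0."""
    return math.exp(-n * n)


def sin_activation(n):
    """Sine activation; periodic and unbounded in its number of sign changes."""
    return math.sin(n)


for n in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"n = {n:+.1f}  radbas = {radbas(n):.4f}  sin = {sin_activation(n):+.4f}")
```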

4) Other algorithm parameter settings

In this study, all the CNN parameters were set uniformly. The number of training rounds (epochs) and the learning rate were set to 10 and 0.0001, respectively. Validation was performed once per round, the mini-batch size was set to 64, and the optimizer was set to sgdm (stochastic gradient descent with momentum). All the CNNs were pretrained, and transfer learning was conducted for dataset classification. For the new fully connected layer, the learning rate factors of the weights and biases were set to 10, which increased the suitability of the model for underwater image datasets. The TRVFL used the Radbas activation function, and the number of nodes in both hidden layers was set to 80.

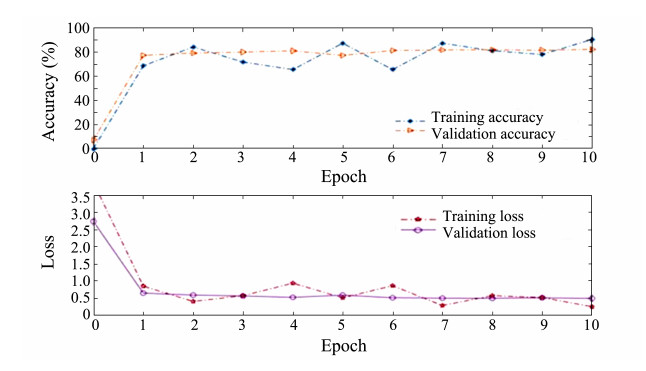

4.3 Network Training Process

Fig. 5 shows the classification accuracy and loss of the EfficientnetB0 network during feature extraction from the MLC2008 dataset based on the above algorithm parameters. The upper and lower halves of the figure display the classification accuracy and loss curves, respectively. The abscissa covers the 10 rounds of network training, and the different lines denote the classification accuracy and loss of the training and validation sets during the learning process. Fig. 5 also reveals that when EfficientnetB0 was trained with the MLC dataset, the accuracy and loss curves of the training set fluctuated substantially because of the large number of samples, the limited amount of data learned in each iteration, and the uneven distribution and difficulty of the various image classes in the MLC dataset. Overall, the accuracy curves of the training and test sets showed an upward trend, and the loss curve converged well.

Fig. 5 Classification accuracy and loss curve of the EfficientnetB0 network model.

Four evaluation criteria are typically used to assess the performance of algorithm models in classification tasks: F1-score, recall, precision, and accuracy (Marre et al., 2020; Durden et al., 2021). For multiclass problems, the mean average precision (MAP) can also be applied to assess a model's performance; it represents the model's classification capability over all classes. The F1-score is suitable for binary classification tasks. Meanwhile, F1macro is suited to unbalanced samples with categories of equal importance, because it preserves the classification performance on small-sample categories in underwater datasets with unbalanced species. The precision, recall, and F1-score reported in the experiments in this study refer to precisionmacro, recallmacro, and F1macro, respectively.

The arithmetic expression of evaluation indicators is as follows:

| $ {\text{accuracy}} = \frac{{TP + TN}}{{TP + FN + FP + TN}}, $ | (9) |

| $ {\text{precision}} = \frac{{TP}}{{TP + FP}}, $ | (10) |

| $ {\text{recall}} = \frac{{TP}}{{TP + FN}}, $ | (11) |

| $ F{\text{1}} = \frac{{2 \times P \times R}}{{P + R}}, $ | (12) |

| ${\text{MAP}} = \frac{{\sum\nolimits_{i = 1}^n {\int_0^1 {p(r){\text{d}}r} } }}{n} , $ | (13) |

| $ precisio{n_{{\text{macro}}}} = \frac{{\sum\nolimits_{i = 1}^n {precisio{n_i}} }}{n} , $ | (14) |

| $ recal{l_{{\text{macro}}}} = \frac{{\sum\nolimits_{i = 1}^n {recal{l_i}} }}{n}, $ | (15) |

| $ F{1_{{\text{macro}}}} = 2 \times \frac{{precisio{n_{{\text{macro}}}} \times recal{l_{{\text{macro}}}}}}{{precisio{n_{{\text{macro}}}} + recal{l_{{\text{macro}}}}}}, $ | (16) |

where TP denotes positive samples that are correctly identified as positive, TN denotes negative samples that are correctly identified as negative, FP denotes negative samples that are incorrectly identified as positive, and FN denotes positive samples that are incorrectly identified as negative. P and R in Eq. (12) denote precision and recall, respectively, p(r) in Eq. (13) is the precision as a function of recall, and n is the number of classes.
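To make the macro-averaged indicators concrete, the sketch below computes accuracy and the quantities of Eqs. (14) to (16) from a multiclass confusion matrix. The matrix is a made-up toy example, the row/column convention (rows are actual classes, columns are predicted classes) is an assumption of this sketch, and the multiclass accuracy is taken as the fraction of correctly classified samples.

```python
def macro_metrics(confusion):
    """Return accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples of actual class i predicted as j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    precisions, recalls = [], []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # actual c, predicted other
        fp = sum(confusion[r][c] for r in range(n)) - tp  # actual other, predicted c
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    p_macro = sum(precisions) / n
    r_macro = sum(recalls) / n
    denom = p_macro + r_macro
    return {
        "accuracy": sum(confusion[c][c] for c in range(n)) / total,
        "precision_macro": p_macro,
        "recall_macro": r_macro,
        # Eq. (16): harmonic mean of the macro precision and macro recall.
        "f1_macro": 2 * p_macro * r_macro / denom if denom else 0.0,
    }


# Hypothetical three-class confusion matrix (10 samples per actual class).
toy_confusion = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
metrics = macro_metrics(toy_confusion)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that Eq. (16) combines the macro precision and macro recall, which can differ slightly from averaging the per-class F1 scores; the sketch follows the former definition.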

4.5 Discussion of Experimental Results

4.5.1 Comparison of experimental results

To confirm the performance of the TRVFL classifier, we applied EfficientnetB0 to extract the features of the MLC2008, MLC2009, and Fish-gres datasets and used different classifiers (softmax, TRVFL, and RVFL) to classify them and compare the performance evaluation indicators of each algorithm model. Tables 2 – 4 present the experimental results. Each algorithm was run 10 times, where the MLC2008 and Fish-gres datasets were randomly divided by 2:1 and 7:3 per run, respectively. The best outcomes of the algorithms are also listed in the tables.

Table 2 Results of every model on the MLC2008 dataset (the best value is displayed in bold)

Table 3 Results of every model on the MLC2009 dataset (the best value is displayed in bold)

Table 4 Results of every model on the Fish-gres dataset (the best value is displayed in bold)

As shown in Tables 2 – 4, the test outcomes of EfficientnetB0 on the MLC2008, MLC2009, and Fish-gres datasets were better than those of the other four CNNs. Replacing the original softmax classification layer in the network with the RVFL resulted in a 2% – 3% improvement in the accuracy of EfficientnetB0-RVFL. Compared with the RVFL, the TRVFL classifier, which adds a hidden layer with a new parameter calculation method, achieved better classification accuracy. For the MLC2008 dataset, the accuracy, precision, recall, F1-score, average accuracy rate, and Cohen's kappa coefficient reached 87.28%, 89.19%, 80.73%, 84.34%, 90.78%, and 0.82, respectively, indicating that the predicted results were almost identical to the actual classification results. For the MLC2009 dataset, the accuracy, precision, recall, F1-score, average accuracy rate, and Cohen's kappa coefficient were 74.06%, 78.53%, 65.21%, 69.59%, 82.85%, and 0.61, respectively, indicating that the predicted outcomes of the model are highly consistent with the actual classification results. For the Fish-gres dataset, the accuracy, precision, recall, F1-score, and average accuracy rate were 99.59%, 99.55%, 99.55%, 99.55%, and 99.42%, respectively, and the Cohen's kappa coefficient of 0.96 indicates that the predicted outcomes of the algorithm and the actual classification results are quite consistent.

According to the experimental results on the three datasets, EfficientnetB0-TRVFL exhibited the best results in terms of various performance evaluation indicators, demonstrating the superiority of its algorithm performance and good generalization.

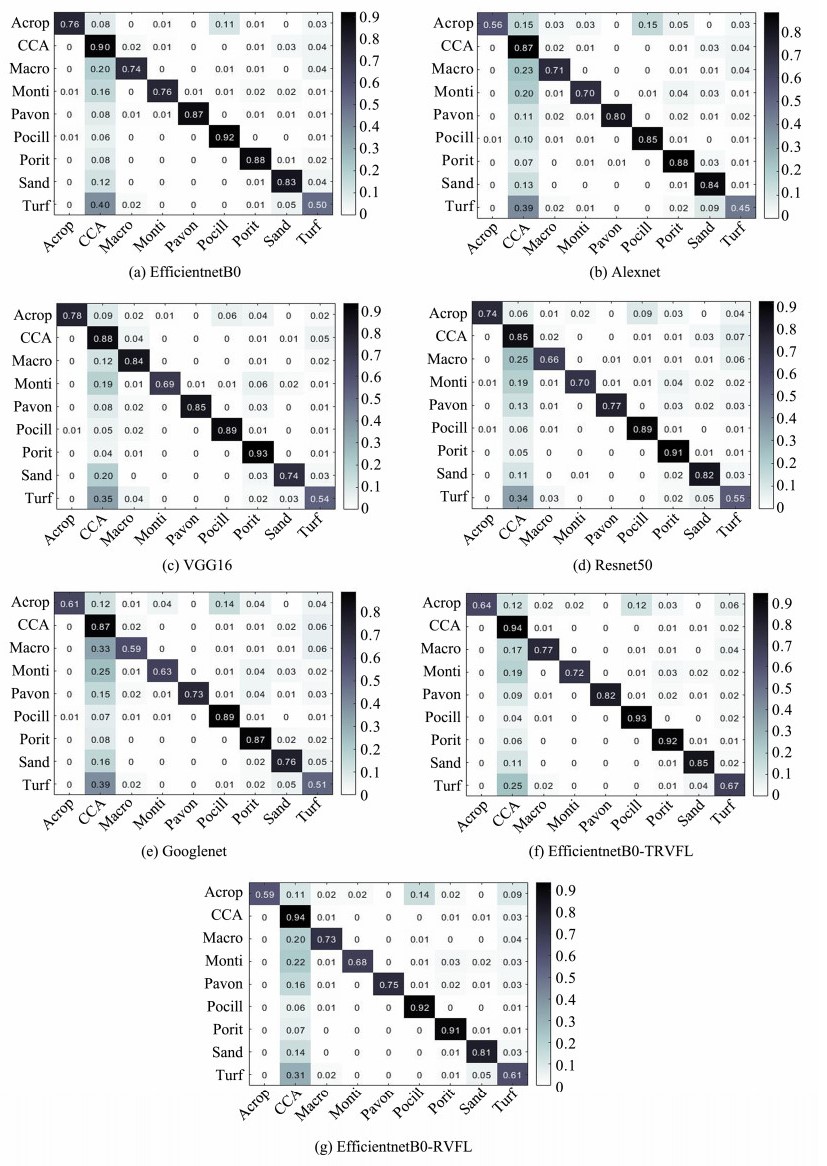

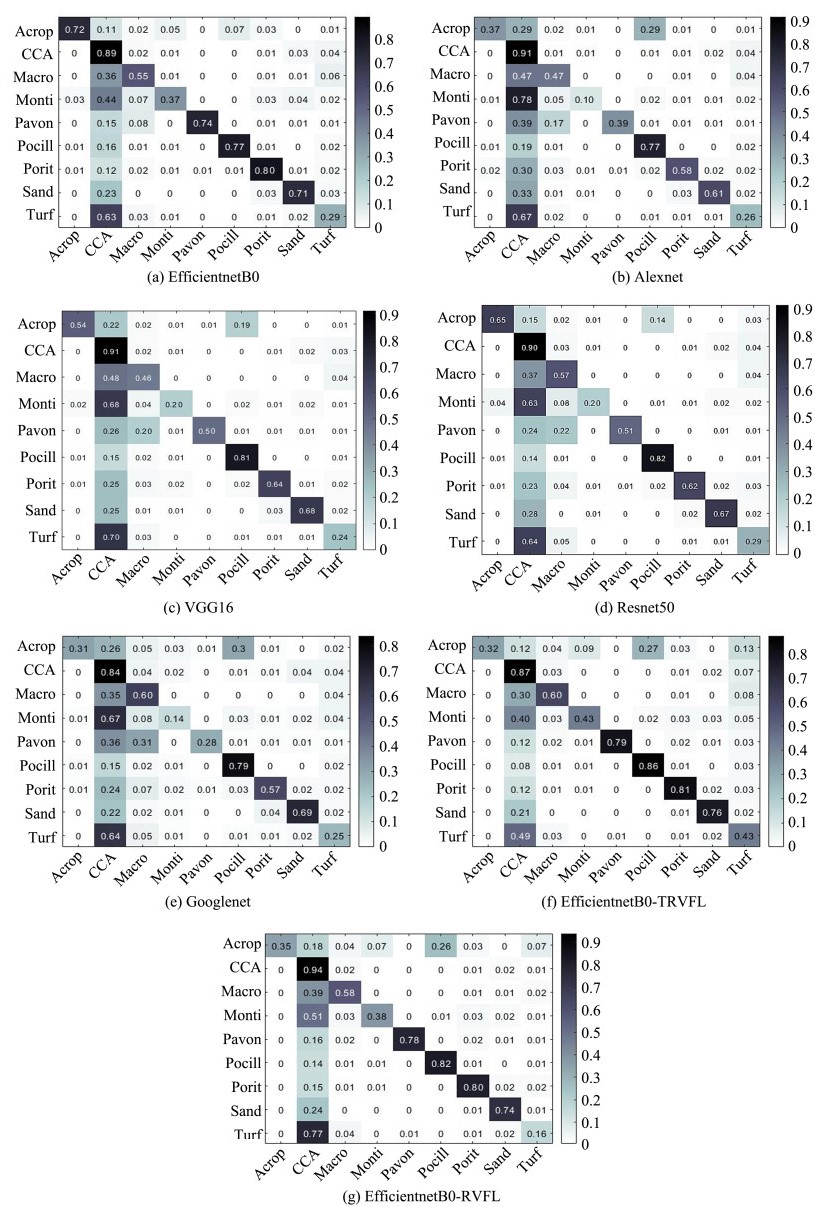

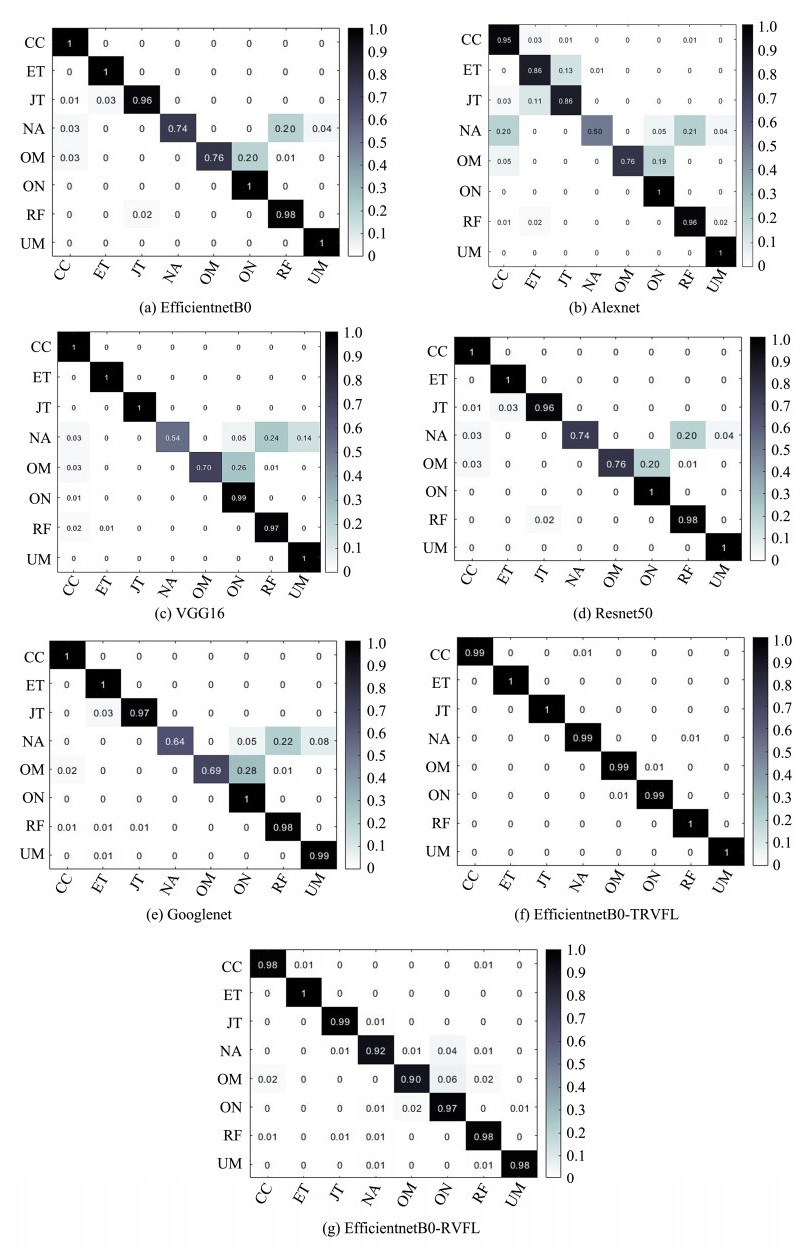

To better illustrate the performance improvement of the proposed model over traditional classification models, we used five traditional CNNs and EfficientnetB0-RVFL for comparison experiments. Figs. 6 – 8 show the confusion matrices of the proposed algorithm and the six comparison models on the MLC2008, MLC2009, and Fish-gres datasets. The rows in each figure represent the predicted class, the columns denote the actual class, and a cell with the number 0 indicates that the predicted probability for this cell is less than 0.01. EfficientnetB0-TRVFL obtained excellent classification results for the eight categories in the Fish-gres dataset. For the more challenging MLC2008 and MLC2009 datasets, the confusion between CCA and the other eight categories remained owing to the interference of the locations of annotation points and the complex morphological environment. However, the overall classification accuracy was considerably improved, and almost no confusion was observed in the other categories.

Fig. 6 Confusion matrix of running different algorithms on the MLC2008 dataset.

Fig. 7 Confusion matrix of running different algorithms on the MLC2009 dataset.

Fig. 8 Confusion matrix of the outcomes of running distinct models on the Fish-gres dataset.

To demonstrate the advantages of the EfficientnetB0-TRVFL model in underwater image classification tasks, we used three recent CNNs, namely, Densenet201, Resnet101, and Darknet53, to conduct experiments using the same parameters on the three datasets used in this research. The results of existing methods on the same datasets were also compared. As presented in Table 5, the EfficientnetB0-TRVFL model improved the accuracy by 8% – 9% on the MLC2008 dataset and was 6.48% higher than cResFeats (Mahmood et al., 2020); on the MLC2009 dataset, the accuracy increased by 4% – 5% and was 3.96% higher than that of the combined features (Mahmood et al., 2018). These results indicate that the proposed model has the best classification capability for underwater images among the compared methods.

Table 5 Comparison of the accuracies (%) of the proposed model with other existing methods

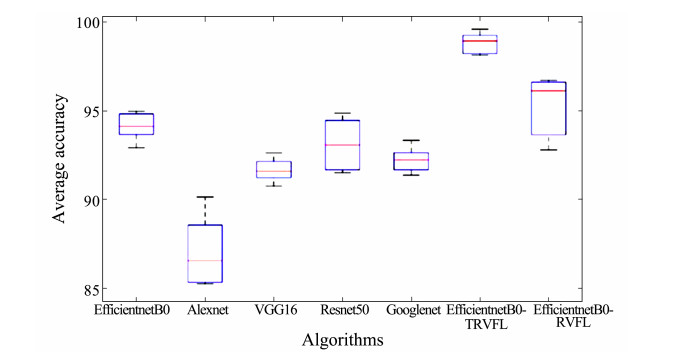

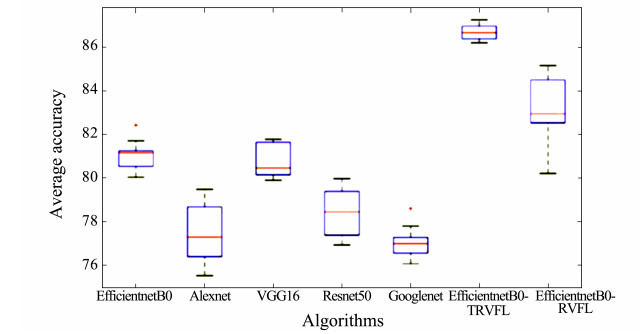

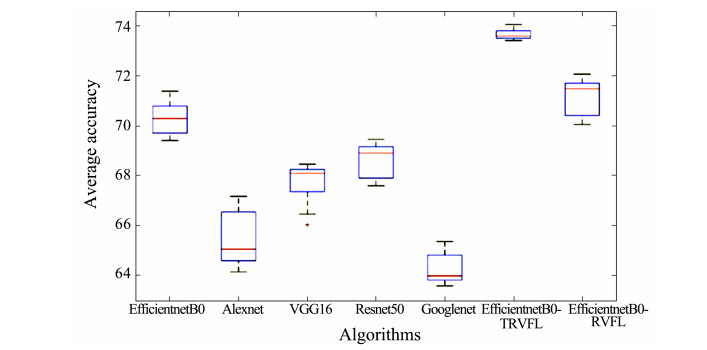

In this study, the stability of the EfficientnetB0-TRVFL algorithm was analyzed using boxplots, which were obtained by running each algorithm 10 times (Figs. 9 – 11). The box positions in the figures represent the overall classification accuracy of each algorithm. The red line denotes the median of the results, the red crosses mark the outliers in the data, and the upper and lower boundaries stand for the maximum and minimum values of the results, respectively. The blue box spans the 25th to 75th percentiles of the results and thus reflects their stability: the smaller the box, the better the stability. The distribution of results in the three figures shows that on the MLC2008, MLC2009, and Fish-gres datasets, the proposed EfficientnetB0-TRVFL model attained the best classification accuracy, was more stable than most algorithms, and had no outliers. Compared with the EfficientnetB0 network model, the EfficientnetB0-RVFL model showed a certain improvement in accuracy. However, in terms of stability, its performance was inferior to that of the softmax classifier owing to the randomness of the RVFL parameters.

Fig. 9 Boxplots representing the accuracy of 10 runs on the Fish-gres dataset obtained using various algorithms.

Fig. 10 Boxplots representing the accuracy of 10 runs on the MLC2008 dataset obtained using various algorithms.

Fig. 11 Boxplots representing the accuracy of 10 runs on the MLC2009 dataset obtained using various algorithms.
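The quantities read from such boxplots can also be computed numerically. The following sketch summarizes the accuracies of 10 runs by their median, quartiles, interquartile range (the height of the box), and outliers. The accuracy values are invented for illustration, and the 1.5 × IQR outlier rule and the inclusive quantile method are assumptions of this sketch that may differ from the plotting software's defaults.

```python
import statistics


def box_summary(values):
    """Summarize one algorithm's runs the way a boxplot does."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"median": median, "q1": q1, "q3": q3, "iqr": iqr, "outliers": outliers}


# Hypothetical accuracies (%) of two classifiers over 10 runs each.
stable_runs = [87.0, 87.1, 87.2, 87.1, 87.3, 87.0, 87.2, 87.1, 87.2, 87.1]
unstable_runs = [84.0, 85.5, 83.0, 86.0, 84.5, 85.0, 79.0, 85.2, 84.8, 85.1]

stable = box_summary(stable_runs)
unstable = box_summary(unstable_runs)
print("stable:  ", stable)
print("unstable:", unstable)
```

A smaller IQR and an empty outlier list correspond to the "small box, no crosses" pattern that the text associates with good stability.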

The Kolmogorov-Smirnov (KS) test is a nonparametric statistical test. The two-sample KS test was used to determine whether the distributions of results differ significantly. As the KS test does not require the data to follow a normal distribution and makes no assumptions about distribution parameters, it is widely used in two-sample tests.

The KS test method was used to detect significant differences among algorithms. At the significance level of 0.01, a significant difference was observed when p < 0.01, and no significant difference was observed when p > 0.01. Table 6 lists the test results of the EfficientnetB0-TRVFL algorithm against the compared models. We used bold font to indicate that no evident difference was observed between the algorithms. Significant differences were detected between the network model proposed in this study and the other models.

Table 6 p-values of each algorithm for the Kolmogorov-Smirnov test
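As an illustration of the two-sample KS test, the sketch below computes the test statistic (the largest gap between the two empirical distribution functions) and an approximate p-value from the asymptotic Kolmogorov distribution with a commonly used small-sample correction. The accuracy lists are invented, the function names are hypothetical, and the p-value is only an approximation for samples of 10 runs; a statistics package should be preferred for reported values.

```python
import math


def ks_statistic(sample_a, sample_b):
    """Largest absolute difference between the two empirical CDFs."""
    points = sorted(set(sample_a) | set(sample_b))
    n_a, n_b = len(sample_a), len(sample_b)
    return max(
        abs(sum(v <= x for v in sample_a) / n_a - sum(v <= x for v in sample_b) / n_b)
        for x in points
    )


def ks_pvalue_approx(d, n_a, n_b, terms=100):
    """Approximate two-sided p-value from the asymptotic Kolmogorov series."""
    effective_n = math.sqrt(n_a * n_b / (n_a + n_b))
    lam = (effective_n + 0.12 + 0.11 / effective_n) * d
    if lam < 0.2:
        return 1.0  # the series converges poorly here; the true value is close to 1
    series = sum(
        (-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
        for k in range(1, terms + 1)
    )
    return min(1.0, max(0.0, 2.0 * series))


# Hypothetical accuracies (%) of two models over 10 runs each.
model_a = [87.0, 87.1, 87.2, 87.1, 87.3, 87.0, 87.2, 87.1, 87.2, 87.4]
model_b = [84.0, 85.5, 83.0, 86.0, 84.5, 85.0, 79.0, 85.2, 84.8, 85.1]

d_value = ks_statistic(model_a, model_b)
p_value = ks_pvalue_approx(d_value, len(model_a), len(model_b))
print(f"D = {d_value:.2f}, approximate p = {p_value:.2e}")
print("significant at the 0.01 level:", p_value < 0.01)
```

In this toy case the two sets of accuracies do not overlap at all, so the statistic reaches its maximum of 1 and the difference is significant at the 0.01 level.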

1) A neural network model with two hidden layers was proposed to deepen the previous single-hidden-layer structure. The randomly generated parameters of the original RVFL model led to unstable network performance. The TRVFL uses a new method to calculate the parameters of the second hidden layer, which further stabilizes the network while leaving the random generation of the other parameters unchanged.

2) TRVFL was used to replace the original classifier in the EfficientnetB0 CNN for the classification of underwater images. The algorithm not only uses the advantages of CNN to extract image features but also improves image classification accuracy using the advantages of linear classifiers such as TRVFL.

3) In this study, the advantages of RVFL and traditional CNNs were combined. The EfficientnetB0 model was used to extract underwater features, and the TRVFL model was applied as a classifier to categorize underwater objects. We selected the parameters with the best classification effect as those of the TRVFL network model and used the KS test and boxplots to analyze the significance and stability of the EfficientnetB0-TRVFL model to assess the performance of its algorithm.

4) The size of the dataset image and network parameters were selected through experimental comparison, and the highest classification accuracies (87.28%, 74.06%, and 99.59%) were obtained for the MLC2008, MLC2009, and Fish-gres datasets, respectively. Compared with existing methods, the proposed image classification model has significant performance advantages, high classification accuracy, and good stability.

A deep learning model requires a large amount of data to achieve high accuracy. Therefore, for practical applications of the proposed method, further studies should focus on data augmentation techniques for underwater images to overcome the scarcity of training data. Additional studies are also required to ensure the reliability and security of the proposed deep-learning network.

Acknowledgements

We appreciate the support of the National Key R & D Program of China (No. 2022YFC2803903), the Key R & D Program of Zhejiang Province (No. 2021C03013), and the Zhejiang Provincial Natural Science Foundation of China (No. LZ20F020003).

An, Z. F., Zhang, J., and Xing, L., 2020. Inversion of oceanic parameters represented by CTD utilizing seismic multi-attributes based on convolutional neural network. Journal of Ocean University of China, 19(6): 1283-1291. DOI:10.1007/s11802-020-4133-x

Beijbom, O., Edmunds, P. J., Kline, D. I., Mitchell, B. G., and Kriegman, D., 2012. Automated annotation of coral reef survey images. 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA, 1170-1177.

Chen, Y., Zhu, J., Wan, L., Fang, X., Tong, F., and Xu, X. M., 2022. Routing failure prediction and repairing for AUV-assisted underwater acoustic sensor networks in uncertain ocean environments. Applied Acoustics, 186: 108479. DOI:10.1016/j.apacoust.2021.108479

Duan, S., Lin, Y., Zhang, C. Y., Li, Y. H., Zhu, D., Wu, J., et al., 2022. Machine-learned, waterproof MXene fiber-based glove platform for underwater interactivities. Nano Energy, 91: 106650. DOI:10.1016/j.nanoen.2021.106650

Durden, J. M., Hosking, B., Bett, B. J., Cline, D., and Ruhl, H. A., 2021. Automated classification of fauna in seabed photographs: The impact of training and validation dataset size, with considerations for the class imbalance. Progress in Oceanography, 196: 102612. DOI:10.1016/j.pocean.2021.102612

Huang, G. B., 2003. Learning capability and storage capacity of two-hidden-layer feedforward networks. IEEE Transactions on Neural Networks, 14(2): 274-281. DOI:10.1109/TNN.2003.809401

Irfan, M., Zheng, J., Iqbal, M., Masood, Z., Arif, M. H., and Hassan, S. R. U., 2021. Brain inspired lifelong learning model based on neural based learning classifier system for underwater data classification. Expert Systems with Applications, 186: 115798. DOI:10.1016/j.eswa.2021.115798

Khan, S. H., Hayat, M., Bennamoun, M., Sohel, F. A., and Togneri, R., 2017. Cost-sensitive learning of deep feature representations from imbalanced data. IEEE Transactions on Neural Networks and Learning Systems, 29(8): 3573-3587.

Krizhevsky, A., Sutskever, I., and Hinton, G. E., 2017. ImageNet classification with deep convolutional neural networks. Communications of the ACM, 60(6): 84-90. DOI:10.1145/3065386

Lopez-Vazquez, V., Lopez-Guede, J. M., Chatzievangelou, D., and Aguzzi, J., 2023. Deep learning based deep-sea automatic image enhancement and animal species classification. Journal of Big Data, 10(1): 37.

Lu, Y. C., Tung, C., and Kuo, Y. F., 2020. Identifying the species of harvested tuna and billfish using deep convolutional neural networks. ICES Journal of Marine Science, 77(4): 1318-1329. DOI:10.1093/icesjms/fsz089

Ma, Q. X., Jiang, L. Y., and Yu, W. X., 2023. Lambertian-based adversarial attacks on deep-learning-based underwater side-scan sonar image classification. Pattern Recognition, 138: 109363. DOI:10.1016/j.patcog.2023.109363

Mahmood, A., Bennamoun, M., An, S., Sohel, F., and Boussaid, F., 2020. ResFeats: Residual network based features for underwater image classification. Image and Vision Computing, 93: 103811. DOI:10.1016/j.imavis.2019.09.002

Mahmood, A., Bennamoun, M., An, S., Sohel, F., Boussaid, F., Hovey, R., et al., 2016. Coral classification with hybrid feature representations. 2016 IEEE International Conference on Image Processing. Arizona, USA, 519-523.

Mahmood, A., Bennamoun, M., An, S. J., Sohel, F. A., Boussaid, F., Hovey, R., et al., 2018. Deep image representations for coral image classification. IEEE Journal of Oceanic Engineering, 44(1): 121-131.

Marre, G., Deter, J., Holon, F., Boissery, P., and Luque, S., 2020. Fine-scale automatic mapping of living Posidonia oceanica seagrass beds with underwater photogrammetry. Marine Ecology Progress Series, 643: 63-74.

Mehta, A., Ribeiro, E., Gilner, J., Woesik, R., Ranchordas, A. K., Araujo, H., et al., 2007. Coral reef texture classification using support vector machines. 2nd International Conference on Computer Vision Theory and Applications. Barcelona, Spain, 302-310.

Mittal, S., Srivastava, S., and Jayanth, J. P., 2022. A survey of deep learning techniques for underwater image classification. IEEE Transactions on Neural Networks and Learning Systems, 34(10): 6968-6982.

Nadeem, U., Bennamoun, M., Sohel, F., and Togneri, R., 2019. Deep fusion net for coral classification in fluorescence and reflectance images. 2019 Digital Image Computing: Techniques and Applications. Perth, Australia, 188-194.

Pao, Y. H., Park, G. H., and Sobajic, D. J., 1994. Learning and generalization characteristics of the random vector functional-link net. Neurocomputing, 6(2): 163-180.

Prasetyo, E., Suciati, N., and Fatichah, C., 2022. Multi-level residual network VGGNet for fish species classification. Journal of King Saud University – Computer and Information Sciences, 34(8): 5286-5295. DOI:10.1016/j.jksuci.2021.05.015

Prasetyo, E., Suciati, N., and Fatichah, C., 2020. Fish-gres dataset for fish species classification. Mendeley Data, V1. DOI:10.17632/76cr3wfhff.1

Salman, A., Siddiqui, S. A., Shafait, F., Mian, A., Shortis, M. R., Khurshid, K., et al., 2020. Automatic fish detection in underwater videos by a deep neural network-based hybrid motion learning system. ICES Journal of Marine Science, 77(4): 1295-1307.

Shi, Q. S., Katuwal, R., and Suganthan, P. N., 2019. Stacked autoencoder based deep random vector functional link neural network for classification. Applied Soft Computing, 85: 105854.

Shihavuddin, A. S. M., Gracias, N., Garcia, R., Gleason, A. C. R., and Gintert, B., 2013. Image-based coral reef classification and thematic mapping. Remote Sensing, 5(4): 1809-1841.

Simonyan, K., and Zisserman, A., 2015. Very deep convolutional networks for large-scale image recognition. International Conference on Learning Representations. San Diego, USA, 1-14.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna, Z., 2016. Rethinking the inception architecture for computer vision. IEEE Conference on Computer Vision and Pattern Recognition. Seattle, USA, 2818-2826.

Tamura, S., and Tateishi, M., 1997. Capabilities of a four-layered feedforward neural network: Four layers versus three. IEEE Transactions on Neural Networks, 8(2): 251-255.

Tan, M., and Le, Q. V., 2019. EfficientNet: Rethinking model scaling for convolutional neural networks. 36th International Conference on Machine Learning. Long Beach, USA, 97.

Tang, J., Deng, C., and Huang, G. B., 2015. Extreme learning machine for multilayer perceptron. IEEE Transactions on Neural Networks and Learning Systems, 27(4): 809-821.

Wang, X. H., Ouyang, J. H., Li, D. Y., and Zhang, G., 2019. Underwater object recognition based on deep encoding-decoding network. Journal of Ocean University of China, 18(2): 376-382.

Xie, K., Pan, W., and Xu, S., 2018. An underwater image enhancement algorithm for environment recognition and robot navigation. Robotics, 7(1): 14.

Zhou, Z., Yang, X., Ji, H., and Zhu, Z., 2023. Improving the classification accuracy of fishes and invertebrates using residual convolutional neural networks. ICES Journal of Marine Science, 80(5): 1256-1266.

Zhuang, S., Zhang, X., Tu, D. W., Ji, Y., and Yao, Q. Z., 2021. A dense stereo matching method based on optimized direction-information images for the real underwater measurement environment. Measurement, 186: 110142.

2. Zhejiang Key Laboratory of DDIMCCP, Lishui University, Lishui 323000, China;

3. School of Mechanical Engineering, Hangzhou Dianzi University, Hangzhou 310018, China

2024, Vol. 23
