The article information

- X. Wang, S. K. Ong, A. Y. C. Nee

- A comprehensive survey of augmented reality assembly research

- Advances in Manufacturing, 2016, 4(1): 1-22

- http://dx.doi.org/10.1007/s40436-015-0131-4

Article history

- Received: 10 July 2015

- Accepted: 14 December 2015

- Published online: 12 January 2016

Department of Mechanical Engineering, Faculty of Engineering, National University of Singapore, 9 Engineering Drive 1, Singapore 117576, Singapore

Assembly involves the grouping of individual parts that fit together to form finished commodities with good added value. According to Whitney [1], it is the capstone process that links unit manufacturing processes to business processes. Hence, many business and technology strategies have been implemented in this domain to deal with the challenges that the manufacturing industry constantly faces. Nowadays, the highly competitive business environment presses for innovative products at reduced time-to-market, and collaborative manufacturing environments increasingly require real-time information exchange between the various stakeholders in a product development lifecycle.

Augmented reality (AR), which is a set of innovative and effective human computer interaction (HCI) techniques, has potential for addressing these problems. AR enriches the way that users experience the real world by embedding virtual objects to coexist and interact with real objects in the real world [2]. In the past two decades, AR has progressed from marker-based to marker-less methods, and more recently to mobile context-aware methods that can bring AR into mobile and assembly workshop contexts. Owing to the growing efforts in AR by several reputed corporations (IBM, HP, Sony, Google, etc.) and universities, this novel technology has been applied successfully in many areas, e.g., medicine [3], maintenance and repair [4], cultural heritage [5] and education [6]. In particular, Google Glass [7], a compact and lightweight optical see-through monocular display, provides two key benefits of AR, namely, encumbrance-free and instant access to information by affixing it in the visual field.

AR has been implemented to address a wide range of problems throughout the assembly phase in the lifecycle of a product, e.g., planning, design, ergonomics assessment, operation guidance and training, by creating an augmented environment where virtual objects (instructions, visual aids, and industrial components) coexist and interact with the real objects and environment. In such an augmented environment, users can obtain real feedback and virtual contents in order to analyze the behavior and properties of planned products, gaining the benefits of both physical and virtual prototyping [8]. Many review works have been reported to summarize the state-of-the-art systems in AR assembly. Ong et al. [9] provided a comprehensive review on AR in manufacturing and Fite-Georgel [10] made a study on industrial augmented reality (IAR). Nee et al. [8] reviewed the research and development of AR applications in design and manufacturing, while Leu et al. [11] surveyed the state-of-the-art methodologies for developing computer-aided design (CAD) model based systems for assembly simulation, planning and training. However, although a significant amount of work on AR assembly has been reported in recent years, there are no rigorous papers that review the state-of-the-art work of AR in assembly tasks. Therefore, the objective of this paper is to fill this gap by providing a state-of-the-art summary of mainstream studies of AR assembly, highlighting the research concentration and paucity, summarizing the issues and challenges, suggesting solutions to these issues and challenges, and forecasting future research and development trends. The remainder of this review is organized as follows. Section 2 provides an overview of computer-aided technologies in mechanical assembly. Section 3 reviews AR assembly applications. Issues that limit the development of AR assembly systems and future trends are discussed in Section 4. Section 5 summarizes and concludes the review and examines the future trends.

2 Overview of computer-aided technologies in mechanical assembly

2.1 Background of traditional computer-aided assembly technology

Assembly design, planning and operation facilitation is a critical step and should be considered at all stages of the product design process. Traditional computer-aided assembly technologies focus on assembly process planning, where details of assembly constraints and relationships of different parts are formalized [12]. Expert assembly planners typically use modern commercial CAD systems, in which the 3D CAD models of the components to be assembled are displayed on computer screens to help the planners determine the components’ geometric characteristics and assembly features for a new product [13]. Although this is the most commonly used approach, it has limitations. Firstly, users are required to identify constraint information between the mating parts by selecting the mating surfaces, axes and/or edges manually, which is a complicated task for complex assemblies. It is difficult to foresee the impact of individual mating specifications on other aspects of the assembly process with such part-to-part specification techniques. Secondly, most CAD software lacks direct interaction between users and the components, such that users cannot manipulate the components naturally with their hands.

Virtual reality (VR) technology plays a vital role in simulating advanced 3D human computer interactions, especially for mechanical assembly [13], allowing users to be completely immersed in a synthetic environment. Many VR systems have been proposed successfully to aid assembly activities, e.g., CAVE [14, 15], IVY [16], Vshop [17], VADE [18, 19, 20, 21, 22], HIDRA [23, 24], and SHARP [25, 26]. However, even though VR systems are designed to simulate the assembly process in a realistic fashion, they still have limitations. Firstly, because the physical relations between the users and the virtual environment are eliminated, the users’ realistic experience is lost, which decreases the fidelity and credibility of the VR assembly process. Furthermore, the VR experience may not be highly convincing, as it is not easy to fully and accurately model the actual working environments which are critical to the manufacturing processes. Although there are advanced approaches to accelerate the computation process (e.g., graphics processing unit (GPU) based acceleration [27]), real-time performance is still a challenge.

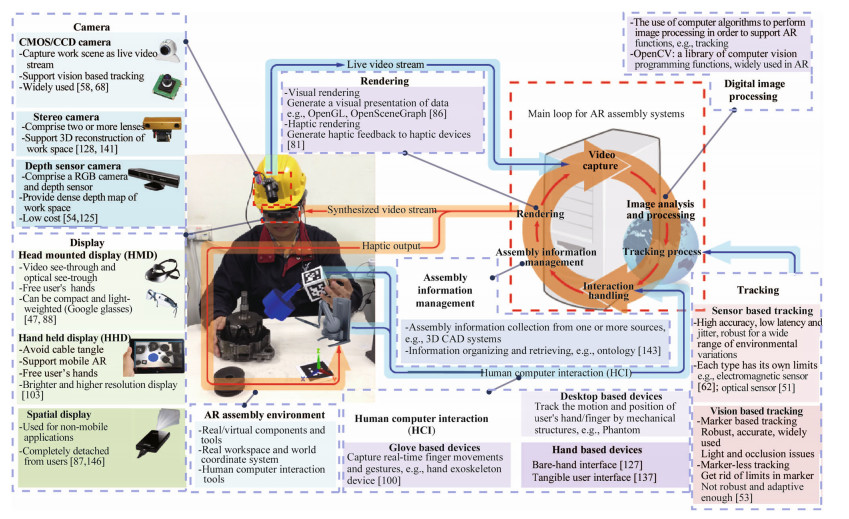

2.2 Typical architecture of AR assembly system

AR technology can overcome these limitations as it does not need the entire real world to be modeled [9], thus reducing the high cost of constructing a fully immersive VR environment and the inconvenience of some of the commercial CAD systems. More importantly, AR enhances the interaction between the systems and the users by allowing them to move around in the AR environment and manipulate the objects naturally. Therefore, AR technology has emerged as one of the most promising approaches to facilitate mechanical assembly processes. A typical AR assembly system is illustrated in Fig. 1.

Fig. 1 Typical AR assembly environment

In Fig. 1, six functional modules, i.e., video capture, image analysis and processing, tracking process, interaction handling, assembly information management and rendering, are illustrated as the kernel modules that constitute the main loop of an AR assembly system. In addition, for each important functional module, e.g., display or camera, there is a pop-out note to illustrate its characteristics, disadvantages, classifications, etc. Important pathways for data transfer on which the six kernel modules rely are shown in color, e.g., input data pathways are in blue, while output data pathways are in orange.
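The main loop formed by the six kernel modules can be pictured with a minimal sketch. The function names mirror the module names in the text, but the strictly linear chaining, the dictionary used as shared state, and all placeholder strings are assumptions made for illustration only; the actual data pathways in Fig. 1 may differ.

```python
from typing import Callable, Dict, List

# Shared per-frame state passed from module to module (hypothetical structure).
State = Dict[str, str]


def video_capture(state: State) -> State:
    # Placeholder for grabbing an image from the camera.
    state["frame"] = "camera frame"
    return state


def image_analysis_and_processing(state: State) -> State:
    # Placeholder for extracting features (markers, edges, ...) from the frame.
    state["features"] = "features from " + state["frame"]
    return state


def tracking_process(state: State) -> State:
    # Placeholder for estimating the camera/object pose from the features.
    state["pose"] = "pose from " + state["features"]
    return state


def interaction_handling(state: State) -> State:
    # Placeholder for interpreting the user's input in this frame.
    state["event"] = state.get("user_input", "no input")
    return state


def assembly_information_management(state: State) -> State:
    # Placeholder for selecting the assembly information to present.
    state["instruction"] = "instruction for event: " + state["event"]
    return state


def rendering(state: State) -> State:
    # Placeholder for drawing the selected information at the tracked pose.
    state["overlay"] = state["instruction"] + " @ " + state["pose"]
    return state


# Order follows the enumeration of kernel modules in the text.
KERNEL_MODULES: List[Callable[[State], State]] = [
    video_capture,
    image_analysis_and_processing,
    tracking_process,
    interaction_handling,
    assembly_information_management,
    rendering,
]


def run_one_iteration(user_input: str = "no input") -> State:
    """Run one pass of the (assumed linear) main loop for a single frame."""
    state: State = {"user_input": user_input}
    for module in KERNEL_MODULES:
        state = module(state)
    return state
```

Calling `run_one_iteration("next step")` returns a state whose `"overlay"` entry combines the selected instruction with the tracked pose; a real system would repeat this pass for every captured frame.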

2.3 Survey

In this survey, the articles were searched from the following online databases, namely, Google Scholar, Engineering Village, ScienceDirect, IEEE Xplore, SpringerLink, ACM Digital Library, and Web of Science. Each paper on AR assembly published from 1990 to 2015 was reviewed by the authors to determine its eligibility and level of relevance based on the following criteria. The articles must report AR work in the area of assembly operations to ensure relevance. Articles that concentrate solely on AR rather than its applications in assembly are not included. For example, AR papers on tracking theory or view management are out of scope. Among the selected articles (119 papers), after evaluation, 91 of them (primarily published from 2005 to 2015) are considered recent pertinent work, which will be discussed in detail in Section 3. For the early articles before 2005, only some important works are included. Considering the space limit of a paper, recent works that represent the most up-to-date advances in AR assembly are preferred in this paper.

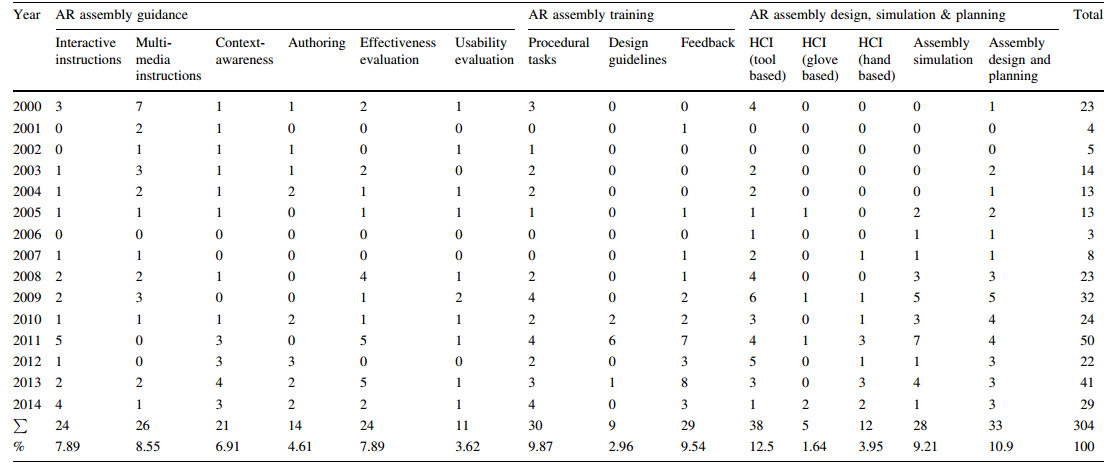

Considering that AR assembly is an emerging area and there is not yet a significant body of journal articles, the authors looked into the leading assembly and manufacturing research journals and conferences for quality assurance purposes, e.g., International Journal of Advanced Manufacturing Technology (IJAMT), International Journal of Production Research (IJPR), Computer Aided Design (CAD), The International Academy for Production Engineering (IAPE), etc. In order not to miss relevant articles in other domains, the authors also searched articles in pure AR technical journals and conferences, e.g., IEEE International Symposium on Mixed and Augmented Reality (ISMAR), HCI conferences, etc., which might have a small number of relevant papers dealing with AR for assembly tasks. Table 1 summarizes the major worldwide research effort using AR in mechanical assembly.

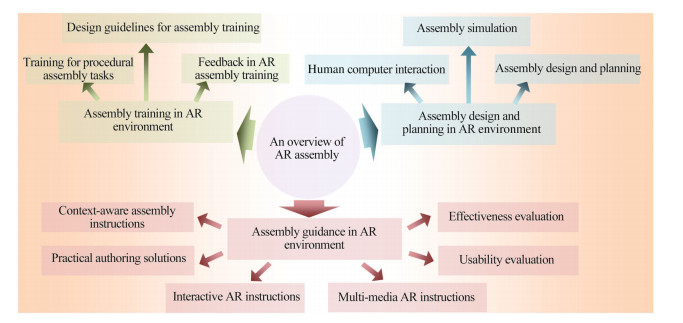

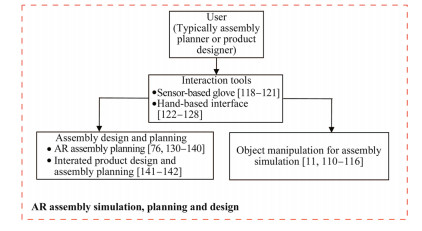

In this paper, the authors aim to provide a concise overview of the technical features, characteristics and broad range of applications of AR assembly systems, which will hopefully serve as a useful introduction and reference guide for scientists interested in studying and applying this important technology. After a summary of the typical AR assembly environment and the characteristics/disadvantages of the most representative systems in the AR assembly community, the applications in modern assembly technology are roughly categorized into three sections based on the lifecycle of product development, i.e., design and planning, operation guidance, and training. Each section contains a brief overview of the key features and major applications, with reference to seminal publications and more comprehensive specialized reviews provided for more in-depth reading. The discussion is interspersed with more detailed descriptions of selected recent studies, which demonstrate the current state of the art and future trends that continue to find new and exciting applications across assembly and other manufacturing technologies (see Fig. 2).

Fig. 2 Major applications of AR technology in assembly tasks

During the evolution of AR assembly research, a variety of related research topics have been developed and discussed extensively. In this paper, past AR assembly research has been grouped into three main categories and twelve subcategories as shown in Table 2. Table 2 shows the number of papers published in the selected journals and conferences in each of these categories over time, and the final total percentage breakdown of the papers. It is noted that some papers discuss several topics and are not limited to one category.

As shown in Table 2, the main categories which constitute the whole AR assembly work include AR assembly guidance (120 articles, 39.47%), AR assembly training (68 articles, 22.37%), and AR assembly design, simulation and planning (116 articles, 38.16%). The category of AR assembly guidance can be divided into six sub-categories. Most of the articles that fall into this category are related to the topics of “Interactive instructions” (24 articles, 7.89%) and “Multi-media instructions” (26 articles, 8.55%). These research topics have a relatively steady number of publications per year. However, the number of papers on these topics may decrease gradually with the growing maturity of the field. Researchers also focus on context-awareness (21 articles, 6.91%), authoring (14 articles, 4.61%), effectiveness evaluation (24 articles, 7.89%), and usability evaluation (11 articles, 3.62%), which reflect more emerging research topics. These topics have been under-rated, with few publications in the past; however, they are attracting greater attention recently. For instance, since AR authoring for an enterprise requires heavy investment, which can lead to redundant pathways, researchers are paying more attention to the automatic creation of context-aware AR contents for assembly operations by engineers and authoring staff as well as by the end users (three articles in 2012, four articles in 2013, three articles since 2014). Another example is evaluation methods for AR assembly; after almost two decades of research and development work, the framework to assess AR assembly systems is still unclear. The benefits achieved by the introduction of AR are still elusive without benchmarks of current procedures and objectives for an assembly task. Therefore, a growing number of researchers have begun to focus on the relevant topics of evaluation work, i.e., effectiveness evaluation and usability evaluation.

The category of AR assembly training can be divided into three sub-categories. Most of the articles that fall into this category are related to the topics of “Assembly training for procedural tasks” (30 articles, 9.87%) and “Feedback for user’s action” (29 articles, 9.54%). Nine articles (2.96%) concern “Design guidelines”, i.e., the development of guidelines that can form the fundamentals of an effective AR assembly training system. This topic has the potential to attract increasing attention from researchers because the design guidelines can be adapted and customized for a wide range of AR assembly training systems.

The category of AR assembly design, simulation and planning can be divided into three sub-categories, namely, “HCI” (55 articles, 18.09% in total; tool-based: 38 articles, 12.5%; glove-based: 5 articles, 1.64%; hand-based: 12 articles, 3.95%), “Assembly design and planning” (33 articles, 10.9%) and “Assembly simulation” (28 articles, 9.21%). “HCI” is one of the most popular research topics, and the papers related to “HCI” account for almost one-fifth of all the research works in AR assembly. This is reasonable because HCI is one of the fundamental enabling technologies to make AR assembly more useful, functional and reliable, and is still a popular research topic with many fertile areas for research, e.g., bare-hand interfaces. All three sub-categories of “AR assembly design, simulation and planning” are areas where AR technology can show the greatest utility and highest potential for positive impact in assembly tasks.
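The percentage breakdown quoted above can be checked with a few lines of arithmetic. The sketch below assumes that each percentage is the category count divided by the sum of the three main-category counts (120 + 68 + 116 = 304); this denominator is inferred from the quoted figures rather than stated in the text, and it counts category assignments rather than unique papers, since a paper may fall into several categories.

```python
# Counts taken from the text; variable and function names are illustrative.
MAIN_CATEGORY_COUNTS = {
    "AR assembly guidance": 120,
    "AR assembly training": 68,
    "AR assembly design, simulation and planning": 116,
}

# Sub-category counts of the guidance category, also taken from the text.
GUIDANCE_SUBCATEGORY_COUNTS = {
    "Interactive instructions": 24,
    "Multi-media instructions": 26,
    "Context-awareness": 21,
    "Authoring": 14,
    "Effectiveness evaluation": 24,
    "Usability evaluation": 11,
}


def percentage_shares(counts, total):
    """Return each count as a percentage of `total`, rounded to two decimals."""
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


# Assumed denominator: the sum of all main-category assignments.
TOTAL_ASSIGNMENTS = sum(MAIN_CATEGORY_COUNTS.values())

MAIN_SHARES = percentage_shares(MAIN_CATEGORY_COUNTS, TOTAL_ASSIGNMENTS)
GUIDANCE_SHARES = percentage_shares(GUIDANCE_SUBCATEGORY_COUNTS, TOTAL_ASSIGNMENTS)
```

Under this assumption the computed shares reproduce the quoted values (for example 120/304 ≈ 39.47%), and the six guidance sub-category counts add up to the 120 articles of the guidance category.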

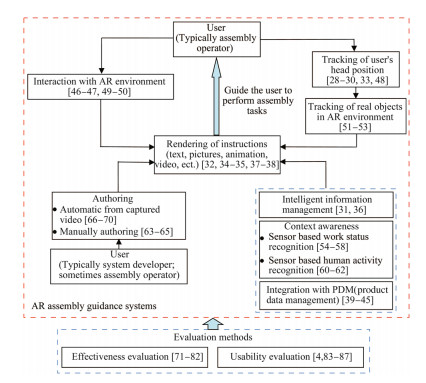

3.2 AR assembly guidance

The relations between the research topics pertinent to AR assembly guidance are shown in Fig. 3. The details of these research topics will be described in this section.

Fig. 3 Topics related to AR assembly guidance

References [28, 29, 30] proposed the first implementation of a classic AR assembly system by combining head position sensing and real world registration with the HMD, such that a computer-produced diagram containing pertinent information can be superimposed and stabilized on a specific position on a real-world object. Since then, many research works on AR assembly guidance have been reported [31, 32, 33, 34, 35]. Molineros and Sharma [36] proposed an effective information presentation scheme using assembly graphs to control the augmented instructions. As can be seen, instead of replacing manpower with machines to improve work quality, AR enables operators to perform tasks which require a higher level of qualification and even allows them to learn by doing the work. A few AR assembly systems have been applied in the industrial context [37, 38]. Researchers [39, 40, 41, 42] proposed a series of AR assembly systems that focused on bringing an AR assembly system into a real factory setting by integrating design for assembly software tools (CAD/PDM/PLM). Tumkor et al. [43] assessed the potential of implementing AR as an engineering educational tool for assembly. Recently, Makris et al. [44] proposed a system which integrated AR technologies with the use of CAD data to provide visual instructions for assembly tasks. Sanna et al. [45] proposed a mobile AR guidance system which aimed to support novice assembly operators in performing assembly tasks.

The topic of AR based multi-media assembly guidance has been investigated as a classic problem for a long time. However, although much effort has been devoted to this topic, some of the AR assembly guidance systems seem to focus solely on providing step-based instructions for the user, overlooking the user’s need for timely guidance in assembly operations. In addition, few works could keep track of the assembly status in real time and recognize errors and completion states automatically at each step.

AR can provide an intuitive interaction experience to the users by seamlessly combining the real world with various computer-generated contents, and therefore many researchers focus on the development of interactive AR guidance systems for assembly processes [46, 47]. Sukan et al. [48] presented an interactive system called ParaFrustum to help users view a target object from appropriate viewpoints in context, such that the viewpoints could avoid occlusions. Posada et al. [49] described how visual computing methods in AR could contribute to assembly process guidance under the new industrial standard. Zhang et al. [50] proposed a method to apply RFID technology to assembly guidance in an augmented reality environment, aiming at providing just-in-time information rendering and intuitive information navigation for the assembly operator. Henderson and Feiner [51] presented the first AR system to aid users in the psychomotor phase of procedural tasks [52]. The system provides dynamic and prescriptive instructions in response to the user’s on-going activities. In order to enhance man-machine communication with more efficient and intuitive information presentation, Andersen et al. [53] proposed a proof-of-concept system based on stable pose estimation by matching captured image edges with synthesized edges from CAD models for a pump assembling process.

In summary, previous works show the potential of AR assembly guidance systems with multi-media and interactive instructions in improving the performance of the users. However, limitations exist in current AR systems when assisting complex assembly processes, and the issues include time-consuming authoring procedures, integration with enterprise data, intuitive user interfaces, etc. Future work should examine the appropriateness of AR guidance for more complex, multi-step assembly tasks. In addition, although interactive AR assembly guidance has improved on traditional step-by-step guidance systems by providing pertinent information according to the user’s requirements, the interaction scheme may disturb or interrupt the user’s on-going assembly task. Therefore, it is imperative to work on detecting and recognizing the users’ actions in order to provide industrially robust, hands-free interaction.

3.2.2 Context-awareness and authoring

Aiming at improving labor efficiency and accuracy, a context-aware AR assembly system keeps track of the status of users in real time, automatically recognizing manual errors and completion at each assembly step and displaying multi-media instructions corresponding to the recognized states [54]. The ARVIKA project [55] provides a context-sensitive system to enhance the real field of vision of a skilled worker, technician or development engineer with timely pertinent information. Rentzos et al. [56] proposed a context-aware AR assembly system, which integrated the existing information and knowledge available in CAD/PDM systems based on the product and process semantics, for real-time support of the human operator. Zhu et al. [57] proposed a wearable AR mentoring system to support assembly and maintenance tasks in industry by integrating a virtual personal assistant (VPA) to provide natural spoken language based interaction, recognition of users’ status, and position-aware feedback to the user. Recently, there have been studies on the recognition and tracking of the pose of the objects in an assembly such that the corresponding information can be retrieved from the system. For instance, Radkowski and Oliver [58] proposed a recognition method based on SIFT [59] features to distinguish multiple circuit boards and to identify the related feature map in real time during circuit assembly tasks. In order to implement context-aware AR systems, 3D models of workspace scenes are often required, whether for registration of the camera pose and virtual objects, handling of occlusion, or authoring of pertinent information. However, there seems to be a lack of discussion on the topic of in situ 3D working scene modeling in some of the proposed context-aware AR systems.
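The behavior described at the start of this subsection (tracking the current step, recognizing errors and completion, and showing the instruction that matches the recognized state) can be sketched as a small state machine. This is a hypothetical illustration, not the design of any cited system: the `Step` and `StepTracker` names, the idea of identifying a step by a single detected part, and the message strings are all assumptions, and the recognition itself (e.g., from camera images) is outside the sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Step:
    name: str
    expected_part: str   # part the recognizer should report when the step is done
    instruction: str     # instruction shown while this step is pending


class StepTracker:
    """Tracks the current assembly step from a stream of recognized parts."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = list(steps)
        self.index = 0  # index of the step currently awaiting completion

    @property
    def done(self) -> bool:
        return self.index >= len(self.steps)

    def observe(self, detected_part: Optional[str]) -> str:
        """Update the state from one recognition result and return the message to display."""
        if self.done:
            return "Assembly complete."
        step = self.steps[self.index]
        if detected_part is None:
            # Nothing recognized yet: keep showing the current instruction.
            return step.instruction
        if detected_part == step.expected_part:
            # Step completed: advance and show the next instruction, if any.
            self.index += 1
            if self.done:
                return "Assembly complete."
            return self.steps[self.index].instruction
        # A part was recognized, but not the expected one: report an error.
        return (
            f"Error: expected {step.expected_part}, saw {detected_part}. "
            f"{step.instruction}"
        )
```

For example, with two steps expecting `"base"` and then `"cover"`, observing `"cover"` first yields an error message that repeats the first instruction, while observing `"base"` advances the tracker to the second instruction.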

Researchers have investigated the impact of context-aware AR on the performance of human operators, as well as on reducing their fatigue during assembly tasks by providing real-time ergonomic feedback. Chen et al. [60] proposed an adaptive guiding scene display method in order to display the synthesized guiding scene on a suitable region from an optimal viewpoint. Damen et al. [61] proposed a real-time AR guidance system to facilitate the sensing of activities and actions within the immediate spatial vicinity of the user. Vignais et al. [62] proposed a system for the real-time assessment of manual assembly tasks by combining a sensor network and AR technology to provide real-time ergonomic feedback during the actual work execution.

The above systems have been demonstrated to be useful based on user studies. However, in order to be more robust when implemented in complex industrial tasks, the systems need to be task-adaptive, i.e., suited for a wide range of different tasks, because it is time-consuming to build “ad hoc” systems for tasks with different scenes and different task contents. Moreover, the implementation issues of sensors should be considered. For example, the magnetic field sensed by the sensors may be disturbed by the presence of metal objects in an assembly, which makes it difficult to obtain stable and accurate pose results.

Practical authoring solutions are another popular research topic pursued by researchers who have attempted to support complex assembly tasks with AR-integrated systems, in order to minimize the costs of specialized content generation [63, 64, 65]. Petersen and Stricker [66], and Mura et al. [67] reported proof-of-concept systems to create interactive AR manuals automatically (for assembly, maintenance, etc.) by segmenting video sequences and live-streams of manual workflows into their constituent single tasks. Petersen et al. [68] extended these approaches to extract the required information from a moving camera which could be attached to the user’s head, providing “ad hoc”, in-situ documentation of workflows during the execution. Bhattacharya and Winer [69] presented a method to generate AR work instructions in real time. Mohr et al. [70] proposed an AR authoring system to transfer typical graphical elements in printed documentation (e.g., arrows indicating motions, structural diagrams for assembly relations, etc.) to AR guidance automatically.

AR assembly authoring systems have been enabled to cope with manual assembly operations efficiently; however, the use of AR authoring in complicated industrial areas remains imperative and needs further development, such that AR authoring systems can become smart enough to understand a user’s intent and generate timely context-aware guidance during assembly operations without manual input.

3.2.3 Evaluation of AR assembly guidance

The evaluation studies of AR applications can be roughly classified into two types, namely, effectiveness evaluation and usability evaluation [71]. Effectiveness evaluation concerns the capability of the system to produce a desired result for a certain task or activity, e.g., improvement of productivity, assembly performance time, number of errors, etc. Odenthal et al. [72] presented a comparative study of the performance of a human operator in the event of an assembly error under head-mounted and table-mounted augmented vision systems (AVS). Hou et al. [73] reported an empirical analysis of the use of AR technology for guiding workers in the field of construction assembly and evaluated the effectiveness of AR-based animation in facilitating piping assembly. The results of these works indicated a positive effect when using the AR system in assembly tasks. Besides that, several comparative studies have been published that compare video see-through and optical see-through AR displays with traditional assembly instruction manuals or computer-aided instruction to demonstrate the usefulness and intuitiveness of the AR conditions [74, 75, 76, 77]. Several studies compared AR assembly systems with other types of assembly facilitation systems, e.g., VR systems, user manual based systems and audiovisual tool based systems [78, 79, 80]. Recently, Gavish et al. [81] reported their empirical evaluation of the efficiency and effectiveness of AR and VR systems within the scope of the SKILLS Integrated Project for industrial maintenance and assembly (IMA) task training to demonstrate the usefulness of the AR platform. Radkowski et al. [82] presented an analysis of two factors that might affect the effectiveness of AR assembly training systems, namely, the complexity of the visual features and the complexity of the product.

Usability evaluation involves investigating the ease of use and learnability of the AR assembly system based on needs analysis, user interviews and expert evaluations. In order to test the usability of AR technology in assembly tasks, Henderson and Feiner [4, 83] presented a within-subject controlled user study comparing three setups for assembly and maintenance operations of a military vehicle under field conditions. Gattullo et al. [84] proposed a set of experiments to test variables affecting text legibility, with a focus on deriving criteria to assist designers of AR interfaces. Recently, researchers have begun to focus the usability evaluation on the cognitive load for users [85, 86]. The cognitive activities concern those involved in the reasoning and volitional processes that go on between perception and actual actions [71]. Stork and Schubo [87] investigated human cognition in AR production environments to study the benefits achieved from AR in assembly tasks. Hou et al. [86] proposed a set of initial experiments to assess the discrepancies between traditional guidance and AR in order to evaluate the efficiency of learning or training for people involved in the assembly of complex systems.

For both effectiveness and usability evaluation, there is a huge diversity among these studies with respect to interaction methods, rendering of virtual instructions, and level of complexity of the product. Thus, the different factors that influence the effectiveness and usability of an AR assembly system are still obscure. Previous research works indicate that the use of AR for a specific “ad hoc” assembly task is beneficial; however, few works focus on the evaluation of these systems for a variety of assembly tasks varying in different factors. Therefore, a general evaluation framework and method for AR systems suited for a wide range of assembly tasks should be investigated to reflect the characteristics and effectiveness of AR systems (e.g., portability, visual intuitiveness, cognitive load, etc.). In addition, since emerging AR systems and services introduce a new level of complexity with respect to usability evaluation, researchers tend to become more interested in evaluating novel AR interfaces, especially how these interfaces can improve human resource capabilities and the attendant human factors.

3.3 AR assembly training

Training activities for technicians in the acquisition of new assembly skills play an important role in industry. AR has been shown to be effective in supporting assembly training in some particular industrial contexts due to its time-efficiency, high effectiveness and the assurance that technicians achieve an immediate capacity to accomplish the task [88, 89, 90]. The relations between the research topics pertinent to AR assembly training are shown in Fig. 4.

Fig. 4 Topics related to AR assembly training

Research in this area has largely involved procedural tasks where a user follows visual or other multi-modal cues to perform a series of steps, with the focus on maximizing the user’s efficiency while using the AR system [91, 92]. Fiorentino et al. [93] proposed an AR assembly training system based on large screen projection technology for the rendering of AR instructions. Liu et al. [94] proposed an AR based system for the assembly of narrow cabin products to promote the efficiency of assembly training and guidance by creating an information-enhanced AR assembly environment. The European project Service and Training through Augmented Reality (STAR) [95] was developed for industrial training. It was reported that in this project the test subjects completed the assembly task significantly faster and with fewer errors due to the employment of spatially-registered AR. While there has been much research into the use of AR to aid assembly training, most systems guide the users through a fixed series of steps and provide minimal feedback when the users make a mistake, which is not conducive to learning. To address this issue, Gorecky et al. [96] proposed two novel techniques for cognitive aid and training in the European project COGNITO, namely, active recognition using sensor networks and workflow recognition. The system can analyze and record assembly workflows automatically by observing experienced technicians to build up a system-internal understanding of assembly processes. Matsas and Vosniakos [97] proposed an interactive and immersive simulation environment to facilitate training of simple manufacturing tasks with information feedback, e.g., safety issues and situational information.

Feedback is considered an important factor in the training process and has received much attention [98-100]. Re and Bordegoni [101] presented a monitoring system for training, such that supervisors could check the assembly/maintenance activity from a remote display and provide feedback to the users whenever necessary. Kruger and Nguyen [102] proposed an approach to compute the positions of each part of a worker’s body from captured depth images and to analyze the ergonomic scores. Webel et al. [103] investigated the implementation of tactile feedback and location-dependent AR information (adaptive visual aids) during the AR training process to verify the usefulness of the multimodal AR training system. Westerfield et al. [104, 105] integrated intelligent tutoring systems (ITSs) with AR interfaces to provide customized instruction to each trainee. Researchers have also focused on guidelines for the design of AR assembly training systems. Chimienti et al. [106] suggested a general procedure for the correct implementation of AR assembly training for unskilled operators. Besides that, an interdisciplinary study of AR-based assembly training using cognitive science and psychology has been published by Webel et al. [107, 108] and Gavish et al. [109].

A major challenge in industry for AR assembly training is to handle its complexity in terms of the number of components, safety, assembly difficulties, and rapid updating of assembly skills, even though the procedures of assembly skills training are relatively static and predictable. In particular, assembly methods are adapted to various products, and they depend on the mating and connection relations between components. Therefore, in future, the adaptivity of various methods and difficulty levels of assembly operations would be expected to be integrated into the AR assembly training system to enhance a user’s comprehension, and the compatibility and optimization of such integration should be investigated, such that the new AR assembly training systems can handle current trends of agile product development and manufacturing.

3.4 AR assembly process simulation and planning

Among the topics in AR assembly simulation and planning (see Fig. 5), object manipulation has been widely studied [11, 110, 111]. Marcincin et al. [112] implemented a set of open source tools to track the position and orientation of the assembly operation working base with a special gyroscopic head to measure the base’s rotation, and a pantograph mechanism to measure the tilting motions. Based on the position data obtained, the authors utilized open source software to manage the visualization and simulation of the virtual objects in the assembly process, reacting flexibly to the occurrence of events and situations. The authors also developed a system which allows users to see important information about the exact position and orientation of a single assembly element during AR assembly [113]. Woll et al. [114] proposed an AR assembly simulation system to aid the assembly of a car power generator, employing AR technology to let the user experience the spatial relationship between the components, thus enhancing the transition from assembly instructions into practical skills. Researchers have worked on the improvement of the 3D spatial feeling in AR assembly simulation by employing geometric relations between objects; however, many of the current AR assembly simulation systems seem to be limited to the interaction between virtual objects and ignore the important issue of incorporating real objects into the AR environment to provide the core benefits of AR, which are haptic feedback, natural interaction and better manipulation intuition. Wang et al. [115] proposed a pipeline to incorporate real objects, e.g., tools and parts, in the AR assembly workspace, such that a user could interact with several real objects among virtual objects. Nevertheless, the proposed system is a proof-of-concept prototype with several limitations, e.g., limitations of colored marker tracking and limited tracked volume. Wang et al. [116] proposed a prototype to realize the interaction between real and virtual objects. However, its scope of application in industry is limited, as it assumes that the position and orientation of the real object as well as the pose of the camera are fixed during AR assembly.

Fig. 5 Topics related to AR assembly simulation, planning and design

Much effort has been reported to achieve natural and intuitive HCI in AR assembly. Velaz et al. [117] conducted a set of experiments to compare the influence of the interaction approaches (including mouse, haptic device, and marker-less hands 2D/3D motion capture system) on the virtual object manipulation of assembly tasks. Theis et al. [118], Valentini [119] and Wei and Chen [120] reported interactive virtual assembly systems based on the use of a sensor-based glove. Lee et al. [121] presented a two-handed tangible AR interface, which was composed of two tangible cubes tracked by a marker-based tracker, to provide two types of interactions based on familiar metaphors from real object assembly. These HCI methods can be accurate and intuitive, yet the users may suffer from the high cost and cumbersomeness of the devices. Recently, hand-based HCI has become a popular research topic in AR assembly simulation [122, 123]. Arroyave-Tobon et al. [124] proposed a hand-gesture-based interaction tool named AIR-MODELLING to help the designer create virtual models of products in an AR environment. Radkowski and Stritzke [125] reported a method based on Kinect to observe both hands of an assembly operator, such that the system can support the operator to select, manipulate and assemble 3D models of mechanical systems. Ong and Wang [126] and Wang et al. [127] presented intuitive and easy-to-use interaction approaches in an AR assembly environment based on bare-hand interaction. Wang et al. [128] proposed a methodology to integrate 3D bare-hand interaction with an interactive manual assembly design system for the users to manipulate the virtual components in a natural and effective manner. However, since the majority of current hand-based approaches require users to undertake specific simple actions to implement the interaction with the AR environment, these approaches seem to lack intuitiveness. Furthermore, in the case of hand-gesture-based HCI interfaces, only limited types of gestures have been used and the gestures are designed by researchers for optimal recognition rather than for naturalness, meaning that the mappings between gestures and commands to the computer are often arbitrary and unintuitive [129].

AR assembly applications can be created to enhance the assembly design and planning process [130, 131]. Pan et al. [132] proposed an automatic assembly/disassembly simulation method for the accurate path planning of virtual assembly. Some works were reported to combine manual assembly guidance with assembly planning to improve the whole assembly planning and operation process [76, 133, 134]. Ong et al. [135] and Pang et al. [136] presented an AR-based methodology to integrate assembly product design and planning (PDP) activities with workspace design and planning (WDP). Aided by the WDP information feedback in real time, the designers and engineers could make better decisions for assembly design. Fiorentino et al. [137-140] presented a series of works describing an assembly design review workspace that acquires gesture commands based on a combination of video and depth cameras. Recently, as a new trend for AR assembly research, Wang et al. [141] and Ng et al. [142] presented an AR system that integrates design and assembly planning to support ergonomics and assembly evaluation during the early development stage of a product.

The implementation of assembly design and planning in the AR environment is promising and a growing number of researchers have studied this area; however, some limitations still exist in the state-of-the-art systems in this area, e.g., accuracy, intuitiveness and dependency on CAD software. To address these issues, one solution is to construct an integrated AR assembly platform which can accurately model the interaction between mating components in an assembly. In addition, it is important to propose an information management method which extracts information from the CAD system, and stores and reasons about the assembly relations independently with enhanced inference functions.

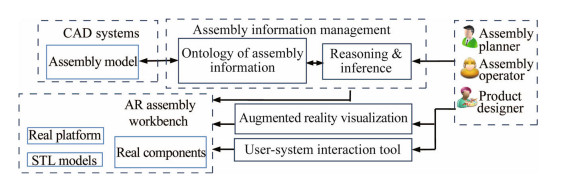

Figure 6 shows an integrated AR assembly environment (IARAE) system developed at the National University of Singapore [143]. IARAE consists primarily of four functional modules, namely, assembly information management (AIM) module, augmented reality visualization (ARV) module, user-system interaction tool (USIT) and AR assembly workbench (ARAW). The AIM module stores all the assembly information extracted from CAD models based on ontology. The information can be accessed and modified during an AR assembly operation independent of CAD systems. In the USIT module, users, primarily assembly planners and/or product designers, can manipulate both real and virtual components to interact with other components to verify the design. The ARV module supports the tracking and rendering of real components. The user performs AR assembly operations in the ARAW, which is the assembly task workspace for the users. In this environment, computer-generated graphics are superimposed onto a real platform to interact with real components, interaction tools and the surroundings in order to enhance the user’s perception when simulating and assessing an assembly.

Fig. 6 System framework of IARAE [143]

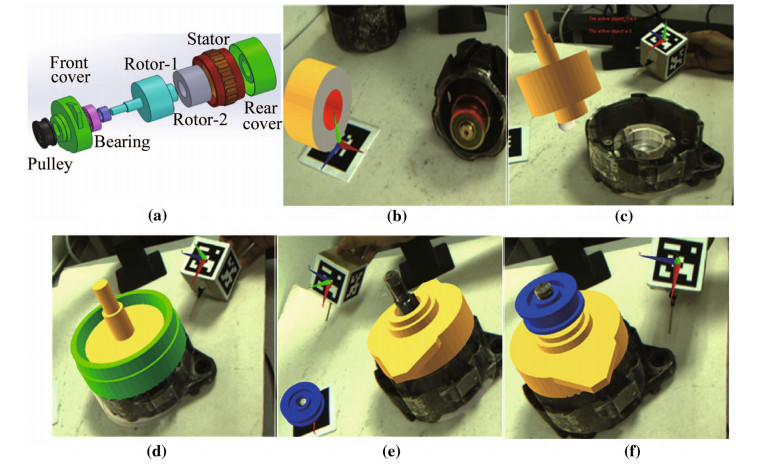

One advantage of this system over existing VR/AR assembly simulation and planning systems is a contact motion simulation strategy to analyze the interaction between real and virtual components based on the reasoning functions and assembly information ontology to model all the possible motion directions of the virtual components. The system can identify and deal with contacts automatically when the virtual components interact with real components in an AR assembly. In order to support the system to access and modify the information instances during an AR assembly process, a novel assembly information management approach is developed based on the reasoning functions of ontology. In addition, a hybrid marker-less tracking method is presented to support the interaction between real and virtual components. Figure 7 is a case study of the AR assembly process. A marker is fixed on the assembly workbench to determine the world coordinate system, so that the camera can track its own pose and position with respect to the world coordinate system. The marker does not affect the performance of the marker-less tracking of the real component.
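The role of the workbench marker can be illustrated with a minimal sketch of rigid-transform composition. It is not the implementation of Ref. [143]; the 4x4 poses, the helper names (`mat_mul`, `invert_rigid`, `rot_z`) and the numerical values are assumptions chosen for illustration. If the marker defines the world frame, the camera pose in the world is the inverse of the marker pose seen by the camera, and the pose of a real component tracked in the camera frame can then be expressed in world coordinates.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def rot_z(theta, tx, ty, tz):
    """Rigid transform: rotation by theta about z, plus a translation."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

# Hypothetical per-frame tracker outputs, both expressed in the camera frame.
T_cam_marker = rot_z(math.pi / 2, 0.10, 0.00, 0.50)     # marker tracker
T_cam_component = rot_z(math.pi / 2, 0.10, 0.20, 0.50)  # marker-less tracker

# The marker defines the world frame, so the camera pose in the world
# is the inverse of the marker pose in the camera frame.
T_world_cam = invert_rigid(T_cam_marker)

# Pose of the real component in world (workbench) coordinates.
T_world_component = mat_mul(T_world_cam, T_cam_component)
```

With these assumed values the component ends up 0.2 m along the world x axis with no relative rotation, independent of where the camera is, which is why a fixed marker gives virtual and real components a common reference frame.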

Fig. 7 AR assembly processes of an alternator assembly [143] (a) components list; (b) assembly of virtual rotor-2; (c) manipulation of virtual rotor-1; (d) assembly of virtual stator; (e) manipulation of virtual pulley; (f) assembly of virtual pulley

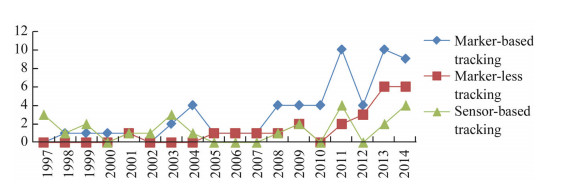

One of the crucial tasks in AR assembly is to develop a real-time tracking system suited for industrial scenarios, whose characteristics (e.g., poorly textured objects with lots of smooth surfaces, strong light variation, and very small objects) challenge most of the techniques available [144]. The trends of the implementation of tracking methods in AR assembly systems are shown in Fig. 8. As shown in Fig. 8, marker-based tracking remains the most widely used tracking approach in AR assembly. Many researchers implement the marker-based tracking approach in AR assembly systems due to its accuracy, flexibility and ease of use [41, 88, 89]. Wang et al. [116] used a cardboard with a marker to create a virtual panel. Wang et al. [115] explored tracking from non-square visual markers with a color-based marker to track the tool models so that the operators could manipulate the scanned, articulated real objects naturally. The use of markers is very common in AR assembly because it increases the robustness and improves the computation efficiency due to the stable and fast detection of markers in the entire image sequence. However, marker-based tracking approaches suffer from marker occlusion, which may cause incorrect registration in the field of view [64]. In addition, many industrial components are too small for markers to be attached.

Fig. 8 Trends for tracking approaches implementation in AR assembly

Sensor-based tracking is another reliable tracking approach, which was widely implemented in AR assembly systems even before marker-based tracking was implemented (see Fig. 8). The tracking principle is based on different types of sensors, e.g., magnetic, acoustic, inertial, optical and/or mechanical sensors, whereas vision-based tracking relies on computer vision techniques. Generally, sensor-based trackers used in AR applications need to provide high accuracy, low latency and low jitter, and robust operation under a wide range of environmental variations [9]. Each type of tracker has its own advantages and disadvantages, e.g., optical sensors have high accuracy, flexibility and low latency, but are expensive, heavy and require extensive calibration.

Marker-less tracking has recently been deemed a desirable successor to marker-based tracking. Simultaneous localization and mapping (SLAM) is an established technique to build up a map within an unprepared environment, while at the same time keeping track of the current location based on the extracted scene features [145]. This approach can provide robust tracking for AR assembly systems even if the original fiducials are not visible in the view. However, since SLAM systems do not consider updating the 3D structure corresponding to dynamic objects, the target working scene must be kept stationary; otherwise the recovered 3D models will become obsolete rapidly, and the system has to start from scratch for each frame of the captured video. Many other scene feature-based tracking techniques have also been applied, such as those reported in Refs. [47, 53, 146]. A binary descriptor is proposed in Ref. [147] to improve natural feature tracking by employing a more complete description compared with BRIEF [148] and a multiple gridding strategy to capture the structure at different spatial granularities. Petit et al. [149] proposed a tracking strategy by integrating classical geometrical and color edge-based features in the pose estimation phase. In these systems, the trade-offs between the tracking accuracy and computation cost need to be considered carefully. Recently, graphics processing unit (GPU)-based computation acceleration has been gaining attention; hence future work should investigate the integration of this technique so that the system can handle complex tracking scenes at higher speed.
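Binary descriptors such as BRIEF are attractive for real-time tracking because two descriptors can be compared with a bitwise XOR followed by a bit count (the Hamming distance). The following is a minimal sketch of that matching step only, not the descriptor of Ref. [147]; the 16-bit toy descriptors, the function names and the ratio threshold of 0.8 are assumptions made for illustration.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def match(query, reference, max_ratio=0.8):
    """Match each query descriptor to its nearest reference descriptor.

    A match is kept only if the best distance is clearly smaller than the
    second-best one (ratio test), which rejects ambiguous features.
    Requires at least two reference descriptors.
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, r), ri) for ri, r in enumerate(reference))
        (best_d, best_i), (second_d, _) = ranked[0], ranked[1]
        if best_d < max_ratio * second_d:
            matches.append((qi, best_i, best_d))
    return matches

# Toy 16-bit descriptors; real binary descriptors are considerably longer.
reference = [0b1111000011110000, 0b0000111100001111, 0b1010101010101010]
query = [0b1111000011110001,   # one bit away from reference[0]
         0b1100110011001100]   # equally far from all references: ambiguous

result = match(query, reference)  # list of (query index, reference index, distance)
```

In this toy data the first query descriptor is matched to the first reference descriptor at distance 1, while the second is rejected because its best and second-best distances are equal. The brute-force search shown here scales linearly with the number of reference features, which is one source of the accuracy versus computation trade-off mentioned above.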

4.1.2 Registration

The primary objective for tracking in AR assembly is to facilitate registration of virtual components and/or manual instructions with the correct pose in an augmented space. There are two critical issues in this area, i.e., accuracy and latency.

The issue of accuracy refers to the error arising from the inaccuracy present in the sensory devices, misalignments between sensors, and/or inaccurate tracking results [150]. In order to reduce the errors, Zheng et al. [151] presented a closed-loop registration approach, implementing the desired synthetic imagery (real and virtual) directly as the goal. Yang et al. [152] proposed a method to obtain the extrinsic calibration of a generically configured RGB and depth camera rig with partially known metric information of the observed scene in a single-shot fashion. At the current stage, the accuracy issue is addressed primarily through advanced tracking methods, special strategies for registration and effective calibration approaches.
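One common way to quantify registration accuracy is the reprojection error: known 3D points are projected into the image with the estimated pose and camera parameters, and the pixel distance to where they are actually observed is measured. The sketch below assumes an ideal pinhole camera without lens distortion; the intrinsics, points and observations are hypothetical values, and this metric is not taken from Refs. [150-152].

```python
import math

def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point (camera frame, metres) to pixels."""
    x, y, z = point_cam
    return (fx * x / z + cx, fy * y / z + cy)

def rms_reprojection_error(points_cam, observed_px, fx, fy, cx, cy):
    """Root-mean-square pixel distance between projected and observed points."""
    sq = 0.0
    for p, (u_obs, v_obs) in zip(points_cam, observed_px):
        u, v = project(p, fx, fy, cx, cy)
        sq += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(sq / len(points_cam))

# Hypothetical intrinsics and data, for illustration only.
fx = fy = 800.0
cx, cy = 320.0, 240.0
points_cam = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 2.0)]
observed_px = [(323.0, 244.0), (400.0, 240.0), (320.0, 280.0)]

error_px = rms_reprojection_error(points_cam, observed_px, fx, fy, cx, cy)
```

Here only the first point is misaligned (by 5 pixels), so the RMS error over the three points is below 3 pixels. A closed-loop scheme could, in principle, use such an image-space error as the quantity to be minimized.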

The latency issue refers to the alignment errors of the virtual objects caused by the time difference between the moment an observer moves and the moment when the image corresponding to the new position of the observer is displayed [8]. This issue can become serious when head rotations and/or the user’s operations become very fast. To solve this problem, Waegel and Brooks [153] implemented a low-latency inertial measurement unit (IMU) to remedy the slow frame rate of depth cameras and proposed new 3D reconstruction algorithms to localize the camera position and build a map of the environment simultaneously, providing stable and drift-free registration of the virtual objects.
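The effect of latency can be estimated with simple arithmetic: during a delay of t seconds, a head rotating at angular velocity ω turns by ω·t, and the virtual object appears displaced by that angle. The sketch below is a first-order estimate under an assumed pinhole display model; the function name and all numerical values are hypothetical.

```python
import math

def misregistration(angular_velocity_deg_s, latency_s, focal_px, depth_m):
    """Estimate registration error caused by end-to-end latency.

    Returns (angular error in degrees, on-screen shift in pixels,
    apparent displacement in metres at the given depth).
    """
    angle_deg = angular_velocity_deg_s * latency_s
    angle_rad = math.radians(angle_deg)
    shift_px = focal_px * math.tan(angle_rad)
    shift_m = depth_m * math.tan(angle_rad)
    return angle_deg, shift_px, shift_m

# Hypothetical numbers: 50 deg/s head rotation, 100 ms latency,
# 800 px focal length, virtual part rendered 0.6 m from the viewer.
angle, px, metres = misregistration(50.0, 0.100, 800.0, 0.6)
```

Under these assumptions the virtual part lags by 5 degrees, which corresponds to roughly 70 pixels on screen and about 5 cm at arm's length, enough to place a virtual component on the wrong mating feature. This is why a faster sensor such as an IMU is useful for bridging the interval between camera frames.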

4.2 Collaborative AR interface

Since an assembly operation links many other processes in manufacturing (e.g., product design, factory layout planning, machining and joining), it is imperative to investigate how AR can play a pivotal role where assembly designers, planners as well as operators can participate together to facilitate communication between them [154, 155]. Liverani et al. [131] proposed the personal active assistant (PAA) linked to a designer workstation wirelessly to augment information from the views of the worker and the designer on their HMDs. Boulanger [89] presented a collaborative AR tele-training system to support the remote users by sharing the view of the local user. Shen et al. [156] and Ong and Shen [157] proposed a remote collaboration system, so that the distributed users could view a product model from different perspectives. Collaborative AR has brought a wide range of benefits to assembly tasks, i.e., flexible collaboration times, knowledge retention, increased problem context understanding and awareness, and combination with existing and on-going working processes. However, effective communication between users is sometimes difficult owing to the lack of virtual co-presence. Oda and Feiner [158] designed gesturing in an augmented reality depth-mapped environment (GARDEN) to improve the accuracy of referencing physical objects that are farther away than an arm’s length in a shared un-modeled environment by using sphere casting. Ranatunga et al. [159] presented a method that allowed an expert to use multi-touch gestures to translate, rotate and annotate an object on a video feed to facilitate remote guidance in the collaboration with other users. The system introduces a level of abstraction to the remote experts, allowing them to directly specify the object movements required of a local worker. Another issue is that although a number of collaborative AR systems have been implemented, few of them have been integrated with rigorous user studies [2]. Formal user studies need to be conducted in future collaborative AR research.

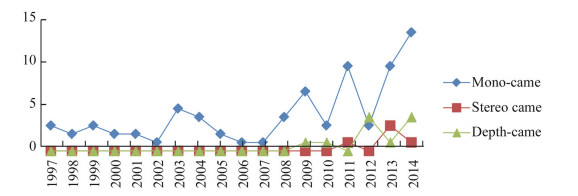

4.3 3D workspace scene capture

A seamless blending of the real world and computer-generated contents is often seen as a key issue in improving user immersion for AR assembly systems. The fundamental issue is the lack of real-time acquisition of the dynamic assembly workspace scene, which is a challenging task that requires state-of-the-art technology and instrumentation. Figure 9 shows the trends of camera usage in AR assembly systems. As can be seen, mono cameras have been implemented in most AR assembly systems for a long time, because mono cameras are generally light-weight and convenient to use. Moreover, a simple mono camera can be powerful enough to handle most of the core functions in AR technology. Recently, some special types of cameras, e.g., stereo cameras and depth cameras, have been produced to better support information capture functions, and the published papers show a growing number of researchers implementing these cameras in AR assembly systems (see Fig. 9). A stereo camera comprises two or more lenses with a separate image sensor or film frame for each lens to simulate human binocular vision. Therefore, a stereo camera has the ability to capture 3D images, which is a process known as stereo photography. Stereo cameras may be used for 3D reconstruction or for range imaging. However, the accuracy of the camera is not linear. In addition, it is not possible to calculate the distance of every pixel in the image. Recently, the rising accessibility of depth sensors, such as Microsoft Kinect, has generated great interest among researchers. Reference [160] improves depth capture by combining the Kinect with a high-end Nvidia GPU to capture a dense map of the 3D scene that can be exported to a mesh. With these scene capture technologies, dense 3D reconstruction [161] can be achieved, which can facilitate the interaction of virtual objects with the real assembly workspace. However, even the state-of-the-art dense 3D reconstruction techniques usually suffer from insufficient accuracy, resulting in visual artifacts, such as loss of detailed shapes and rough boundaries. In addition, there is a gap towards real-time reconstruction of 3D models with meaningful structural information, which is imperative for the rendering of the synthetic scene for assembly tasks. To address the above problems, Dou et al. [162] introduced a 3D capture system that first built a complete and accurate 3D model for dynamic objects by fusing a data sequence captured by commodity depth and color cameras, and then tracked the fused model to align it with subsequent captures.
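The remark that stereo accuracy is not linear can be made concrete with the standard rectified-stereo relation Z = f·B/d, where f is the focal length in pixels, B the baseline and d the disparity. Differentiating gives a first-order depth uncertainty of Z²/(f·B) per pixel of disparity error, so the error grows with the square of the distance. The sketch below assumes an ideal rectified pair; the rig parameters are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth of a point from its disparity in a rectified stereo pair: Z = f*B/d."""
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth uncertainty: dZ = Z^2 / (f*B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

# Hypothetical rig: 700 px focal length, 10 cm baseline, 0.5 px matching error.
f, B, dd = 700.0, 0.10, 0.5
for d in (140.0, 70.0, 35.0):
    z = depth_from_disparity(f, B, d)
    err_cm = depth_error(f, B, z, dd) * 100
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m, error ~{err_cm:.2f} cm")
```

Each halving of the disparity doubles the depth and quadruples the expected depth error. Pixels for which no disparity can be found (for example in occluded or textureless regions) have no depth estimate at all, which is consistent with the observation that the distance cannot be calculated for every pixel.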

Fig. 9 Trends for camera usage in AR

AR, as an effective HCI tool that helps the user in the assembly design, planning and operation process, requires efficient knowledge representation schemes. Therefore, knowledge representation is a critical issue in order to sift through the raw data available to the user and make sense of it. The use of ontologies can help integrate and transfer valuable, unstructured information and knowledge, and provide rich conceptualization of complex domains, such as assembly [163]. Researchers have shown that product assembly ontology is useful in storing and managing assembly data, e.g., core product model (CPM) [164] and open assembly model (OAM) [165]. Ontology-based knowledge management systems have been integrated into AR operation guidance systems. Lee and Rhee [166] built a pioneering context-aware AR system for car maintenance and assembly operations based on the ontology of workspace context. Zhu et al. [167] implemented context ontology in the proposed AR bi-directional authoring system because ontology was independent of programming languages and also enabled context reasoning using first-order logic.
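The idea of storing assembly knowledge as an ontology and reasoning over it can be sketched with a very small triple store. This is a toy illustration, not CPM, OAM or the system of Ref. [166]; the class `AssemblyKB`, the predicate names (`part_of`, `mates_with`, `mating_type`) and the facts are all assumptions, and a real system would more likely use a dedicated ontology language and reasoner.

```python
class AssemblyKB:
    """A tiny triple store with one inference rule (transitive part_of)."""

    def __init__(self):
        self.triples = set()

    def add(self, subject, predicate, obj):
        self.triples.add((subject, predicate, obj))

    def objects(self, subject, predicate):
        """All objects o such that (subject, predicate, o) is a stored fact."""
        return {o for s, p, o in self.triples if s == subject and p == predicate}

    def all_parents(self, part):
        """Infer every assembly that contains `part`, directly or indirectly."""
        found, frontier = set(), [part]
        while frontier:
            for parent in self.objects(frontier.pop(), "part_of"):
                if parent not in found:
                    found.add(parent)
                    frontier.append(parent)
        return found

# Hypothetical facts; component and predicate names are illustrative only.
kb = AssemblyKB()
kb.add("rotor", "part_of", "rotor_subassembly")
kb.add("rotor_subassembly", "part_of", "alternator")
kb.add("pulley", "part_of", "alternator")
kb.add("rotor", "mates_with", "stator")
kb.add("rotor", "mating_type", "coaxial")

parents = kb.all_parents("rotor")
```

Although only direct `part_of` facts are stored, the query infers that the rotor belongs to both the sub-assembly and the alternator. Because the facts are plain data, they can be queried and modified while an AR session is running, without going back to the CAD system.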

While knowledge is traditionally viewed as structured information, it can also be considered as information in context, i.e., both the content and the relevant context of the information should be considered [168]. Considering the context, one of the major challenges in employing AR is to provide in-situ information which is registered to the physical world (the context), and to reduce the cognitive load of particular tasks. To provide the most relevant service/information to the users (e.g., directing user attention to specific workpiece features), application and service providers should be aware of their contexts and adapt to their changing contexts automatically, i.e., context-awareness, eliminating the need to search for the information. Context-awareness generally includes two items, namely, the status information of people, place, time and event, and the cognitive status of users, such as attention and comprehension. Most efforts in context-aware AR assembly are focused on the first item, such as the accuracy of tracking and the recognition of the working scene. However, efforts are still insufficient to establish context-aware AR systems that can fully reflect cognitive context. Recently, some studies focusing on cognitive context have been reported. Hervas et al. [169] proposed a system to generate navigation based on the user’s cognitive context to supply spatial orientation and cognitive facilitation, rather than relying on context information belonging to the first item.

5 Conclusions

This paper has presented an extensive review of research on AR in assembly tasks reported between 1990 and 2015. To the authors’ best knowledge, this paper is the first review to provide a comprehensive summary and critical comments on the state-of-the-art AR assembly systems. A full and comprehensive academic map of the distribution of AR assembly research all over the world is provided to facilitate further investigation. This review starts with a description of existing assembly-aided technologies, followed by details of the wide variety of assembly applications for which AR systems are now being applied and tested. The literature related to AR assembly research is divided into three categories (i.e., AR assembly guidance, AR assembly training, and AR assembly simulation, design and planning), and twelve sub-categories. According to the analysis in this paper, these sub-categories can be regrouped into three classes based on the current development state and future research trends. The first class includes the five topics on which papers are published most frequently, which attract the most attention because they are the areas where AR technology can demonstrate the greatest utility and highest potential for positive impact in assembly tasks, i.e., HCI (tool-based, glove-based, and hand-based), assembly design and planning, assembly simulation, feedback for user’s action, and assembly training for procedural tasks. The second class of topics reflects more emerging research interests, including context-awareness, authoring, effectiveness evaluation, usability evaluation, and design guidelines. Although topics in this class have been under-rated because few papers were published on them in the past, they are attracting greater attention recently. The third class includes topics that have a relatively steady number of published papers over time, namely, interactive instructions and multi-media instructions. However, the number of papers on these topics may decrease gradually with the growing maturity of the field.

The bottlenecks for current AR assembly systems and potential future work directions are discussed with technical details based on an analysis of the cutting-edge development of AR technology, i.e., tracking and registration issues, collaborative AR interface, 3D workspace scene capture, knowledge representation and context-awareness, etc. With this technical analysis, readers can quickly find potential research areas where AR can further enhance and improve assembly systems. Based on the discussion, researchers who plan new work on AR assembly in future should consider the following topics:

(i) Advanced computer vision technology to capture the 3D workspace scene with depth information so that real-time 3D reconstruction can be achieved with high precision to facilitate the interaction between virtual and physical objects in the workspace;

(ii) Standardization of assembly pertinent knowledge representation and assembly data structure interoperable between different AR assembly systems;

(iii) Development of more intelligent AR assembly systems to understand and induce operator workflows, such that the systems can consider the operators’ mental status to provide instructions fit for their cognitive process;

(iv) Intuitive user-system interface, e.g., bare-hand based interface, which requires no calibration and no attached device, to interact with AR assembly systems with natural gestures;

(v) Collaborative AR assembly systems to facilitate the concurrent product development process.

Besides the above key features, the effectiveness and usability of the new AR assembly systems depend much on how familiar the developers and users are with the scientific rationale and experience behind their applications in the assembly task. This understanding will also be the key to designing appropriate system features and successful AR support in future.

| 1. | Whitney DE (2004) Mechanical assemblies: their design, manufacture, and role in product development. Oxford University Press, Oxford |

| 2. | Zhou F, Duh H-L, Billinghurst M (2008) Trends in augmented reality tracking, interaction and display: a review of ten years of ISMAR. Proceedings of the 7th IEEE international symposium on mixed and augmented reality (ISMAR 2008), Cambridge, pp 193-202 |

| 3. | Sielhorst T, Feuerstein M, Navab N (2008) Advanced medical displays: a literature review of augmented reality. J Disp Technol 4(4):451-467 |

| 4. | Henderson SJ, Feiner SK (2011) Exploring the benefits of augmented reality documentation for maintenance and repair. IEEE Trans Vis Comput Graph 17(10):1355-1368 |

| 5. | Ridel B, Reuter P, Laviole J et al (2014) The revealing flashlight: interactive spatial augmented reality for detail exploration of cultural heritage artifacts. J Comput Cult Herit 7(2):1-18 |

| 6. | Bower M, Howe C, McCredie N et al (2014) Augmented reality in education-cases, places and potentials. Educ Media Int 51(1):1-15 |

| 7. | Google Glass. http://www.google.com/glass/start/. Accessed 10 July 2015 |

| 8. | Nee AYC, Ong SK, Chryssolouris G et al (2012) Augmented reality applications in design and manufacturing. CIRP Annal Manuf Technol 61(2):657-679 |

| 9. | Ong SK, Yuan ML, Nee AYC (2008) Augmented reality applications in manufacturing: a survey. Int J Prod Res 46(10):2707-2742 |

| 10. | Fite-Georgel P (2011) Is there a reality in industrial augmented reality? In: Proceedings of the 10th IEEE international symposium on mixed and augmented reality (ISMAR 2011), Basel, pp 201-210 |

| 11. | Leu MC, ElMaraghy HA, Nee AYC et al (2013) CAD model based virtual assembly simulation, planning and training. CIRP Annal Manuf Technol 62(2):799-822 |

| 12. | Boothroyd G, Dewhurst P, Knight WA (2011) Product design for manufacture and assembly. CRC Press, Boca Raton |

| 13. | Seth A, Vance JM, Oliver JH (2011) Virtual reality for assembly methods prototyping: a review. Virtual Reality 15(1):5-20 |

| 14. | Cruz-Neira C, Sandin DJ, DeFanti TA et al (1992) The CAVE: audio visual experience automatic virtual environment. Commun ACM 35(6):64-72 |

| 15. | Cruz-Neira C, Sandin DJ, DeFanti TA (1993) Surround-screen projection-based virtual reality: the design and implementation of the CAVE. Proceedings of the 20th annual conference on computer graphics and interactive techniques, Anaheim, California, pp 135-142 |

| 16. | Kuehne R, Oliver J (1995) A virtual environment for interactive assembly planning and evaluation. Proceedings of ASME design automation conference |

| 17. | Pere E, Langrana N, Gomez D et al (1996) Virtual mechanical assembly on a PC-based system. Proceedings of ASME design engineering technical conferences and computers and information in engineering conference, Irvine, pp 18-22 |

| 18. | Jayaram S, Connacher HI, Lyons KW (1997) Virtual assembly using virtual reality techniques. Computer Aided Design 29(8):575-584 |

| 19. | Jayaram S, Jayaram U, Wang Y et al (1999) VADE: a virtual assembly design environment. Comput Graph Appl 19(6):44-50 |

| 20. | Jayaram S, Jayaram U, Wang Y et al (2000) CORBA-based collaboration in a virtual assembly design environment. Proceedings of ASME design engineering technical conferences and computers and information in engineering conference |

| 21. | Jayaram U, Tirumali H, Jayaram S (2000) A tool/part/human interaction model for assembly in virtual environments. Proceedings of ASME design engineering technical conferences |

| 22. | Taylor F, Jayaram S, Jayaram U (2000) Functionality to facilitate assembly of heavy machines in a virtual environment. Proceedings of ASME design engineering technical conferences |

| 23. | Coutee AS, McDermott SD, Bras B (2001) A haptic assembly and disassembly simulation environment and associated computational load optimization techniques. J Comput Inform Sci Eng 1(2):113-122 |

| 24. | Coutee AS, Bras B (2002) Collision detection for virtual objects in a haptic assembly and disassembly simulation environment. ASME design engineering technical conferences and computers and information in engineering conference, Montreal, pp 11-20 |

| 25. | Seth A, Su HJ, Vance JM (2005) A desktop networked haptic VR interface for mechanical assembly. ASME 2005 International mechanical engineering congress & exposition, Orlando, pp 173-180 |

| 26. | Seth A, Su HJ, Vance JM (2006) SHARP: a system for haptic assembly & realistic prototyping. ASME 2006 international design engineering technical conferences and computers and information in engineering conference in Philadelphia, Pennsylvania, pp 905-912 |

| 27. | Graphics Processing Unit (GPU). http://www.nvidia.com. Accessed 10 July 2015 |

| 28. | Caudell TP, Mizell DW (1992) Augmented reality: an application of heads-up display technology to manual manufacturing processes. Proceedings of the 25th Hawaii international conference on system sciences, Hawaii, pp 659-669 |

| 29. | Sims D (1994) New realities in aircraft design and manufacture. Comput Graph Appl 14(2):91 |

| 30. | Mizell D (2001) Boeing's wire bundle assembly project. In: Barfield W, Caudell T (eds) Fundamentals of wearable computers and augmented reality. CRC Press, Mahwah, pp 447-467 |

| 31. | Feiner S, MacIntyre B, Seligmann D (1993) Knowledge-based augmented reality. Commun ACM 36(7):53-62 |

| 32. | Webster A, Feiner S, MacIntyre B et al (1996) Augmented reality in architectural construction, inspection and renovation. In: Proceedings of ASCE 3rd congress on computing in civil engineering, Anaheim, pp 913-919 |

| 33. | Curtis D, Mizell D, Gruenbaum P et al (1998) Several devils in the details: making an AR application work in the airplane factory. In: Proceedings of the International Workshop Augmented Reality in San Francisco, California, pp 47-60 |

| 34. | Schwald B, Figue J, Chauvineau E et al (2001) STARMATE: using augmented reality technology for computer guided maintenance of complex mechanical elements. In: Stanford-Smith B, Chiozza E (eds) E-work and e-commerce-novel solutions and practices for a global networked economy. IOS Press, Amsterdam, pp 196-202 |

| 35. | Syberfeldt A, Danielsson O, Holm M et al (2015) Visual assembling guidance using augmented reality. In: International manufacturing research conference 2015, Charlotte, pp 1-12 |

| 36. | Molineros J, Sharma R (2001) Computer vision for guiding manual assembly. In: Proceedings of the 2001 IEEE international symposium on assembly and task planning (ISATP 2001), Fukuoka, pp 362-368 |

| 37. | Schwald B, Laval BD (2003) An augmented reality system for training and assistance to maintenance in the industrial context. In: International conference in central Europe on computer graphics, visualization and computer vision (WSCG), Plzen, pp 425-432 |

| 38. | Regenbrecht H, Baratoff G, Wilke W (2005) Augmented reality projects in the automotive and aerospace industries. IEEE Comput Graph Appl 25(6):48-56 |

| 39. | Saaski J, Salonen T, Hakkarainen M et al (2008) Integration of design and assembly using augmented reality. In: Ratchev S, Koelemeijer S (eds) Micro-assembly technologies and applications. Springer, London, pp 395-404 |

| 40. | Salonen T, Saaski J (2008) Dynamic and visual assembly instruction for configurable products using augmented reality techniques. In: Yan XT, Jiang C, Eynard B (eds) Advanced design and manufacture to gain a competitive edge. Springer, London, pp 23-32 |

| 41. | Salonen T, Saaski J, Hakkarainen M et al (2007) Demonstration of assembly work using augmented reality. In: Proceedings of the 6th ACM international conference on image and video retrieval (CIVR 2007), Amsterdam, pp 120-123 |

| 42. | Salonen T, Saaski J, Woodward C (2009) Data pipeline from CAD to AR based assembly instructions. In: Proceedings of the ASME-AFM 2009 world conference of innovative virtual reality (WINVR09), Mediapole, pp 165-168 |

| 43. | Tumkor S, Aziz ES, Esche SK et al (2013) Integration of augmented reality into the CAD process. In: Proceedings of the ASEE annual conference and exposition, Atlanta |

| 44. | Makris S, Pintzos G, Rentzos L et al (2013) Assembly support using AR technology based on automatic sequence generation. CIRP Annal Manuf Technol 62(1):9-12 |

| 45. | Sanna A, Manuri F, Lamberti F et al (2015) Using handheld devices to support augmented reality based maintenance and assembly tasks. In: 2015 IEEE international conference on consumer electronics (ICCE), Berlin, pp 178-179 |

| 46. | Kollatsch C, Schumann M, Klimant P et al (2014) Mobile augmented reality based monitoring of assembly lines. Procedia CIRP 23:246-251 |

| 47. | Yuan ML, Ong SK, Nee AYC (2008) Augmented reality for assembly guidance using a virtual interactive tool. Int J Prod Res 46(7):1745-1767 |

| 48. | Sukan M, Elvezio C, Oda O et al (2014) ParaFrustum: visualization techniques for guiding a user to a constrained set of viewing positions and orientations. In: Proceedings of the 27th annual ACM symposium on user interface software and technology, Honolulu, pp 331-340 |

| 49. | Posada J, Toro C, Barandiaran I et al (2015) Visual computing as a key enabling technology for industry 4.0 and industrial internet. IEEE Comput Graph Appl 35(2):26-40 |

| 50. | Zhang J, Ong SK, Nee AYC (2011) RFID-assisted assembly guidance system in an augmented reality environment. Int J Prod Res 49(13):3919-3938 |

| 51. | Henderson SJ, Feiner SK (2011) Augmented reality in the psychomotor phase of a procedural task. In: Proceedings of the 10th IEEE international symposium on mixed and augmented reality (ISMAR 2011), Basel, pp 191-200 |

| 52. | Neumann U, Majoros A (1998) Cognitive, performance, and systems issues for augmented reality applications in manufacturing and maintenance. In: Proceedings of IEEE virtual reality (VR 1998), San Francisco, pp 4-11 |

| 53. | Andersen M, Andersen R, Larsen C et al (2009) Interactive assembly guide using augmented reality. In: Proceedings of the 5th international symposium on advances in visual computing: Part I, Las Vegas, pp 999-1008 |

| 54. | Khuong BM, Kiyokawa K, Miller A et al (2014) The effectiveness of an AR-based context-aware assembly support system in object assembly. In: Proceedings of IEEE virtual reality, Minneapolis, pp 57-62 |

| 55. | Friedrich W (2002) ARVIKA augmented reality for development, production, and service. In: Proceedings of the 1st IEEE international symposium on mixed and augmented reality (ISMAR 2002), Darmstadt, pp 3-4 |

| 56. | Rentzos L, Papanastasiou S, Papakostas N et al (2013) Augmented reality for human-based assembly: using product and process semantics. In: Analysis, design and evaluation of human-machine systems, Las Vegas, pp 98-101 |

| 57. | Zhu Z, Branzoi V, Wolverton M et al (2014) AR-Mentor: augmented reality based mentoring system. In: Proceedings of the 13th IEEE international symposium on mixed and augmented reality (ISMAR 2014), Munich, pp 17-22 |

| 58. | Radkowski R, Oliver J (2013) Natural feature tracking augmented reality for on-site assembly assistance systems. In: Shumaker R (ed) Virtual, augmented and mixed reality, systems and applications. Springer, Berlin, pp 281-290 |

| 59. | Lowe DG (1999) Object recognition from local scale-invariant features. In: Proceedings of the 1999 international conference on computer vision, Kerkyra, pp 1150-1157 |

| 60. | Chen CJ, Hong J, Wang SF (2015) Automated positioning of 3D virtual scene in AR-based assembly and disassembly guiding system. Int J Adv Manuf Technol 76(5-8):753-764 |

| 61. | Damen D, Gee A, Mayol-Cuevas W et al (2012) Egocentric real-time workspace monitoring using a rgb-d camera. In: 2012 IEEE/RSJ international conference on intelligent robots and systems (IROS), Vilamoura, pp 1029-1036 |

| 62. | Vignais N, Miezal M, Bleser G et al (2013) Innovative system for real-time ergonomic feedback in industrial manufacturing. Appl Ergon 44(4):566-574 |

| 63. | Haringer M, Regenbrecht H (2002) A pragmatic approach to augmented reality authoring. In: Proceedings of IEEE 1st international symposium on mixed and augmented reality (ISMAR 2002), Darmstadt, pp 237-245 |

| 64. | Zauner J, Haller M, Brandl A et al (2003) Authoring of a mixed reality assembly instructor for hierarchical structures. In: Proceedings of the 2nd IEEE international symposium on mixed and augmented reality (ISMAR 2003), Tokyo, pp 237-246 |

| 65. | Servan J, Mas F, Menendez JL et al (2012) Using augmented reality in AIRBUS A400M shop floor assembly work instructions. In: The 4th manufacturing engineering society international conference, Cadiz, pp 633-640 |

| 66. | Petersen N, Stricker D (2012) Learning task structure from video examples for workflow tracking and authoring. In: Proceedings of the 11th IEEE international symposium on mixed and augmented reality (ISMAR 2012), Atlanta, pp 237-246 |

| 67. | Mura K, Petersen N, Huff M et al (2013) IBES: a tool for creating instructions based on event segmentation. Front Psychol 4:1-14 |

| 68. | Petersen N, Pagani A, Stricker D (2013) Real-time modeling and tracking manual workflows from first-person vision. In: Proceedings of the 12th IEEE international symposium on mixed and augmented reality (ISMAR 2013), Adelaide, pp 117-124 |

| 69. | Bhattacharya B, Winer E (2015) A method for real-time generation of augmented reality work instructions via expert movements. IS&T/SPIE Electronic Imaging: 93920G-1-93920G-13 |

| 70. | Mohr P, Kerbl B, Donoser M et al (2015) Retargeting technical documentation to augmented reality. In: Proceedings of the 33rd annual ACM conference on human factors in computing systems, Seoul, pp 3337-3346 |

| 71. | Hou L, Wang X (2013) A study on the benefits of augmented reality in retaining working memory in assembly tasks: a focus on differences in gender. Autom Constr 32:38-45 |

| 72. | Odenthal B, Mayer MP, Kabub W et al (2014) A comparative study of head-mounted and table-mounted augmented vision systems for assembly error detection. Hum Fact Ergon Manuf Serv Ind 24(1):105-123 |

| 73. | Hou L, Wang X, Truijens M (2013) Using augmented reality to facilitate piping assembly: an experiment-based evaluation. J Comput Civil Eng 29(1):05014007 |

| 74. | Baird KM, Barfield W (1999) Evaluating the effectiveness of augmented reality displays for a manual assembly task. Virtual Reality 4(4):250-259 |

| 75. | Tang A, Owen C, Biocca F et al (2003) Comparative effectiveness of augmented reality in object assembly. In: Proceedings of the SIGCHI conference on Human factors in computing systems (CHI 03), Fort Lauderdale, pp 73-80 |

| 76. | Wiedenmaier S, Oehme O, Schmidt L et al (2003) Augmented reality (AR) for assembly processes design and experimental evaluation. Int J Hum Comput Interact 16(3):497-514 |

| 77. | Odenthal B, Mayer MP, Kabub W et al (2011) An empirical study of disassembling using an augmented vision system. In: Proceedings of the 3rd international conference on digital human modeling (ICDHM'11), Orlando, pp 399-408 |

| 78. | Boud AC, Haniff DJ, Baber C et al (1999) Virtual reality and augmented reality as a training tool for assembly tasks. In: Proceedings of 1999 IEEE international conference on information visualization, London, pp 32-36 |

| 79. | Rios H, Hincapie M, Caponio A et al (2011) Augmented reality: an advantageous option for complex training and maintenance operations in aeronautic related processes. In: Proceedings of 2011 international conference on virtual and mixed reality—Part 1, Orlando, pp 87-96 |

| 80. | Suarez-Warden F, Cervantes-Gloria Y, Gonzalez-Mendivil E (2011) Sample size estimation for statistical comparative test of training by using augmented reality via theoretical formula and OCC graphs: aeronautical case of a component assemblage. In: Proceedings of 2011 international conference on virtual and mixed reality-systems and applications—Part II, Orlando, pp 80-89 |

| 81. | Gavish N, Gutierrez T, Webel S et al (2013) Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact Learn Environ. doi:10.1080/10494820.2013.815221 |

| 82. | Radkowski R, Herrema J, Oliver J (2015) Augmented reality-based manual assembly support with visual features for different degrees of difficulty. Int J Hum Comput Interact 31(5):337-349 |

| 83. | Henderson SJ, Feiner SK (2009) Evaluating the benefits of augmented reality for task localization in maintenance of an armored personnel carrier turret. In: Proceedings of the 8th IEEE international symposium on mixed and augmented reality (ISMAR 2009), Orlando, pp 135-144 |

| 84. | Gattullo M, Uva AE, Fiorentino M et al (2015) Legibility in industrial AR: text style, color coding, and illuminance. IEEE Comput Graph Appl 35(2):52-61 |

| 85. | Hou L, Wang X (2011) Experimental framework for evaluating cognitive workload of using AR system for general assembly task. In: Proceedings of the 28th international symposium on automation and robotics in construction, Seoul, pp 625-630 |

| 86. | Hou L, Wang X, Bernold L et al (2013) Using animated augmented reality to cognitively guide assembly. J Comput Civil Eng 27(5):439-451 |

| 87. | Stork S, Schubo A (2010) Human cognition in manual assembly: theories and applications. Adv Eng Inform 24(3):320-328 |

| 88. | Reiners D, Stricker D, Klinker G et al (1998) Augmented reality for construction tasks: door lock assembly. In: Proceedings of the 1st international workshop on augmented reality (IWAR98), San Francisco, pp 31-46 |

| 89. | Boulanger P (2004) Application of augmented reality to industrial teletraining. In: Proceedings of the 1st Canadian conference on computer and robot vision, Ontario, pp 320-328 |

| 90. | Horejsi P (2015) Augmented reality system for virtual training of parts assembly. Proc Eng 100:699-706 |

| 91. | Simon V, Baglee D, Garfield S et al (2014) The development of an advanced maintenance training programme utilizing augmented reality. In: Proceedings of the 27th international congress of condition monitoring and diagnostic engineering, Brisbane |

| 92. | Peniche A, Trefftz H, Diaz C et al (2012) Combining virtual and augmented reality to improve the mechanical assembly training process in manufacturing. In: Proceedings of the 2012 American conference on applied mathematics, pp 292-297 |

| 93. | Fiorentino M, Uva AE, Gattullo M et al (2014) Augmented reality on large screen for interactive maintenance instructions. Comput Ind 65(2):270-278 |

| 94. | Liu Y, Li SQ, Wang JF et al (2015) A computer vision-based assistant system for the assembly of narrow cabin products. Int J Adv Manuf Technol 76(1-4):281-293 |

| 95. | Raczynski A, Gussmann P (2004) Services and training through augmented reality. In: The 1st European conference on visual media production (CVMP 2004), pp 263-271 |

| 96. | Gorecky D, Worgan SF, Meixner G (2011) COGNITO: a cognitive assistance and training system for manual tasks in industry. In: Proceedings of the 29th annual European conference on cognitive ergonomics, Rostock, pp 53-56 |

| 97. | Matsas E, Vosniakos GC (2015) Design of a virtual reality training system for human-robot collaboration in manufacturing tasks. Int J Interact Desig Manuf. doi:10.1007/s12008-015-0259-2 |

| 98. | Pathomaree N, Charoenseang S (2005) Augmented reality for skill transfer in assembly task. In: 2005 IEEE international workshop on robot and human interactive communication in Nashville, Tennessee, pp 500-504 |

| 99. | Kreft S, Gausemeier J, Matysczok C (2009) Towards wearable augmented reality in automotive assembly training. In: ASME international design engineering technical conferences and computers and information in engineering conference, San Diego, pp 1537-1547 |

| 100. | Charoenseang S, Panjan S (2011) 5-finger exoskeleton for assembly training in augmented reality. In: Shumaker R (ed) Virtual and mixed reality-new trends. Springer, Berlin, pp 30-39 |

| 101. | Re GM, Bordegoni M (2014) An augmented reality framework for supporting and monitoring operators during maintenance tasks. In: Shumaker R, Lackey S (eds) Virtual, augmented and mixed reality applications of virtual and augmented reality. Springer, Berlin, pp 443-454 |

| 102. | Kruger J, Nguyen TD (2015) Automated vision-based live ergonomics analysis in assembly operations. CIRP Annal Manuf Technol 64(1):9-12 |

| 103. | Webel S, Bockholt U, Engelke T et al (2013) An augmented reality training platform for assembly and maintenance skills. Robot Autonom Syst 61(4):398-403 |