2. Alibaba(Beijing) Software Services Company Limited, Beijing 100022, China

Machine reading comprehension (MRC) with unanswerable questions is a challenging task in natural language processing. Unlike previous work, which ignores the mechanism that makes a question answerable or unanswerable, the semantic conflicts detection-based MRC network (SCDNet) is proposed to detect no-answer (NA) questions through a semantic conflicts detection network. The basic idea is that if a given question is unanswerable, there is a semantic absence or conflict between the question and the reference passage. Therefore, SCDNet predicts the NA probability by checking whether the passage covers the integral semantics of the question. In addition, to extract the exact answer from the passage, SCDNet applies an answer length penalty in the loss function, which makes the learning objective more consistent with the evaluation metrics. SCDNet packs the NA question predictor and the answer extractor into a joint model that is trained end to end. Experiments show that SCDNet outperforms some strong baseline models and achieves an F1 score of 72.43 and an NA accuracy of 76.96 on the SQuAD 2.0 dataset.

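In machine reading comprehension, there are special cases in which the answer to a question cannot be obtained from the given document alone. To handle them, the semantic conflicts detection-based machine reading comprehension network (SCDNet) identifies such cases by detecting semantic divergence between the question and the document. Analysis shows that the root causes of a document failing to answer a question fall into two main categories: the document does not contain the semantic information the question requires, or the semantic components of the two conflict. It follows that whether a question can be answered from the document can be determined by checking whether the document's semantic information fully covers the information the question requires. In addition, adding an answer length penalty term to the loss function brings the optimization objective closer to the evaluation metrics and improves system performance. The network models the two subtasks, unanswerable question identification and answer extraction, in a joint model trained end to end. Experimental results show that its accuracy in predicting unanswerable questions exceeds that of the strong baseline model SAN 2.0, and that it achieves an F1 score of 72.43 and an unanswerable question identification accuracy of 76.96 on the SQuAD 2.0 dataset.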

Machine reading comprehension (MRC) is a kind of question answering based on the facts in a reference text. It has received considerable attention over the past few years. With the benefit of SQuAD 1.1[1], the first large, high-quality MRC dataset, MRC models with deep learning architectures have been proposed and have achieved promising results on a variety of tasks. However, most of them are trained to choose the most probable answer by comparing candidate answers, under the hypothesis that the given text always contains the correct answer. This hypothesis cannot be guaranteed in the real world, where some questions cannot be answered from the reference text alone. The recently released SQuAD 2.0[2] offers a no-answer (NA) option for each question. Table 1 gives an example from SQuAD 2.0 that cannot be answered from the given reference text. The unanswerable questions in SQuAD 2.0 are written specifically to resemble answerable ones: the content of every question is relevant to the passage, and each unanswerable question comes with a plausible answer that is not correct. As a result, there are no obvious differences between answerable and unanswerable questions, and they must be distinguished by deep semantic matching.

Tab. 1 Examples of SQuAD 2.0

To deal with the NA problem, most current models append a special symbol to the passage to represent NA, and are trained to point to this symbol when the question is unanswerable. Additionally, U-Net[3] proposes a universal node for classifying NA questions, SAN 2.0[4] uses a binary NA classifier as a joint training target, and Read+Verify[5] applies a binary generative pre-training (GPT) verifier to check the entailment relation between the question and the predicted answer sentence. Although these models have greatly improved NA prediction, they all ignore the mechanism behind NA.

An NA question is difficult to recognize because it carries no special syntactic or semantic NA feature: whether it is answerable depends only on the question-passage semantic relation and can only be determined from their semantic matching results. From this point of view, the NA problem is similar to the natural language inference (NLI) task[6-7], except that NLI focuses on the relationship between two sentences while MRC concerns a question and a passage. A question is unanswerable if the given passage does not have enough information to support the facts the question asks about, or if the semantics conveyed by the passage conflict with those facts. When a question is answerable by a passage, every semantic component of the question can be found in the passage. Table 1 gives two examples to illustrate this claim. Example 1 shows that the highlighted semantic components of the question all exist in the passage; they are concentrated in the question but scattered in the passage. Example 2 shows that the counterpart of the question word "cause" or the question phrase "smoking tobacco cause" does not exist in the passage. Inspired by this semantic location pattern and by work on NLI, it is proposed that the NA problem be formulated as a semantic matching task that detects semantic conflicts and absence between the question and the passage.

To recognize an answerable question, every semantic constituent of the question needs to find its counterpart in the passage, which ensures that the passage matches all the semantic components of the question; this semantic integrity check is therefore more reasonably modeled from the question side. In SCDNet, the question's semantic matching counterpart is collected from the passage by query-to-passage attention. The attention-averaged passage vectors are concatenated to the query vectors to form the passage-aware question, which is then fed into a BiLSTM layer for absence and conflict detection.

Furthermore, the answer prediction subtask is jointly trained with the NA classifier. The answer prediction network uses an iterative pointer network to predict the answer boundary. An answer boundary penalty is added to the loss function to constrain the start-end pair to a reasonable relative position. The penalty is a function of the distance between the start and end of the most confident start-end pair, and it improves the model's F1 score by about 1 percent.

Our contributions can be summarized as follows:

· SCDNet, a novel neural model, is proposed. It identifies unanswerable questions and extracts answers for answerable ones, with the two procedures packed into a single model and trained jointly.

· SCDNet uses a simple bi-directional long short-term memory (BiLSTM) + maxout network to predict whether there are semantic gaps or semantic conflicts between the passage and the facts the question is concerned with, and improves the original pointer network so that it predicts answer spans of a suitable length.

· Extensive experiments on a benchmark dataset demonstrate the effectiveness of SCDNet, which outperforms a competitive state-of-the-art approach by 1.6 percent in NA classification accuracy.

1 Related work

Machine reading comprehension, the challenge of enabling machines to answer questions after reading given textual evidence, has attracted considerable attention from both academic and industrial communities. SQuAD 1.1[1] was released as the first large-scale dataset created by humans through crowdsourcing, and it constrains the answer to be a fragment of the given passage, while SQuAD 2.0[2] contains a collection of questions that might be unanswerable. MRC models must not only extract an answer but also determine whether the question is unanswerable and refrain from answering it if so.

A typical MRC framework is shared among previous models. First, it encodes the question and the passage; then it refines the passage representation through a matching network to obtain a more elaborate question-aware passage; finally, it predicts the answer span and outputs the final answer. Most methods focus on improving the question-aware passage representation, the question-passage fusion process, and the attention mechanism.

Since an answer in SQuAD is defined as a continuous span of the passage, the most widely used strategy for extracting answers is to predict the probability of each passage position being the start or end of an answer span. Most current models predict these probabilities directly from the question-aware passage word features with a fully connected layer and a softmax function. A pointer network[8] is usually used to predict the start and end positions sequentially, which makes the end prediction depend on the preceding start prediction. The reinforced mnemonic reader (RMR)[9] and the stochastic answer network (SAN)[10] use an iterative output layer to refine their answers by multi-step reasoning.

The predicted start and end points of a candidate answer must obey a position constraint: the start never comes after the end, and the answer is usually not too long. Models therefore usually add a span probability rectification step after the network's output to lower or zero out the probabilities of illegal start-end pairs, as in [3-4]. Despite their success, these models do not include the answer length limitation in the training process, and the answer being evaluated may not be the start-end pair with the highest confidence.
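The position constraint can be illustrated with a minimal sketch of span selection. It assumes the rectification simply discards pairs whose start comes after the end or that span more than `max_len` tokens; the cited models may instead scale such probabilities down. The function name `best_span` and the toy probabilities are hypothetical.

```python
from typing import List, Tuple


def best_span(p_start: List[float], p_end: List[float],
              max_len: int) -> Tuple[int, int, float]:
    """Return (start, end, score) maximizing p_start[s] * p_end[e]
    subject to s <= e and a span of at most max_len tokens."""
    best = (0, 0, -1.0)
    for s, ps in enumerate(p_start):
        # Only end positions at or after s, within max_len tokens, are legal.
        for e in range(s, min(s + max_len, len(p_end))):
            score = ps * p_end[e]
            if score > best[2]:
                best = (s, e, score)
    return best


# Toy distributions (hypothetical). The unconstrained argmax pair would be
# start=1, end=0, which is illegal because the end precedes the start.
p_start = [0.1, 0.6, 0.3]
p_end = [0.7, 0.1, 0.2]

start, end, _ = best_span(p_start, p_end, max_len=2)
print(start, end)  # 1 2
```

The example shows why the evaluated answer can differ from the pair the network is most confident about: the rectified search returns positions (1, 2) even though the individual argmax positions are 1 and 0.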

Since SQuAD 2.0 was released, several models have tried to solve the NA problem by adding an extra choice to their original SQuAD models. BiDAF-no-answer (BNA)[11] adds a trainable bias as the no-answer representation, which competes with the passage words for the answer boundary. RMR+Verify[5] adds two independent loss terms to its loss function and uses a GPT-based verifier to predict the NA probability. SAN 2.0 treats the end-of-sentence (EOS) padding of the passage as a representative position for NA, and additionally builds a binary NA classifier to train the model jointly. U-Net[3] finds that a universal node vector encoded between the question and the passage is a powerful information collector for NA detection. However, these works rely entirely on the question-aware passage to predict NA and do not reveal the mechanism that makes a question unanswerable, so their predictions are hard to explain.

2 Model

An MRC problem can typically be represented by a 3-tuple $(Q, C, A)$. $Q = q_1, q_2, \ldots, q_m$ is the question with $m$ words, and $C = c_1, c_2, \ldots, c_n$ is the passage with $n$ words. $A = (a_s, a_e)$ when the answer exists and $A = \text{NA}$ when the question cannot be answered from the given passage, where $a_s$ and $a_e$ are the start and end boundaries of the answer span, respectively.
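As an illustration of this problem formulation, the triple can be held in a small data structure. This is only a sketch: the class name `MRCExample`, its field names, the use of inclusive token indices, and the toy sentence are assumptions made for the example and are not taken from the paper's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class MRCExample:
    """One (Q, C, A) triple; all names here are illustrative."""
    question: List[str]                # Q = q_1 ... q_m
    passage: List[str]                 # C = c_1 ... c_n
    answer: Optional[Tuple[int, int]]  # (a_s, a_e) token indices, None for NA

    def is_answerable(self) -> bool:
        return self.answer is not None

    def answer_tokens(self) -> List[str]:
        """Return the gold span (inclusive boundaries), or [] for NA."""
        if self.answer is None:
            return []
        start, end = self.answer
        return self.passage[start:end + 1]


# Toy example (hypothetical text, not from SQuAD).
example = MRCExample(
    question="who wrote the report".split(),
    passage="the report was written by the committee".split(),
    answer=(5, 6),
)
print(example.answer_tokens())  # ['the', 'committee']
```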

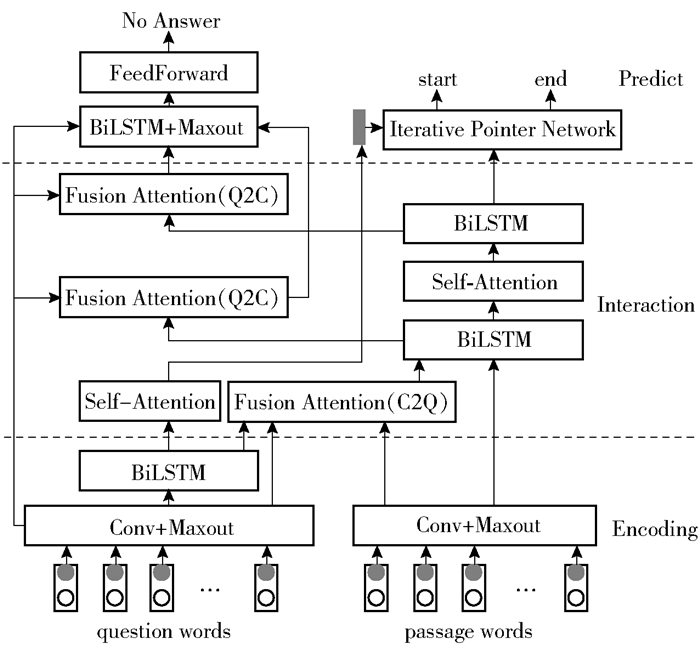

SCDNet is composed of three main blocks: Encoding, Interaction, and Prediction, as shown in Fig. 1. The encoding part encodes the question and the reference text separately. The interaction part fuses their information through attention mechanisms to extract question-aware passage features and passage-aware question features. Based on the question-aware passage features, the answer prediction network predicts the answer boundary probabilities. Based on the passage-aware question features, the NA prediction network predicts the NA probability. The details of each part are given in the following subsections.

Figure 1 Architecture of SCDNet

The Encoding layer transforms the input word sequence into its contextual embedding. The words are first mapped into fixed word embeddings with pre-trained GloVe[12] and CoVe[13]. The embedding extraction method from the document reader question answering (DrQA) model is applied: part-of-speech (POS), named entity recognition (NER), and lemma embedding features are appended to the pre-trained word vectors. Let $C_{\text{E}}$ and $Q_{\text{E}}$ denote the resulting embeddings of the passage and the question. Uni-gram and bi-gram convolutions followed by a maxout are applied to them, as shown in Eq (1) and (2).

| $ C_{\text{e\_m}} = \mathrm{maxout}\left( \mathrm{conv}_{\text{uni}}\left( C_{\text{E}} \right), \mathrm{conv}_{\text{bi}}\left( C_{\text{E}} \right) \right) $ | (1) |

| $ Q_{\text{e\_m}} = \mathrm{maxout}\left( \mathrm{conv}_{\text{uni}}\left( Q_{\text{E}} \right), \mathrm{conv}_{\text{bi}}\left( Q_{\text{E}} \right) \right) $ | (2) |
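Eq (1) and (2) can be read as taking, per token and per feature, the larger of a width-1 and a width-2 convolution output. The sketch below follows that reading in plain Python. The left zero-padding, the absence of bias terms, and all function names and toy weights are assumptions for illustration; the paper does not specify these details.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix (rows = output features) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def conv_uni(tokens: Matrix, w: Matrix) -> Matrix:
    """Width-1 convolution: the same linear map applied to every token."""
    return [matvec(w, x) for x in tokens]


def conv_bi(tokens: Matrix, w: Matrix) -> Matrix:
    """Width-2 convolution over [x_{t-1}; x_t], left-padded with zeros."""
    zero = [0.0] * len(tokens[0])
    padded = [zero] + tokens
    return [matvec(w, padded[t] + padded[t + 1]) for t in range(len(tokens))]


def maxout(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise maximum of two equally shaped feature maps."""
    return [[max(u, v) for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


# Toy input (hypothetical): two tokens with 2-dim embeddings.
tokens = [[1.0, 2.0], [3.0, -1.0]]
w_uni = [[1.0, 0.0], [0.0, 1.0]]                     # identity map
w_bi = [[1.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 0.0]]  # sums first features

encoded = maxout(conv_uni(tokens, w_uni), conv_bi(tokens, w_bi))
print(encoded)  # [[1.0, 2.0], [4.0, 0.0]]
```

The second token's first feature comes from the bi-gram branch (1.0 + 3.0), which is how the bi-gram path can make the encoding sensitive to neighbouring words.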

A BiLSTM layer is then used to encode the contextual information as shown in Eq (3).

| $ Q_{\text{e}} = \mathrm{BiLSTM}\left( Q_{\text{e\_m}} \right) $ | (3) |

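The Interaction part is composed of two layers: a fusion layer and a self-attention layer. The fusion layer extracts question-aware passage representations, with an attention mechanism carrying out the fusion. Let the attention function $S(\cdot,\cdot)$ be defined as in Eq (4); the question information collected for each passage word, $Q_{\text{C}}$, is then computed as in Eq (5)~(7).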

| $ S\left( {\mathit{\boldsymbol{X}},\mathit{\boldsymbol{Y}}} \right) = {\rm{softmax}}\left( {{\mathit{\boldsymbol{W}}_1}\mathit{\boldsymbol{XD}}{{\left( {{\mathit{\boldsymbol{W}}_2}\mathit{\boldsymbol{Y}}} \right)}^{\text{T}}}} \right) \in {\mathbb{R}^{{l_x} \times {l_y}}} $ | (4) |

| $ S_{\text{C1}} = S\left( \left[ Q_{\text{GloVe}}; Q_{\text{CoVe}}; Q_{\text{e\_m}} \right], \left[ C_{\text{GloVe}}; C_{\text{CoVe}}; C_{\text{e\_m}} \right] \right) $ | (5) |

| $ S_{\text{C2}} = S\left( \left[ Q_{\text{GloVe}}; Q_{\text{CoVe}}; Q_{\text{e\_m}} \right], \left[ C_{\text{GloVe}}; C_{\text{CoVe}}; C_{\text{e\_m}} \right] \right) $ | (6) |

| $ Q_{\text{C}} = \left[ S_{\text{C1}}^{\text{T}} Q_{\text{e\_m}}; S_{\text{C2}}^{\text{T}} Q_{\text{e}} \right] $ | (7) |

Finally, a BiLSTM is used to encode the contextual information, as shown in Eq (8).

| $ C_{\text{fusion}} = \mathrm{BiLSTM}\left( \left[ C_{\text{e\_m}}; Q_{\text{C}} \right] \right) $ | (8) |
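The attention-based collection in the fusion layer can be sketched in a few lines. This is a simplified variant rather than the model's exact computation: the projections $W_1$, $W_2$ and the matrix $D$ of Eq (4) are taken as identity (plain dot-product scores), and the softmax is assumed to normalize over the question words for each passage word. The function names and toy vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Vector) -> Vector:
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_collect(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """For each query vector, weight the value vectors by the softmax of
    its dot-product scores against the key vectors."""
    dim = len(values[0])
    collected = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) for k in keys])
        collected.append([sum(w * v[j] for w, v in zip(weights, values))
                          for j in range(dim)])
    return collected


# Toy features (hypothetical): 2 passage words, 2 question words, 2 dims.
passage = [[1.0, 0.0], [0.0, 1.0]]
question = [[10.0, 0.0], [0.0, 10.0]]

# Each passage word gathers the question vectors most similar to it,
# in the spirit of S^T Q in Eq (7).
question_for_passage = attention_collect(passage, question, question)
print([round(x, 2) for x in question_for_passage[0]])  # [10.0, 0.0]
```

Calling the same function with the roles swapped (question words as queries, passage features as keys and values) gives the query-to-passage direction used later for NA prediction.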

The self-attention layer is used to capture long-distance dependencies in the passage. The similarity matrix $S_{\text{self}}$ is calculated as in Eq (10), and the corresponding passage vector for every passage word is calculated as in Eq (11).

| $ C_{\text{H}} = \left[ C_{\text{GloVe}}; C_{\text{CoVe}}; C_{\text{e\_m}}; C_{\text{fusion}} \right] $ | (9) |

| $ S_{\text{self}} = S\left( C_{\text{H}}, C_{\text{H}} \right) $ | (10) |

| $ C_{\text{self}} = S_{\text{self}}^{\text{T}} C_{\text{e\_m}} $ | (11) |

Finally, a BiLSTM is used to encode the contextual information, as shown in Eq (12). This layer outputs the final passage features $H_{\text{C}}$ for predicting the answer boundary.

| $ {H_{\text{C}}} = {\rm{BiLSTM}}\left( {\left[ {{C_{{\text{fusion}}}};{C_{{\text{self}}}}} \right]} \right) $ | (12) |

Our model uses an attention layer to collect the information most relevant to the question from the two passage layers $C_{\text{fusion}}$ and $H_{\text{C}}$, and a BiLSTM to check whether there is a noteworthy semantic absence or conflict, as shown in Eq (13) and (14).

| $ S_{\text{Q1}} = S\left( Q_{\text{e}}, C_{\text{fusion}} \right), S_{\text{Q2}} = S\left( Q_{\text{e}}, H_{\text{C}} \right) \in \mathbb{R}^{m \times n} $ | (13) |

| $ {H_{\text{Q}}} = {\rm{BiLSTM}}\left( {\left[ {{Q_{\text{e}}};{S_{{\text{Q}}1}}{C_{{\text{fusion}}}};{S_{{\text{Q}}2}}{H_{\text{C}}}} \right]} \right) \in {\mathbb{R}^{m \times {h_Q}}} $ | (14) |

A maxout layer is deployed after the BiLSTM to aggregate the checking results over the question sequence into an $h_Q$-dimensional vector. Based on this vector, a binary classifier predicts the question's NA probability:

| $ {p_{{\text{NA}}}} = {\rm{sigmoid}}\left( {{W_{{\text{NA}}}}{{\max }_m}\left( {{H_Q}} \right) + {b_{{\text{NA}}}}} \right) $ | (15) |

The predicted answer is set to NA if its NA probability exceeds a threshold. Experiments show that this brings a 2.3 percent improvement in NA accuracy over predicting from the EOS vector of the passage.
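The pooling and classification step of Eq (15) is small enough to sketch directly. The code below takes the element-wise maximum of $H_Q$ over the question positions, applies a single sigmoid unit, and compares the result with a threshold. The function names, toy features, and weights are hypothetical; in the model they come from the BiLSTM and from training.

```python
import math
from typing import List


def na_probability(h_q: List[List[float]], w_na: List[float],
                   b_na: float) -> float:
    """Max-pool H_Q over the m question positions, then apply a sigmoid."""
    pooled = [max(column) for column in zip(*h_q)]  # one value per feature
    logit = sum(w * x for w, x in zip(w_na, pooled)) + b_na
    return 1.0 / (1.0 + math.exp(-logit))


def predict_is_na(p_na: float, threshold: float = 0.5) -> bool:
    """Label the question as NA when its probability exceeds the threshold."""
    return p_na > threshold


# Toy features (hypothetical): 3 question words, 2 feature dimensions.
h_q = [[0.1, -0.2], [0.9, 0.0], [0.3, -0.5]]
p_na = na_probability(h_q, w_na=[2.0, 1.0], b_na=-1.0)
print(round(p_na, 4), predict_is_na(p_na))  # 0.69 True
```

Because the maximum is taken per feature, a single question word with a strong mismatch signal is enough to raise the pooled value, regardless of where it occurs in the question.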

2.3.2 Answer prediction

An iterative pointer network is used as the answer network. The memory is the output passage feature $H_{\text{C}}$, and the initial hidden vector $h_{\text{s},0}$ is a self-attention averaged question vector, as shown in Eq (16).

| $ h_{\text{s},0} = \mathrm{softmax}\left( W_Q Q_{\text{e}} + b_{\text{Q}} \right) Q_{\text{e}} $ | (16) |

A gated recurrent unit (GRU) based pointer network is used to update the hidden vector as shown in Eq (18), where $h_{\text{s},t}$ and $h_{\text{e},t}$ are hidden vectors of the GRU. The start and end positions are predicted through a bilinear function as in Eq (17) and (19).

| $ {p_t}\left( s \right) = {\rm{softmax}}\left( {{h_{{\text{s}},t}}{W_{\text{s}}}{H_{\text{C}}}} \right) \in {\mathbb{R}^n} $ | (17) |

| $ {h_{{\text{e}},t}} = {\rm{GRU}}\left( {{h_{{\text{s}},t}},{p_t}\left( s \right){H_{\text{C}}}} \right),{h_{{\text{s}},t + 1}} = {h_{{\text{e}},t}} $ | (18) |

| $ {p_t}\left( e \right) = {\rm{softmax}}\left( {{h_{{\text{e}},t}}{W_{\text{e}}}{H_{\text{C}}}} \right) \in {\mathbb{R}^n} $ | (19) |

$W_Q$, $b_Q$, $W_{\text{s}}$, and $W_{\text{e}}$ are trainable parameters, and $p_t(s)$ and $p_t(e)$ are the probabilities of the start and end positions at time step $t$. The final prediction is the output at time step $T$, where $T$ is a hyperparameter.
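A single start-prediction step of this pointer network can be sketched as follows. The code covers only the bilinear scoring of Eq (17) and the probability-weighted passage summary that Eq (18) feeds into the GRU; the GRU update itself is omitted. Function names and toy values are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Vector) -> Vector:
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pointer_probs(hidden: Vector, weight: Matrix, memory: Matrix) -> Vector:
    """Bilinear pointer: softmax over positions of hidden . weight . memory[i]."""
    projected = [sum(h * weight[i][j] for i, h in enumerate(hidden))
                 for j in range(len(weight[0]))]
    return softmax([sum(p * m for p, m in zip(projected, row))
                    for row in memory])


def attended_memory(probs: Vector, memory: Matrix) -> Vector:
    """Probability-weighted sum of memory rows, the GRU input in Eq (18)."""
    return [sum(p * row[j] for p, row in zip(probs, memory))
            for j in range(len(memory[0]))]


# Toy values (hypothetical): 3 passage positions with 2-dim features.
memory = [[0.0, 1.0], [5.0, 0.0], [1.0, 1.0]]
hidden = [1.0, 0.0]
identity = [[1.0, 0.0], [0.0, 1.0]]

p_start = pointer_probs(hidden, identity, memory)
summary = attended_memory(p_start, memory)
print(max(range(len(p_start)), key=p_start.__getitem__))  # 1
```

In the full model, `summary` and the current hidden vector would pass through the GRU to produce the hidden vector used for the end prediction, and the start-end cycle would repeat for $T$ steps.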

The model is jointly trained, and the total loss is given in Eq (20). $\mathrm{loss}_{\text{ans}}$ is the loss term for the answer span prediction task, $\mathrm{loss}_{\text{NA}}$ is the loss term for the NA prediction task, and $\mathrm{loss}_{\text{span}}$ is a penalty term on the out-of-range length of the highest-confidence answer.

| $ {\rm{loss}} = {\rm{loss}}{_{{\text{ans}}}} + {\rm{loss}}{_{{\text{NA}}}} + {\rm{loss}}{_{{\text{span}}}} $ | (20) |

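The cross-entropy losses $\mathrm{loss}_{\text{ans}}$ and $\mathrm{loss}_{\text{NA}}$ and the penalty $\mathrm{loss}_{\text{span}}$ are given in Eq (21)~(23) for a single training example, where $\hat s$ and $\hat e$ are the start and end positions with the highest confidence and $L$ is the answer length threshold.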

| $ {\rm{loss}}{_{{\text{ans}}}} = - \log {p_T}\left( s \right) - \log {p_T}\left( e \right) $ | (21) |

| $ {\rm{loss}}{_{{\text{NA}}}} = - \log {p_{{\text{NA}}}} $ | (22) |

| $ \mathrm{loss}_{\text{span}} = \left\{ \begin{array}{ll} \log\left( \hat e - \hat s - L + 1 \right), & \hat e - \hat s > L \\ \log\left( \hat s - \hat e + 1 \right), & \hat s - \hat e > 0 \\ 0, & \text{otherwise} \end{array} \right. $ | (23) |
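The piecewise penalty of Eq (23) can be written out as a short function to make its three cases concrete. The sketch assumes that the offset inside the first logarithm is the length threshold $L$, so that the penalty grows from $\log 2$ once the span exceeds the threshold by one position; the function and argument names are hypothetical.

```python
import math


def span_penalty(s_hat: int, e_hat: int, max_len: int) -> float:
    """Penalty on the most confident start/end pair (s_hat, e_hat)."""
    if e_hat - s_hat > max_len:
        # Span too long: grows with the amount by which it exceeds max_len.
        return math.log(e_hat - s_hat - max_len + 1)
    if s_hat - e_hat > 0:
        # End predicted before start: grows with the size of the inversion.
        return math.log(s_hat - e_hat + 1)
    return 0.0  # legal span within the length threshold


print(span_penalty(3, 8, max_len=10))             # 0.0
print(round(span_penalty(3, 20, max_len=10), 4))  # 2.0794
print(round(span_penalty(7, 5, max_len=10), 4))   # 1.0986
```

The three calls cover a legal span, a span that is 7 positions over the threshold (log 8), and an inverted pair with the end 2 positions before the start (log 3).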

SQuAD 2.0 has 86 821 answerable questions and 43 498 unanswerable questions in its training data, and 5 928 answerable questions and 5 945 unanswerable questions in its development data. Two metrics are used to evaluate model performance: exact match (EM) and a macro-averaged F1 score, which is the harmonic mean of precision and recall computed at the token level between the predicted and the reference answers.
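For reference, the two metrics can be sketched for a single prediction as below. This is a simplified illustration of token-overlap scoring: it skips the answer normalization (lowercasing, punctuation and article removal) and the maximum over multiple reference answers that a full evaluation performs, and it represents an NA answer as an empty token list. The function names are hypothetical.

```python
from collections import Counter
from typing import List


def exact_match(pred: List[str], gold: List[str]) -> float:
    """1.0 if the predicted tokens equal the gold tokens, else 0.0."""
    return float(pred == gold)


def f1_score(pred: List[str], gold: List[str]) -> float:
    """Token-overlap F1 between a predicted and a gold answer."""
    if not pred or not gold:
        # NA case: full credit only when both answers are empty.
        return float(pred == gold)
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


print(exact_match(["the", "committee"], ["committee"]))         # 0.0
print(round(f1_score(["the", "committee"], ["committee"]), 4))  # 0.6667
print(f1_score([], []))                                         # 1.0
```

The second call shows why an overly long predicted span lowers F1 even when it contains the gold answer: recall is 1.0 but precision falls to 0.5.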

3.2 Implementation details

The spaCy tool is used to tokenize both the question and the passage and to generate lemma, part-of-speech, and named entity tags. PyTorch is used to implement the models.

The model uses 300-dimensional GloVe and 600-dimensional CoVe word vectors as word embeddings, and fine-tunes only the embeddings of the 1 000 most frequent words during training. The embeddings of out-of-vocabulary words are set to 0. The hidden sizes of all BiLSTMs in the interaction layer and the prediction layer are set to 300. Weight normalization is used. The dropout rate is 0.1 and the mini-batch size is 16. The Adamax optimizer is used; its learning rate is initialized to 0.001 and decreased by a factor of 0.5 every 10 epochs. The threshold of the NA classifier is set to 0.5. The answer length threshold L is 10. The number of iterative prediction steps T is also set to 10. The best results are reached after 13 training epochs.
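The reported settings can be gathered into a small configuration for reference. This is a sketch: the dictionary keys and the function name are hypothetical, and the schedule assumes that "decrease by 0.5" means multiplying the learning rate by 0.5 every 10 epochs, with epochs counted from 0.

```python
# Hyperparameters as reported in the text; key names are illustrative.
CONFIG = {
    "glove_dim": 300,
    "cove_dim": 600,
    "tuned_vocab_size": 1000,
    "hidden_size": 300,
    "dropout": 0.1,
    "batch_size": 16,
    "base_lr": 0.001,
    "lr_decay_factor": 0.5,
    "lr_decay_every": 10,
    "na_threshold": 0.5,
    "max_answer_len": 10,   # L
    "pointer_steps": 10,    # T
}


def learning_rate(epoch: int, config: dict = CONFIG) -> float:
    """Step schedule: multiply the base rate by the factor every N epochs."""
    steps = epoch // config["lr_decay_every"]
    return config["base_lr"] * config["lr_decay_factor"] ** steps


for epoch in (0, 9, 10, 12):
    print(epoch, learning_rate(epoch))
```

Under these assumptions the rate is halved once before the reported best epoch, the 13th (index 12).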

Much of the code and many of the ideas in this model are borrowed from SAN 2.0.

3.3 Results and analysis

SCDNet is evaluated on the development set of SQuAD 2.0, where it achieves an F1 score of 72.43 with GloVe and CoVe embeddings. The model achieves an NA accuracy of 76.96, which shows that SCDNet is good at NA prediction. This is 1.6 percent higher than the compared model SAN 2.0[4], which is also tested on the development set with the classifier threshold set to 0.5.

SAN 2.0 has a simple but effective MRC network structure. It is easy to understand and well suited to testing the difference between the two ways of predicting NA, from the question side or from the passage side. SCDNet is built on the framework of SAN 2.0 and shows the effectiveness of predicting the NA probability by detecting the semantic discrepancy between the question and the passage.

Compared with SAN 2.0, SCDNet simplifies the 3-layer encoding BiLSTM into a 1-layer BiLSTM. SCDNet also adds a bi-gram convolution layer to its encoding network, which helps it to be more sensitive to the order of words in the text.

U-Net and RMR+Verify in Table 2 both achieve very strong performance. However, they are more complicated in structure than SAN 2.0, and they both use ELMo, a more advanced pre-trained word embedding model than CoVe.

Tab. 2 Experiment results

To illustrate the effectiveness of the NA classifier and the answer length penalty, ablation studies are shown in Table 3. Six models are evaluated on the development set: 1) SCDNet is our proposed model; 2) -length penalty is SCDNet without the length penalty; 3) -length rectification is SCDNet without the rectification of the candidate answer-span probabilities; 4) -length penalty and length rectification is SCDNet without both the length penalty in the loss function and the probability rectification before evaluation; 5) EOS NA removes the question-side NA prediction part and instead uses the NA prediction from the appended EOS vector of the passage feature $H_{\text{C}}$; 6) one Q2C removes the second-to-last Q2C fusion attention layer.

Tab. 3 Ablation experiments

Adding the answer-length penalty gives a 0.93 percent performance boost compared with 2). The best performance is obtained at the threshold 10 instead of 15, although 15 is commonly used as the answer length limit by other researchers. In addition, the answer-span probability rectification step is indispensable for our model, as shown in 3): although the network improves its performance by adding the answer length penalty, it still benefits from an extra probability rectification step. The rectification method from the SAN 2.0 code is applied, which reduces the answers' probabilities by multiplying them by a penalty factor.

Compared with 5), SCDNet gains 0.55 percent in F1 and 2.3 percent in NA accuracy, which demonstrates the effectiveness of our question-side conflict detection model. Moreover, our NA prediction network can also improve performance simply as a jointly trained task for the EOS-based NA prediction model.

The two Q2C fusion attention layers in our model collect two different levels of passage information. As 6) in Table 3 shows, if only the last passage information collection layer is used, the F1 score drops by about 0.45.

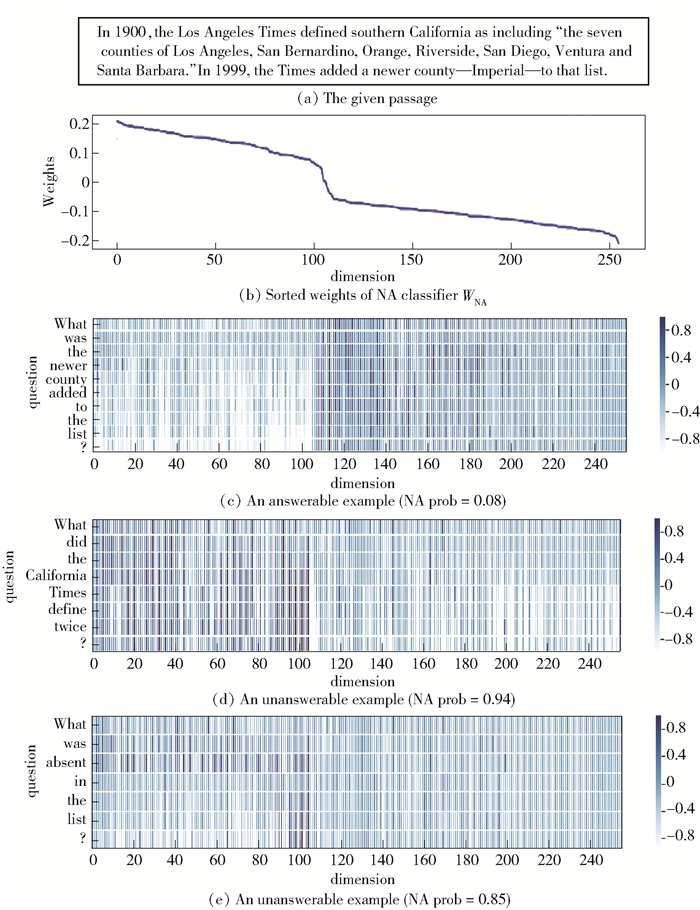

3.5 Case study

To illustrate the effectiveness of our NA prediction, the BiLSTM output $H_{\text{Q}}$ from Eq (14) and the maxout output $\max_m(H_{\text{Q}})$ from Eq (15) are visualized in Fig. 2. The BiLSTM serves as the NA semantic matching network, and the maxout is applied after it to detect the existence of a semantic absence or conflict. The 256 dimensions of $H_{\text{Q}}$ are sorted according to the weights of the NA classifier $W_{\text{NA}}$, because the elements multiplied by larger weights have a stronger impact on the prediction. The NA classifier weights are sorted in descending order, as shown in Fig. 2 (b). Three different question-answer cases for the same passage are given in Fig. 2. Fig. 2 (a) is the passage. Fig. 2 (c) shows an answerable question. Fig. 2 (d) shows a case that is unanswerable because of a semantic conflict: "California Times" conflicts with "Los Angeles Times" in the passage. Fig. 2 (e) shows a case that is unanswerable because of the semantic absence of the word "absent": this word gets high values in the top 100 dimensions, which can be regarded as a sign of semantic discrepancy. The discrepancy is caught by the maxout operation and recognized by the NA classifier.

Figure 2 Visualization of the sorted outputs of the NA BiLSTM layer

SCDNet, a simple yet effective network for machine reading comprehension with unanswerable questions, is proposed. It incorporates a semantic matching mechanism built from a BiLSTM and a maxout layer to check for semantic absence and conflicts across the question words, and achieves a 1.6 percent improvement in no-answer accuracy over the baseline model SAN 2.0. Additionally, adding an answer-length penalty to the answer span prediction loss function proves useful, bringing about a 1 percent improvement in F1 score.

[1] Rajpurkar P, Zhang Jian, Lopyrev K, et al. SQuAD: 100,000+ questions for machine comprehension of text[C]//Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing. Stroudsburg, PA, USA: Association for Computational Linguistics, 2016: 2383-2392.

[2] Rajpurkar P, Jia R, Liang P. Know what you don't know: unanswerable questions for SQuAD[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers). Stroudsburg, PA, USA: Association for Computational Linguistics, 2018, 2: 784-789.

[3] Sun F, Li L Y, Qiu X P, et al. U-Net: machine reading comprehension with unanswerable questions[EB/OL]. 2018(2018-10-12)[2019-11-18]. https://arxiv.org/abs/1810.06638.

[4] Liu X D, Li W, Fang Y W, et al. Stochastic answer networks for SQuAD 2.0[EB/OL]. 2018(2018-09-24)[2019-11-18]. https://arxiv.org/abs/1809.09194.

[5] Hu Minghao, Wei Furu, Peng Yuxing, et al. Read + verify: machine reading comprehension with unanswerable questions[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, 33: 6529-6537. doi: 10.1609/aaai.v33i01.33016529

[6] Wang S H, Jiang J. Learning natural language inference with LSTM[EB/OL]. 2015(2016-11-10)[2019-11-18]. https://arxiv.org/abs/1512.08849v2.

[7] Parikh A P, Täckström O, Das D, et al. A decomposable attention model for natural language inference[EB/OL]. 2016(2016-09-25)[2019-11-18]. https://arxiv.org/abs/1606.01933v2.

[8] Vinyals O, Fortunato M, Jaitly N. Pointer networks[C]//Advances in Neural Information Processing Systems. New York: Curran Associates, 2015: 2692-2700.

[9] Hu Minghao, Peng Yuxing, Huang Zhen, et al. Reinforced mnemonic reader for machine reading comprehension[C]//Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence. California: International Joint Conferences on Artificial Intelligence Organization, 2018: 4099-4106.

[10] Liu Xiaodong, Shen Yelong, Duh K, et al. Stochastic answer networks for machine reading comprehension[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Stroudsburg, PA, USA: Association for Computational Linguistics, 2018: 1694-1704.

[11] Levy O, Seo M, Choi E, et al. Zero-shot relation extraction via reading comprehension[C]//Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017). Vancouver, Canada: Association for Computational Linguistics, 2017: 333-342.

[12] Pennington J, Socher R, Manning C. GloVe: global vectors for word representation[C]//Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Doha: Association for Computational Linguistics, 2014: 1532-1543.

[13] McCann B, Bradbury J, Xiong C M, et al. Learned in translation: contextualized word vectors[C]//Advances in Neural Information Processing Systems. New York: Curran Associates, 2017: 6294-6305.

[14] Huang H Y, Zhu C G, Shen Y L, et al. FusionNet: fusing via fully-aware attention with application to machine comprehension[EB/OL]. 2017(2018-02-04)[2019-11-18]. https://arxiv.org/abs/1711.07341v2.