Consider the stochastic differential equation (SDE)

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = f\left( {X\left( {\omega, t} \right), \theta } \right){\rm{d}}t + \sigma \left( {X\left( {\omega, t} \right), \gamma } \right){\rm{d}}B\left( t \right), }\\ {X\left( {{t_0}} \right) = {X_0}, } \end{array} $ | (1) |

where t0≤t≤T, and ω is an element of the underlying probability space Ω. f(·) and σ(·) are known functions of X, and B(t) is a standard Brownian motion. In addition, θ and γ are unknown parameters, which will be estimated. To simplify the exposition, we first assume that the parameters θ and γ are of one-dimension, and will present the discussion of estimating multiple unknown parameters in the last section. Moreover, we divide the time interval [t0, T] into N pieces of equal length h, such that ti+1-ti=h, i=0, …, N-1, tN=T.

The problem of parameter estimation in diffusion processes based on discrete observations has been studied by some authors. Morel et al.[1] first studied estimation in discretely observed diffusions. Breton[2] used approximate maximum likelihood estimation (MLE), and studied asymptotic properties of the descritized versions of MLE for linear parametric stochastic differential equations. Dorogovcev[3] used conditional least squares estimation, and gave sufficient conditions for weak consistency of the estimator. Prakasa[4] showed that Bayes estimators and MLE estimators of SDEs have the same asymptotic properties and asymptotic distributions for suitable class of loss functions of some special types. Combining statistical methods with numerical methods, Pedersen[5] used numerical approximationsbased on iterations of the Gaussian transition densities emanating from Euler-Maruyama scheme and studied approximate maximum likelihood. Papaspiliopoulos and Beskos[6] deduced the distribution of certain operation of the numerical solution of the stochastic differential equations by Euler-Maruyama scheme, and then used the maximum likelihood method to estimate the parameters based on observations, which we call the EM-MLE estimator. Inspired by their work, we propose estimators by deducing the distributions of certain relevant operations of the exact solution, as well as numerical solutions resulted from the Euler-Maruyama and the midpoint schemes of the SDEs. Instead of the maximum likelihood method in Ref.[6], we use directly the deduced distributions together with tree-like observations to estimate the parameters. Errors of our estimators are compared with that of the EM-MLE estimator in the numerical experiments.

The contents are arranged as follows. In section 1, we derive the distribution of an operation of the exact solution of constant-coefficient homogeneous linear SDE, and deseribe the method of parameter estimation based on this distribution. In section 2 we propose two methods of parameter estimation for SDEs of It

Consider the constant-coefficient homogeneous linear SDE

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = aX\left( t \right){\rm{d}}t + bX\left( t \right){\rm{d}}B\left( t \right), }\\ {X\left( 0 \right) = {X_0} \ne 0, } \end{array} $ | (2) |

where a and b are unknown parameters. The exact solution of this SDE has the form

| $ X\left( t \right) = {X_0}\exp \left[{\left( {a-\frac{1}{2}{b^2}} \right)t + bB\left( t \right)} \right]. $ | (3) |

Obviously,

| $ X\left( {{t_i}} \right) = {X_0}\exp \left[{\left( {a-\frac{1}{2}{b^2}} \right){t_i} + bB\left( {{t_i}} \right)} \right], $ | (4) |

| $ X\left( {{t_{i + 1}}} \right) = {X_0}\exp \left[{\left( {a-\frac{1}{2}{b^2}} \right){t_{i + 1}} + bB\left( {{t_{i + 1}}} \right)} \right]. $ | (5) |

From (4) and (5), it follows

| $ \begin{array}{l} X\left( {{t_{i + 1}}} \right) = X\left( {{t_i}} \right)\exp \left[{\left( {a-\frac{1}{2}{b^2}} \right)h + } \right.\\ \;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\left. {b\left( {B\left( {{t_{i + 1}}} \right)-B\left( {{t_i}} \right)} \right)} \right], \end{array} $ | (6) |

where h=ti+1-ti, and

| $ B\left( {{t_{i + 1}}} \right) - B\left( {{t_i}} \right)\tilde{\ }N\left( {0, h} \right). $ |

Therefore,

| $ \begin{array}{l} \;\;\;\;\;\;\;\;\ln\left| {X\left( {{t_{i + 1}}} \right)} \right| - \ln\left| {X\left( {{t_i}} \right)} \right|\\ = \left( {a - \frac{1}{2}{b^2}} \right)h + b\left( {B\left( {{t_{i + 1}}} \right) - B\left( {{t_i}} \right)} \right)\\ \sim N\left( {\left( {a - \frac{1}{2}{b^2}} \right)h, {b^2}h} \right). \end{array} $ | (7) |

Suppose we have observations

| $ \begin{array}{*{20}{c}} {X\left( {{\omega _1}, {t_0}} \right), X\left( {{\omega _1}, {t_1}} \right), \cdots, X\left( {{\omega _1}, {t_N}} \right), }\\ \vdots \\ {X\left( {{\omega _m}, {t_0}} \right), X\left( {{\omega _m}, {t_1}} \right), \cdots, X\left( {{\omega _m}, {t_N}} \right), } \end{array} $ |

where ωj ∈ Ω, X(ωj, t0)=X0, for j=1, …, m.

On the first subinterval [t0, t1], we calculate

| $ \begin{array}{*{20}{c}} {\ln \left| {X\left( {{\omega _1}, {t_1}} \right)} \right| - \ln \left| {X\left( {{\omega _1}, {t_0}} \right)} \right|, }\\ {\ln \left| {X\left( {{\omega _2}, {t_1}} \right)} \right| - \ln \left| {X\left( {{\omega _2}, {t_0}} \right)} \right|, }\\ \vdots \\ {\ln \left| {X\left( {{\omega _m}, {t_1}} \right)} \right| - \ln \left| {X\left( {{\omega _m}, {t_0}} \right)} \right|.} \end{array} $ | (8) |

According to the method of moments, we can approximate the total moments by the moments of the samples. Therefore, let

| $ \begin{array}{*{20}{l}} {\left( {a - \frac{1}{2}{b^2}} \right)h = \frac{1}{m}\sum\limits_{j = 1}^m {\ln \left| {X\left( {{\omega _1},{t_1}} \right)} \right|} - }\\ {\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\ln \left| {(X\left( {{\omega _j},{t_0}} \right)} \right|,}\\ {{b^2}h = \frac{1}{{m - 1}}\sum\limits_{j = 1}^m {(\ln \left| {X\left( {{\omega _i},{t_1}} \right)} \right|} - }\\ {\;\;\;\;\;\;\;\;{{\left. {\ln \left| {X\left( {{\omega _j},{t_0}} \right)} \right| - \left( {a - \frac{1}{2}{b^2}} \right)h} \right)}^2}} \end{array} $ |

to get the estimator of a and b on the first subinterval [t0, t1], denoted by a

By performing the same procedure on the subsequent small intervals [t1, t2], …, [tN-1, tN], we get a sequence of estimators

| $ \hat a = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat a}_i}}, \hat b = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat b}_i}} . $ | (9) |

In this section, we will give parameter estimators based on two kinds of numerical discretizations of the SDEs containing unknown parameters.

2.1 Method using the Euler-Maruyama schemeConsider the SDE of It

| $ {X_{i + 1}} - {X_i} = hf\left( {{X_i}, \theta } \right) + \left[{B\left( {{t_{i + 1}}} \right)-B\left( {{t_i}} \right)} \right]\sigma \left( {{X_i}, \gamma } \right). $ | (10) |

Given Xi, we know that the distribution of the increment is

| $ {X_{i + 1}} - {X_i} \sim N\left( {hf\left( {{X_i}, \theta } \right), h{\sigma ^2}\left( {{X_i}, \gamma } \right)} \right). $ | (11) |

Suppose we have m observations X(ω10, t1), X(ω20, t1), …, X(ωm0, t1), each appearing after evolution of a time period h from the given initial value X0. Then due to (11), we can let

| $ \frac{1}{m}\sum\limits_{j = 1}^m {X\left( {\omega _j^0, {t_1}} \right)} = hf\left( {{X_0}, \theta } \right) + {X_0} $ |

and

| $ \frac{1}{{m - 1}}\sum\limits_{j = 1}^m {{{\left( {X\left( {\omega _j^0, {t_1}} \right) - hf\left( {{X_0}} \right)} \right)}^2}} = h{\sigma ^2}\left( {{X_0}, \gamma } \right), $ |

from which we get an estimate

Then, we fix an arbitrary X(ωk0, t1)=:X1 (k ∈ {1, …, m}), and observe other m values X(ω11, t2), X(ω21, t2), …, X(ωm1, t2), each resulting from evolving time h from the given value X(ωk0, t1)=X1. Let

| $ \frac{1}{m}\sum\limits_{j = 1}^m {X\left( {\omega _j^1, {t_2}} \right)} = hf\left( {{X_1}, \theta } \right) + {X_1}, $ |

and

| $ \frac{1}{{m - 1}}\sum\limits_{j = 1}^m {{{\left( {X\left( {\omega _j^1, {t_2}} \right) - hf\left( {{X_1}} \right)} \right)}^2}} = h{\sigma ^2}\left( {{X_1}, \gamma } \right). $ |

We obtain another estimate

Analogously, we can get successively a sequence of estimates

| $ \hat \theta = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat \theta }_i}}, \hat \gamma = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat \gamma }_i}} . $ | (12) |

For the SDE of Stratonovich sense

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = f\left( {X\left( {\omega, t} \right), \theta } \right){\rm{d}}t + \sigma \left( {X\left( {\omega, t} \right), \gamma } \right) \circ {\rm{d}}B\left( t \right), }\\ {X\left( {{t_0}} \right) = {X_0}, } \end{array} $ | (13) |

the midpoint scheme is a kind of consistent numerical method (see Ref.[7]). If θ and γ are unknown parameters, we apply the midpoint scheme to (13), and simulate B(ti+1)-B(ti) by

| $ \begin{array}{l} {X_{i + 1}} - {X_i} = f\left( {\frac{{{X_{i + 1}} + {X_i}}}{2}, \theta } \right)h + \\ \;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\sigma \left( {\frac{{{X_{i + 1}} + {X_i}}}{2}, \gamma } \right){\xi _i}\sqrt h . \end{array} $ | (14) |

Since scheme (14) is usually implicit, we may need to use predictor-corrector type iterations, as well as proper truncation of the ξi to compute an approximation

| $ \frac{{{{\tilde X}_{i + 1}} - {X_i} - f\left( {\frac{{{{\tilde X}_{i + 1}} + {X_i}}}{2}, \theta } \right)h}}{{\sigma \left( {\frac{{{{\tilde X}_{i + 1}} + {X_i}}}{2}, \gamma } \right)\sqrt h }} \approx {\xi _i} \sim N\left( {0, 1} \right), $ | (15) |

where Xi is given by last iteration step.

We use the same observation as described in section 2.1. According to (15), for the first time interval [t0, t1], we can let

| $ \begin{array}{*{20}{c}} {\frac{1}{m}\sum\limits_{j = 1}^m {\frac{{X\left( {\omega _j^0, {t_1}} \right) - {X_0} - f\left( {\frac{{X\left( {\omega _j^0, {t_1}} \right) + {X_0}}}{2}, \theta } \right)}}{{\sigma \left( {\frac{{X\left( {\omega _j^0, {t_1}} \right) + {X_0}}}{2}, \gamma } \right)\sqrt h }} = 0, } }\\ {\frac{1}{{m - 1}}\sum\limits_{j = 1}^m {{{\left( {\frac{{X\left( {\omega _j^0, {t_1}} \right) - {X_0} - f\left( {\frac{{X\left( {\omega _j^0, {t_1}} \right) + {X_0}}}{2}, \theta } \right)h}}{{\sigma \left( {\frac{{X\left( {\omega _j^0, {t_1}} \right) + {X_0}}}{2}, \gamma } \right)\sqrt h }}} \right)}^2}} }\\ { = 1, } \end{array} $ |

from which we can get an estimate

| $ \hat \theta = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat \theta }_i}}, \hat \gamma = \frac{1}{N}\sum\limits_{i = 1}^N {{{\hat \gamma }_i}} . $ | (16) |

Alternatively, we can transform the SDE of Stratonovich sense (13) into its equivalent It

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = \left( {f\left( {X\left( {\omega, t} \right), \theta } \right) + \frac{1}{2}\frac{{\partial \sigma }}{{\partial X}} \cdot } \right.}\\ {\left. {\sigma \left( {X\left( {\omega, t} \right), \gamma } \right)} \right){\rm{d}}t + \sigma \left( {X\left( {\omega, t} \right), \gamma } \right){\rm{d}}B\left( t \right), }\\ {X\left( {{t_0}} \right) = {X_0}, } \end{array} $ | (17) |

and then use the method given in section 2.1 to estimate the parameters. We will compare the results of the two approaches based on Stratonovich and It

For the linear SDE (2), let t0=0, T=1, X0=1. Suppose a and b are given. a and b will be estimated by using the method given in section 1 to test the ability of our estimator.We set the number of subintervals of time to be N=500 and the number of sample path observations to be m=100, and we perform five tests for five different groups of a and b values. The observation data are simulated by the exact solution (3). Our five group of numerical results

|

|

Table 1 Estimates based on the exact solution |

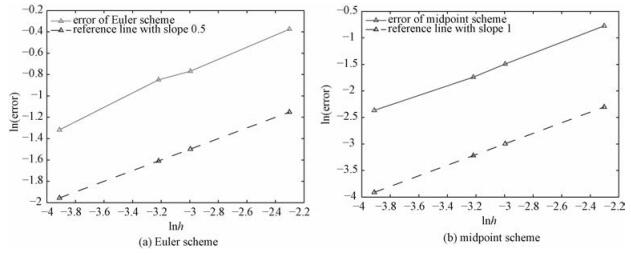

In Fig. 1(a), we plot the points (a, b) denoted by 'º', and the corresponding estimates (

|

Download:

|

|

Fig. 1 Exact values and estimates of the parameter (a, b) |

|

To test the effect of the parameter estimations based on the Euler-Maruyama and the midpoint schemes, we first consider the linear SDE of Stratonovich sense

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = aX\left( t \right){\rm{d}}t + bX\left( t \right) \circ {\rm{d}}B\left( t \right), }\\ {X\left( 0 \right) = {X_0} \ne 0, } \end{array} $ | (18) |

and its equivalent It

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = \left( {a + \frac{1}{2}{b^2}} \right)X\left( t \right){\rm{d}}t + bX\left( t \right){\rm{d}}B\left( t \right), }\\ {X\left( 0 \right) = {X_0} \ne 0.} \end{array} $ | (19) |

We apply the midpoint scheme to (18) and the Euler-Maruyama scheme to (19). Then we compare their effects.

The data are set as t0=0, T=1, X0=1, N=100, and m=500, and the observation data are generated by the exact solution of (18) and (19)

| $ X\left( t \right) = {X_0}\exp \left( {at + bB\left( t \right)} \right). $ | (20) |

Again, we perform five tests with five different groups (a, b), and denote the estimates by the Euler-Maruyama scheme with (

|

|

Table 2 Estimates based on the numerical solutions |

In Fig. 1(b) we plot the five points (a, b) denoted by 'º', and the corresponding estimates (

In this section, we analyze the relation between the error of estimate

Set X0=1, t0=0, T=1, a=3, b=1, and the number of observed sample paths m=500. We test four different values of the time step size of the numerical solutions, namely, h=0.02, 0.04, 0.05, and 0.1. In Table 3 listed are the estimated values (

|

|

Table 3 Estimates and the corresponding h values |

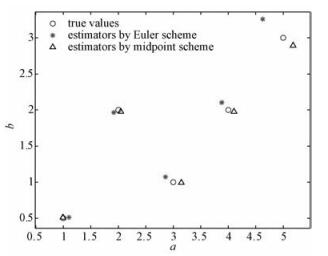

In Table 3, we observe an inereasing tendency of errore with h for both numerical schemes. For a clearer demonstration, we plot lne against lnh for the four different values of h and the corresponding errors e in Fig. 2, with Fig. 2(a) for the Euler-Maruyama scheme and Fig. 2(b) for the midpoint scheme. In Fig. 2, we can observe the order of the error with respect to h. The dashed reference line in Fig. 2(a) is of slope 0.5. The near parallelism between the reference line and the plotted lne-lnh line indicates that the order of the error e for the Euler-Maruyama scheme is about O(h0.5). Analogously, it can be inferred from Fig. 2(b) that the error e for the midpoint scheme is of order O(h), since the dashed reference line in Fig. 2(b) is of slope 1.

|

Download:

|

|

Fig. 2 Error orders with respect to h |

|

Meanwhile, it is interesting to note that the Euler-Maruyama method applied to the SDE (19) is of root mean-square convergence order 0.5, while the midpoint scheme applied to the SDE (18) is of root mean-square convergence order 1 ([7, 8]). Three facts imply that the estimate errors based on the numerical solutions may mainly result from the error of the numerical methods themselves. More accurate estimators might be obtained by applying higher-order numerical methods.

3.4 Error comparison with the EM-MLE estimatorFor the linear SDE (2), we apply our estimator based on the exact solution, which we call the exact solution estimator, and the EM-MLE estimator [6] to estimate the parameters (a, b), which are given as a=3, b=1. Moreover, set X0=1, t0=0, and T=1. We perform four tests with four different values of h, namely, h=0.02, 0.04, 0.05, and 0.1, and observe m=100 sample paths for each. Denote the estimate arising from our method by (

|

|

Table 4 Exact solution and EM-MLE estimators |

Table 4 shows that our exact solution estimator is more accurate than the EM-MLE estimator.

To compare our numerical solution estimators with the EM-MLE estimator, we consider the linear SDE of Stratonovich sense (18), to which we apply the midpoint scheme estimator, and the equivalent It

In Table 5, (

|

|

Table 5 Numerical solution and EM-MLE estimators |

To test the effect of our estimators on non-linear SDEs, we consider the following SDE

| $ \begin{array}{*{20}{c}} {X\left( t \right) = a{X^2}\left( t \right){\rm{d}}t + b{X^2}\left( t \right) \circ {\rm{d}}B\left( t \right), }\\ {X\left( {{t_0}} \right) = {X_0}, } \end{array} $ | (21) |

and its equivalent It

| $ X\left( t \right) = \left( {a{X^2}\left( t \right) + {b^2}{X^3}\left( t \right)} \right){\rm{d}}t + b{X^2}\left( t \right){\rm{d}}B\left( t \right), $ | (22) |

where a and b are unknown parameters to be estimated.

We apply the midpoint estimator to (21), and the Euler-Maruyama estimator to (22). Set X0=0.5, t0=0, T=1, and h=0.02. We use the midpoint scheme with tiny step size h=0.001 to (21) to simulate the true solution of the system which serves as observation data for our tests, and we conduct m=500 samples. The estimation results with different sets of a and b are listed in Table 6, where

|

|

Table 6 Estimates with different a and b values |

Again, we plot the five groups of points (a, b), (

|

Download:

|

|

Fig. 3 Exact values and estimates of the parameter (a, b) in the nonlinear SDE (21) or (22) |

|

Then, we fixa=1 and b=2, but take h=0.02, 0.04, 0.05, and 0.1, to observe the order of the error

|

|

Table 7 Estimates and errors |

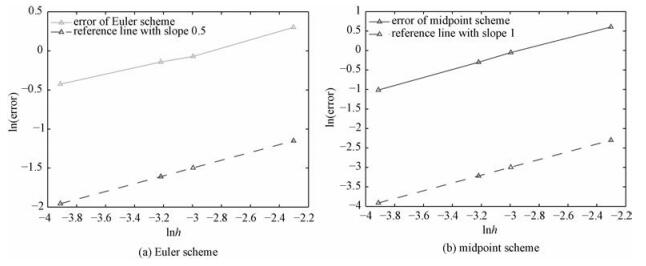

Figure 4(a) and Fig. 4(b) illustrate the ln|error| ~ ln h relationships arising from the Euler-Maruyama and the midpoint estimator, respectively.

|

Download:

|

|

Fig. 4 Error orders with respect to h of non-linear SDE estimators |

|

It is indicated that for the non-linear SDE (22) or its equivalent equation (21), the order of the error ee produced by the Euler-Maruyama estimator is about O(h0.5) while that by the midpoint estimator is about O(h). This is the same as the indication for the numerical results for the linear SDE in the previous subsections.

3.6 A remarkIt is observed from the above-performed numerical tests that, with the growth of the order of the numerical method, the estimation accuracy is improved. It is then naturally expected that higher order methods give better estimations. However, in high-order stochastic numerical schemes such as the weak order 2 It

Up to now we have considered estimation of 2 unknown parameters appearing in the drift and diffusion coefficients of a SDE. Actually, our methods can be extended to estimate multiple unknown parameters. Consider the following SDE

| $ \begin{array}{*{20}{c}} {{\rm{d}}X\left( t \right) = f\left( {X\left( {\omega, t} \right), {\theta _1}, \cdots, {\theta _p}} \right){\rm{d}}t + }\\ {\sigma \left( {X\left( {\omega, t} \right), {\gamma _1}, \cdots, {\gamma _q}} \right){\rm{d}}B\left( t \right), }\\ {X\left( {{t_0}} \right) = {X_0}, } \end{array} $ | (23) |

where θ1, …, θp, γ1, …, γq are (p+q) unknown parameters to be estimated. Applying the Euler-Maruyame scheme to the SDE (23), we have

| $ {X_{i + 1}} - {X_i} \sim N\left( {hf\left( {{X_i}, \Theta } \right), h{\sigma ^2}\left( {{X_i}, \Gamma } \right)} \right), $ | (24) |

where Θ:=(θ1, …, θp), Γ:=(γ1, …, γq).

Given m observations at time

| $ \begin{array}{*{20}{c}} {\frac{1}{m}\sum\limits_{j = 1}^m {X\left( {\omega _j^0, {t_1}} \right)} = hf\left( {{X_0}, \Theta } \right) + {X_0}, }\\ {\frac{1}{{m - 1}}\sum\limits_{j = 1}^m {{{\left( {X\left( {\omega _j^0, {t_1}} \right) - {X_0} - hf\left( {{X_0}, \Theta } \right)} \right)}^2}} }\\ { = h{\sigma ^2}\left( {{X_0}, \Gamma } \right), }\\ {\frac{1}{m}\sum\limits_{j = 1}^m {X{{\left( {\omega _j^0, {t_1}} \right)}^3}} = E\left( {X_1^3} \right) = \int\limits_{ - \infty }^{ + \infty } {{x^3}{\rho _1}\left( x \right){\rm{d}}x}, }\\ \vdots \\ {\frac{1}{m}\sum\limits_{j = 1}^m {X{{\left( {\omega _j^0, {t_1}} \right)}^{p + q}}} = E\left( {X_1^{p + q}} \right) = \int\limits_{ - \infty }^{ + \infty } {{x^{p + q}}{\rho _1}\left( x \right){\rm{d}}x}, } \end{array} $ | (25) |

where

| $ \begin{array}{l} g\left( t \right) = E{{\rm{e}}^{{\rm{i}}t{X_1}}} = \frac{1}{{\sqrt {2{\rm{\pi }}} {d_1}}}\int\limits_{ - \infty }^{ + \infty } {{{\rm{e}}^{{\rm{i}}tx}}\exp \left( { - \frac{{{{\left( {x - {c_1}} \right)}^2}}}{{2d_1^2}}} \right){\rm{d}}x} \\ \;\;\;\;\;\;\; = {{\rm{e}}^{{\rm{i}}{c_1}t - \frac{{d_1^2{t^2}}}{2}}}, \end{array} $ | (26) |

with the property that the k-th derivative of g at t=0 is g(k)(0)=ikE(X1k).

There are (p+q) unknowns and (p+q) equations in the equations system (25), which is usually non-linear and might need iteration methods for solving the unknowns

Given observations at time t2=t0+2h, …, tN=t0+Nh, we write similar equations systems regarding the p+q moments of Xi with probability density function

| $ {{\hat \theta }_i} = \frac{1}{N}\sum\limits_{l = 1}^N {\hat \theta _i^l}, {{\hat \gamma }_i} = \frac{1}{N}\sum\limits_{l = 1}^N {\hat \gamma _i^l}, $ | (27) |

for i=1, …, p, j=1, …, q.

Note that the observation data are the same tree-like data as described in section 3.1, wherefore the Xi is known when we calculate the moments of Xi+1, for i=0, …, N-1.

| [1] | Morel J M, Takens F, Teissier B. Parameter estimation in stochastic differential equations[M]. Berlin Heidelberg: Springer-Verlag, 2008: 6-10. |

| [2] | Breton A L. On continuous and discrete sampling for parameter estimation in diffusion type processes[M]. Berlin Heidelberg: Springer, 1976: 124-144. |

| [3] | Dorogovcev A J. The asymptotic properties of least square estimators for regression coefficients[J]. Problemy Peredai Informacii, 1973, 4:49–57. |

| [4] | Prakasa R. On Bayes estimation for diffusion fields[M]. North Holland: Statistical Publishing Society, 1984: 575-590. |

| [5] | Pedersen A R. A new approach to maximum likelihood estimation for stochatic differential equations based on discrete observations[J]. Scandinavian Journal of Statistics, 1995, 22(1):55–71. |

| [6] | Papaspiliopoulos O, Beskos A. An introduction to modelling and likelihood inference with stochastic differential equations. Great Britain: Warwick, 2011. |

| [7] | Kloeden P E, Platen E. Numerical solution of stochastic differential equations[M]. Berlin Heidelberg: Springer-Verlag, 1992: 305. |

| [8] | Milstein G N, Tretyakov M V. Stochastic numerics for mathematical physics[M]. Berlin Heidelberg: Springer, 2004: 9-10. |

2017, Vol. 34

2017, Vol. 34