2. School of Information Science and Technology, ShanghaiTech University, Shanghai 201210, China;

3. University of Chinese Academy of Sciences, Beijing 100049, China


Wildfires are devastating because they cause considerable losses of forest resources, human lives and property. Furthermore, according to Ref.[1], the frequency of large wildfires has increased markedly in recent decades, mostly because of climate and ecosystem changes. A reliable prediction model of forest fire susceptibility is therefore critical for public safety, forest management and suppression planning. However, predicting fire risk with high accuracy remains difficult, largely because of the non-linearity and complexity of the wildfire process, which is governed by many influencing factors[2], e.g. ignition factors, biomass fuels and weather conditions[3].

Various models have been proposed to tackle this problem. Early work ranged from empirical and quasi-empirical modelling[4-5] and regression methods[6-7] to more complex statistical learning techniques[8-9]. In recent years, with the rapid development of machine learning, more and more advanced machine learning algorithms have been applied and compared in this field, bringing better accuracy and robustness to wildfire prediction[10-11]. Ghorbanzadeh et al.[12] introduced a forest fire susceptibility and risk mapping approach using both data-based and knowledge-based multi-criteria decision analyses; they used an ANN (artificial neural network) to generate a hazard map and achieved satisfactory overall results in terms of RMSE and the ROC curve. Pourghasemi et al.[13] recently tested three well-known machine learning techniques, boosted regression tree (BRT), general linear model (GLM) and mixture discriminant analysis (MDA), for forest fire susceptibility assessment. Their results indicate that the BRT and MDA models are sufficiently accurate for forest fire susceptibility mapping. Despite the success of machine learning in forest management, these techniques share an obvious limitation: they generally accept only pixel-wise data or handcrafted features, which yields a poor representation of the data and fails to exploit spatial patterns, thus limiting their performance.

Deep learning, on the other hand, has powered many aspects of modern society and achieved performance far superior to that of conventional machine learning techniques, mostly due to its ability to learn deep features, which greatly enlarges model capacity[14]. Deep learning techniques have been shown (so far, only empirically) to be very good at discovering intricate structures in high-dimensional data[14] and are therefore applicable to many domains, such as image recognition, speech recognition, biomedical analysis and natural language understanding, many of which, according to LeCun et al.[14], 'have resisted the best attempts of the artificial intelligence community for many years'. Zhang et al.[15] applied a convolutional neural network (CNN) to predict forest fire susceptibility from gridded data of 14 forest fire influencing factors; their results confirmed that the CNN models achieved higher accuracy than traditional machine learning techniques. Wildfire spread modelling also relies heavily on land characteristics. Hodges and Lattimer[16] proposed a wildfire spread modelling method based on a deep convolutional inverse graphics network (DCIGN); their predicted burn maps agreed well overall with simulation results from FARSITE[4] while reducing simulation time by a factor of 10² to 10⁵. Considerable effort has also been devoted to remotely sensed image classification[17-18] and landslide susceptibility mapping[19], all showing that deep CNNs can capture complex, implicit spatial patterns that greatly boost performance. However, all these approaches follow a supervised learning scheme, which requires a considerable amount of high-quality labelled training data, especially for training a deep CNN, and acquiring labels is almost always expensive and time-consuming.

Given these circumstances, in this paper we apply unsupervised representation learning methods to model forest geographic information; the learned representations can easily be integrated into any existing machine learning algorithm that leverages land characteristics (pixel-wise or raster data). That is, a pretrained model first extracts deep feature embeddings from the relevant factors, and these learned features then replace handcrafted features so that spatial patterns are fully exploited. We summarize our contributions as follows:

1) We make the first attempt to introduce unsupervised learning approaches to land characteristics modelling. In our experiments, these approaches produce results comparable to those of a supervised baseline model, and they can be applied almost directly to downstream tasks such as forest fire susceptibility prediction and fire resurgence probability prediction.

2) We create and clean a large-scale dataset based on LANDFIRE[20]'s LF shared program, which makes training a deep neural network possible. Four sets of labels are processed and provided.

3) Based on this dataset, we conduct several experiments showing that deep convolutional neural networks can predict fire susceptibility with high accuracy. We design a series of benchmarks showing that the unsupervised learning methods perform comparably to the supervised baseline model in the forest fire susceptibility prediction task. We also show that these methods learn stable and discriminative data representations, indicating their great potential for modelling forest land and vegetation cover characteristics.

1 Overview of deep unsupervised representation learning

According to Bengio et al.[21], the success of machine learning algorithms is 'heavily dependent on the choice of data representation'. For conventional machine learning methods, this choice relies on experienced experts. In deep learning, the representation is instead learned from data, or more specifically, discovered automatically from raw data, which is one key reason for the great success of deep learning models[14]. However, training a supervised deep learning model in a specific field first requires a large amount of labelled data to be collected, cleaned and preprocessed by hand, which is expensive. Moreover, even a slight difference in statistical distribution between the training set and the real scenario can severely degrade performance, and unfortunately such a difference is almost always present[22]. As a result, in recent years more and more researchers have turned to transfer learning, domain adaptation, and semi-supervised, weakly supervised and unsupervised learning to design systems that adapt robustly to new conditions without a large amount of expensive supervision[23].

One simple, well-performing and therefore commonly adopted transfer learning technique in deep learning is to start from a model pretrained on a large-scale labelled dataset and then fine-tune it on a much smaller, possibly slightly different target domain. For example, a deep CNN pretrained on the ImageNet dataset outperforms models trained from scratch on downstream tasks such as image classification, object detection, semantic segmentation and image super-resolution when training data are limited. However, two problems arise here. First, it is almost impossible to label a forest geographic information dataset of a size and quality comparable to ImageNet (even its most widely used subset contains more than a million images). Second, downstream tasks in forest management are considered less closely related to one another than those in computer vision.

The main goal of unsupervised learning is to learn data representations from unlabeled data only, so that one pretrained model can be applied almost directly to a set of downstream tasks. There are two main forms of unsupervised representation learning.

1) Generation-based models learn meaningful latent representations that can be used in downstream tasks by learning to generate examples[24]. Popular approaches include auto-encoders[25], restricted Boltzmann machines[26], variational auto-encoders[27] and generative adversarial networks[28].

2) Self-supervised learning models leverage a carefully designed pretext task to derive proxy labels from raw data. Various such tasks have been explored, e.g. predicting the relative arrangement of image patches[29], solving jigsaw puzzles[30], colorizing grayscale images[31], image inpainting[32], cross-channel prediction[33], counting visual primitives[34] and predicting image rotations[35].

2.1) Clustering-based models update the feature extractor with pseudo-labels assigned by clustering the feature embeddings.

2.2) Contrastive learning models learn representations by contrasting similar and dissimilar representations of data organized into similar/dissimilar pairs[36].
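To make the idea of a pretext task concrete, the sketch below shows how the rotation-prediction task[35] could derive proxy labels from unlabeled raster patches. It is only an illustration (this task is not one of the two approaches evaluated in this paper) and assumes square patches stored as NumPy arrays of shape (N, C, H, W); the function name and the 9-channel example are hypothetical.

```python
import numpy as np


def make_rotation_pretext_batch(patches: np.ndarray):
    """Build a rotation-prediction batch from unlabeled patches of shape (N, C, H, W).

    Returns 4N rotated patches and proxy labels in {0, 1, 2, 3}, where
    label k means "rotated by k * 90 degrees" in the spatial (H, W) plane.
    """
    if patches.shape[-1] != patches.shape[-2]:
        raise ValueError("square patches are required so every rotation keeps its shape")
    rotated = [np.rot90(patches, k=k, axes=(-2, -1)) for k in range(4)]
    inputs = np.concatenate(rotated, axis=0)
    # The labels come from the transformation itself, not from human annotation.
    labels = np.repeat(np.arange(4), patches.shape[0])
    return inputs, labels


rng = np.random.default_rng(0)
batch = rng.random((8, 9, 32, 32))  # 8 toy patches with 9 input layers
x, y = make_rotation_pretext_batch(batch)
print(x.shape, y.shape)
```

A network trained to predict `y` from `x` must learn spatial structure to succeed, which is what makes its features reusable downstream.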

In this paper, we empirically choose one clustering-based model and one contrastive learning model and evaluate their performance in modelling forest landform characteristics.

2 Methodology

In this section, we formally introduce our overall scheme for modelling geographic information. We first describe the data acquisition and preprocessing procedure in section 2.1, then explain the models we use in section 2.2; implementation details are described in section 2.3.

2.1 Data acquisition

We consider three groups of forest fire influencing factors in our experiments: topography-related, canopy-related and fuel-related variables. We acquire our data from LANDFIRE[20]'s LF shared program, which produces 'consistent, comprehensive, geospatial data and databases that describe vegetation, wildland fuel, and fire regimes across the United States and insular areas'. LF data products include vegetation, disturbance, fuel, fire regime, topographic and seasonal data. The LF program was initiated to map fuel and fire, and it provides various historical fire data for applications such as fuel treatments and fire management planning. Six raster layers have been collected and cleaned: forest canopy bulk density (LF product code: 200CBD), forest canopy base height (200CBH), forest canopy cover (200CC), forest canopy height (200CH), digital elevation model (DEM2016) and the 40 Scott/Burgan fire behavior fuel models (200FBFM40). All layers have a spatial resolution of 30 m, and the area of interest covers most of the conterminous United States, ranging from 125.0°W, 49.1°N (upper-left corner) to 85.0°W, 30.0°N (lower-right corner). Data specifications are listed in Table 1.

Table 1 Input layer specifications

Data are cleaned and processed with the following procedure:

1) Slice the raw raster grids into tiles of a suitable size. Each data point is 256×256 pixels (7 680 m wide in surface distance), a size that is efficient to compute while keeping the pixels spatially correlated. The Python library Rasterio[37] is used for input/output.

2) Data cleaning is divided into two steps. First, we exclude samples with too many no-data or non-burnable pixels. No data means that not enough information is known about a cell location, so no valid value is assigned; non-burnable information is extracted from layer 200FBFM40 and typically covers urban, snow, water, barren and managed agricultural areas. We keep only samples in which less than 20% of the pixels are no-data or non-burnable. Second, for valid data points, we linearly map each pixel value to the range [0, 1], which makes computation more efficient.

3) Remap the fire behavior fuel model layer according to Ref.[38]. We adopt four fuel characteristics: fine fuel load (t/ac), characteristic SAV (surface-area-to-volume ratio, 1/ft), packing ratio and extinction moisture content (%). Four layers are thus constructed.

4) Generate labels based on four statistical fire regime layers from LANDFIRE[20]: mean fire return interval (140MFRI), percent low-severity fire (200PLS), percent mixed-severity fire (200PMS) and percent replacement-severity fire (200PRS). These characteristics are empirically considered representative of land fire risk and are therefore used to test the performance of the proposed models. We further calculated the Pearson product-moment correlation coefficients of the four label sets:

| $ \left[\begin{array}{ccccc} & \text { PLS } & \text { PMS } & \text { PRS } & \text { MFRI } \\ \text { PLS } & 1.0 & 0.047 & -0.692 & -0.472 \\ \text { PMS } & 0.047 & 1.0 & -0.444 & 0.116 \\ \text { PRS } & -0.692 & -0.444 & 1.0 & 0.228 \\ \text { MFRI } & -0.472 & 0.116 & 0.228 & 1.0 \end{array}\right] $ |

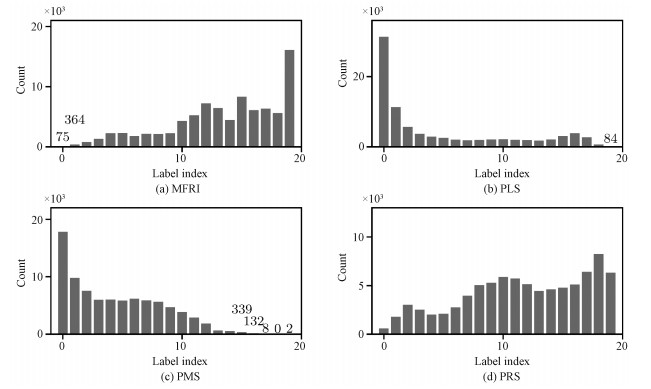

Apart from the PRS label set, the label sets are only weakly correlated with one another (except for a moderate negative correlation between PLS and MFRI). This is desirable, since the label sets can then be used, to some extent, to reflect the generalization performance of the extracted representations. For each data point, the label is the average over all of its pixels. Fig. 1 visualizes the distributions of the four processed labels, showing that the class distribution is quite imbalanced.
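The processing steps above can be sketched in NumPy as follows. This is an illustration under assumptions that the text does not specify and that should be checked against the LANDFIRE documentation and the original pipeline: the no-data value (-9999), the non-burnable FBFM40 codes (91, 92, 93, 98, 99, i.e. NB1 to NB3, NB8 and NB9), per-layer normalization bounds, and equal-width binning of the averaged labels into 20 classes. Tiles are cut from an in-memory array here; with Rasterio[37], each tile could instead be read directly via a windowed read such as `dataset.read(window=rasterio.windows.Window(col_off, row_off, 256, 256))`. The fuel-model table passed to `remap_fuel_models` must come from Ref.[38]. All function names are hypothetical.

```python
import numpy as np

# --- Illustrative assumptions (verify against LANDFIRE documentation) ---
TILE = 256                              # pixels per side (step 1)
NODATA = -9999                          # assumed LANDFIRE no-data value
NON_BURNABLE = (91, 92, 93, 98, 99)     # assumed FBFM40 codes NB1-NB3, NB8, NB9
MAX_INVALID = 0.20                      # step 2: keep tiles with < 20% invalid pixels
N_CLASSES = 20                          # matches the 20-way classifier used later


def tile_raster(stack, tile=TILE):
    """Step 1: split a (C, H, W) stack into non-overlapping (C, tile, tile) tiles."""
    _, h, w = stack.shape
    return [stack[:, r:r + tile, c:c + tile]
            for r in range(0, h - tile + 1, tile)
            for c in range(0, w - tile + 1, tile)]


def is_valid_tile(tile, fbfm40):
    """Step 2a: reject tiles whose no-data or non-burnable fraction is >= 20%."""
    invalid = (np.any(tile == NODATA, axis=0)
               | np.isin(fbfm40, NON_BURNABLE)
               | (fbfm40 == NODATA))
    return bool(invalid.mean() < MAX_INVALID)


def min_max_scale(tile, lo, hi):
    """Step 2b: map each layer linearly to [0, 1] with per-layer bounds lo, hi of shape (C,)."""
    lo = np.asarray(lo, dtype=float)[:, None, None]
    hi = np.asarray(hi, dtype=float)[:, None, None]
    return np.clip((tile - lo) / np.maximum(hi - lo, 1e-12), 0.0, 1.0)


def remap_fuel_models(fbfm40, table, max_code=255):
    """Step 3: turn an (H, W) FBFM40 code grid into a (4, H, W) parameter stack.

    `table` maps a fuel model code to (fine fuel load, SAV, packing ratio,
    extinction moisture); codes missing from it become NaN. Mask no-data
    first, because negative codes would index the lookup table from the end.
    """
    lut = np.full((max_code + 1, 4), np.nan)
    for code, params in table.items():
        lut[code] = params
    return np.moveaxis(lut[fbfm40], -1, 0)


def tile_label(label_tile):
    """Step 4: one label per tile, averaged over its pixels (no-data set to NaN)."""
    return float(np.nanmean(label_tile))


def to_class(y, n_classes=N_CLASSES):
    """Quantize labels in [0, 1] into classes 1..n_classes (equal-width bins assumed)."""
    idx = np.floor(np.asarray(y, dtype=float) * n_classes).astype(int)
    return np.clip(idx, 0, n_classes - 1) + 1


def label_correlation(label_sets):
    """Pearson correlation matrix of label sets given as rows (e.g. PLS, PMS, PRS, MFRI)."""
    return np.corrcoef(label_sets)


stack = np.zeros((2, 600, 1000))        # toy 2-layer raster
tiles = tile_raster(stack)
print(len(tiles), TILE * 30)            # 6 7680 (tile count, tile width in meters)
```

Edge strips narrower than a full tile are simply dropped in this sketch; whether the original pipeline kept or padded them is not stated.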

Fig. 1 Label distribution

We apply two state-of-the-art unsupervised representation learning approaches in our experiments. Since we make only limited modifications, we explain only their basic ideas here and refer the reader to the original papers for in-depth information.

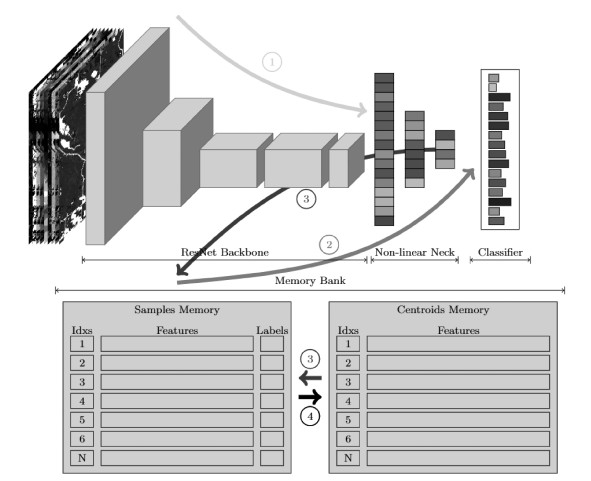

The clustering-based model uses cluster-generated pseudo-labels as supervision to update the whole network. It relies on the observation that a randomly initialized CNN is already somewhat discriminative and can therefore serve as a bootstrap[30]. For example, a randomly initialized AlexNet with a fully connected classification head achieves 12% accuracy on ImageNet, far above the chance level of 0.1%. In this paper, we follow the online deep clustering (ODC) method proposed in Ref.[39], which achieves state-of-the-art results among this class of approaches. ODC introduces a samples memory and a centroids memory so that the learned representation is updated steadily. The samples memory stores sample feature vectors and their corresponding labels, while the centroids memory stores the features of the class centroids (for K-means, a class centroid is the mean feature of all samples in the class, although other clustering algorithms can be used); sample labels and network parameters can therefore be updated simultaneously. A typical ODC architecture is shown in Fig. 2. Model training takes four steps:

Fig. 2 Online deep clustering training process

1) Feed the raster data forward through the network to map the inputs to compact feature vectors;

2) Back-propagate through the network to update the trainable parameters according to the pseudo-labels stored in the samples memory;

3) Use the new compact feature vectors to update the sample features and assign new labels (according to the previous centroids);

4) Update the centroids memory with the evolved features.

Additional techniques such as loss re-weighting and cluster division are applied to prevent the network from getting stuck in trivial solutions.
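Steps 3 and 4 above can be sketched with two NumPy arrays standing in for the samples and centroids memories. This is a simplified, hypothetical illustration, not the reference ODC implementation: it assumes L2-normalized features, cosine similarity for relabelling, and a momentum of 0.5 for blending new features into memory, and it omits step 2 (back-propagation), loss re-weighting and cluster division.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length (rows of zeros are left at zero)."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), 1e-12)


class OnlineClusteringMemory:
    """Hypothetical, simplified ODC-style samples and centroids memories.

    feats: (N, D) features of all samples; labels: (N,) initial pseudo-labels
    (e.g. from an initial K-means run); n_clusters: number of clusters K.
    """

    def __init__(self, feats, labels, n_clusters, momentum=0.5):
        self.feats = l2_normalize(np.asarray(feats, dtype=float))
        self.labels = np.asarray(labels, dtype=int).copy()
        self.k = n_clusters
        self.momentum = momentum  # assumed weight given to the newest features
        self.centroids = np.zeros((n_clusters, self.feats.shape[1]))
        self.update_centroids()

    def update_samples(self, idx, new_feats):
        """Step 3: blend new batch features into memory, then relabel each
        sample by its most similar (previous) centroid."""
        m = self.momentum
        fresh = l2_normalize(np.asarray(new_feats, dtype=float))
        self.feats[idx] = l2_normalize((1 - m) * self.feats[idx] + m * fresh)
        sims = self.feats[idx] @ l2_normalize(self.centroids).T  # cosine similarity
        self.labels[idx] = np.argmax(sims, axis=1)

    def update_centroids(self):
        """Step 4: each centroid becomes the mean memory feature of its members."""
        for c in range(self.k):
            members = self.feats[self.labels == c]
            if len(members):  # an empty cluster keeps its previous centroid
                self.centroids[c] = members.mean(axis=0)
```

In a training loop, `update_samples` would be called with each batch's sample indices and freshly computed features, and `update_centroids` periodically (e.g. every 10 iterations, as in the implementation details).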

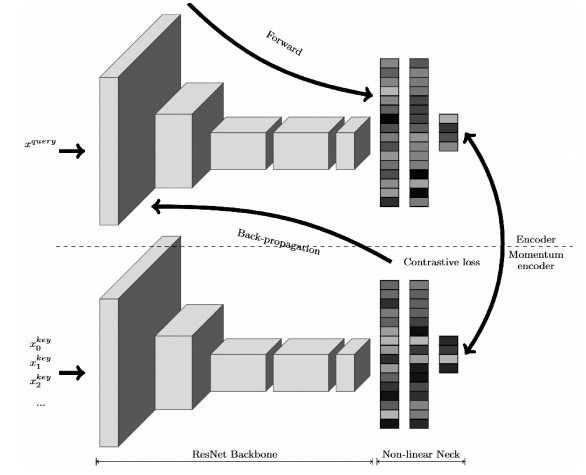

The contrastive learning model uses a contrastive loss to update the network parameters, maximizing agreement between the representations of two different augmentations of the same input and minimizing agreement between representations of different inputs. He et al.[40] presented Momentum Contrast (MoCo, Fig. 3), a way of building a large and consistent dictionary for contrastive learning that treats learning as dictionary look-up: an encoded 'query' should be similar to its matching key and dissimilar to all other keys. An encoder is trained to generate feature embeddings, while a momentum-updated encoder produces slowly evolving, and therefore relatively consistent, keys. The dynamic dictionary is built on the fly as a first-in, first-out queue. The encoder network is trained with a contrastive loss function called InfoNCE[41]:

| $ \mathcal{L}_q=-\log \frac{\exp \left(q \cdot k_{+} / \tau\right)}{\sum\nolimits_{i=0}^K \exp \left(q \cdot k_i / \tau\right)} . $ |

Fig. 3 Momentum contrast architecture

where q is an encoded query, k+ is the key that matches q, and k_i (i = 0, …, K) are the keys in the dictionary, namely k+ and K negative keys. τ is a temperature hyper-parameter that controls the concentration level of the distribution. This loss can be viewed as the log loss of a (K+1)-way softmax classifier that tries to classify q as k+ rather than as any other key.
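The three MoCo ingredients described above, the InfoNCE loss over one positive and K negative keys, the momentum update of the key encoder, and the first-in, first-out dictionary, can be sketched on plain NumPy arrays. The temperature 0.07 and momentum 0.999 are the defaults reported in the MoCo paper and are assumptions here, since this document does not state the values used; the function names are hypothetical.

```python
import numpy as np


def info_nce(q, k_pos, queue, tau=0.07):
    """InfoNCE loss averaged over a batch.

    q, k_pos: (B, D) L2-normalized queries and their positive keys;
    queue: (K, D) negative keys. Each row is a (K+1)-way classification
    whose correct class is index 0 (the positive key).
    """
    l_pos = np.sum(q * k_pos, axis=1, keepdims=True)   # (B, 1)  q . k+
    l_neg = q @ queue.T                                # (B, K)  q . k_i
    logits = np.concatenate([l_pos, l_neg], axis=1) / tau
    logits -= logits.max(axis=1, keepdims=True)        # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[:, 0].mean())


def momentum_update(theta_k, theta_q, m=0.999):
    """Key-encoder parameters slowly follow the query encoder."""
    return m * theta_k + (1.0 - m) * theta_q


def enqueue_dequeue(queue, new_keys):
    """FIFO dictionary: append the newest keys and drop the same number of oldest ones."""
    return np.concatenate([queue, new_keys], axis=0)[len(new_keys):]
```

Because `momentum_update` moves the key encoder only slightly per step, keys already in the queue stay comparable with new ones, which is what lets the dictionary be much larger than a mini-batch (8 192 entries in our setting).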

Loss function Unlike an ordinary classification problem, where each label index represents a discrete class (e.g. identifying whether an image shows a cat or a dog), our labels are continuous, as shown in Fig. 1. We therefore follow a multitask learning scheme:

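· We use the probability-weighted average of the class indices as the model output, which can be formulated as

| $ \hat{\boldsymbol{y}}_i=\sum\limits_{\text {class }} j\, p_j . $ |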

Here x^query is the query data sample and x_0^key, x_1^key, x_2^key, … represent the dictionary queue.

· The loss function consists of three terms: a cross-entropy loss, a mean loss and a variance loss. Cross-entropy loss is a commonly used criterion for training multiclass classifiers[42] and is calculated as

| $ \mathcal{L}_{\text {cross entropy }}=-\frac{1}{N} \sum\limits_N \log \left(p_j\right) . $ |

where N is the batch size and p_j is the softmax probability of the ground-truth class j. We add the mean and variance losses as regularization terms that guide the model to learn a predicted distribution whose mean is close to the ground truth and whose shape is concentrated[43]. The mean and variance losses are computed as

| $ \begin{gathered} \mathcal{L}_{\text {mean }}=\frac{1}{N} \sum\limits_N\left(\hat{\boldsymbol{y}}_i-\boldsymbol{y}_i\right)^2, \\ \mathcal{L}_{\mathrm{var}}=\frac{1}{N} \sum\limits_N \sum\limits_{\text {class }} p_j\left(j-\hat{\boldsymbol{y}}_i\right)^2 . \end{gathered} $ |

Thus, our final loss function can be expressed as

| $ \mathcal{L}=\lambda_1 \mathcal{L}_{\text {mean }}+\lambda_2 \mathcal{L}_{\text {var }}+\mathcal{L}_{\text {cross entropy }} . $ |

where λ1 and λ2 are two tunable hyper-parameters.
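A minimal NumPy sketch of this combined objective, assuming 20 classes indexed j = 1..20 and a ground truth y_i expressed on the same 1..20 scale (the scale used in the mean loss is not stated in the text). The variance term is computed as the variance of the predicted distribution, Σ_j p_j (j − ŷ_i)², as in the mean-variance loss of Ref.[43]; λ1 = 0.26 and λ2 = 0.69 are the values reported in the implementation details. The function names are hypothetical.

```python
import numpy as np

CLASSES = np.arange(1, 21)  # class indices j = 1..20


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def mean_variance_ce_loss(logits, y_cls, y_true, lam1=0.26, lam2=0.69):
    """Return (lam1 * L_mean + lam2 * L_var + L_ce, y_hat) for a batch.

    logits: (N, 20); y_cls: (N,) ground-truth class in 1..20;
    y_true: (N,) ground truth on the same 1..20 scale (assumed).
    """
    p = softmax(logits)
    y_hat = p @ CLASSES                                      # weighted average output
    l_ce = -np.mean(np.log(p[np.arange(len(p)), y_cls - 1]))
    l_mean = np.mean((y_hat - y_true) ** 2)
    l_var = np.mean(np.sum(p * (CLASSES[None, :] - y_hat[:, None]) ** 2, axis=1))
    return lam1 * l_mean + lam2 * l_var + l_ce, y_hat


total, y_hat = mean_variance_ce_loss(np.zeros((1, 20)), np.array([10]), np.array([10.5]))
print(y_hat, total)
```

With uniform logits the prediction sits at the middle of the range (ŷ = 10.5) and the variance term is large, which illustrates how L_var pushes the model toward concentrated distributions.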

Classifier We use a 20-way linear classifier on the features extracted by the backbone, so the classifier introduces no extra non-linearity. For all pretrained models, all backbone parameters are frozen. In this way, we can better evaluate the discriminative power of the extracted features.
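Linear evaluation on frozen features can be illustrated by training only a linear softmax layer on a fixed feature matrix: the backbone never appears in the loop, so its parameters cannot change. This is a hypothetical NumPy stand-in that uses full-batch gradient descent and 0-based class indices, whereas the experiments train with SGD for 60 epochs on ResNet-50 features.

```python
import numpy as np


def train_linear_probe(feats, labels, n_classes=20, lr=0.1, epochs=200, seed=0):
    """Fit a linear softmax classifier on frozen features.

    feats: (N, D) features from the frozen backbone (treated as constants);
    labels: (N,) integer classes in 0..n_classes-1.
    """
    rng = np.random.default_rng(seed)
    n, d = feats.shape
    w = rng.normal(scale=0.01, size=(d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = feats @ w + b
        logits -= logits.max(axis=1, keepdims=True)
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        grad = (p - onehot) / n             # gradient of mean cross-entropy w.r.t. logits
        w -= lr * feats.T @ grad            # only the linear layer is updated
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(feats, w, b):
    return np.argmax(feats @ w + b, axis=1)
```

Since the classifier is linear, its accuracy depends directly on how linearly separable the frozen features are, which is why this protocol is a common measure of representation quality.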

3 Implementation details

Training process All models use the same ResNet-50[44] backbone architecture. The supervised baseline is trained for 160 epochs with a batch size of 128 split across two GTX 1080 Ti GPUs, using the SGD optimizer with an initial learning rate of 0.1 and a cosine annealing learning rate schedule. Weight decay is set to 0.000 1 and momentum to 0.9. The pretraining settings of the two unsupervised learning approaches are as follows. For ODC, we use the SGD optimizer with a learning rate of 0.06 for the first 400 epochs and 0.024 for the last 80 epochs; weight decay is 0.000 01 and momentum is 0.9. We reduce the number of clusters to 1 000, fewer than in the original paper, because our dataset is about one-tenth the size of ImageNet. The threshold for small clusters is set to 20. The samples and centroids memories are updated every 10 iterations. For MoCo, we use the SGD optimizer with a cosine annealing learning rate schedule and an initial learning rate of 0.05; weight decay is 0.000 1 and momentum is 0.9. We tuned the dictionary length over several runs and set it to 8 192. In all experiments, we adopt only the backbone parameters of the two unsupervised learning models, so that all architectures are identical. The hyper-parameters λ1 and λ2 in the loss function are tuned on 10% of the dataset by grid search: for each pair of hyper-parameters, we train a supervised model for 30 epochs without learning rate decay and choose the pair with the highest accuracy averaged over three runs. In all experiments, we set λ1=0.26 and λ2=0.69.
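Two parts of this training recipe in sketch form. `cosine_annealing_lr` is the closed-form cosine annealing schedule (the same formula documented for PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR`), with a minimum learning rate of 0 assumed. `grid_search` mirrors the λ1/λ2 search described above; its `evaluate` argument is a hypothetical callable standing in for one 30-epoch supervised training run.

```python
import math
from itertools import product


def cosine_annealing_lr(epoch, total_epochs, lr0, lr_min=0.0):
    """Learning rate decays from lr0 at epoch 0 to lr_min at total_epochs."""
    return lr_min + 0.5 * (lr0 - lr_min) * (1 + math.cos(math.pi * epoch / total_epochs))


def grid_search(evaluate, lam1_values, lam2_values, runs=3):
    """Return ((lam1, lam2), mean accuracy) for the best pair.

    `evaluate(lam1, lam2, seed)` is hypothetical: it should train a short
    supervised model with the given loss weights and return its accuracy.
    """
    best, best_acc = None, -math.inf
    for lam1, lam2 in product(lam1_values, lam2_values):
        acc = sum(evaluate(lam1, lam2, seed) for seed in range(runs)) / runs
        if acc > best_acc:
            best, best_acc = (lam1, lam2), acc
    return best, best_acc


# Baseline schedule: 160 epochs starting from 0.1.
print([round(cosine_annealing_lr(e, 160, 0.1), 4) for e in (0, 80, 160)])  # [0.1, 0.05, 0.0]
```

Averaging over several runs per pair, as in the text, reduces the chance that a lucky seed decides the chosen hyper-parameters.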

Data augmentation We empirically choose data augmentation methods that encourage the model to learn rotation-invariant and position-invariant features, which makes training more stable and improves generalization. For all experiments, we apply random horizontal flip and random crop augmentation. For ODC, an additional random rotation within ±2 degrees is applied; for MoCo, a random Gaussian blur is applied with a kernel size of 23 and a standard deviation ranging from 0.1 to 2.
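A NumPy sketch of the flip, crop and Gaussian blur augmentations for multi-channel raster tiles of shape (C, H, W). The crop size (224 here) and reflect padding at the borders are assumptions; the ±2 degree rotation used for ODC is omitted, since it needs an interpolating routine such as `scipy.ndimage.rotate`. The function names are hypothetical.

```python
import numpy as np


def random_hflip(x, rng):
    """Flip a (C, H, W) array left-right with probability 0.5."""
    return x[:, :, ::-1] if rng.random() < 0.5 else x


def random_crop(x, size, rng):
    """Crop a random (C, size, size) window from a (C, H, W) array."""
    _, h, w = x.shape
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return x[:, top:top + size, left:left + size]


def gaussian_kernel(ksize=23, sigma=1.0):
    r = np.arange(ksize) - ksize // 2
    k = np.exp(-(r ** 2) / (2 * sigma ** 2))
    return k / k.sum()                      # normalized, so flat regions stay flat


def random_gaussian_blur(x, rng, ksize=23, sigma_range=(0.1, 2.0)):
    """Separable Gaussian blur with sigma drawn uniformly from sigma_range."""
    k = gaussian_kernel(ksize, rng.uniform(*sigma_range))
    pad = ksize // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), mode="reflect")
    # Blur along rows, then along columns; "valid" mode restores the input size.
    xp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 2, xp)
    xp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, xp)
    return xp


def moco_view(x, rng, crop=224):
    """One augmented view as used for MoCo in this sketch: flip, crop, then blur."""
    return random_gaussian_blur(random_crop(random_hflip(x, rng), crop, rng), rng)
```

Calling `moco_view` twice on the same tile gives the two differently augmented views whose representations the contrastive loss pulls together.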

4 Experiments

In this section, we evaluate the quality of the representations learned by the two unsupervised approaches through two experiments. For all experiments, we report the accuracy within 10% absolute error (ACC, higher is better) and the mean absolute error (MAE, lower is better).
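A sketch of the two metrics, assuming labels and predictions normalized to [0, 1] so that "within 10% absolute error" means |ŷ − y| ≤ 0.1 (whether the bound is inclusive is an assumption). Predictions on the 1..20 class scale would first need to be mapped back to [0, 1]. The function names are hypothetical.

```python
import numpy as np


def acc_within(y_pred, y_true, tol=0.10):
    """Fraction of predictions whose absolute error is at most `tol`."""
    err = np.abs(np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float))
    return float(np.mean(err <= tol))


def mae(y_pred, y_true):
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float))))
```

ACC rewards predictions that land close enough, while MAE also reflects how far the misses are, so the two are complementary.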

4.1 Full dataset classification

First, we compare the three models on the full dataset. The supervised baseline model is trained from scratch on the dataset following the procedure described in section 2.3. We also include the results obtained with the backbones of the four supervised models (each pretrained on one label set). For a fair comparison, all pretrained models are trained for 60 epochs with a batch size of 128, using the SGD optimizer with an initial learning rate of 0.1. The learning rate decays following a cosine annealing schedule, weight decay is set to 0.000 1, and momentum is set to 0.9. We further weight the classes according to the label distribution to alleviate the effects of the imbalanced dataset.
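The weighting scheme is not specified in the text, so the sketch below assumes a common choice: inverse class frequency, normalized so that a perfectly balanced dataset would give every class a weight of 1. In a weighted cross-entropy, each sample's loss term would be multiplied by `weights[y - 1]`. The function name is hypothetical.

```python
import numpy as np


def inverse_frequency_weights(y_cls, n_classes=20):
    """Per-class loss weights inversely proportional to class frequency.

    y_cls: training labels in 1..n_classes. Empty classes get weight 0.
    """
    counts = np.bincount(np.asarray(y_cls) - 1, minlength=n_classes).astype(float)
    weights = np.zeros(n_classes)
    nonzero = counts > 0
    weights[nonzero] = counts.sum() / (n_classes * counts[nonzero])
    return weights
```

Rare classes thus contribute more per sample to the loss, counteracting the imbalance visible in Fig. 1.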

Table 2 shows the results. First, the supervised baseline model gives the best results on all four label sets, as expected, which shows that given enough data and supervision, deep learning algorithms can predict wildfire-related statistical characteristics well from multiple factors. Second, the unsupervised pretrained models give comparable results on all label sets. Among the pretrained models, the ODC pretrained model achieves the best results on MFRI, PLS and PRS, with the MoCo pretrained model second. Both unsupervised pretrained models outperform the supervised pretrained models by a large margin on the MFRI and PRS label sets, showing that they produce robust, well-generalized representations suitable for multiple downstream tasks. Note that the performance of all supervised pretrained networks degrades greatly when the label set changes, which is undesirable. Interestingly, the PLS pretrained model performs best on the PMS labels, with an accuracy only 0.7% lower than that of the supervised baseline. Because the same result is reproduced on 10% and 5% of the dataset, and the model exceeds 90% accuracy within one epoch, we tentatively attribute this phenomenon to the similar distributions of the PLS and PMS labels. However, its performance on the other two label sets is poor (nearly the worst), which limits its usefulness for potential downstream tasks; we leave further discussion to future research.

Table 2 Results of full dataset classification

We further evaluate the two unsupervised learning approaches on 10% and 5% of the training set to illustrate how well they generalize. For a fair evaluation, the test set is unchanged. All other settings are identical to those of the experiment described in section 4.1; the results are shown in Table 3.

Table 3 Results of subset classification
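A sketch of drawing the 10% and 5% training subsets while leaving the test set untouched. Uniform random sampling with a fixed seed is an assumption; the text does not say how the subsets were drawn (for example, whether they were stratified by class). The function name is hypothetical.

```python
import numpy as np


def sample_subset(train_idx, fraction, seed=0):
    """Draw a fixed random fraction of the training indices without replacement."""
    rng = np.random.default_rng(seed)
    n = max(1, int(round(fraction * len(train_idx))))
    return np.sort(rng.choice(train_idx, size=n, replace=False))


train_idx = np.arange(1000)                 # toy training-set indices
subset_10 = sample_subset(train_idx, 0.10)
subset_5 = sample_subset(train_idx, 0.05)
print(len(subset_10), len(subset_5))
```

Fixing the seed keeps the subsets identical across the compared models, so differences in Table 3 reflect the models rather than the sampled data.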

The superiority of the unsupervised approaches still holds in both subset experiments. The unsupervised methods take first and second place on the MFRI, PLS and PRS label sets in terms of both ACC and MAE, and they also suffer less performance degradation from the insufficient training data. Note also that although the MoCo pretrained model does not outperform the ODC pretrained model on the full dataset, its performance becomes quite competitive on the reduced datasets: its degradation due to the smaller amount of training data is much smaller, suggesting that the features it learns are somewhat more robust than those of ODC. We should also mention that the model pretrained on a less imbalanced label set (the PRS pretrained model) generalizes better overall than the other two, since its accuracies on the three label sets are closer to one another and it suffers less degradation from the reduced dataset size.

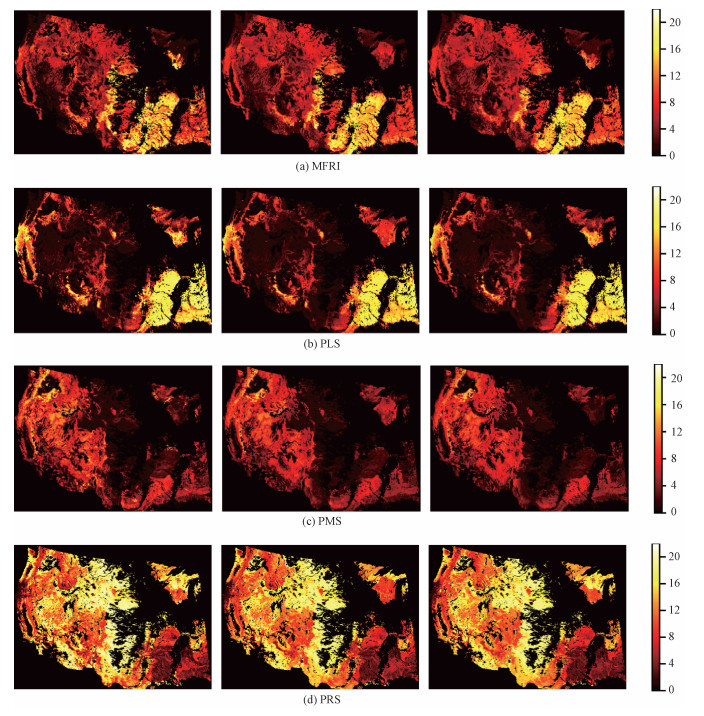

4.3 Maps and visualization

Finally, we visualize the model predictions in Fig. 4. The whole dataset is used to construct the maps. We show the ground truth and the predictions of the two unsupervised pretrained models trained on the full dataset. For the MFRI map, we plot (1-MFRI), so that brighter areas experience more frequent fire. The overall result is promising: both the ODC and MoCo pretrained models give results visually similar to the ground truth. Two things should be noted. First, the models tend not to give extreme predictions such as 1 or 20; for example, the ground-truth PRS map is visibly 'brighter' than the two predicted maps. The main reason is that our model output is a weighted average and is therefore smoothed. Second, although the ODC pretrained model does better in terms of mean ACC and MAE, its performance degrades significantly in some areas (e.g. the northeast) for all four labels, whereas MoCo appears more robust across all locations.

Fig. 4 MFRI, PLS, PMS and PRS maps (from left to right: ground truth, ODC-pretrained prediction and MoCo-pretrained prediction)

In this paper, we presented a series of promising results from applying deep learning methods to forest land characteristics modelling. We used a forest fire susceptibility task to evaluate the quality of the features extracted by deep learning models and found that these methods are good at discovering intricate structures in high-dimensional data and give reasonable data representations. Since our dataset covers most of the conterminous United States, we believe our method can be transferred to other regions, including regions with less data. Moreover, these methods are inherently able to process raster data, exploit its spatial patterns and learn to extract features from it. This capability distinguishes deep learning methods from conventional machine learning approaches, and their possible applications deserve further exploration. We further compared the performance of unsupervised and supervised learned models. Both ODC and MoCo achieve accuracy and mean absolute error competitive with the supervised baseline model and learn better representations than the supervised pretrained models. Our ongoing research also shows that the two unsupervised pretrained models can improve the performance of a wildfire spread prediction network by providing more robust land characteristics: compared with a model of the same architecture trained from scratch, the MoCo pretrained model reduces the error rate by 1.4 percentage points (from 2.9% to 1.5%) and raises the F-measure from 0.843 to 0.911. We are therefore confident that our proposed method is robust and generalizes well. As high-precision gridded forest landform information becomes easier to obtain, a general method for modelling such data is increasingly needed, and we believe deep neural networks are a promising solution. Cheaper and more accurate data could further strengthen these deep learning approaches, improving predictions in downstream tasks such as fire risk assessment, early forest fire detection and spread modelling. In this way, more precise forest management can be achieved, reducing the economic and natural losses caused by wildfires.

| [1] |

Wehner M, Arnold J, Knutson T, et al. Chapter 8: droughts, floods, and wildfire. Climate science special report: Fourth national climate assessment (NCA4), Volume I[R]. U.S. Global Change Research Program, 2017. DOI: 10.7930/JOCJ8BNN.

|

| [2] |

Ngoc Thach N, Bao-Toan Ngo D, Xuan-Canh P, et al. Spatial pattern assessment of tropical forest fire danger at Thuan Chau area (Vietnam) using GIS-based advanced machine learning algorithms: a comparative study[J]. Ecological Informatics, 2018, 46: 74-85. DOI:10.1016/j.ecoinf.2018.05.009 |

| [3] |

Pettinari M L, Chuvieco E. Fire behavior simulation from global fuel and climatic information[J]. Forests, 2017, 8(6): 179. DOI:10.3390/f8060179 |

| [4] |

Finney M A. FARSITE: fire Area Simulator-model development and evaluation[R]. U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station, 1998.

|

| [5] |

Tymstra C, Bryce R, Wotton B, et al. Development and structure of Prometheus: the Canadian wildland fire growth simulation model[R/OL]. (2013-04-03)[2021-04-06]. http://publications.gc.ca/pub?id=9.619969&sl=1.

|

| [6] |

Wotton B M, Martell D L, Logan K A. Climate change and people-caused forest fire occurrence in Ontario[J]. Climatic Change, 2003, 60(3): 275-295. DOI:10.1023/A:1026075919710 |

| [7] |

Koutsias N, Martínez-Fernández J, Allgöwer B. Do factors causing wildfires vary in space? evidence from geographically weighted regression[J]. GIScience & Remote Sensing, 2010, 47(2): 221-240. DOI:10.2747/1548-1603.47.2.221 |

| [8] |

Conedera M, Torriani D, Neff C, et al. Using Monte Carlo simulations to estimate relative fire ignition danger in a low-to-medium fire-prone region[J]. Forest Ecology and Management, 2011, 261(12): 2179-2187. DOI:10.1016/j.foreco.2010.08.013 |

| [9] |

Lautenberger C. Wildland fire modeling with an Eulerian level set method and automated calibration[J]. Fire Safety Journal, 2013, 62: 289-298. DOI:10.1016/j.firesaf.2013.08.014 |

| [10] |

Bisquert M, Caselles E, Sánchez J M, et al. Application of artificial neural networks and logistic regression to the prediction of forest fire danger in Galicia using MODIS data[J]. International Journal of Wildland Fire, 2012, 21(8): 1025-1029. DOI:10.1071/wf11105 |

| [11] |

Satir O, Berberoglu S, Donmez C. Mapping regional forest fire probability using artificial neural network model in a Mediterranean forest ecosystem[J]. Geomatics, Natural Hazards and Risk, 2016, 7(5): 1645-1658. DOI:10.1080/19475705.2015.1084541 |

| [12] |

Ghorbanzadeh O, Blaschke T, Gholamnia K, et al. Forest fire susceptibility and risk mapping using social/infrastructural vulnerability and environmental variables[J]. Fire, 2019, 2(3): 50. DOI:10.3390/fire2030050 |

| [13] |

Pourghasemi H R, Gayen A, Lasaponara R, et al. Application of learning vector quantization and different machine learning techniques to assessing forest fire influence factors and spatial modelling[J]. Environmental Research, 2020, 184: 109321. DOI:10.1016/j.envres.2020.109321 |

| [14] |

LeCun Y, Bengio Y, Hinton G. Deep learning[J]. Nature, 2015, 521(7553): 436-444. DOI:10.1038/nature14539 |

| [15] |

Zhang G L, Wang M, Liu K. Forest fire susceptibility modeling using a convolutional neural network for Yunnan Province of China[J]. International Journal of Disaster Risk Science, 2019, 10(3): 386-403. DOI:10.1007/s13753-019-00233-1 |

| [16] |

Hodges J L, Lattimer B Y. Wildland fire spread modeling using convolutional neural networks[J]. Fire Technology, 2019, 55(6): 2115-2142. DOI:10.1007/s10694-019-00846-4 |

| [17] |

Zhang C, Pan X, Li H P, et al. A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2018, 140: 133-144. DOI:10.1016/j.isprsjprs.2017.07.014 |

[18] Liu T, Abd-Elrahman A. Deep convolutional neural network training enrichment using multi-view object-based analysis of Unmanned Aerial systems imagery for wetlands classification[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2018, 139: 154-170. DOI: 10.1016/j.isprsjprs.2018.03.006

[19] Wang Y, Fang Z C, Hong H Y. Comparison of convolutional neural networks for landslide susceptibility mapping in Yanshan County, China[J]. Science of the Total Environment, 2019, 666: 975-993. DOI: 10.1016/j.scitotenv.2019.02.263

[20] Landfire. Forest canopy cover layer, forest canopy height layer, etc[EB/OL]. US Department of the Interior, Geological Survey, (2019)[2020-06-23]. http://landfire.cr.usgs.gov/viewer/

[21] Bengio Y, Courville A, Vincent P. Representation learning: a review and new perspectives[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(8): 1798-1828. DOI: 10.1109/TPAMI.2013.50

[22] Pan S J, Yang Q. A survey on transfer learning[J]. IEEE Transactions on Knowledge and Data Engineering, 2010, 22(10): 1345-1359. DOI: 10.1109/TKDE.2009.191

[23] Kolesnikov A, Zhai X H, Beyer L. Revisiting self-supervised visual representation learning[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). June 15-20, 2019, Long Beach, CA, USA. IEEE, 2020: 1920-1929. DOI: 10.1109/CVPR.2019.00202

[24] Donahue J, Krähenbühl P, Darrell T. Adversarial feature learning[EB/OL]. arXiv: 1605.09782. (2017-04-03)[2021-04-06]. https://arxiv.org/abs/1605.09782

[25] Le Q V. Building high-level features using large scale unsupervised learning[C]//2013 IEEE International Conference on Acoustics, Speech and Signal Processing. May 26-31, 2013, Vancouver, BC, Canada. IEEE, 2013: 8595-8598. DOI: 10.1109/ICASSP.2013.6639343

[26] Lee H, Grosse R, Ranganath R, et al. Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations[C]//Proceedings of the 26th Annual International Conference on Machine Learning-ICML'09. June 14-18, 2009, Montreal, Quebec, Canada. New York: ACM Press, 2009: 609-616. DOI: 10.1145/1553374.1553453

[27] Kingma D P, Welling M. Auto-encoding variational Bayes[EB/OL]. arXiv: 1312.6114. (2014-05-01)[2021-04-06]. https://arxiv.org/abs/1312.6114

[28] Goodfellow I J, Pouget-Abadie J, Mirza M, et al. Generative adversarial networks[EB/OL]. arXiv: 1406.2661. (2014-06-10)[2021-04-06]. https://arxiv.org/abs/1406.2661

[29] Doersch C, Gupta A, Efros A A. Unsupervised visual representation learning by context prediction[C]//2015 IEEE International Conference on Computer Vision (ICCV). December 7-13, 2015, Santiago, Chile. IEEE, 2016: 1422-1430. DOI: 10.1109/ICCV.2015.167

[30] Noroozi M, Favaro P. Unsupervised learning of visual representations by solving jigsaw puzzles[M]//Computer Vision-ECCV 2016. Cham: Springer International Publishing, 2016: 69-84. DOI: 10.1007/978-3-319-46466-4_5

[31] Larsson G, Maire M, Shakhnarovich G. Learning representations for automatic colorization[M]//Computer Vision-ECCV 2016. Cham: Springer International Publishing, 2016: 577-593. DOI: 10.1007/978-3-319-46493-0_35

[32] Pathak D, Krähenbühl P, Donahue J, et al. Context encoders: feature learning by inpainting[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). June 27-30, 2016, Las Vegas, NV, USA. IEEE, 2016: 2536-2544. DOI: 10.1109/CVPR.2016.278

[33] Zhang R, Isola P, Efros A A. Split-brain autoencoders: unsupervised learning by cross-channel prediction[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). July 21-26, 2017, Honolulu, HI, USA. IEEE, 2017: 645-654. DOI: 10.1109/CVPR.2017.76

[34] Noroozi M, Pirsiavash H, Favaro P. Representation learning by learning to count[C]//2017 IEEE International Conference on Computer Vision (ICCV). October 22-29, 2017, Venice, Italy. IEEE, 2017: 5899-5907. DOI: 10.1109/ICCV.2017.628

[35] Gidaris S, Singh P, Komodakis N. Unsupervised representation learning by predicting image rotations[EB/OL]. arXiv: 1803.07728. (2018-03-21)[2021-04-06]. https://arxiv.org/abs/1803.07728

[36] Chen X, Fan H, Girshick R, et al. Improved baselines with momentum contrastive learning[EB/OL]. arXiv: 2003.04297. (2020-03-09)[2021-04-06]. https://arxiv.org/abs/2003.04297

[37] Gillies S, Ward B, Petersen A, et al. Rasterio: geospatial raster I/O for Python programmers[EB/OL]. (2013)[2020-06-21]. https://github.com/mapbox/rasterio

[38] Scott J H, Burgan R E. Standard fire behavior fuel models: a comprehensive set for use with Rothermel's surface fire spread model[R]. U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station, 2005.

[39] Zhan X H, Xie J H, Liu Z W, et al. Online deep clustering for unsupervised representation learning[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). June 13-19, 2020, Seattle, WA, USA. IEEE, 2020: 6687-6696. DOI: 10.1109/CVPR42600.2020.00672

[40] He K M, Fan H Q, Wu Y X, et al. Momentum contrast for unsupervised visual representation learning[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). June 13-19, 2020, Seattle, WA, USA. IEEE, 2020: 9726-9735. DOI: 10.1109/CVPR42600.2020.00975

[41] Oord A Van Den, Li Y, Vinyals O. Representation learning with contrastive predictive coding[EB/OL]. arXiv: 1807.03748. (2019-01-22)[2021-04-06]. https://arxiv.org/abs/1807.03748

[42] Janocha K, Czarnecki W M. On loss functions for deep neural networks in classification[EB/OL]. arXiv: 1702.05659. (2017-02-18)[2021-04-06]. https://arxiv.org/abs/1702.05659

[43] Pan H Y, Han H, Shan S G, et al. Mean-variance loss for deep age estimation from a face[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. June 18-23, 2018, Salt Lake City, UT, USA. IEEE, 2018: 5285-5294. DOI: 10.1109/CVPR.2018.00554

[44] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). June 27-30, 2016, Las Vegas, NV, USA. IEEE, 2016: 770-778. DOI: 10.1109/CVPR.2016.90

2023, Vol. 40