2. AI Lab of KnowLeGene Intelligent Technology Co., Ltd., Beijing 100088, China


Extracting relational facts from unstructured text is an important topic in natural language processing, widely used in knowledge extraction and the automatic construction of knowledge bases. A relational fact is usually represented as a triplet consisting of two entities and the relation between them, such as <Trump, PresidentOf, United States>, where the relation is drawn from a predefined set.

Previous methods for this task fall mainly into traditional methods and joint extraction methods. Traditional methods[1-2] work in a pipeline manner, splitting the task into two subtasks: entities are extracted first, and the relations between the extracted entities are then recognized. However, the pipeline framework ignores the correlation between entity identification and relation prediction[3]. Moreover, solving the two subtasks in sequence exposes the model to error accumulation, since errors in entity recognition propagate to relation classification. Joint extraction models[3-6] instead extract entities and relations together with a single model. With the success of deep neural networks, neural automatic feature learning has been applied to relation extraction, reducing the dependence on manual feature engineering. Recent studies show that joint learning models can effectively integrate entity and relation information and therefore outperform pipeline methods on both subtasks. However, these methods cannot identify overlapping relations, which can lead to poor recall on sentences that contain them.

Traditional methods for entity recognition and relation extraction commonly assume that each sentence contains only one relational triplet or several non-overlapping triplets. In practice, the triplets within one sentence often overlap in different ways; this scenario is common but usually neglected by models. Zeng et al.[7] proposed a method named Copy to tackle this issue. In their paper, they defined three categories of sentences according to how the extracted triplets overlap: Normal, Entity Pair Overlap (EPO), and Single Entity Overlap (SEO).

In this paper, we adopt the same three sentence categories as Zeng et al.[7], illustrated in Fig. 1. S1 belongs to the Normal class because none of its triplets share an entity; S2 belongs to the EPO class because its two triplets share the entity pair <Sudan, Khartoum>; and S3 belongs to the SEO class because its two triplets share the entity Aarhus but not an entire entity pair.
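The three categories can be checked mechanically from a sentence's triplets. The following Python sketch is illustrative only: it assumes entities are compared by surface string and entity pairs are compared without regard to head/tail order, and it returns a set so that a sentence with both kinds of overlap is flagged as both. The function name and the Sudan relation labels are hypothetical.

```python
from itertools import combinations
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def overlap_classes(triples: List[Triple]) -> Set[str]:
    """Return {"Normal"} or a non-empty subset of {"EPO", "SEO"} for one sentence."""
    classes: Set[str] = set()
    for (h1, _, t1), (h2, _, t2) in combinations(triples, 2):
        pair1, pair2 = {h1, t1}, {h2, t2}
        if pair1 == pair2:
            classes.add("EPO")  # the whole entity pair is shared
        elif pair1 & pair2:
            classes.add("SEO")  # an entity is shared, but not the whole pair
    return classes or {"Normal"}


print(overlap_classes([("Sudan", "contains", "Khartoum"),
                       ("Sudan", "capital", "Khartoum")]))          # {'EPO'}
print(overlap_classes([("Aarhus", "cityServed", "Aarhus airport"),
                       ("Aarhus", "leaderName", "Jacob Bundsgaard")]))  # {'SEO'}
```

Checking every pair of triplets is quadratic in the number of triplets, which is negligible for single sentences.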

Fig. 1 Examples of Normal, EPO, and SEO categories

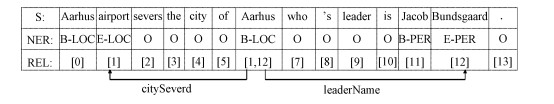

We propose a new joint model to address overlapping relations, which performs entity recognition and relation extraction simultaneously. In our model, the encoding of the source sentence is shared by entity recognition and relation recognition. Previous work[8-9] has shown that deep learning models based on CNNs and RNNs, combined with a Conditional Random Field (CRF) loss, perform well on entity identification. We add an attention mechanism to the entity identification process, since attention has recently proved successful at capturing long-range dependencies. In addition, extra information such as part-of-speech (POS) tags and dependency relations (Deprel) should in principle enrich the input and increase the accuracy of the model; we verify this experimentally and find that concatenating the corresponding vectors into the embedding layer improves relation extraction. We treat relation recognition as a multi-label problem, which previous joint models cannot handle properly: an entity mentioned only once in a sentence may take part in several relation triplets. Because an entity may consist of multiple words, we annotate the relation between two entities at the position of the last word of each entity. For example, as shown in Fig. 2, the relation of the triplet <Aarhus, cityServed, Aarhus airport> is represented by the positions of the words "Aarhus" and "airport", and the relation of the triplet <Aarhus, leaderName, Jacob Bundsgaard> by the positions of "Aarhus" and "Bundsgaard". With this design, our model can extract overlapping relations.
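The labeling scheme can be illustrated with a minimal Python sketch. It assumes entity mentions are given as inclusive (first, last) token spans; the token sequence is a hypothetical sentence built around the Aarhus triplets, and the decoding step, which maps last-token positions back to entity spans predicted by the NER layer, is an illustrative assumption rather than the authors' code.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Span = Tuple[int, int]           # inclusive (first, last) token indices of a mention
Triple = Tuple[Span, str, Span]  # (head span, relation, tail span)
Labels = Dict[Tuple[int, int], Set[str]]


def encode_relations(triples: List[Triple]) -> Labels:
    """Key each relation by the last token of its head and the last token of its tail."""
    labels: Labels = defaultdict(set)
    for head, relation, tail in triples:
        # A set per cell: one token pair may carry several relations (EPO), and one
        # head token may point to several tails (SEO), so the target is multi-label.
        labels[(head[1], tail[1])].add(relation)
    return dict(labels)


def decode_relations(labels: Labels, entity_spans: List[Span]) -> List[Triple]:
    """Map last-token positions back to full mentions found by the NER layer."""
    by_last = {span[1]: span for span in entity_spans}
    triples = []
    for (i, j), relations in sorted(labels.items()):
        if i in by_last and j in by_last:  # keep only positions that end a recognized entity
            for relation in sorted(relations):
                triples.append((by_last[i], relation, by_last[j]))
    return triples


tokens = ["Aarhus", "Airport", "serves", "the", "city", "of", "Aarhus", ",",
          "whose", "leader", "is", "Jacob", "Bundsgaard"]
triples = [((6, 6), "cityServed", (0, 1)), ((6, 6), "leaderName", (11, 12))]
labels = encode_relations(triples)
print(labels)  # {(6, 1): {'cityServed'}, (6, 12): {'leaderName'}}
print(decode_relations(labels, [(0, 1), (6, 6), (11, 12)]) == triples)  # True
```

Because the single mention "Aarhus" at position 6 heads two cells, the overlap is represented without duplicating the entity.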

Fig. 2 An example of NER label and relation label

The key contributions of this paper are given as follows:

1) We propose a new unified architecture for extracting multiple relations, which performs entity identification and relation recognition simultaneously.

2) Our model uses a multi-label method to handle overlapping relations and the difficulty of labeling entities that contain multiple words.

3) Our model uses an attention mechanism, and its input carries additional information such as part-of-speech (POS) tags and grammatical dependencies (Deprel). On multi-relation extraction from the NYT dataset, it improves the F1 score by 8 % over the Copy model.

1 Related work

Extracting relational triplets from a sentence without any entity annotation is an important subject in natural language processing (NLP), because relational structure underlies knowledge acquisition, knowledge construction, and knowledge graphs. With the rapid development of knowledge graphs such as DBpedia[10] and Freebase[11], the accuracy of extracted relations has become more important than before.

Earlier works tackled this problem with a pipeline, dividing relation extraction into two separate tasks: Named Entity Recognition (NER)[12] and Relation Classification (RC)[13]. Classic NER models are linear statistical models such as the Hidden Markov Model (HMM) and Conditional Random Fields (CRF)[14]. More recently, several neural network architectures[15-16] have been successfully applied to NER by treating it as a sequential token tagging task. Existing RC methods[17] can be divided into handcrafted feature-based methods and neural network-based methods.

A joint learning model is a unified model that extracts entities and relations simultaneously. Miwa and Bansal[5] used an LSTM-based model to extract entities and relations, relying on neural networks to reduce manual work. However, most existing neural models[3-5] achieve joint learning of entities and relations only through parameter sharing rather than joint decoding. In these models, extraction is still performed in a pipeline manner: to obtain relation triplets, the detected entity pairs must still be passed to a relation classifier that identifies the relations between them. Zheng et al.[17] introduced a unified tagging scheme that enables joint decoding, transforming relational triplet extraction into an end-to-end sequence labeling problem that requires no separate NER or RC step. Because entity and relation information are integrated into a single tagging scheme, their model can learn relation triplets directly as a whole.

Although joint models have performed well in relation extraction, the above methods ignore overlapping relation triplets. Triplets overlap when an entity participates in more relational triplets than the number of times it is mentioned in the sentence. Zeng et al.[7] first raised the problem of overlapping relations and proposed a sequence-to-sequence (Seq2Seq) model with a copy mechanism to solve it. More recently, Fu et al.[18] also studied the problem and proposed GraphRel, which uses dependency trees as inputs. Although these models improve entity recognition and relation extraction when overlap is taken into account, they suffer from a complex decoding process. Our proposed architecture is a joint model that performs entity recognition and relation extraction simultaneously and takes multiple relations and overlap into account.

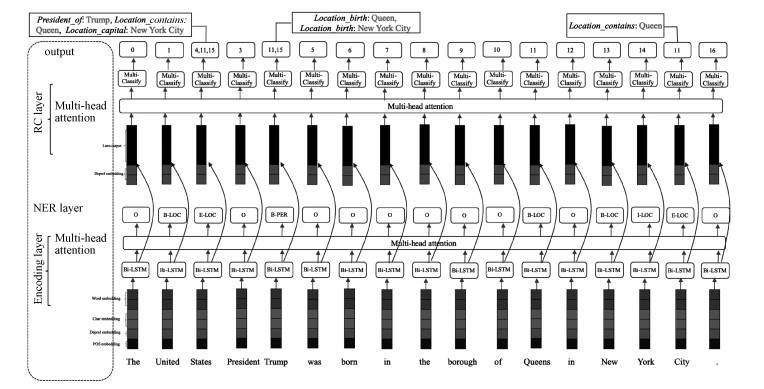

2 Our model

Given a sentence without any entity annotation, our goal is to extract relation triplets $(h, r, t)$, where $h, t \in E$, $r \in R$, $E$ is the set of entities, and $R$ is the set of relations. We divide relation extraction into two subtasks: entity recognition and relation recognition. The proposed joint extraction model not only extracts entities and recognizes relations simultaneously but also solves the problem of overlapping relations, as shown in Fig. 3. This section describes the model in detail.

Fig. 3 Model for multi-task joint extraction of entities and relationships

Suppose a sentence is represented as $S = \{w_1, \cdots, w_l\}$, which contains $l$ words. First, we obtain the word-level embedding of each word

We use a bi-directional LSTM (Bi-LSTM) encoding layer[6], which combines a forward LSTM layer with a backward LSTM layer and has proven effective at capturing semantic dependencies between the words of a sentence and at encoding sentences. For each word $w_i$, the Bi-LSTM input is the concatenation of its word embedding, character embedding, POS embedding, and Deprel embedding:

| $ \begin{gathered} \boldsymbol{h}_{w_{i}}^{0} = \operatorname{word}\left(\boldsymbol{w}_{i}\right) \oplus \operatorname{char}\left(\boldsymbol{w}_{i}\right) \oplus \operatorname{pos}\left(\boldsymbol{w}_{i}\right) \oplus \\ \operatorname{deprel}\left(\boldsymbol{w}_{i}\right). \end{gathered} $ | (1) |

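where $\boldsymbol{h}_{w_i}^{0}$ represents the initial feature of word $w_i$, and $\operatorname{word}(w_i)$, $\operatorname{char}(w_i)$, $\operatorname{pos}(w_i)$, and $\operatorname{deprel}(w_i)$ are the word, character, POS, and Deprel embeddings, respectively. The Bi-LSTM produces a forward state $\overrightarrow{\boldsymbol{h}}_i$ and a backward state $\overleftarrow{\boldsymbol{h}}_i$ for each word, which are concatenated: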

| $ \boldsymbol{h}_{\boldsymbol{i}} = \left[\overrightarrow{\boldsymbol{h}}_{\boldsymbol{i}} \overleftarrow{\boldsymbol{h}}_{\boldsymbol{i}}\right], i = 1, \cdots, l $ | (2) |

The sentence representation is then $\boldsymbol{H} = [\boldsymbol{h}_1, \cdots, \boldsymbol{h}_l]$.

This sentence representation is then used as the input of the attention layer, which computes the correlation between the current word and the other words in the sentence. We compute the attention $\boldsymbol{A}$ using multi-head attention[20], which allows the model to jointly attend to information from different representation subspaces. The calculation takes a query and a set of key-value pairs as inputs; the output is a weighted sum of the values, where each weight is computed by a function of the query and the corresponding key. We compute $\boldsymbol{A}$ as

| $ \boldsymbol{A}^{(\boldsymbol{i})} = \operatorname{softmax}\left(\frac{\boldsymbol{Q} \boldsymbol{W_{i}^{Q}} \times\left(\boldsymbol{K} \boldsymbol{W_{i}^{K}}\right)^{\mathrm{T}}}{\sqrt{d}}\right) \boldsymbol{V}, i \in[1, \text { head}]. $ | (3) |

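where $\boldsymbol{A}^{(i)}$ is the attention weight of the $i$-th head, and $\boldsymbol{Q}$ and $\boldsymbol{K}$ are both equal to $\boldsymbol{H}$, the output of the Bi-LSTM. The projections $\boldsymbol{W}_i^{Q}$ and $\boldsymbol{W}_i^{K}$ are parameter matrices.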

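At this point, we obtain a sentence-level global feature representation of each word, $\boldsymbol{Z} = [\boldsymbol{z}_1, \cdots, \boldsymbol{z}_l]$, computed as $\boldsymbol{Z} = \boldsymbol{H}\boldsymbol{A}^{\mathrm{T}}$, where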

In this layer, the main task is to identify entity boundaries and categorize the entities; we annotate sentences with the BIOE scheme. For example, the annotations for the entity "New York City" are B-LOC, I-LOC, and E-LOC. Each annotation has two parts. The first part marks the entity boundary: B denotes the first token of an entity, E the last token, and I the tokens between the first and the last. The second part gives the entity category. In this paper, we identify three entity types: Location, Organization, and Person.
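A minimal sketch of BIOE encoding and decoding in Python. The paragraph above does not say how single-token entities are tagged; this sketch assumes they receive only a B- tag (some variants use E- or a separate S- tag), and it drops malformed fragments such as B-I-O when decoding. Both function names are hypothetical.

```python
from typing import List, Optional, Tuple

Entity = Tuple[int, int, str]  # (first token, last token, type), inclusive


def bioe_tags(n_tokens: int, entities: List[Entity]) -> List[str]:
    """Encode entity spans as BIOE tags; a single-token entity gets only B- (assumption)."""
    tags = ["O"] * n_tokens
    for start, end, etype in entities:
        tags[start] = f"B-{etype}"
        for k in range(start + 1, end):
            tags[k] = f"I-{etype}"
        if end > start:
            tags[end] = f"E-{etype}"
    return tags


def bioe_spans(tags: List[str]) -> List[Entity]:
    """Decode BIOE tags back into spans, dropping malformed multi-token fragments."""
    spans: List[Entity] = []
    start: Optional[int] = None
    etype: Optional[str] = None
    for i, tag in enumerate(tags + ["O"]):  # the sentinel "O" closes a trailing span
        prefix, _, label = tag.partition("-")
        if start is not None and prefix in ("I", "E") and label == etype:
            if prefix == "E":
                spans.append((start, i, etype))
                start, etype = None, None
            continue
        if start is not None and i - 1 == start:
            spans.append((start, start, etype))  # a lone B- tag is a single-token entity
        start, etype = (i, label) if prefix == "B" else (None, None)
    return spans


tokens = ["He", "moved", "to", "New", "York", "City"]
print(bioe_tags(len(tokens), [(3, 5, "LOC")]))  # ['O', 'O', 'O', 'B-LOC', 'I-LOC', 'E-LOC']
```

The decoded spans supply the entity boundaries whose last tokens the relation labels refer to.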

We use a Conditional Random Field (CRF) to identify entities. The input of the CRF layer is the output of the encoding layer, $\boldsymbol{Z} = [\boldsymbol{z}_1, \cdots, \boldsymbol{z}_l]$. CRF prediction has two parts: computing the label scores $s_i$ for each word, and finding the best label sequence with the Viterbi algorithm from the transition matrix $\boldsymbol{T}$ and the scores $s_i$. The calculation is as follows:

| $ s_{i} = \boldsymbol{V} f\left(\boldsymbol{U z_{i}}+\boldsymbol{b}\right). $ | (4) |

| $ C\left(y_{1}, \cdots, y_{l}\right) = \sum\nolimits_{i = 1}^{l} s_{i, y_{i}}+\sum\nolimits_{i = 1}^{l-1} T_{y_{i}, y_{i+1}} $ | (5) |

| $ Y_{\text {ner }}^{\text {result }} = \operatorname{argmax}\left(P\left(y_{1}, \cdots, y_{l} \mid \text { sentence }\right)\right) . $ | (6) |

| $ P\left(y_{1}, \cdots, y_{l} \mid \text { sentence }\right) = \frac{\mathrm{e}^{C\left(y_{1}, \cdots, y_{l}\right)}}{\sum\nolimits_{\hat{y}_{1}, \cdots, \hat{y}_{l}} \mathrm{e}^{C\left(\hat{y}_{1}, \cdots, \hat{y}_{l}\right)}} . $ | (7) |

where $f(\cdot)$ is a nonlinear activation function; in this paper, we use tanh.
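Eqs. (5) to (8) can be made concrete with a small pure-Python sketch over integer tag indices: `path_score` computes $C$ from Eq. (5), `log_partition` computes the logarithm of the denominator of Eq. (7) with the forward algorithm, `viterbi` computes the argmax of Eq. (6), and `crf_nll` is the loss of Eq. (8). The emission matrix stands in for the scores $s_i$ of Eq. (4) and is assumed to be given; all names and numbers are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]  # emissions[i][y] = s_{i,y}; transitions[a][b] = T_{a,b}


def logsumexp(xs: List[float]) -> float:
    m = max(xs)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(x - m) for x in xs))


def path_score(emissions: Matrix, transitions: Matrix, tags: List[int]) -> float:
    """C(y_1, ..., y_l) of Eq. (5): emission scores plus transition scores."""
    score = sum(emissions[i][t] for i, t in enumerate(tags))
    return score + sum(transitions[a][b] for a, b in zip(tags, tags[1:]))


def log_partition(emissions: Matrix, transitions: Matrix) -> float:
    """log of the denominator of Eq. (7), summed over all tag paths by the forward algorithm."""
    n = len(transitions)
    alpha = list(emissions[0])
    for e in emissions[1:]:
        alpha = [e[b] + logsumexp([alpha[a] + transitions[a][b] for a in range(n)])
                 for b in range(n)]
    return logsumexp(alpha)


def viterbi(emissions: Matrix, transitions: Matrix) -> List[int]:
    """argmax of Eq. (6); maximizing P is the same as maximizing C."""
    n = len(transitions)
    score = list(emissions[0])
    backpointers = []
    for e in emissions[1:]:
        best_prev = [max(range(n), key=lambda a: score[a] + transitions[a][b]) for b in range(n)]
        score = [score[best_prev[b]] + transitions[best_prev[b]][b] + e[b] for b in range(n)]
        backpointers.append(best_prev)
    path = [max(range(n), key=lambda t: score[t])]
    for best_prev in reversed(backpointers):
        path.append(best_prev[path[-1]])
    return path[::-1]


def crf_nll(emissions: Matrix, transitions: Matrix, gold: List[int]) -> float:
    """Eq. (8): -log P(gold | sentence) = log Z - C(gold)."""
    return log_partition(emissions, transitions) - path_score(emissions, transitions, gold)
```

Both the forward pass and Viterbi run in $O(l \cdot n^2)$ for $n$ tags, instead of enumerating all $n^l$ label sequences.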

Finally, the NER layer is trained by minimizing the negative log-likelihood of the gold label sequence, i.e., the cross-entropy loss, defined as

| $ L_{\text {ner }} = -\log P(\hat{y}), $ | (8) |

where

In this section, we introduce the relation recognition task, which we formulate as a multi-label classification problem. The inputs of the RC layer are the output of the Bi-LSTM layer and the Deprel embedding:

| $ \boldsymbol{d}_{i} = \left[\boldsymbol{h_{i}}, {\bf { deprel }}_{i}\right], i = 1, \cdots, l. $ | (9) |

where $\boldsymbol{h}_i$ is the Bi-LSTM output at time step $i$ and $\mathbf{deprel}_i$ is the Deprel embedding.

Through experiments, we found that dependency relations improve the effectiveness of relation classification; hence, we add them to the inputs of the RC layer. For the RC layer input $\boldsymbol{D} = [\boldsymbol{d}_1, \cdots, \boldsymbol{d}_l]$, we also use attention to obtain the representation $\hat{\boldsymbol{D}}$:

| $ \boldsymbol{A}_{\mathrm{rel}}^{(\boldsymbol{i})} = \operatorname{softmax}\left(\frac{\boldsymbol{Q} \boldsymbol{W_{i}^{Q}} \times\left(\boldsymbol{K} \boldsymbol{W_{i}^{K}}\right)^{\mathrm{T}}}{\sqrt{d}}\right) \boldsymbol{V}, i \in[1, \text { head}]. $ | (10) |

| $ \hat{\boldsymbol{D}} = \boldsymbol{D} \boldsymbol{A}_{\mathrm{rel}}^{\mathrm{T}}. $ | (11) |

where Q and K are both equal to D.
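Eqs. (3), (10) and (11) apply the same attention pattern to $\boldsymbol{H}$ and $\boldsymbol{D}$. The Python sketch below shows a single head under two assumptions: word vectors are stored as rows, so $\boldsymbol{Z} = \boldsymbol{H}\boldsymbol{A}^{\mathrm{T}}$ with words as columns becomes `A · X` here, and $\boldsymbol{A}$ is read as the matrix of attention weights, as the text after Eq. (3) describes it (Eq. (3) as printed also multiplies by $\boldsymbol{V}$). How the heads are combined is not shown. The helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per word


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(X: Matrix, Wq: Matrix, Wk: Matrix) -> Matrix:
    """softmax((X Wq)(X Wk)^T / sqrt(d)) for one head, with Q = K = X."""
    q, k = matmul(X, Wq), matmul(X, Wk)
    d = len(Wq[0])  # projected dimension
    scores = matmul(q, transpose(k))
    return [softmax([s / math.sqrt(d) for s in row]) for row in scores]


def attend(X: Matrix, A: Matrix) -> Matrix:
    """Row i is sum_j A[i][j] * x_j, a weighted mix of all word vectors."""
    return matmul(A, X)
```

In the model, `X` would be $\boldsymbol{H}$ for Eq. (3) or $\boldsymbol{D}$ for Eqs. (10) and (11); every output row depends on every word directly, which is what lets attention bridge long distances.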

Our goal is to predict, for each token $w_i$ ($i = 1, \cdots, l$), the most likely relation categories linking it to every other token $w_j$ ($j = 1, \cdots, l$, $j \neq i$). Since there may be several relations between tokens, this is a multi-label problem, which admits two solutions. The first uses a softmax function to compute the probability of every relation between each pair of tokens and then applies a threshold: a relation is considered to exist when its probability exceeds the threshold. The second uses a separate sigmoid classifier for each relation between each pair of tokens. Both produce a set of relations for every token pair; to reduce the number of hyperparameters in the model, we use the latter.

We calculate the score between tokens $w_i$ and $w_j$ for relation label $r_k$ as follows:

| $ s\left(\hat{d}_{i}, \hat{d}_{j}, r_{k}\right) = \boldsymbol{V} \cdot \tanh \left(\boldsymbol{U} \hat{d}_{i}+\boldsymbol{W} \hat{d}_{j}+\boldsymbol{b}\right). $ | (12) |

where

The probability that token $w_i$ chooses token $w_j$ as the tail of relation $r_k$ is

| $ P\left(w_{j}, r_{k} \mid w_{i}\right) = \operatorname{sigmoid}\left(s\left(\hat{d}_{i}, \hat{d}_{j}, r_{k}\right)\right) . $ | (13) |
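Because Eq. (13) applies an independent sigmoid to every (token, token, relation) combination, a token pair can receive several relations and a head token several tails. The Python sketch below assumes that $\boldsymbol{V}$ holds one row $\boldsymbol{v}_k$ per relation (Eq. (12) does not show where $r_k$ enters) and that a relation is predicted when its probability exceeds 0.5; both assumptions and all names are illustrative.

```python
import math
from typing import Dict, List, Set, Tuple

Vector = List[float]
Matrix = List[Vector]
Key = Tuple[int, int, int]  # (head token i, tail token j, relation index k)


def mat_vec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relation_score(d_i: Vector, d_j: Vector, U: Matrix, W: Matrix, b: Vector, v_k: Vector) -> float:
    """Eq. (12): v_k . tanh(U d_i + W d_j + b)."""
    hidden = [math.tanh(x + y + c) for x, y, c in zip(mat_vec(U, d_i), mat_vec(W, d_j), b)]
    return sum(a * h for a, h in zip(v_k, hidden))


def relation_probs(D_hat: Matrix, U: Matrix, W: Matrix, b: Vector, V: Matrix) -> Dict[Key, float]:
    """Eq. (13) for every ordered pair (i, j) with i != j and every relation k."""
    probs: Dict[Key, float] = {}
    for i, d_i in enumerate(D_hat):
        for j, d_j in enumerate(D_hat):
            if i == j:
                continue
            for k, v_k in enumerate(V):
                probs[(i, j, k)] = sigmoid(relation_score(d_i, d_j, U, W, b, v_k))
    return probs


def predict(probs: Dict[Key, float], threshold: float = 0.5) -> Set[Key]:
    """Each (i, j, k) is an independent binary decision, so the output is multi-label."""
    return {key for key, p in probs.items() if p > threshold}


D_hat = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
probs = relation_probs(D_hat, I2, I2, [0.0, 0.0], [[2.0, -2.0], [-3.0, -3.0]])
print(sorted(predict(probs)))  # [(0, 2, 0), (2, 0, 0)]
```

In the trained model, only cells whose positions are last tokens of recognized entities would be turned into triplets.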

Finally, we minimize the objective function, which is the cross-entropy loss function:

| $ L_{\mathrm{rel}} = -\sum\nolimits_{i = 1}^{l} \sum\nolimits_{j = 1}^{m} \log P\left(y_{i, j}, r_{i, j} \mid w_{i}\right). $ | (14) |

where yi∈W and

To test the performance of the proposed method, we conduct experiments on two public datasets, NYT and WebNLG. The New York Times (NYT) dataset was produced by Riedel et al.[21] using distant supervision. Its corpus of 1.18 M sentences is extracted from 194 k New York Times articles published between 1987 and 2007, and it covers 24 relation types. We preprocess the dataset in the same way as Zeng et al.[7]. First, we filter out sentences longer than 100 words and sentences that contain no relation. Then 5 000 sentences are randomly selected as the test set, and the remaining 56 195 sentences are used for training, of which 5 000 are held out as the validation set. The sentences are also classified by the degree of relation overlap they contain.
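The filtering and splitting steps above can be sketched in Python as follows. The sketch assumes each sentence is a dict with hypothetical `tokens` and `triples` fields and uses a fixed random seed, since the seed used for the random selection is not stated.

```python
import random
from typing import Dict, List, Tuple

Sentence = Dict[str, list]  # hypothetical fields: "tokens", "triples"


def filter_and_split(sentences: List[Sentence], max_len: int = 100, n_test: int = 5000,
                     n_valid: int = 5000, seed: int = 0
                     ) -> Tuple[List[Sentence], List[Sentence], List[Sentence]]:
    """Drop over-long or relation-free sentences, then carve out test and validation sets."""
    kept = [s for s in sentences if len(s["tokens"]) <= max_len and s["triples"]]
    rng = random.Random(seed)  # a private generator keeps the split reproducible
    rng.shuffle(kept)
    test, rest = kept[:n_test], kept[n_test:]
    valid, train = rest[:n_valid], rest[n_valid:]
    return train, valid, test
```

Drawing the validation set from the non-test remainder mirrors the order described in the text: test first, then validation out of the training portion.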

WebNLG[22] was originally created for the natural language generation (NLG) task. We follow the method of to filter out sentences with more than 100 words, and for WebNLG we use only the first sentence of each instance in our experiments. The number of sentences in each class of the NYT and WebNLG datasets is shown in Table 1. In the experimental analysis, we examine the SEO subset of the NYT dataset in detail.

Table 1 The number of sentences in the Normal, SEO, and EPO categories in the training and test sets

We use the Stanford NLP tools to perform tokenization, part-of-speech tagging, and dependency parsing on the sentences to obtain the inputs for the POS and Deprel embeddings.

3.2 Experimental parameter setting and evaluation metrics

Table 2 lists the main parameter settings. We use LSTM[23] as the cell of the Bi-LSTM layer, with 64 units. Word embeddings are initialized with pre-trained 50-dimensional word2vec vectors; the character embedding size is 25, and the POS and Deprel embedding sizes are both 32. For the attention mechanism, we use multi-head attention[20] with 8 heads. We use Adam[24] to optimize the parameters, with an initial learning rate of 0.001, and stop training early if the loss decreases only marginally or not at all within 30 epochs. We also regularize the network with dropout, applied between the input embeddings and the hidden layers.
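The settings above determine the widths of the layers in Eqs. (1), (2) and (9). The Python sketch below records them, assuming the 64 LSTM units are per direction and that the Deprel embedding in Eq. (9) also has 32 dimensions. The early-stopping class is one hypothetical reading of the stopping rule, with `min_delta` standing in for "decreases only marginally"; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    lstm_units: int = 64          # assumed to be per direction
    word_dim: int = 50            # pre-trained word2vec
    char_dim: int = 25
    pos_dim: int = 32
    deprel_dim: int = 32
    attention_heads: int = 8
    learning_rate: float = 1e-3   # initial Adam learning rate
    patience: int = 30            # epochs

    @property
    def input_dim(self) -> int:
        """Width of h^0 in Eq. (1): the four embeddings concatenated."""
        return self.word_dim + self.char_dim + self.pos_dim + self.deprel_dim

    @property
    def encoder_dim(self) -> int:
        """Width of h_i in Eq. (2): forward and backward states concatenated."""
        return 2 * self.lstm_units

    @property
    def rc_input_dim(self) -> int:
        """Width of d_i in Eq. (9): h_i concatenated with a Deprel embedding."""
        return self.encoder_dim + self.deprel_dim


class EarlyStopping:
    """Signal a stop after `patience` epochs without a loss drop larger than `min_delta`."""

    def __init__(self, patience: int, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, loss: float) -> bool:
        if loss < self.best - self.min_delta:
            self.best, self.bad_epochs = loss, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


cfg = Config()
print(cfg.input_dim, cfg.encoder_dim, cfg.rc_input_dim)  # 139 128 160
```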

Table 2 Experimental parameter setting

We compare our method with Copy (Zeng et al.[7]) and GraphRel (Fu et al.[18]), which achieved the best performance on relational fact extraction. We use strict evaluation: an entity is considered correct if and only if both its boundaries and its type are correct, and a relation is correct if and only if both its type and the entities it connects are correct. We report the standard micro-averaged Precision, Recall, and F1 score.
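Under strict evaluation, a predicted triplet counts only when it matches a gold triplet exactly, so micro-averaged scores reduce to set intersections pooled over all test sentences. A minimal Python sketch, assuming each triplet is a hashable tuple that already encodes everything that must match (here plain entity strings stand in for boundaries and types); the example triplets are made up.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]


def micro_prf(gold: Iterable[Set[Triple]], pred: Iterable[Set[Triple]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 with exact (strict) triplet matching."""
    tp = n_pred = n_gold = 0
    for gold_set, pred_set in zip(gold, pred):  # one pair of sets per sentence
        gold_set, pred_set = set(gold_set), set(pred_set)
        tp += len(gold_set & pred_set)
        n_pred += len(pred_set)
        n_gold += len(gold_set)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [{("Sudan", "contains", "Khartoum"), ("Sudan", "capital", "Khartoum")},
        {("Aarhus", "leaderName", "Jacob Bundsgaard")}]
pred = [{("Sudan", "contains", "Khartoum")},
        {("Aarhus", "leaderName", "Jacob"),  # wrong boundary, so it counts as an error
         ("Aarhus", "cityServed", "Aarhus airport")}]
print(micro_prf(gold, pred))  # all three scores are about 0.333
```

Pooling counts before dividing gives sentences with many triplets proportionally more weight than averaging per-sentence scores would.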

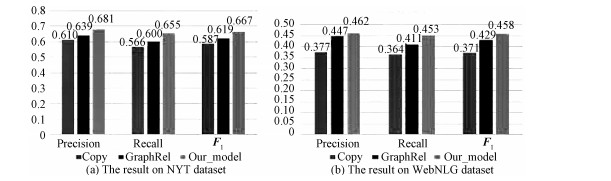

3.3 Different types of triples

In this paper, we divide the sentences in the test corpus into three types: Normal, SEO, and EPO. Figure 4 shows the Precision, Recall, and F1 values of the Copy model, GraphRel, and our model.

Fig. 4 Experimental results on different datasets

As Fig. 4 shows, on the NYT dataset our model achieves the best F1 score, 0.667, an improvement of 8 % over the Copy model and 4.8 % over GraphRel. On the WebNLG dataset, our model also achieves the highest F1 score (0.458), outperforming the Copy model and GraphRel by 18.7 % and 2.9 %, respectively. These observations verify the effectiveness of our model.

We also observe that on both NYT and WebNLG, the precision and recall of our model are relatively balanced. We attribute this to the model structure: in the relation recognition task, the last word of each entity is classified with multiple labels, which identifies overlapping relations. The Copy model applies a copy mechanism to find the entities of a triplet, and a word can be copied several times when it participates in several different triplets, but Copy cannot handle entities that consist of multiple words. Unsurprisingly, our model recalls more triplets and achieves higher recall.

To verify our model's ability to handle the overlapping problem, we conduct further experiments on the NYT dataset. Figure 5 shows the results of Copy, GraphRel, and our model on the Normal, EPO, and SEO classes. Our model performs much better than the Copy model and GraphRel on the EPO and SEO classes, on all metrics. In particular, on the SEO class it improves by 24.9 % over the Copy model and by 16.7 % over GraphRel, which indicates that our model is better suited to the triplet overlap problem. We attribute this to two factors. First, the copy mechanism of the Copy model has difficulty determining how many triplets should be generated for an input sentence. Second, GraphRel models text as a relational graph, which can lose semantic information, and the embeddings of entity nodes in its GCN tend to be over-smoothed. In contrast, our model labels entities and classifies relations (with multiple labels) separately, and then combines the outputs of the two subtasks strictly to extract fact triplets.

Fig. 5 The F1 score on different classes of NYT

Part-of-speech (POS) information and dependency relations (Deprel) carry more information about a sentence than word embeddings alone, and attention mechanisms can effectively mine features in text. To verify the design of our model, we examine how adding external information (POS and Deprel) and adding attention at different positions affect entity extraction and relation recognition. We analyze the results on the SEO subset of NYT in detail. The baseline, denoted Original, has neither POS nor Deprel embeddings in the input of the encoding layer and no attention layer after the Bi-LSTM; in the relation recognition process, neither Deprel embeddings nor attention layers are added.

3.4.1 Add POS embedding

In this experiment, POS information is added to the Original model; the results are shown in Table 3. Input_add_POS adds the POS embedding to the embedding layer, and Rel_add_POS adds the POS embedding to the input of the relation classification layer.

Table 3 The results of adding POS embedding on SEO dataset

As Table 3 shows, compared with the Original, Input_add_POS improves Precision, Recall, and F1 by approximately 6 %, 3 %, and 5 %, respectively. Rel_add_POS also improves on the Original, but less than Input_add_POS. We believe this is because the input of the relation recognition task is the output of the encoding layer, which already contains richer representations that the neural network derives from the POS information. The comparison between Rel_add_POS and Input_add_POS therefore suggests that concatenating the POS embedding to the input of relation recognition is not appropriate: it blurs the features obtained during encoding and yields lower performance than Input_add_POS. Regardless of where POS is added, however, adding it improves on the Original.

3.4.2 Add Deprel embedding

This group of experiments verifies the effect of adding dependency relations (Deprel); the results are shown in Table 4. The first experiment, Input_add_deprel, concatenates the Deprel embedding with the other input embeddings. Compared with the Original, it improves Precision, Recall, and F1 by approximately 6 %, 3 %, and 4.5 %, respectively. The Rel_add_deprel experiment builds on Input_add_deprel and additionally concatenates the Deprel embedding to the input of the relation recognition task. Compared with the Original, it improves Precision, Recall, and F1 by approximately 8 %, 3.5 %, and 6 %, respectively. This shows that the dependency relation feature has a greater impact on the relation recognition task. The reason lies in the nature of dependency relations: they encode the grammatical interdependence between words, which to some extent reflects their semantic interdependence. We observe the same trend in the entity extraction task.

Table 4 The results of adding Deprel embedding on SEO dataset

This group of experiments examines the impact of the attention mechanism on entity identification and relation recognition; the results are shown in Table 5. We apply attention at two positions: after the input encoding and at the input of the relation recognition task. The Input_attention experiment adds an attention mechanism after the Bi-LSTM encoding of the input layer. Compared with the Original, it improves Precision, Recall, and F1 by 1 %, 5.5 %, and 3.4 %, respectively. The Rel_attention experiment adds an attention mechanism to the input layer of the relation recognition task, improving Precision, Recall, and F1 by about 4 % over the Original. We believe two reasons explain these improvements. First, attention applied after the Bi-LSTM encoding captures global features between all words in the sentence: as sentence length increases, long-distance dependencies tend to be forgotten, and attention compensates for this by directly computing the correlation between every pair of word vectors. Second, attention in the relation recognition task assigns more weight to the connections between related entities. Even though the positions of the entities in a relation triplet are not yet known at this stage, the connection between two related entities is stronger than that between unrelated words or entities; attention can focus on these connections and lays a better foundation for the subsequent relation classification.

Table 5 The results of adding attention on SEO dataset

Table 6 shows case studies of the Copy model, GraphRel, and our proposed model. The first sentence is an easy case that all models extract accurately. In the second case, although the relevant mention is not a named entity, it carries the implicit meaning of Italy; therefore, our model can further predict that the ground of A.S. Gubbio 1910 is in Italy. The third case belongs to the SEO class: Copy and GraphRel only discover that Asam pedas is the same as Asam padeh, while our model also finds that Asam pedas is located in the Malay Peninsula and comes from Malaysia. The fourth case belongs to the EPO class, where our model identifies multiple types of relations between the same entities.

Table 6 Case study of different models

In this paper, we propose a new joint extraction model for relation extraction, which consists of entity recognition and relation recognition. To handle overlapping relations, we use multi-label classification in relation recognition: we label the positions of the head and tail entities of each relation triplet with the corresponding relation. Since an entity may contain multiple words, we use only the position of the last word of the entity in labeling. In addition, we add part-of-speech (POS) information and dependency relations (Deprel) at the input layer and employ an attention mechanism at the encoding layer. Our experiments show that these modifications are effective and significantly improve relation extraction: compared with Copy on the NYT dataset, Precision, Recall, and F1 improve by about 7.1 %, 8.9 %, and 8 %, respectively. Several directions remain for future research. In this article, we simply concatenated the dependency embeddings and did not further exploit sentence-structure information. Many recent studies use graph convolutional networks to model the syntactic dependency tree, which can mine sentence structure and provide more information than the simple dependencies we used. From another perspective, syntactic dependencies and part-of-speech information depend on external tools, so it is also worth investigating whether comparable results can be achieved for relation extraction using only the sentence itself.

| [1] |

Zelenko D, Aone C, Richardella A. Kernel methods for relation extraction[C]//Proceedings of the ACL-02 conference on Empirical methods in natural language processing-EMNLP'02. Not Known. Morristown, NJ, USA: Association for Computational Linguistics, 2002: 71-78. DOI: 10.3115/1118693.1118703.

|

| [2] |

Chan Y S, Roth D. Exploiting syntactico-semantic structures for relation extraction[C]//Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1. Portland, Oregon, 2011: 551-560.

|

| [3] |

Li Q, Ji H. Incremental joint extraction of entity mentions and relations[C]//Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Baltimore, Maryland. Stroudsburg, PA, USA: Association for Computational Linguistics, 2014: 402-412. DOI: 10.3115/v1/p14-1038.

|

| [4] |

Yu X, Lam W. Jointly identifying entities and extracting relations in encyclopedia text via a graphical model approach[C]//Proceedings of the 23rd International Conference on Computational Linguistics: Posters. Association for Computational Linguistics. August 23-27, 2010, Beijing, China. 2010: 1399-1407.

|

| [5] |

Miwa M, Bansal M. End-to-end relation extraction using LSTMs on sequences and tree structures[C]// Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Berlin, Germany. Stroudsburg, PA, USA: Association for Computational Linguistics, 2016: 1105-1116. DOI: 10.18653/v1/p16-1105.

|

| [6] |

Dai D, Xiao X Y, Lyu Y J, et al. Joint extraction of entities and overlapping relations using position-attentive sequence labeling[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, 33: 6300-6308. DOI:10.1609/aaai.v33i01.33016300 |

| [7] |

Zeng X R, Zeng D J, He S Z, et al. Extracting relational facts by an end-to-end neural model with copy mechanism[C]//Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Melbourne, Australia. Stroudsburg, PA, USA: Association for Computational Linguistics, 2018: 506-514. DOI: 10.18653/v1/p18-1047.

|

| [8] |

Collobert R, Weston J, Bottou L, et al. Natural language processing (almost) from scratch[J]. IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences, 2011, abs/1103.0398: 2493-2537.

[9]

Ma X Z, Hovy E. End-to-end sequence labeling via Bi-directional LSTM-CNNs-CRF[EB/OL]. 2016: arXiv:1603.01354 [cs.LG]. (2016-03-04) [2020-05-20] https://arxiv.org/abs/1603.01354.

[10]

Auer S, Bizer C, Kobilarov G, et al. DBpedia: a nucleus for a web of open data[C]//The Semantic Web. Springer, Berlin, Heidelberg, 2007: 722-735. DOI: 10.1007/978-3-540-76298-0_52.

[11]

Bollacker K, Evans C, Paritosh P, et al. Freebase: a collaboratively created graph database for structuring human knowledge[C]//SIGMOD '08: Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data. 2008: 1247-1250. DOI: 10.1145/1376616.1376746.

[12]

Nadeau D, Sekine S. A survey of named entity recognition and classification[J]. Lingvisticae Investigationes, 2007, 30(1): 3-26. DOI: 10.1075/li.30.1.03nad.

[13]

Rink B, Harabagiu S. UTD: classifying semantic relations by combining lexical and semantic resources[C]//Proceedings of the 5th International Workshop on Semantic Evaluation. Association for Computational Linguistics, 2010: 256-259.

[14]

Luo G, Huang X J, Lin C Y, et al. Joint entity recognition and disambiguation[C]//Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal. Stroudsburg, PA, USA: Association for Computational Linguistics, 2015: 879-888. DOI: 10.18653/v1/d15-1104.

[15]

Lample G, Ballesteros M, Subramanian S, et al. Neural architectures for named entity recognition[C]//Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. San Diego, California. Stroudsburg, PA, USA: Association for Computational Linguistics, 2016: 260-270. DOI: 10.18653/v1/n16-1030.

[16]

Huang Z H, Xu W, Yu K. Bidirectional LSTM-CRF models for sequence tagging[J]. arXiv: 1508.01991, 2015.

[17]

Zheng S C, Wang F, Bao H Y, et al. Joint extraction of entities and relations based on a novel tagging scheme[C]//Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Vancouver, Canada. Stroudsburg, PA, USA: Association for Computational Linguistics, 2017: 1227-1236. DOI: 10.18653/v1/p17-1113.

[18]

Fu T J, Li P H, Ma W Y. GraphRel: modeling text as relational graphs for joint entity and relation extraction[C]//Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence, Italy. Stroudsburg, PA, USA: Association for Computational Linguistics, 2019: 1409-1418. DOI: 10.18653/v1/p19-1136.

[19]

Mikolov T, Chen K, Corrado G, et al. Efficient estimation of word representations in vector space[J]. arXiv: 1301.3781, 2013.

[20]

Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need[C]//Advances in Neural Information Processing Systems. 2017: 5998-6008.

[21]

Riedel S, Yao L M, McCallum A. Modeling relations and their mentions without labeled text[C]//Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, Berlin, Heidelberg, 2010: 148-163. DOI: 10.1007/978-3-642-15939-8_10.

[22]

Gardent C, Shimorina A, Narayan S, et al. Creating training corpora for NLG micro-planners[C]//Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Vancouver, Canada. Stroudsburg, PA, USA: Association for Computational Linguistics, 2017: 179-188. DOI: 10.18653/v1/p17-1017.

[23]

Hochreiter S, Schmidhuber J. Long short-term memory[J]. Neural Computation, 1997, 9(8): 1735-1780. DOI: 10.1162/neco.1997.9.8.1735.

[24]

Kingma D P, Ba J. Adam: a method for stochastic optimization[J]. arXiv: 1412.6980, 2014.

2022, Vol. 39