2. University of Chinese Academy of Sciences, Beijing 100049, China;

3. Chengdu ZhongKeWei Information Technology Research Institute Co Ltd, Chengdu 610000, China


With the rapid development of smartphones, the camera function of mobile phones has become increasingly powerful. People are happy to take photos of their favorite scenery anytime and anywhere. However, taking a good photo requires attention to multiple factors such as lighting conditions; in particular, highlights can obscure buildings or people in an image. The degradation occurs principally because the areas affected by highlights contain different imagery from those without highlights.

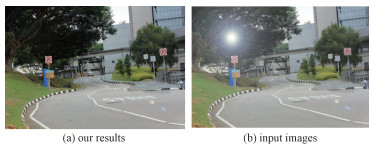

In this paper, we address the particular situation where the image is impaired by highlights. Our goal is to remove the highlights and produce a clean image as shown in Fig. 1. Using our method, people can take their own satisfactory photos more easily.

Fig. 1 Demonstration of our highlight removal method

So far, most work on image highlight removal has relied on traditional methods such as analyzing pixel color (highlight color)[1] or using bilateral filtering[2]. Few papers have addressed highlight removal with neural networks. We believe that neural networks are less restricted by scene and lighting type than traditional algorithms, which is why we choose a GAN (generative adversarial network) to remove the highlights. We find that image highlight removal is similar to image raindrop removal in algorithm flow (locate, then restore), so methods proposed for raindrop detection and removal are helpful to us. The methods in Refs. [3-5] are dedicated to detecting raindrops. Other methods detect and remove raindrops using a stereo camera[6], video[7-8], or a specifically designed optical shutter[9], and are thus not applicable to a single input image taken by a normal camera. Recently, a network proposed by Qian et al.[10] has performed well at both detecting and removing raindrops.

Generally, the highlight-removal problem is intractable for two main reasons: the regions occluded by highlights are not given, and the information about the background scene in the occluded regions is almost entirely lost. Naturally, there are two corresponding steps to solve this problem: first locate the area affected by the highlights, then restore the occluded area.

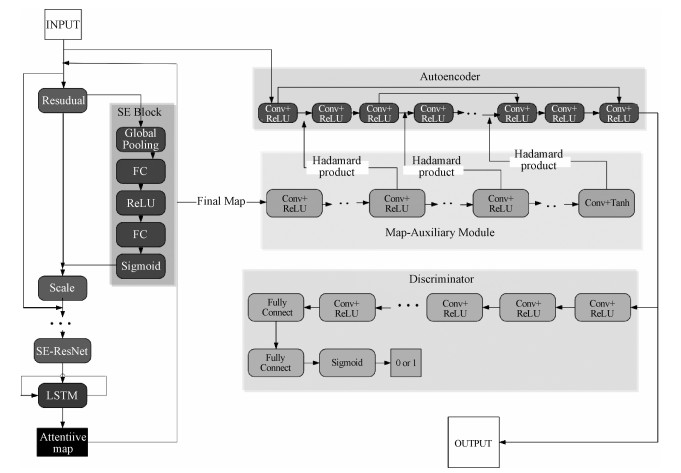

In our network, we propose an improved GAN[11] in which the generator is assessed by the discriminator to ensure that it produces good outputs. The first part of the generator locates the area affected by the highlights, so we build an attention module, which mainly consists of an SE-ResNet[12-13] combined with a convolutional long short-term memory network (ConvLSTM)[14]. The second part removes the degraded area with our attention-auxiliary autoencoder; the output of the attention module guides the network to remove highlights more accurately. We use the encoder to extract higher-order features and the decoder to predict clean images. Finally, our discriminative network checks whether the output looks real enough. This kind of training is like a game, through which both the generator and the discriminator become stronger.

Overall, building on existing networks, we make two main contributions: we apply a deep-learning method (GAN) to image highlight removal, and we inject the SE block into the generative network.

1 The proposed network

The attentive GAN[10] is effective for image raindrop removal. However, it is not well suited to image highlight removal because raindrops and highlights have different characteristics. We therefore improve the generator to better fit our task. Figure 2 shows an overview of our proposed attention-auxiliary GAN.

Fig. 2 Overview of our proposed attention-auxiliary GAN

We employ a recurrent network to find the areas influenced by highlights. In our architecture, each time step consists primarily of an SE-ResNet and a ConvLSTM network.

1.1.1 SE-ResNet

SE-ResNet is one of the most essential parts of the attention model. It can be seen as a combination of SE blocks[12] and residual networks[13]. Since the features of highlights are very different from those of the background, the weights of the corresponding feature maps should also differ, and SE blocks provide exactly this channel-wise reweighting.

An SE block consists of three steps: squeeze, excitation, and scale. First, squeeze is achieved by using global average pooling to generate channel-wise statistics. Formally, a statistic $\mathcal{z} \in \mathbb{R}^{C}$ is generated by shrinking U through its spatial dimensions H×W, such that the c-th element of $\mathcal{z}$ is calculated by:

| $ {\mathcal{z}_c} = {F_{{\rm{sq}}}}({u_c}) = \frac{1}{{H \times W}}\sum\limits_{i = 1}^H {\sum\limits_{j = 1}^W {{\rm{ }}{u_c}\left( {i, j} \right).} } $ | (1) |

To make use of the information aggregated in the squeeze operation, we follow it with a second operation which aims to fully capture channel-wise dependencies. We opt to employ a simple gating mechanism with a sigmoid activation:

| $ s = {F_{{\rm{ex}}}}\left( {\mathcal{z}, W} \right) = \sigma \left( {g\left( {\mathcal{z}, W} \right)} \right) = \sigma ({W_{\rm{2}}}\delta ({W_1}\mathcal{z})). $ | (2) |

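where δ refers to the ReLU[15] function and σ to the sigmoid function. The output of the block is then obtained by rescaling each channel of U with its activation: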

| $ {{\tilde x}_c} = {F_{{\rm{scale}}}}({u_c},{s_c}) = {s_c}.{u_c}, $ | (3) |

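where $F_{\rm{scale}}(u_c, s_c)$ denotes channel-wise multiplication between the scalar $s_c$ and the feature map $u_c$.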
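The three steps of Eqs. (1)-(3) can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the TensorFlow implementation used in the paper: the function name `se_block`, the random weights, and the weight shapes (`W1` of size C/r × C and `W2` of size C × C/r, following the usual SE design with reduction ratio r) are assumptions made for the example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def se_block(U, W1, W2):
    """Apply squeeze, excitation, and scale to a feature map U of shape (H, W, C).

    W1 has shape (C // r, C) and W2 has shape (C, C // r); both are assumed
    shapes for the two fully connected layers of the excitation step.
    """
    z = U.mean(axis=(0, 1))                      # squeeze, Eq. (1): shape (C,)
    s = sigmoid(W2 @ np.maximum(W1 @ z, 0.0))    # excitation, Eq. (2): ReLU then sigmoid
    return U * s, s                              # scale, Eq. (3): broadcast over H and W

# Toy example with random features and weights (hypothetical values).
rng = np.random.default_rng(0)
height, width, C, r = 8, 8, 32, 16
U = rng.standard_normal((height, width, C))
W1 = rng.standard_normal((C // r, C)) * 0.1
W2 = rng.standard_normal((C, C // r)) * 0.1
X_tilde, s = se_block(U, W1, W2)
```

Each channel of `X_tilde` is the corresponding channel of `U` multiplied by a single weight in (0, 1), which is how the block emphasizes some feature maps over others.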

Long short-term memory (LSTM)[16] is a special recurrent neural network (RNN) capable of learning long-term dependencies. The key to LSTM is the cell state, which acts like a conveyor belt: it runs straight down the entire chain with only minor linear interactions, so information can easily flow along it unchanged. Each time step consists of an input gate $i_t$, a forget gate $f_t$, an output gate $o_t$, and a cell state $C_t$. The ConvLSTM is essentially the same as the LSTM, taking the output of the previous layer as the input to the next layer. Unlike the ordinary LSTM, the ConvLSTM replaces the operations between states with convolutions, so it captures not only temporal relationships but also spatial features. The key equations of ConvLSTM are shown below, where '*' denotes the convolution operator, '∘' denotes the Hadamard product, $X_t$ is the input, and $H_t$ is the output:

| $ {i_t} = \sigma ({W_{xi}} * {X_t} + {W_{hi}} * {H_{t - 1}} + {W_{ci}} \circ {C_{t - 1}} + {b_i}), $ | (4) |

| $ {f_t} = \sigma ({W_{xf}}*{X_t} + {W_{hf}}*{H_{t - 1}} + {W_{cf}} \circ{C_{t - 1}} + {b_f}), $ | (5) |

| $ {C_t} = {f_t} \circ {C_{t - 1}} + {i_t} \circ {\rm{tanh}}({W_{xc}}*{X_t} + {W_{hc}}*{H_{t - 1}} + {b_c}), $ | (6) |

| $ {o_t} = \sigma ({W_{xo}}*{X_t} + {W_{ho}}*{H_{t - 1}} + {W_{co}} \circ {C_t} + {b_o}), $ | (7) |

| $ {H_t} = {o_t}\circ{\rm{tanh}}({C_t}). $ | (8) |
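To make Eqs. (4)-(8) concrete, the following NumPy sketch implements one ConvLSTM step and unrolls it for a few time steps. It is a simplified illustration under stated assumptions: the helper names (`conv2d_same`, `init_params`, `convlstm_step`), the 3×3 kernels, the channel counts, and the random initialization are hypothetical, and the same input is fed at every step, whereas the actual attention module also involves the SE-ResNet.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, w):
    """Stride-1, zero-padded convolution (cross-correlation, as in deep learning
    frameworks). x: (H, W, C_in); w: (k, k, C_in, C_out) with odd k."""
    k = w.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    height, width = x.shape[:2]
    out = np.zeros((height, width, w.shape[3]))
    for di in range(k):
        for dj in range(k):
            out += xp[di:di + height, dj:dj + width, :] @ w[di, dj]
    return out

def init_params(rng, c_in, c_hid, k=3, hw=(8, 8)):
    """Random weights for the four gates; the W_c* terms are element-wise (peephole) weights."""
    p = {}
    for g in "ifco":
        p["Wx" + g] = rng.standard_normal((k, k, c_in, c_hid)) * 0.1
        p["Wh" + g] = rng.standard_normal((k, k, c_hid, c_hid)) * 0.1
        p["b" + g] = np.zeros(c_hid)
    for g in "ifo":
        p["Wc" + g] = rng.standard_normal(hw + (c_hid,)) * 0.1
    return p

def convlstm_step(X, H_prev, C_prev, p):
    conv = conv2d_same
    i = sigmoid(conv(X, p["Wxi"]) + conv(H_prev, p["Whi"]) + p["Wci"] * C_prev + p["bi"])  # Eq. (4)
    f = sigmoid(conv(X, p["Wxf"]) + conv(H_prev, p["Whf"]) + p["Wcf"] * C_prev + p["bf"])  # Eq. (5)
    C = f * C_prev + i * np.tanh(conv(X, p["Wxc"]) + conv(H_prev, p["Whc"]) + p["bc"])     # Eq. (6)
    o = sigmoid(conv(X, p["Wxo"]) + conv(H_prev, p["Who"]) + p["Wco"] * C + p["bo"])       # Eq. (7)
    H = o * np.tanh(C)                                                                      # Eq. (8)
    return H, C

# Unroll for six steps on a toy 8x8 input with 3 channels (hypothetical sizes).
rng = np.random.default_rng(0)
X = rng.standard_normal((8, 8, 3))
params = init_params(rng, c_in=3, c_hid=4)
H_t = np.zeros((8, 8, 4))
C_t = np.zeros((8, 8, 4))
for _ in range(6):
    H_t, C_t = convlstm_step(X, H_t, C_t, params)
```

Because the gates are computed with convolutions, the hidden state `H_t` keeps its spatial layout, which is what lets the module output a per-pixel attention map.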

For this project, we set the number of ConvLSTM time steps to 6. Feeding the original degraded image into this module yields the final attention map M, a 2D matrix with values ranging from 0 to 1; the higher the value, the more attention should be paid to that location. The loss function of this part is defined as the mean squared error (MSE) between M and D, where D is the difference between the ground truths and the degraded images.
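The attention loss can be illustrated with a small NumPy sketch. The text does not specify exactly how D is computed from the image pair, so the construction below (per-pixel absolute difference averaged over color channels, with images scaled to [0, 1]) is an assumption, and the function names are hypothetical.

```python
import numpy as np

def difference_map(clean, degraded):
    """Assumed form of D: absolute difference averaged over channels.
    Both images are float arrays of shape (H, W, 3) with values in [0, 1]."""
    return np.abs(degraded - clean).mean(axis=-1)

def attention_loss(M, D):
    """Mean squared error between the attention map M and the difference map D."""
    return float(np.mean((M - D) ** 2))

# Toy pair: a uniform image with a saturated 2x2 "highlight" patch.
clean = np.full((4, 4, 3), 0.2)
degraded = clean.copy()
degraded[1:3, 1:3, :] = 1.0
D = difference_map(clean, degraded)

loss_perfect = attention_loss(D.copy(), D)        # attention map matches D exactly
loss_blank = attention_loss(np.zeros_like(D), D)  # attention map ignores the highlight
```

A map that ignores the highlight region is penalized, while a map that matches D incurs zero loss, so minimizing this loss pushes M toward the highlighted area.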

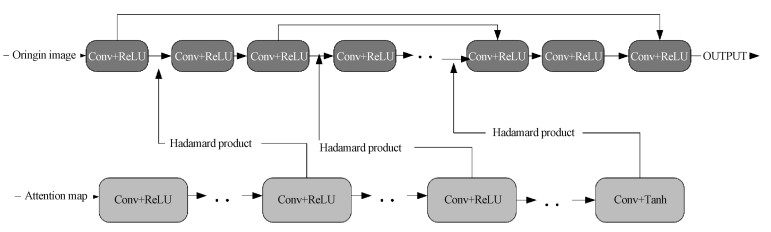

1.2 Map-auxiliary autoencoder

The purpose of this module is to use the previously obtained attention map M to remove highlights and generate a clean image. This module consists of two parts: a normal autoencoder with skip connections and an attention-auxiliary network. Figure 3 illustrates the architecture of our map-auxiliary autoencoder.

Fig. 3 The architecture of our map-auxiliary network

As shown in Fig. 3, the darker part is the autoencoder module, while the grey part is the map-auxiliary module. The final attention map generated by the attention model is very useful in helping the autoencoder remove the highlights. To make better use of it, instead of concatenating it with the input image directly, we feed the final attention map into the attention-auxiliary network, which is trained independently to generate three auxiliary maps. These three auxiliary maps of different scales guide the autoencoder in removing the highlights through the Hadamard product. We describe this module by the following formula:

| $ C = \Phi \left( {A \circ I} \right) + \left( {E - A} \right) \circ I, $ | (9) |

where C is the clean image that we want, A is the attention matrix learned by the attention-auxiliary module, I is the degraded image, and E denotes the all-ones matrix of the same size as A (the identity element of the Hadamard product). Φ represents a transformation that restores the highlight area; this transformation is learned by our autoencoder and attention-auxiliary module. The loss function of this module contains a perceptual loss[17] and a multi-scale loss[10].

1.3 Discriminator

We employ the attentive discriminator[10] as our discriminator. Unlike conventional discriminators, the attentive discriminator uses the final attention map generated by our attention model, and its loss function is based on both the output of the CNN and the attention map.
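The behavior of Eq. (9) can be checked with a short NumPy sketch. Here E is taken to be a matrix of ones, so that (E − A) ∘ I passes through the pixels that receive no attention; this reading of the notation is an assumption. The function `compose_clean` and the stand-in for Φ are hypothetical: in the paper Φ is learned by the autoencoder, whereas here it is a fixed linear map used only to make the example runnable.

```python
import numpy as np

def compose_clean(I, A, phi):
    """Eq. (9): C = phi(A ∘ I) + (E − A) ∘ I, with E assumed to be all ones.

    I: degraded image, shape (H, W, 3); A: attention map, shape (H, W), values in [0, 1];
    phi: callable standing in for the learned restoration transformation.
    """
    A = A[..., None]            # broadcast the map over the color channels
    E = np.ones_like(A)
    return phi(A * I) + (E - A) * I

rng = np.random.default_rng(0)
I = rng.uniform(size=(4, 4, 3))
phi = lambda x: 0.5 * x         # hypothetical stand-in for the learned transformation

C_none = compose_clean(I, np.zeros((4, 4)), phi)  # no attention anywhere
C_full = compose_clean(I, np.ones((4, 4)), phi)   # full attention everywhere
```

With zero attention the output equals the input image, and with full attention it equals Φ(I); intermediate values of A blend restored and original content pixel by pixel.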

2 Experiments

2.1 Dataset and implementation

2.1.1 Dataset

Like current deep learning methods, our method requires a relatively large amount of data with ground truths for training. However, since no such dataset exists for our task, we create our own. Our experiment needs a set of image pairs in which each pair contains exactly the same background scene, with one image degraded by highlights and the other free from highlights. Importantly, we need to control any other cause of misalignment, such as camera motion, when taking the two images, and to ensure that the atmospheric conditions and the background objects remain static during acquisition. In practice, we found it difficult to shoot a pair of photos that differ only in lighting.

We therefore use Photoshop to simulate highlights in images. First, we download from the Internet 861 clean pictures taken with Canon cameras. We then add highlights to the images at random, generating 861 image pairs for training. In this way we obtain an ideal training set. In addition, we use the same method to generate 200 image pairs as the test set.

2.1.2 Model implementation

The model is implemented with the TensorFlow framework and trained from scratch in a fully supervised manner. The learning rate is 0.002. Our attention-auxiliary module has 9 convolution-ReLU blocks, and the reduction ratio r of the SE block is 16.
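For readers who want to reproduce the idea of paired data without Photoshop, a rough programmatic stand-in is sketched below. It is not the procedure used to build the dataset in this paper: the Gaussian-blob highlight model, the parameter ranges, and the function names are all assumptions chosen for illustration.

```python
import numpy as np

def add_gaussian_highlight(img, center, sigma, strength=1.0):
    """Add a soft white blob to a float image of shape (H, W, 3) with values in [0, 1]."""
    h, w = img.shape[:2]
    yy, xx = np.mgrid[0:h, 0:w]
    blob = np.exp(-((yy - center[0]) ** 2 + (xx - center[1]) ** 2) / (2.0 * sigma ** 2))
    return np.clip(img + strength * blob[..., None], 0.0, 1.0)

def make_pair(clean, rng):
    """Return (clean, degraded) with one randomly placed highlight (assumed parameter ranges)."""
    h, w = clean.shape[:2]
    center = (rng.integers(0, h), rng.integers(0, w))
    sigma = rng.uniform(0.05, 0.2) * min(h, w)
    return clean, add_gaussian_highlight(clean, center, sigma)

rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 0.6, size=(32, 32, 3))  # random array standing in for a clean photo
clean_img, degraded_img = make_pair(clean, rng)
```

Because both images in a pair come from the same clean source, they are perfectly aligned, which is the property that is hard to obtain when photographing real scenes twice.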

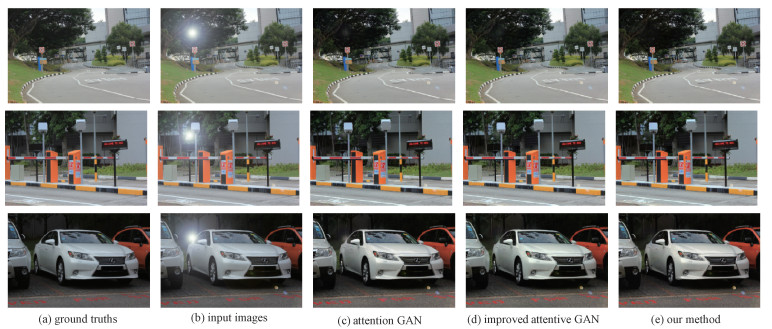

2.2 Results and analysis

2.2.1 Comparison results

There are four models in our experiment. The first is CycleGAN[18], which has no attention mechanism. The second is the original attentive GAN[10], which contains neither the SE block nor the map-auxiliary module. The third is an improved version of the original attentive GAN and forms part of our own network: it weights the channels differently by applying the SE block. The last is the full attention-auxiliary GAN, which employs both the SE block and the map-auxiliary module. Table 1 shows the numerical comparison of these four models.

Table 1 Quantitative evaluation results

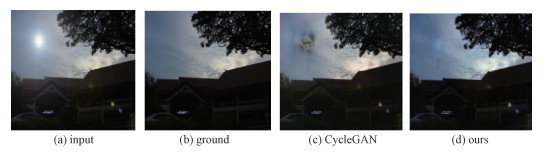

As shown in Table 1, our model achieves a significant numerical improvement over the original model. We also find that direct mapping under the CycleGAN framework does not perform well numerically. Figure 4 shows the visual comparison of CycleGAN and our algorithm.

Fig. 4 Results of comparing CycleGAN and our algorithm

Fig. 4 compares the recovery results of CycleGAN and our algorithm and illustrates that CycleGAN recovers details poorly. Our attention-auxiliary GAN, by contrast, can accurately locate and remove the areas affected by highlights. We can thus infer that the attention network improves the algorithm's ability to recover details by finding the region of interest. Figure 5 shows the visual comparison of the three models with attention mechanisms.

Fig. 5 Results of comparing a few different methods

In addition, in order to display the effect of our attention module in a real scene, we feed the real images degraded by highlights into our network. Figure 6 shows the region of interest extracted by the attention module in a real scene. The red area in the figure indicates the area affected by the highlights, which is also the area we should pay attention to.

Fig. 6 The region of interest extracted by the attention module

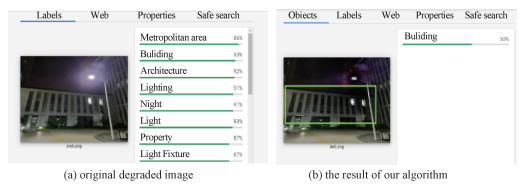

To measure performance in practical applications, we also test our model with the Google Vision API, which reflects how well our outputs can be recognized. As shown in Fig. 7, the Google Vision API cannot frame the building in the original degraded image, whereas it can frame the building in our output. Our method therefore improves target recognition.

Fig. 7 The performance of a pair of images judged by Google Vision API

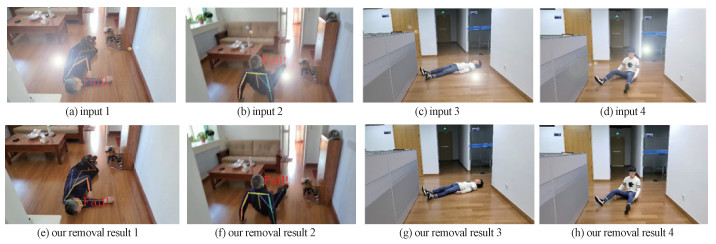

In addition, our algorithm can remove highlights in indoor scenes, thereby improving the accuracy of indoor video detection algorithms such as fall detection. Figure 8 shows the effect of our algorithm in indoor scenes, which is of practical value in real life.

Fig. 8 The performance of our algorithm in indoor scenes (fall detection)

This paper targets the problem of removing highlights from a single image. We have proposed a novel attention-auxiliary GAN. Unlike existing networks, our proposed network feeds the attention map into the map-auxiliary module, which helps the autoencoder generate a clean image. The attention-auxiliary module enables the GAN to accurately locate the region of interest and thus remove the highlights in the image more accurately.

Going forward, we can expand our training set to cover various types of highlights, such as those caused by street lights, car headlights, or camera flash. We therefore believe that our deep-learning-based image highlight-removal method has a wide range of practical application scenarios.

[1] Tan P, Lin S, Quan L, et al. Highlight removal by illumination-constrained inpainting[C]//Proceedings of the 9th IEEE International Conference on Computer Vision. October 13-16, 2003, Nice, France. IEEE, 2003: 164-169. DOI: 10.1109/ICCV.2003.1238333.

[2] Yang Q X, Wang S N, Ahuja N. Real-time specular highlight removal using bilateral filtering[C]//European Conference on Computer Vision-ECCV 2010. Springer, Berlin, Heidelberg, 2010: 87-100. DOI: 10.1007/978-3-642-15561-1_7.

[3] Kurihata H, Takahashi T, Ide I, et al. Rainy weather recognition from in-vehicle camera images for driver assistance[C]//IEEE Proceedings of Intelligent Vehicles Symposium, June 6-8, 2005, Las Vegas, NV, USA. IEEE, 2005: 205-210. DOI: 10.1109/IVS.2005.1505103.

[4] Roser M, Geiger A. Video-based raindrop detection for improved image registration[C]//2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops. September 27-October 4, 2009, Kyoto, Japan. IEEE, 2009: 570-577. DOI: 10.1109/ICCVW.2009.5457650.

[5] Roser M, Kurz J, Geiger A. Realistic modeling of water droplets for monocular adherent raindrop recognition using bézier curves[C]//Asian Conference on Computer Vision-ACCV 2010. Springer, Berlin, Heidelberg, 2010: 235-244. DOI: 10.1007/978-3-642-22819-3_24.

[6] Tanaka Y, Yamashita A, Kaneko T, et al. Removal of adherent waterdrops from images acquired with a stereo camera system[J]. IEICE Transactions on Information and Systems, 2006, E89-D(7): 2021-2027. DOI: 10.1093/ietisy/e89-d.7.2021.

[7] Yamashita A, Fukuchi I, Kaneko T. Noises removal from image sequences acquired with moving camera by estimating camera motion from spatio-temporal information[C]//2009 IEEE/RSJ International Conference on Intelligent Robots and Systems. October 10-15, 2009, St. Louis, MO, USA. IEEE, 2009: 3794-3801. DOI: 10.1109/IROS.2009.5354639.

[8] You S D, Tan R T, Kawakami R, et al. Adherent raindrop modeling, detection and removal in video[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 38(9): 1721-1733. DOI: 10.1109/TPAMI.2015.2491937.

[9] Hara T, Saito H, Kanade T. Removal of glare caused by water droplets[C]//2009 Conference for Visual Media Production. November 12-13, 2009, London, UK. IEEE, 2009: 144-151. DOI: 10.1109/CVMP.2009.17.

[10] Qian R, Tan R T, Yang W H, et al. Attentive generative adversarial network for raindrop removal from a single image[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. June 18-23, 2018, Salt Lake City, UT, USA. 2018: 2482-2491. DOI: 10.1109/CVPR.2018.00263.

[11] Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets[C]//Advances in Neural Information Processing Systems, 2014: 2672-2680.

[12] Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. June 18-23, 2018, Salt Lake City, UT, USA. 2018: 7132-7141. DOI: 10.1109/CVPR.2018.00745.

[13] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition. June 27-30, 2016, Las Vegas, NV, USA. 2016: 770-778. DOI: 10.1109/CVPR.2016.90.

[14] Shi X J, Chen Z R, Wang H, et al. Convolutional LSTM network: a machine learning approach for precipitation nowcasting[C]//Advances in Neural Information Processing Systems, 2015: 802-810.

[15] Nair V, Hinton G E. Rectified linear units improve restricted boltzmann machines[C]//Proceedings of the 27th International Conference on Machine Learning (ICML-10), 2010: 807-814.

[16] Hochreiter S, Schmidhuber J. Long short-term memory[J]. Neural Computation, 1997, 9(8): 1735-1780. DOI: 10.1162/neco.1997.9.8.1735.

[17] Johnson J, Alahi A, Li F F. Perceptual losses for real-time style transfer and super-resolution[C]//European Conference on Computer Vision-ECCV 2016. Springer, Cham, 2016: 694-711. DOI: 10.1007/978-3-319-46475-6_43.

[18] Zhu J Y, Park T, Isola P, et al. Unpaired image-to-image translation using cycle-consistent adversarial networks[C]//2017 IEEE International Conference on Computer Vision. October 22-29, 2017, Venice, Italy. IEEE, 2017: 2242-2251. DOI: 10.1109/ICCV.2017.244.

2022, Vol. 39
