Memory-intensive applications need to cache huge amounts of user data in main memory to achieve high performance, because of the large performance gap between main memory and its extension on storage devices such as hard disks. However, dynamic random access memory (DRAM), the main memory of current computer systems, suffers from high power consumption and a high price per bit. Moreover, the hardware resources of a computer limit how far memory capacity can be expanded. Hence, a large DRAM capacity makes a computer neither cost-effective nor energy-efficient. When the available DRAM is about to be exhausted, the operating system extends main memory capacity by using part of the storage, such as a hard disk.

Many new non-volatile memory (NVM) devices have emerged recently, such as phase change memory (PCM), magnetic random-access memory (MRAM), resistive random-access memory (RRAM) and NAND flash-based SSDs. Some researchers[1-3] have introduced PCM into the memory system, which reduces memory power consumption and increases memory capacity. However, PCM technology, represented by Intel Optane memory, is not yet mature enough to be widely used in computer systems because of its high cost and limited capacity. Among the various emerging NVM devices, NAND flash-based SSDs have been widely used in enterprise data storage systems. Compared with hard disks, NAND flash memory has much lower access latency, which makes it more suitable than a hard disk for extending main memory.

There has already been much research on using flash as a DRAM extension. The works in Refs. [4-6] integrate the SSD as a system swap device. However, OS swapping works at page granularity, which causes unnecessary data accesses and wastes I/O bandwidth when objects are smaller than a page. Other works[7-8] map an SSD file into memory through the POSIX mmap() call. The performance of this method depends on favorable data access patterns, and it achieves only a fraction of the SSD's performance. A few works[9-10] modify the memory management of the OS and of applications, which incurs large overhead. Yoshida et al.[11] implement the flash translation layer (FTL) in software on the host side, so that small but frequently accessed data such as hash tables are stored in DRAM, while key/value data, which occupy most of the capacity, are stored in a memory space composed of DRAM and flash. However, this application-optimized architecture requires redesigning the software FTL and the hardware acceleration module for each application, which leads to poor portability. Other approaches[12-13] implement hybrid memory through hardware extensions or modifications. Some efforts adopt the SSD as a main memory extension by implementing a runtime library. SSDAlloc[14] boosts performance by managing data at object granularity. However, it incurs extra page faults, which add latency even when data are fetched from the DRAM object cache. Chameleon[15] reduces these extra page faults by giving applications access to the entire physical memory, which wastes physical main memory.

In summary, managing an SSD memory extension with traditional Linux mechanisms, such as swapping and mmap(), cannot fully exploit the performance advantages of the SSD. Hardware-based improvements and application-oriented solutions both suffer from poor portability. Designing a dedicated management method for extended memory, as SSDAlloc and Chameleon do, would therefore be an effective approach.

In this paper, we propose a hybrid main memory architecture named HMM_alloc (hybrid main memory allocator) that augments DRAM with an SSD. HMM_alloc implements a library that provides application interfaces for allocating and releasing memory in user space. Data accesses are managed at object granularity by a custom page fault handler, similar to the idea of Liu et al.[16]. When objects are smaller than 4 KB, object-granularity management reduces extra system I/O by caching as many hot objects as possible in DRAM. Our key contributions are as follows:

1) HMM_alloc: a hybrid main memory architecture that uses the SSD as an extension of main memory and DRAM as a cache for the SSD. To manage data movement between DRAM and SSD transparently, HMM_alloc implements a runtime library that offers malloc-like interfaces to applications.

2) Memory management at object granularity: in contrast to using the SSD as a system swap device, which manages data at page granularity, HMM_alloc works at application object granularity to make data accesses on the SSD more efficient. To reduce the physical memory waste caused by object granularity, HMM_alloc manages physical memory in micro-pages with a flexible memory partition strategy and a multi-mapping strategy.

3) Evaluation: The HMM_alloc architecture has been evaluated on a DRAM-restricted server with a number of memory-intensive workloads including microbenchmarks and some representative real-world applications.

1 Design

This section explains the strategies used to implement the HMM_alloc system.

1.1 HMM system architecture

The proposed DRAM/SSD hybrid main memory architecture treats a NAND flash-based SSD as an extension of system main memory. It efficiently handles allocation and de-allocation requests in user space, as well as random read/write operations on a large number of objects of various sizes. A runtime library takes charge of both virtual address space allocation and memory page exceptions. Accesses to data outside DRAM are handled by a custom page fault handler, as shown in Fig. 1. Instead of swapping at page granularity, HMM_alloc works at application object granularity to boost system performance and use the SSD efficiently. To this end, DRAM is partitioned into micro-pages of several sizes and offsets, both smaller than one page. When an object is accessed for the first time or fetched from the SSD, an appropriate micro-page is materialized dynamically to fill its page table entry.

Fig. 1 The data accessing of the hybrid memory

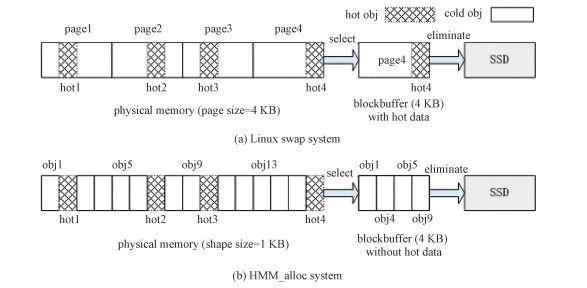

In the Linux swap system, as shown in Fig. 2(a), data exchange between memory and storage is managed at page granularity. If a page selected for eviction contains hot data, it will soon be fetched back, which causes extra system I/O. In the proposed HMM_alloc system, as shown in Fig. 2(b), memory is managed at object granularity (e.g., object size = 1 KB), so only cold objects are evicted to the SSD, which reduces the probability of hot data being swapped out of memory. Moreover, the SSD is managed as a log-structured object store partitioned at sector granularity, and only objects, rather than whole pages, are transferred when data are fetched. Packing objects and writing them into a log not only reduces the volume of written data but also extends SSD lifetime.
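The effect of granularity on DRAM efficiency can be checked with simple arithmetic. The sketch below is a minimal illustration under stated assumptions: 4 KB pages, the worst case for swapping (every hot object sits on its own page), and micro-pages that exactly match the object size. It counts how many hot objects a DRAM budget can hold in each scheme; the function name `hot_objects_cached` is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_BYTES 4096u  /* assumed page size */

/* Hypothetical helper: number of hot objects of `obj_size` bytes that fit in
 * `dram_bytes` of DRAM.
 * Page granularity (swap), worst case: each hot object pins a whole 4 KB page.
 * Object granularity: each object occupies one micro-page of its own size.
 * Objects larger than a page are out of scope for this sketch (returns 0). */
static uint64_t hot_objects_cached(uint64_t dram_bytes, uint32_t obj_size,
                                   bool object_granularity)
{
    if (obj_size == 0 || obj_size > PAGE_BYTES) return 0;
    uint64_t slot = object_granularity ? obj_size : PAGE_BYTES;
    return dram_bytes / slot;
}
```

With the 64 MB DRAM limit used in the evaluation and 256-byte objects, page granularity holds 16 384 hot objects in the worst case while object granularity holds 262 144, a 16x difference that matches the 1/16 worst case cited for the sorting workload.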

Fig. 2 Comparison between Linux swap system and HMM_alloc system

Virtual memory is allocated in an object-per-page manner, so that data can be accessed at object granularity instead of the one-page granularity of the traditional swap policy. That is, only one object is allocated on each virtual page, at a varying offset within the page. To reduce the DRAM waste caused by object granularity while still providing transparent tiering to applications, a compact caching method and a multi-mapping strategy are implemented. Specifically, the offset of each object within its virtual page must match the offset of its corresponding physical memory space. A kernel module fills in the page table entries accordingly, so that multiple virtual pages can be mapped onto one physical page, as shown in Fig. 3.
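The multi-mapping idea can be emulated in user space to see why per-page offsets must not collide. HMM_alloc rewrites PTEs through a kernel module; the sketch below instead relies on an assumption of our own: it maps one page-sized shared temporary file at several virtual addresses, so every view is backed by the same physical page. `map_shared_views` and `object_addr` are hypothetical names, and object k is placed at offset k × micro-page size in its own virtual page.

```c
#define _GNU_SOURCE
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical sketch: map one page-sized shared backing file at `nviews`
 * distinct virtual addresses. All views share one physical page (page cache),
 * emulating several virtual pages mapped to one physical frame. */
static int map_shared_views(char **views, int nviews, size_t page)
{
    FILE *f = tmpfile();                 /* auto-deleted backing file */
    if (!f) return -1;
    int fd = fileno(f);
    if (ftruncate(fd, (off_t)page) != 0) { fclose(f); return -1; }
    for (int i = 0; i < nviews; i++) {
        views[i] = mmap(NULL, page, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        if (views[i] == MAP_FAILED) { fclose(f); return -1; }
    }
    fclose(f);                           /* mappings stay valid after close */
    return 0;
}

/* Object k lives in its own virtual page (views[k]) at in-page offset
 * k * micro, so objects sharing the physical page never overlap. */
static char *object_addr(char **views, int k, size_t micro)
{
    return views[k] + (size_t)k * micro;
}
```

Writing object 2 through virtual page 2 makes its bytes visible at the same offset through virtual page 0, which is exactly the aliasing the offset-alignment rule relies on.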

Fig. 3 The mapping between virtual and physical memory

A large pool of physical memory is reserved for HMM_alloc when the system starts. This memory is divided into slabs of 1 MB each. Within a slab, the physical page frames are partitioned into micro-pages of a fixed size determined by the current request. When a micro-page is needed for a mapping, a single slab is picked to satisfy allocations of the requested size. All pages of that slab are divided into micro-pages of the size of the first object, and these micro-pages are grouped into different patterns according to their offsets.
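The slab partition can be sketched as follows. Only the 1 MB slab size and the grouping by offset come from the text; the 4 KB page, the 64-byte minimum micro-page, and the requirement that micro-page sizes divide a page evenly are assumptions, and `partition_slab`, `micro_page_addr` and `pattern_list` are hypothetical names. Each page contributes one micro-page per offset, so every pattern starts with as many free micro-pages as the slab has pages.

```c
#include <stddef.h>

#define SLAB_BYTES (1u << 20)          /* 1 MB slab, as in the text */
#define PAGE_BYTES 4096u               /* assumed page size */
#define MIN_MICRO  64u                 /* hypothetical smallest micro-page */
#define MAX_PATTERNS (PAGE_BYTES / MIN_MICRO)

/* Hypothetical free-list descriptor for one (size, offset) pattern. */
typedef struct {
    size_t offset;   /* in-page offset shared by every micro-page in the list */
    size_t count;    /* free micro-pages with this offset in the slab */
} pattern_list;

/* Split a slab into micro-pages of `micro` bytes, grouped by in-page offset.
 * Returns the number of patterns, or 0 if `micro` does not tile a page. */
static size_t partition_slab(size_t micro, pattern_list out[MAX_PATTERNS])
{
    if (micro < MIN_MICRO || micro > PAGE_BYTES || PAGE_BYTES % micro != 0)
        return 0;
    size_t patterns = PAGE_BYTES / micro;        /* distinct offsets per page */
    size_t pages = SLAB_BYTES / PAGE_BYTES;      /* 256 pages per 1 MB slab */
    for (size_t k = 0; k < patterns; k++) {
        out[k].offset = k * micro;
        out[k].count = pages;                    /* one micro-page per page */
    }
    return patterns;
}

/* Slab-relative address of the i-th micro-page in pattern k. */
static size_t micro_page_addr(size_t micro, size_t k, size_t i)
{
    return i * PAGE_BYTES + k * micro;
}
```

For 1 KB objects this yields four patterns (offsets 0, 1024, 2048 and 3072), each with 256 free micro-pages.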

Following our preliminary work[17], the system keeps a group of FIFO lists for micro-pages with the same size and offset and manages them with a two-FIFO clock algorithm. One FIFO list tracks active objects, whereas the other tracks inactive ones, from which victim objects are chosen for swapping out. The micro-pages of a newly acquired slab are first placed in the free lists, waiting for physical memory allocation. Once a micro-page is selected to hold a loaded object, it is added to the head of the active FIFO list. The next time the FIFO is updated, the access bit of the page at the head of the active FIFO is cleared to 0 so that subsequent accesses can be recorded. When all physical slabs are used up and the free list of the required pattern is empty, some objects must be evicted to reclaim space for incoming requests. Eviction starts by checking the access bit of the page at the tail of the active FIFO. If the access bit is 1, the object has been accessed recently and is moved back to the head of the active FIFO. If the access bit is 0, the page is a good candidate for replacement and is moved to the head of the inactive FIFO. The inactive FIFO list, which holds the objects demoted from the active list, is managed in a similar way.
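A minimal sketch of the two-FIFO clock policy for a single (size, offset) pattern is shown below. Several details are assumptions, not taken from the text: access bits live in a plain array instead of being read from PTEs through the kernel module, a second-chance object has its bit cleared when it is moved back to the active head, a referenced object at the inactive tail is promoted to the active head, and capacity is fixed. All names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

#define CAP 64   /* hypothetical number of micro-pages tracked in this sketch */

/* Ring-buffer FIFO; slot `head` holds the most recently inserted id. */
typedef struct { int buf[CAP]; size_t head, len; } fifo;

static void fifo_push_head(fifo *q, int id)
{
    q->head = (q->head + CAP - 1) % CAP;
    q->buf[q->head] = id;
    q->len++;
}

static int fifo_pop_tail(fifo *q)
{
    size_t tail = (q->head + q->len - 1) % CAP;
    q->len--;
    return q->buf[tail];
}

typedef struct {
    fifo active, inactive;
    bool accessed[CAP];   /* stand-in for each micro-page's PTE access bit */
} two_fifo;

static void tf_init(two_fifo *c) { *c = (two_fifo){0}; }

/* A micro-page that just received an object goes to the active head with its
 * access bit cleared, so later accesses can be observed. */
static void tf_load(two_fifo *c, int id)
{
    c->accessed[id] = false;
    fifo_push_head(&c->active, id);
}

/* Emulates the MMU setting the access bit on a load or store. */
static void tf_touch(two_fifo *c, int id) { c->accessed[id] = true; }

/* Returns the id of the object to swap out to SSD, or -1 if nothing is cached. */
static int tf_evict(two_fifo *c)
{
    while (c->active.len + c->inactive.len > 0) {
        /* Scan the active tail until one unreferenced object is demoted. */
        while (c->active.len > 0) {
            int id = fifo_pop_tail(&c->active);
            if (c->accessed[id]) {             /* recently used: second chance */
                c->accessed[id] = false;
                fifo_push_head(&c->active, id);
            } else {
                fifo_push_head(&c->inactive, id);
                break;
            }
        }
        /* Take the victim from the inactive tail; referenced ones are promoted. */
        while (c->inactive.len > 0) {
            int id = fifo_pop_tail(&c->inactive);
            if (!c->accessed[id]) return id;
            c->accessed[id] = false;
            fifo_push_head(&c->active, id);
        }
    }
    return -1;
}
```

For example, after loading objects 0 to 3 and touching object 0, the first eviction skips object 0 (second chance) and swaps out object 1.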

1.4 SSD management

The flash-based SSD is managed as a log-structured object store that holds, in its log, the objects flushed from DRAM. To reduce read/write latency and make better use of DRAM and I/O bandwidth, enough metadata is kept in DRAM to fetch individual objects instead of whole pages. A hierarchical page table indexed by virtual page address stores the size, offset and SSD location of each object.
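The text fixes each table entry at 8 bytes (see the metadata analysis in Section 3) but does not give its layout or the table depth. The sketch below assumes one possible layout, a 12-bit in-page offset, a 13-bit size (up to 4096) and a 39-bit SSD sector number, and a two-level table indexed by the virtual page number relative to the HMM_alloc region, with leaves allocated on first use. `obj_entry`, `obj_table` and the helper functions are hypothetical.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical 8-byte entry: [0,12) offset, [12,25) size, [25,64) SSD sector. */
typedef uint64_t obj_entry;

static obj_entry entry_pack(uint32_t offset, uint32_t size, uint64_t sector)
{
    return ((uint64_t)(offset & 0xFFFu))
         | ((uint64_t)(size & 0x1FFFu) << 12)
         | ((sector & ((1ULL << 39) - 1)) << 25);
}
static uint32_t entry_offset(obj_entry e) { return (uint32_t)(e & 0xFFFu); }
static uint32_t entry_size(obj_entry e)   { return (uint32_t)((e >> 12) & 0x1FFFu); }
static uint64_t entry_sector(obj_entry e) { return e >> 25; }

/* Hypothetical two-level table indexed by virtual page number (vpn). */
#define LEAF_BITS 9                      /* 512 entries = one 4 KB leaf */
#define ROOT_BITS 18
typedef struct { obj_entry *leaf[1u << ROOT_BITS]; } obj_table;

/* Returns the slot for `vpn`, allocating its leaf if `create` is set. */
static obj_entry *table_slot(obj_table *t, uint64_t vpn, int create)
{
    if (vpn >> (LEAF_BITS + ROOT_BITS)) return NULL;   /* outside the region */
    obj_entry **leaf = &t->leaf[vpn >> LEAF_BITS];
    if (!*leaf) {
        if (!create) return NULL;
        *leaf = calloc((size_t)1 << LEAF_BITS, sizeof(obj_entry));
        if (!*leaf) return NULL;
    }
    return &(*leaf)[vpn & ((1u << LEAF_BITS) - 1)];
}

static void table_free(obj_table *t)
{
    for (size_t i = 0; i < ((size_t)1 << ROOT_BITS); i++) free(t->leaf[i]);
    free(t);
}
```

Allocating leaves lazily keeps the table proportional to the number of virtual pages actually holding objects, which matches the linear growth described in Eq. (2).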

To match the read/write characteristics of NAND flash-based SSDs, reads are performed at page granularity and write-backs at block granularity. A clean log block is prepared for flushing dirty objects, and the log is written in rounds. Dirty objects collected from DRAM are packed into a fresh cache block, and their virtual page addresses are stored in the block header as reverse pointers. The SSD locations assigned to these objects are then recorded in the hierarchical object table according to the reverse pointers.
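Packing dirty objects into a log block with reverse pointers might look like the sketch below. The 64 KB block size, the one-sector header holding up to 63 virtual page addresses, and sector-aligned object slots are assumptions chosen for illustration; `log_block` and `block_append` are hypothetical names. The returned sector index, combined with the block number, is what would be written into the object table.

```c
#include <stdint.h>
#include <string.h>

#define SECTOR 512u
#define BLOCK_BYTES (64u * 1024u)  /* hypothetical log block size */
#define HDR_SLOTS 63u              /* reverse pointers fitting in a one-sector header */

typedef struct {
    uint64_t nobj;                 /* objects packed so far */
    uint64_t vpage[HDR_SLOTS];     /* reverse pointers: virtual page of each object */
} block_header;                    /* 8 + 63 * 8 = 512 bytes = one sector */

typedef struct {
    block_header hdr;                                  /* sector 0 */
    uint8_t data[BLOCK_BYTES - sizeof(block_header)];  /* sectors 1.. */
    uint32_t used;                 /* bytes of data[] consumed (kept in DRAM only) */
} log_block;

/* Append one dirty object in a sector-aligned slot. Returns its sector index
 * within the block, or -1 when the block is full and must be flushed first. */
static int64_t block_append(log_block *b, uint64_t vpage, const void *obj, uint32_t size)
{
    uint32_t need = (size + SECTOR - 1) / SECTOR * SECTOR;
    if (b->hdr.nobj == HDR_SLOTS || b->used + need > sizeof b->data) return -1;
    memcpy(b->data + b->used, obj, size);
    b->hdr.vpage[b->hdr.nobj++] = vpage;
    int64_t sector = 1 + (int64_t)(b->used / SECTOR);  /* sector 0 holds the header */
    b->used += need;
    return sector;
}
```

Because a whole block is filled in DRAM before it is written, the SSD sees one large sequential write per round instead of many small in-place updates.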

As is well known, an SSD cannot overwrite data in place, because a block must be erased before it can be written again. Each dirty object must therefore be written to a new location, which turns its old location into garbage, so a garbage collection (GC) strategy is needed. GC runs as a background task of the runtime system when the SSD does not have enough free blocks for evicting dirty objects. The system maintains a GC table for the whole SSD that records the volume of garbage in each block. When an entry of the hierarchical object table is updated and a former location exists, the old copy becomes an invalid object of a certain size in that block, and the corresponding element of the GC table is increased accordingly. An SSD block is selected for recycling when its garbage volume exceeds a predefined threshold. After all valid objects in it have been gathered into a new SSD block, the garbage block can be reused as a free block.
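The GC table bookkeeping can be sketched with one counter per block. The block count and size are hypothetical, and picking the block with the most garbage among those above the threshold is our own greedy assumption; the text only requires that the garbage volume exceed a predefined threshold. The function names are hypothetical.

```c
#include <stdint.h>

#define NBLOCKS 1024u                /* hypothetical number of SSD log blocks */
#define BLOCK_BYTES (64u * 1024u)    /* hypothetical block size */

typedef struct { uint32_t garbage[NBLOCKS]; } gc_table;   /* invalid bytes per block */

/* Called when an object's table entry is updated: if the object had a former
 * SSD copy (old_block >= 0), that copy becomes garbage in its block. */
static void gc_invalidate(gc_table *g, int64_t old_block, uint32_t size)
{
    if (old_block >= 0 && (uint64_t)old_block < NBLOCKS) g->garbage[old_block] += size;
}

/* Greedy choice (assumption): the block with the most garbage, provided it
 * exceeds `threshold` bytes; -1 when no block is worth recycling yet. */
static int64_t gc_pick_victim(const gc_table *g, uint32_t threshold)
{
    int64_t best = -1;
    uint32_t best_garbage = threshold;
    for (uint32_t b = 0; b < NBLOCKS; b++) {
        if (g->garbage[b] > best_garbage) { best = b; best_garbage = g->garbage[b]; }
    }
    return best;
}

/* After the valid objects of block `b` have been copied out, it is free again. */
static void gc_reclaim(gc_table *g, int64_t b)
{
    if (b >= 0 && (uint64_t)b < NBLOCKS) g->garbage[b] = 0;
}
```

With a threshold of half a block (32 KB), a block holding 40 000 bytes of garbage is recycled while one holding 20 000 bytes is left alone.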

2 Implementation

2.1 API

A runtime library implementing the proposed hybrid main memory architecture replaces the system standard library. It provides application interfaces analogous to those of the standard library, such as malloc and free. The interfaces are as follows.

1) void sysInit(), void sysQuit(): sysInit initializes the metadata of the hybrid main memory, and sysQuit releases all resources before the application exits.

2) void* nv_malloc(size_t userReqSize): allocates userReqSize bytes of memory for one object using the proposed memory allocator.

3) void* nv_calloc(size_t nmemb, size_t userReqSize): allocates an array of nmemb objects, each of userReqSize bytes.

4) void* nv_realloc(void* vaddr, size_t userReqSize): resizes the memory space pointed to by vaddr to userReqSize bytes.

5) void nv_free(void* vaddr): deallocates the object at virtual address vaddr. The physical memory of the object is returned to the corresponding free list according to its metadata stored in the object table.
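The call sequence an application would follow can be sketched as below. The six functions are stand-in stubs that simply forward to the C standard library so that the example compiles; they are not the HMM_alloc implementation and provide no DRAM/SSD tiering. The `record` type and `demo` function are hypothetical application code.

```c
#include <stdlib.h>
#include <string.h>

/* Stand-in stubs (NOT HMM_alloc): forward to the C library so this compiles. */
static void sysInit(void) {}
static void sysQuit(void) {}
static void *nv_malloc(size_t userReqSize) { return malloc(userReqSize); }
static void *nv_calloc(size_t nmemb, size_t userReqSize) { return calloc(nmemb, userReqSize); }
static void *nv_realloc(void *vaddr, size_t userReqSize) { return realloc(vaddr, userReqSize); }
static void nv_free(void *vaddr) { free(vaddr); }

/* Hypothetical 256-byte key/value record allocated through the interfaces. */
typedef struct { char key[16]; char value[240]; } record;

/* Hypothetical application routine; returns 0 on success. */
static int demo(void)
{
    sysInit();                                        /* set up metadata first */
    record *r = nv_malloc(sizeof *r);                 /* one object */
    record *batch = nv_calloc(1000, sizeof(record));  /* 1000 zeroed objects */
    if (!r || !batch) { nv_free(r); nv_free(batch); sysQuit(); return -1; }
    strcpy(r->key, "user:42");
    record *grown = nv_realloc(r, 2 * sizeof *r);     /* contents are preserved */
    if (grown) r = grown;
    int status = (strcmp(r->key, "user:42") == 0 && batch[999].key[0] == '\0') ? 0 : -1;
    nv_free(batch);
    nv_free(r);
    sysQuit();                                        /* release before exit */
    return status;
}
```

Swapping the stubs for the real library would leave `demo` unchanged, which is the point of offering malloc-like signatures.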

2.2 The kernel module

Applications call the proposed library to allocate hybrid memory transparently. To track data accesses after read/write-protected virtual pages have been allocated to the applications, the library replaces the handler of the segmentation fault signal with a new function that handles these page faults. When a protected virtual page is accessed, it triggers this page fault handler. Appropriate physical micro-pages are then allocated to the objects, and a kernel module is called to modify the PTEs so that the physical addresses of the micro-pages are mapped to multiple virtual pages. This enables the proposed architecture to provide thread-safe operations for concurrent applications. Moreover, the same kernel module can check and modify the access and dirty bits in page table entries, which are used, respectively, to manage the two FIFO lists and to write back dirty objects. To reduce the communication overhead between user and kernel space, a message buffer packs a number of PTE access requests into one message.
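The user-space half of this mechanism, trapping the first access to a protected page, can be sketched with standard POSIX calls (mmap, sigaction with SA_SIGINFO, mprotect). The sketch assumes Linux, where touching a PROT_NONE page raises SIGSEGV with the faulting address in si_addr. Instead of calling a kernel module to fill the PTE with a micro-page, it simply unprotects the faulting page, and calling mprotect() from a signal handler is common Linux practice rather than something POSIX guarantees. `region_init` and `on_fault` are hypothetical names.

```c
#define _GNU_SOURCE
#include <signal.h>
#include <stdint.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

static char *region;                  /* protected virtual pages given to the app */
static size_t region_len;
static size_t page_size;
static volatile sig_atomic_t faults;  /* first-touch faults handled so far */

static void on_fault(int sig, siginfo_t *si, void *ctx)
{
    (void)ctx;
    uintptr_t addr = (uintptr_t)si->si_addr, base = (uintptr_t)region;
    if (addr < base || addr >= base + region_len) {
        signal(sig, SIG_DFL);         /* not ours: the retried access crashes normally */
        return;
    }
    uintptr_t page = base + (addr - base) / page_size * page_size;
    /* HMM_alloc would pick a micro-page, load the object from SSD and have its
     * kernel module fill the PTE; this sketch only unprotects the page. */
    mprotect((void *)page, page_size, PROT_READ | PROT_WRITE);
    faults++;
}

/* Reserve `npages` inaccessible pages and install the fault handler. */
static int region_init(size_t npages)
{
    page_size = (size_t)sysconf(_SC_PAGESIZE);
    region_len = npages * page_size;
    region = mmap(NULL, region_len, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (region == MAP_FAILED) return -1;
    struct sigaction sa;
    memset(&sa, 0, sizeof sa);
    sa.sa_sigaction = on_fault;
    sa.sa_flags = SA_SIGINFO;
    sigemptyset(&sa.sa_mask);
    return sigaction(SIGSEGV, &sa, NULL);
}
```

After the handler returns, the faulting instruction is retried and succeeds, so the application never sees the fault; a second access to the same page costs nothing extra.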

2.3 Support for multithreading

To serve concurrent requests from applications, multithreaded operation is designed carefully to reduce conflicts between tasks such as the page fault handler, SSD writing and garbage collection. SSD logical pages are locked while data objects are prefetched from the SSD bulk store, whereas SSD logical blocks are locked while dirty objects are combined into a whole block ready for write-back. All write operations are performed in the background.

2.4 Applicable scenarios

HMM_alloc targets memory-intensive applications such as in-memory databases. To speed up data access, these applications keep user data in main memory rather than on a storage device, which requires the computer system to provide enough physical memory for the user data. However, owing to the constraints of DRAM and of the computer system, main memory capacity cannot be expanded without limit. When physical memory is about to be exhausted, data exchange between DRAM and the swap device begins. In these circumstances, HMM_alloc reduces the adverse effect of data exchange on system performance by avoiding unnecessary data exchange.

3 Performance evaluation

3.1 Experimental setup

All experiments are conducted on a server with a quad-core (eight-thread) Intel i7-4790 3.6 GHz processor and 6 GB of DRAM. A 64-bit Linux 2.6.32 kernel is installed, and a 120 GB Kingston SSD serves both as the SSD swap device and as the proposed main memory extension. If main memory is sufficient for an application, there is no need for the hybrid main memory, because its performance would be the same as with the standard library. Therefore, data access performance is evaluated only when main memory capacity is insufficient for a large-scale data set. To simulate out-of-core DRAM accesses, the DRAM capacity is restricted to 64 MB so that only a small fraction of the objects can reside in DRAM. The specific parameters of the evaluation are given in Table 1.

Table 1 The parameters of the evaluation

Microbenchmarks test the basic performance of random accesses with fixed object sizes ranging from 256 bytes to 4 KB. An array with a total size of 10 GB is loaded onto the SSD, and three access mixes are run: all reads, all writes, and a read/write ratio of 80:20.

Figure 4 shows the average latency of HMM_alloc and SSD-swap at different object sizes. Objects allocated by the HMM_alloc runtime are accessed much faster than in the SSD-swap system: HMM_alloc outperforms SSD-swap by a factor of 1.23-1.78, depending on object size and read/write ratio. As object size increases, the benefit of the hybrid memory decreases, because less invalid data is transferred per read or write under SSD-swap. When the object size reaches one page, the improvement drops to 23%, which comes from the log-structured SSD store and background flushing of dirty data.

Fig. 4 Performance of microbenchmarks with fixed object size

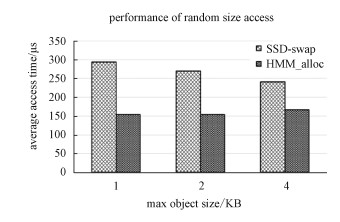

Random object size allocation requests are also used to test the proposed hybrid memory. As shown in Fig. 5, three ranges of object sizes are tested. Even though random sizes can waste memory space, HMM_alloc still outperforms the SSD-swap system by up to 47.5% in average access time.

Fig. 5 Performance of microbenchmarks for random size allocations

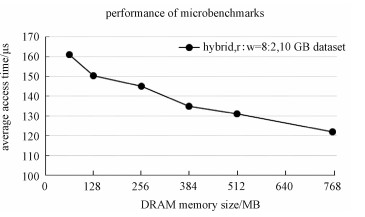

The hybrid main memory consists of a DRAM device and a NAND flash-based SSD. DRAM caches hot objects and provides fast access to in-core data, while the SSD extends capacity and reduces energy consumption. To test the benefit of the DRAM cache, the reserved DRAM size is varied from 32 MB to 768 MB for a fixed-size data set. When 1 GB of 512-byte objects is accessed randomly from a 10 GB data set, a larger DRAM improves performance by caching as many hot objects as possible and reducing I/O traffic to the SSD. Figure 6 shows a linear relationship between DRAM size and latency. The DRAM capacity of a system therefore depends on the trade-off between cost and performance required by the application.

Fig. 6 Performance of microbenchmarks for various DRAM size

To demonstrate the physical micro-page management of HMM_alloc, a sorting workload with different fixed object sizes is tested. Initially, 10 GB of object records are spread across the whole SSD. A small number of these records are then selected at random and sorted in place using quicksort. Figure 7 presents the results at different object sizes. HMM_alloc outperforms the SSD-swap system by up to 1.3 times, because its micro-page policy uses physical memory more effectively and caches more hot objects when objects are smaller than 4 KB. In contrast, SSD-swap can only fetch and flush data at page granularity, so in the worst case, with 256-byte objects, it caches only 1/16 as many hot objects (256 B out of each 4 KB page). As object size increases, the gap between HMM_alloc and SSD-swap narrows until the average access latency of HMM_alloc approaches that of SSD-swap.

Fig. 7 Performance comparison of quick-sorting workload

To verify performance under write-heavy workloads, a Bloom filter is used. A Bloom filter tests whether an element is in a set; it consists of a long bit vector and a series of hash functions, each of which maps an element to a location in the vector. The total size of the Bloom filter is 80 billion bits (10 GB), and most of the data resides on the flash-based SSD because of the restricted DRAM capacity. Random keys are then checked and inserted using 8 hash functions. Figure 8 shows the results. HMM_alloc outperforms the SSD-swap system and reduces the average access latency by up to 42%. With SSD-swap, checking one element of the huge bitmap requires fetching a whole page from the swap device, and modifying a single bit causes a whole page, rather than one sub-page object, to be written back. Thus, SSD-swap produces many more dirty physical pages and more write-backs to the SSD, whereas HMM_alloc reduces latency by evicting dirty data at object granularity and coalescing multiple dirty objects into one block.
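For reference, a minimal Bloom filter with 8 probes is sketched below. The text does not specify the hash functions; this sketch assumes FNV-1a plus a splitmix64 finalizer combined by double hashing (probe i = h1 + i·h2 mod m), and it uses calloc where the experiment would allocate the bit vector through HMM_alloc. All names are hypothetical. Each insertion performs 8 single-bit writes at effectively random positions, which is why this workload is write-heavy and scattered.

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

#define NUM_HASHES 8   /* as in the experiment */

typedef struct { uint8_t *bits; uint64_t nbits; } bloom;

static uint64_t fnv1a64(const void *key, size_t len)
{
    const uint8_t *p = key;
    uint64_t h = 14695981039346656037ULL;              /* FNV-1a offset basis */
    for (size_t i = 0; i < len; i++) { h ^= p[i]; h *= 1099511628211ULL; }
    return h;
}

static uint64_t mix64(uint64_t x)                      /* splitmix64 finalizer */
{
    x ^= x >> 30; x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27; x *= 0x94d049bb133111ebULL;
    return x ^ (x >> 31);
}

static int bloom_init(bloom *b, uint64_t nbits)
{
    b->nbits = nbits;
    b->bits = calloc((size_t)((nbits + 7) / 8), 1);    /* stand-in for hybrid memory */
    return b->bits ? 0 : -1;
}

/* Double hashing: probe i lands at (h1 + i * h2) mod nbits. */
static uint64_t bloom_probe(const bloom *b, uint64_t h1, uint64_t h2, int i)
{
    return (h1 + (uint64_t)i * h2) % b->nbits;
}

static void bloom_add(bloom *b, const void *key, size_t len)
{
    uint64_t h1 = fnv1a64(key, len), h2 = mix64(h1) | 1;
    for (int i = 0; i < NUM_HASHES; i++) {
        uint64_t pos = bloom_probe(b, h1, h2, i);
        b->bits[pos / 8] |= (uint8_t)(1u << (pos % 8)); /* one scattered bit write */
    }
}

/* False means definitely absent; true means possibly present. */
static bool bloom_maybe_contains(const bloom *b, const void *key, size_t len)
{
    uint64_t h1 = fnv1a64(key, len), h2 = mix64(h1) | 1;
    for (int i = 0; i < NUM_HASHES; i++) {
        uint64_t pos = bloom_probe(b, h1, h2, i);
        if (!(b->bits[pos / 8] & (1u << (pos % 8)))) return false;
    }
    return true;
}
```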

Fig. 8 Performance comparison of bloomfilter insertions

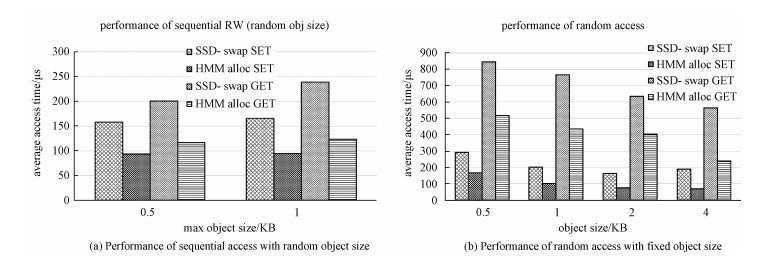

To evaluate the benefit for existing in-memory database servers, memcached is chosen as a representative. Memcached is a high-performance, distributed memory object caching system that is widely deployed in data-center servers. For ease of use, the object allocator inside memcached is replaced by the proposed memory allocator. The experiments are conducted in two settings, as shown in Fig. 9: one uses random object sizes with sequential access, and the other uses random access with a fixed object size. Compared with SSD-swap, HMM_alloc improves performance by 43%-61% in the SET phase and by 37%-58% in the GET phase, as shown in Fig. 9(b). Memcached caches key/value objects, and HMM_alloc manages memory at object granularity, so HMM_alloc serves memcached well. Extending memory capacity in the proposed way therefore lets memcached cache more database requests and reduces the number of client accesses to the back-end databases.

Fig. 9 Performance comparison of running memcached

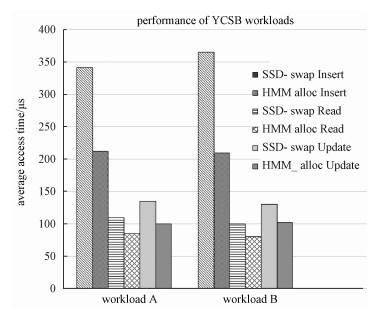

We also use YCSB (Yahoo! Cloud Serving Benchmark) to evaluate the benefit for a memcached server equipped with HMM_alloc. Workloads A and B of YCSB, both with a Zipfian request distribution, have different read/write ratios: workload A has 50% reads and 50% updates, and workload B has 95% reads and 5% updates. First, 10 million records are inserted into the database; then 1 million operations (reads/updates) are run on it. As shown in Fig. 10, compared with SSD-swap, HMM_alloc improves performance by 61.9%-73.3% for inserts, 25.0%-29.1% for reads, and 26.9%-32.6% for updates.

Fig. 10 Performance comparison of running memcached (YCSB)

The metadata overhead of HMM_alloc consists mainly of two parts.

1) HMM_alloc manages each micro-page with a 24-byte structure. When all user data are smaller than the minimum micro-page size $C_{\min}$, all physical pages are divided at the minimum micro-page granularity, and the number of micro-pages reaches its maximum. In this case, the metadata overhead of managing physical memory also reaches its maximum, $P_{\mathrm{meta\_max}}$:

$$ P_{\mathrm{meta\_max}} = \frac{M}{C_{\min}} \times 24\ \mathrm{B}, \tag{1} $$

where $M$ is the capacity of physical memory.

2) HMM_alloc uses a hierarchical page table indexed by virtual page address to store the size, offset and SSD location of each object. Each table entry is 8 bytes. As the number of objects $N_{\mathrm{obj}}$ increases, the total size of the page table $T_{\mathrm{meta}}$ grows linearly:

$$ T_{\mathrm{meta}} = N_{\mathrm{obj}} \times 8\ \mathrm{B}. \tag{2} $$

Compared with the original memory management system, the extra metadata overhead of HMM_alloc comes mainly from the hierarchical page table. Although HMM_alloc increases metadata overhead, it improves system performance significantly by reducing unnecessary data exchange.
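As a worked example of Eqs. (1) and (2), assume (hypothetically, since the minimum micro-page size is not stated) $C_{\min} = 256$ B, the smallest object size used in the microbenchmarks, together with the 64 MB DRAM limit of the experiments; for the page table, take the 10 GB data set of 512-byte objects used in the DRAM-size experiment:

$$
\begin{aligned}
P_{\mathrm{meta\_max}} &= \frac{64 \times 2^{20}\ \mathrm{B}}{256\ \mathrm{B}} \times 24\ \mathrm{B} = 262\,144 \times 24\ \mathrm{B} = 6\ \mathrm{MB},\\
T_{\mathrm{meta}} &= \frac{10 \times 2^{30}\ \mathrm{B}}{512\ \mathrm{B}} \times 8\ \mathrm{B} = 20\,971\,520 \times 8\ \mathrm{B} = 160\ \mathrm{MB}.
\end{aligned}
$$

Under these assumptions, the micro-page metadata is bounded by the DRAM size (about 9.4% of $M$), while the page table scales with the number of objects, which is why Eq. (2) dominates for large data sets of small objects.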

4 Conclusions

Many applications keep huge amounts of data in main memory to speed up data access. However, computer systems cannot provide large main memory capacity because of the hardware cost and power consumption of DRAM. Fortunately, NAND flash-based memory, with its high capacity, low price and low power, offers an opportunity to alleviate these drawbacks of DRAM. We therefore propose HMM_alloc, a hybrid main memory architecture that uses an SSD to augment DRAM. It provides interfaces through which applications access data transparently in a byte-addressable manner. DRAM serves as a hot-data cache managed at object granularity, whereas the SSD is organized as a log-structured store that serves as a secondary dynamic partition for user data. Comprehensive experiments demonstrate that HMM_alloc significantly improves the performance of memory-intensive applications compared with a system that uses the SSD as a swap device.

[1] Wang Q. Research on novel memory system based on non-volatile memory technology[D]. Beijing: Institute of Microelectronics of the Chinese Academy of Sciences, 2013 (in Chinese).

[2] Li G, Chen L, Hao X R. Linux memory management algorithm based on hybrid memory architecture PDRAM[J]. Microelectronics & Computer, 2014, 31(5): 14-20.

[3] Ved S N, Awasthi M. Exploring non-volatile main memory architectures for handheld devices[C]//Proceedings of Design, Automation & Test in Europe Conference & Exhibition. Dresden: IEEE, 2018: 1528-1531.

[4] Saxena M, Swift M M. FlashVM: virtual memory management on flash[C]//Proceedings of USENIX Annual Technical Conference. Boston: USENIX Association, 2010: 14.

[5] Guo W C, Chen K, Feng H, et al. MARS: mobile application relaunching speed-up through flash-aware page swapping[J]. IEEE Transactions on Computers, 2016, 65(3): 916-928. DOI:10.1109/TC.2015.2428692.

[6] Yoon S K, Yoon Y S, Burgstaller B, et al. Self-learnable cluster-based prefetching method for DRAM-flash hybrid main memory architecture[J]. ACM Journal on Emerging Technologies in Computing Systems, 2019, 15(1): 10.

[7] Wang C, Vazhkudai S S, Max S, et al. NVMalloc: exposing an aggregate SSD store as a memory partition in extreme-scale machines[C]//Proceedings of Parallel & Distributed Processing Symposium. Shanghai: IEEE, 2012: 957-968.

[8] Van E B, Hsieh H, Ames S, et al. DI-MMAP-a scalable memory-map runtime for out-of-core data-intensive applications[J]. Cluster Computing, 2015, 18(1): 15-28. DOI:10.1007/s10586-013-0309-0.

[9] Mogul J C, Argollo E, Shah M, et al. Operating system support for NVM+DRAM hybrid main memory[C]//Proceedings of Workshop on Hot Topics in Operating Systems. Monte Verità: USENIX Association, 2009: 1-8.

[10] Xu J, Swanson S. NOVA: a log-structured file system for hybrid volatile/non-volatile main memories[C]//Proceedings of the 14th USENIX Conference on File and Storage Technologies. Santa Clara: USENIX Association, 2016: 323-338.

[11] Yoshida E, Kazama S, Kuwamura S, et al. Memory expansion technology for large-scale data processing using software-controlled SSD[C]//2018 IEEE Symposium on VLSI Circuits. Honolulu: IEEE, 2018: 59-60.

[12] Meza J, Luo Y X, Khan S, et al. A case for efficient hardware/software cooperative management of storage and memory[C]//Proceedings of the Workshop on Energy-Efficient Design. 2013: 1-7.

[13] Kawata H, Oikawa S. A feasibility study of hybrid DRAM and flash memory management unit[C]//Proceedings of the 3rd International Conference on Advanced Applied Informatics. Kitakyushu: IEEE, 2014: 694-698.

[14] Badam A, Pai V S. SSDAlloc: hybrid SSD/RAM memory management made easy[C]//Proceedings of the 8th USENIX Conference on Networked Systems Design and Implementation. Boston: USENIX Association, 2011: 211-224.

[15] Badam A, Pai V S, Nellans D W. Better flash access via shape-shifting virtual memory pages[C]//Proceedings of the 1st Timely Results on Operating Systems Principles Conference. New York: ACM, 2013: 1-14.

[16] Liu T, Nie X F, Xing J W, et al. Memory management in worm simulation based on small object memory allocation technique on the GTNetS[J]. Journal of University of Chinese Academy of Sciences, 2012, 29(1): 131-135.

[17] Wang L Y, Chen L, Hao X R. LAB-LRU: a life-aware buffer management algorithm for NAND flash memory[J]. IEICE Transactions on Information and Systems, 2016, 99(12): 3172-3176.

2021, Vol. 38

2021, Vol. 38