2. University of Chinese Academy of Sciences, Beijing 100049, China


To perform low-cost space collision avoidance maneuvers, space debris must be localized reliably. Over the last decade, the ground-based space surveillance network has remained the most powerful system for this task, especially its Electro-Optic (EO) telescopes, exemplified by the 3.5 m DARPA telescope and the 1.0 m Lockheed Martin SPOT telescope[1-2]. However, long-range EO astrometry is not robust under variable illumination conditions. Moreover, high-altitude space debris and small fragments usually appear very faint on the image, so they are difficult to detect automatically. As a result, measurements may be seriously missed, which makes conventional tracking techniques inefficient. In this article, we propose a robust detection and tracking strategy for small and faint space debris that integrates intelligent moving-object extraction with a Monte-Carlo-based Track-before-Detect (TBD) framework. TBD frameworks have been applied with varying degrees of success in both space and military missions that require tracking faint targets[3-4]. The primary advantage of TBD is that it does not need to store and process multiple scans of data: it only maintains hypotheses for extended target tracks and updates them with the measurements directly, without a detection threshold. In long-range observation, the kinematic model of the target can be described by a linear stochastic process, which may also include the target amplitude. The primary difficulty of TBD, however, is the measurement model, which depends on the sensor type: it is a highly non-linear function of the target state and directly affects the estimated target position. Several TBD frameworks have been developed recently, such as dynamic programming and the polar Hough transform[5-6].
However, in real EO-based observations, the background noise is frequently dynamic and/or non-Gaussian. In addition, over a short period of time, moving space debris and fixed stars have a similar point-spread function (PSF) appearance, so a moving-object extraction algorithm is required. Traditionally, median image subtraction has been applied in astrometry[7], where it was first described in detail for static and/or Gaussian backgrounds. Its extraction result is the per-pixel difference between the current frame and a reference frame called the background model.

To overcome this limitation, we propose a method that combines moving-object extraction based on a running Gaussian average (RGA) adapted by a neural-network algorithm (RGA-NN) with a TBD framework. The NN algorithm automatically optimizes the dynamics of the astronomical background model, which is consistent with the simulation results of the previous work[8]. The proposed strategy not only suppresses background interference at low computational cost but also avoids the burden and human bias of manual parameter tuning. The remainder of this article is organized as follows. Section 2 describes the RGA-NN extraction and the TBD framework developed for real-time observations. Section 3 presents the specifications of the APOSOS telescope, the astronomical image datasets used for validation, and the experimental results. Finally, Section 4 concludes this article.

2 Methodology

Technically speaking, RGA is a computer-vision technique that improves on static background subtraction and has been widely used in the initial stages of moving-object detection and tracking. Static background subtraction does not perform well on the dynamic backgrounds found in real environments. The basic RGA process can be expressed as follows:

| $ \delta _N^{\left( {x,y} \right)} = \frac{1}{N}\sum\limits_{k = 1}^N {I_k^{\left( {x,y} \right)}} , $ | (1) |

where N is the number of frames used to construct the astronomical background δN(x, y) in two-dimensional spatial coordinates (x, y), and Ik(x, y) is the intensity of the k-th image (k = 1, 2, …, N). After δN(x, y) has been constructed, we calculate at each time t the absolute difference image Dt(x, y) between the current image It(x, y) and the background model δt−1(x, y). The background model is then updated to δt(x, y) using an empirical weight α ∈ [0, 1], which depends on the scene variability. In the traditional RGA, the intensity level of Dt(x, y) falls into three cases separated by two thresholds, the upper limit τup and the lower limit τlw:

| $ \delta _t^{\left( {x,y} \right)} = \left\{ {\begin{array}{*{20}{c}} \begin{array}{l} \delta _{t - 1}^{\left( {x,y} \right)}\\ \alpha I_t^{\left( {x,y} \right)} + \left( {1 - \alpha } \right)\delta _{t - 1}^{\left( {x,y} \right)}\\ I_t^{\left( {x,y} \right)} \end{array}&\begin{array}{l} if\;D_t^{\left( {x,y} \right)} \ge {\tau _{up}}\\ if\;{\tau _{lw}} \le D_t^{\left( {x,y} \right)} \le {\tau _{up}}\\ if\;D_t^{\left( {x,y} \right)} \le {\tau _{lw}} \end{array} \end{array}} \right. $ | (2) |
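A minimal NumPy sketch of Eqs. (1) and (2) follows. It assumes Dt(x, y) = |It(x, y) − δt−1(x, y)| and resolves the equality cases at the thresholds by our own choice (τlw ≤ Dt < τup blends); the function names are illustrative and not taken from the original implementation.

```python
import numpy as np

def init_background(frames):
    """Eq. (1): pixel-wise mean of the first N frames."""
    return np.mean(np.asarray(frames, dtype=float), axis=0)

def rga_update(background, frame, alpha, tau_lw, tau_up):
    """Eq. (2): three-case running Gaussian average update.

    Returns the updated background delta_t and the difference image D_t.
    """
    prev = np.asarray(background, dtype=float)
    frame = np.asarray(frame, dtype=float)
    diff = np.abs(frame - prev)          # assumed D_t = |I_t - delta_{t-1}|
    updated = prev.copy()                # case D_t >= tau_up: keep the old background
    blend = (diff >= tau_lw) & (diff < tau_up)
    # case tau_lw <= D_t < tau_up: blend the new frame with weight alpha
    updated[blend] = alpha * frame[blend] + (1.0 - alpha) * prev[blend]
    low = diff < tau_lw                  # case D_t < tau_lw: adopt the new frame
    updated[low] = frame[low]
    return updated, diff
```

Here α is a fixed argument; in the RGA-NN scheme it would instead be supplied by the NN described next.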

Typically, α is adjusted manually according to visual interpretation of the scene variability. This process is complicated, and readjustment is time-consuming. To overcome this limitation, we adopt a supervised feed-forward perceptron NN algorithm. Its structure is built from two basic components, neurons and activation transfer functions, which are interconnected into a complex network loosely analogous to the nervous system of the human brain. The fundamental operation of a neuron is:

| $ \begin{array}{l} \eta = W{x_i} + b,\\ a = f\left( \eta \right) = f\left( {W{x_i} + b} \right), \end{array} $ | (3) |

where η is the net input of a neuron, xi = [x1, x2, ..., xκ]′ is the input vector, and W and b are the connecting weight matrix and bias vector between neurons, respectively. In Eq. (3), a is the activation state and f(η) is the activation transfer function.
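The neuron operation of Eq. (3) can be sketched in NumPy as below. The logistic activation, the 2-5-4 layer sizes, and the random (untrained) weights are assumptions for illustration only; the four outputs are read as scores for the classes A-D, and how a predicted class is converted into α is not shown.

```python
import numpy as np

def sigmoid(eta):
    """Logistic activation transfer function f(eta)."""
    return 1.0 / (1.0 + np.exp(-eta))

def layer(x, W, b, f=sigmoid):
    """One layer of Eq. (3): eta = W x + b, a = f(eta)."""
    eta = W @ x + b
    return f(eta)

def forward(x, params):
    """Feed-forward pass through a list of (W, b) layers."""
    a = np.asarray(x, dtype=float)
    for W, b in params:
        a = layer(a, W, b)
    return a

# Hypothetical, untrained network: 2 inputs -> 5 hidden -> 4 outputs.
# Inputs are (scaled D_t, scaled SCR_i); outputs score classes A, B, C, D.
rng = np.random.default_rng(0)
params = [
    (rng.normal(size=(5, 2)), np.zeros(5)),
    (rng.normal(size=(4, 5)), np.zeros(4)),
]
scores = forward([0.3, 0.7], params)
label = "ABCD"[int(np.argmax(scores))]
```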

This adaptive algorithm is designed to adjust α in Eq. (2) automatically from two input parameters: the intensity level of Dt(x, y) and the signal-to-clutter ratio of the input image (SCRi). The image SCR is commonly used to measure small-target extraction performance and is defined as:

| $ SCR = \frac{{\left| {{\mu _{ob}} - {\mu _{bk}}} \right|}}{{\sigma _{bk}^{ROI}}}, $ | (4) |

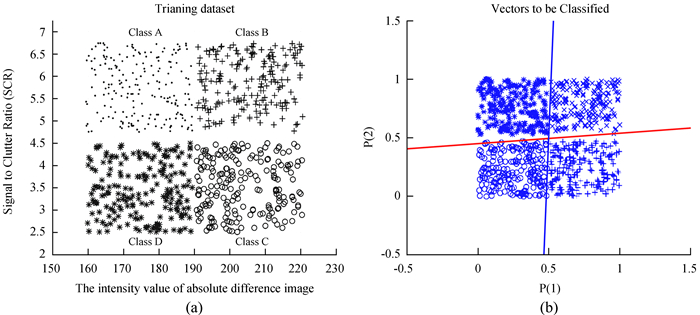

where μob is the average intensity of the object of interest, μbk is the average background intensity, and σbkROI is the standard deviation (STD) of the background intensity within the specified region of interest (ROI), excluding the object region. The training dataset of the NN algorithm consists of 200 points generated randomly and symmetrically from a probability density function (pdf) and pre-labelled into four classes, large, normal, small, and zero, denoted A, B, C, and D, respectively, as shown in Fig. 1(a). To reduce the learning time, the training data are scaled to the range [0, 1] on both the horizontal and vertical axes, as shown in Fig. 1(b).

We combined the proposed RGA-NN with the tracking loop to improve the measurement data. For tracking a single faint space target, the TBD framework is a non-linear state-estimation method based on sequential Monte Carlo approximation, with prediction and update stages. It is especially suited to hybrid state-estimation problems solved with a Particle Filter (PF)[9], in which the state consists of a continuous part and a discrete part (presence or absence of the target). A conventional PF, by contrast, considers only the continuous part: it assumes that the target is always present and uses detections extracted by thresholding the output of the sensor's signal-processing unit. In the prediction stage, the kinematic model of moving space debris on the celestial sphere, projected onto spatial coordinates, is defined by the state vector xt = [px, vx, py, vy, Ω]′, where (px, py)t, (vx, vy)t, and Ωt are the position, velocity, and intensity level of the space debris, respectively. The state variables propagate according to a linear, time-invariant state transition matrix At with sampling interval T, as described by the linear stochastic equation:

| $ {x_{t + 1}} = {A_t}{x_t} + {w_t},{E_t} \in \left\{ {e,\bar e} \right\}. $ | (5) |


| Fig. 1 The RGA-NN training dataset: (a) 200 points randomly generated from the pdf; (b) the scaled vectors to be classified |

The state transition matrix At and the process-noise covariance Qt are

| $ A_t = \begin{bmatrix} 1 & T & 0 & 0 & 0\\ 0 & 1 & 0 & 0 & 0\\ 0 & 0 & 1 & T & 0\\ 0 & 0 & 0 & 1 & 0\\ 0 & 0 & 0 & 0 & 1 \end{bmatrix},\quad Q_t = \begin{bmatrix} \frac{\varphi_1}{3}T^3 & \frac{\varphi_1}{2}T^2 & 0 & 0 & 0\\ \frac{\varphi_1}{2}T^2 & \varphi_1 T & 0 & 0 & 0\\ 0 & 0 & \frac{\varphi_1}{3}T^3 & \frac{\varphi_1}{2}T^2 & 0\\ 0 & 0 & \frac{\varphi_1}{2}T^2 & \varphi_1 T & 0\\ 0 & 0 & 0 & 0 & \varphi_2 T \end{bmatrix}, $ |

where wt is zero-mean white Gaussian process noise with covariance matrix Qt, and φ1 and φ2 are the variances of the target acceleration and the return-intensity noise, respectively. The target situation Et is either existence (e) or non-existence (ē), and its evolution is described by a two-state Markov chain in the discrete-time domain[10].
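The prediction step of Eq. (5) can be sketched for a set of particles as below, with At and Qt built as above. The existence variable Et is propagated with a birth probability Pb and a death probability Pd, a common parameterization of the two-state Markov chain in particle-filter TBD that we assume here (the experiments later use Pb = Pd = 0.01); all helper names are hypothetical.

```python
import numpy as np

def transition_matrices(T, phi1, phi2):
    """A_t and Q_t of Eq. (5) for x_t = [p_x, v_x, p_y, v_y, Omega]'."""
    A_cv = np.array([[1.0, T], [0.0, 1.0]])
    Q_cv = phi1 * np.array([[T**3 / 3, T**2 / 2], [T**2 / 2, T]])
    A = np.zeros((5, 5))
    Q = np.zeros((5, 5))
    for s in (slice(0, 2), slice(2, 4)):   # x block, then y block
        A[s, s] = A_cv
        Q[s, s] = Q_cv
    A[4, 4] = 1.0                           # intensity follows a random walk
    Q[4, 4] = phi2 * T
    return A, Q

def propagate(particles, A, Q, rng):
    """Eq. (5) for each row: x_{t+1} = A x_t + w_t, with w_t ~ N(0, Q)."""
    noise = rng.multivariate_normal(np.zeros(A.shape[0]), Q, size=len(particles))
    return particles @ A.T + noise

def propagate_existence(exists, p_birth, p_death, rng):
    """Two-state Markov chain for E_t (assumed birth/death parameterization)."""
    u = rng.random(len(exists))
    born = ~exists & (u < p_birth)        # absent -> present with probability p_birth
    survive = exists & (u >= p_death)     # present stays present with probability 1 - p_death
    return born | survive
```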

In theory, the two-state Markov chain is a stochastic process in which the probability of being in a given state at time t depends only on the state at time t−1 and not on any earlier state. The state sequence evolves through a regime transition (RT) process governed by a square transition probability matrix Π whose entries are all non-negative and whose columns each sum to 1. Given Et, the measurement zt(i, j) in cell (i, j) is:

| $ z_t^{(i,j)} = \begin{cases} h_t^{(i,j)}(x_t) + y_t, & E_t = e\\ y_t, & E_t = \bar e \end{cases}, $ | (6) |

where ht(i, j)(xt) is the intensity contribution of the space debris in cell (i, j), with Oi(xt) and Oj(xt) denoting the sets of observation cells indexed by i and j, and yt is the measurement noise in each cell. We assume that yt is independent of wt and is white Gaussian with zero mean and variance ε². In this study, the intensity distribution of the space debris is modeled by the PSF of a point target at the Cartesian position (px, py), so that ht(i, j)(xt) is given by:

| $ h_t^{(i,j)}(x_t) = \frac{\Delta_x \Delta_y \Omega_t}{2\pi \Sigma^2} \exp\left( -\frac{\left(p_t^x - i\Delta_x\right)^2 + \left(p_t^y - j\Delta_y\right)^2}{2\Sigma^2} \right), $ | (7) |

where Δx and Δy are the sizes of the observation cells Oi(xt) and Oj(xt) in spatial coordinates, and Σ is the blurring coefficient of the PSF. The likelihood of the measurement differs between the two situations: p(zt(i, j) | Et = ē) is the pdf of the background noise alone, and p(zt(i, j) | xt, Et = e) is the pdf of a target with state xt present in noise. The two pdfs are:

| $ \begin{aligned} p\left(z_t^{(i,j)} \mid E_t = \bar e\right) &\triangleq \mathcal{N}\left(z_t^{(i,j)}; 0, \varepsilon^2\right) = \frac{1}{\sqrt{2\pi\varepsilon^2}} \exp\left(-\frac{\left(z_t^{(i,j)}\right)^2}{2\varepsilon^2}\right);\\ p\left(z_t^{(i,j)} \mid x_t, E_t = e\right) &\triangleq \mathcal{N}\left(z_t^{(i,j)}; h_t^{(i,j)}(x_t), \varepsilon^2\right) = \frac{1}{\sqrt{2\pi\varepsilon^2}} \exp\left\{-\frac{\left[z_t^{(i,j)} - h_t^{(i,j)}(x_t)\right]^2}{2\varepsilon^2}\right\}. \end{aligned} $ | (8) |

When the target exists, it affects only the set of pixels around its position (px, py), so the likelihood of the whole frame zt can be approximated as:

| $ p\left(z_t \mid x_t, E_t = e\right) \approx \prod_{i \in O_i(x_t)} \prod_{j \in O_j(x_t)} p\left(z_t^{(i,j)} \mid x_t, E_t = e\right) \prod_{i \notin O_i(x_t)} \prod_{j \notin O_j(x_t)} p\left(z_t^{(i,j)} \mid E_t = \bar e\right). $ | (9) |

Thus, the likelihood ratio for each cell is defined as follows:

| $ \ell\left(z_t^{(i,j)} \mid x_t, E_t\right) = \begin{cases} \dfrac{p\left(z_t^{(i,j)} \mid x_t, E_t = e\right)}{p\left(z_t^{(i,j)} \mid E_t = \bar e\right)}, & E_t = e\\ 1, & E_t = \bar e \end{cases} $ | (10) |
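The per-cell ratio of Eq. (10) can be checked numerically against the simplified form exp{−h(h − 2z)/(2ε²)}, obtained by cancelling the common normalization of the two pdfs in Eq. (8). This is a small verification sketch in plain Python; the function names are illustrative.

```python
import math

def gauss_pdf(z, mean, var):
    """Scalar Gaussian pdf N(z; mean, var), as in Eq. (8)."""
    return math.exp(-(z - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def cell_ratio(z, h, var, exists=True):
    """Eq. (10): p(z | x, E=e) / p(z | E=ebar) if the target exists, else 1."""
    if not exists:
        return 1.0
    return gauss_pdf(z, h, var) / gauss_pdf(z, 0.0, var)

def cell_ratio_closed_form(z, h, var):
    """Same ratio after cancellation: exp(-h (h - 2z) / (2 var))."""
    return math.exp(-h * (h - 2.0 * z) / (2.0 * var))
```

At z = h/2 the two hypotheses are equally likely and the ratio is exactly 1; brighter cells (z > h/2) favor target existence.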

Substituting the pdfs of Eq. (8) into Eq. (10) and multiplying over the affected cells gives the likelihood ratio for target existence:

| $ \ell\left(z_t \mid x_t, E_t = e\right) = \prod_{i=1}^{n_l} \prod_{j=1}^{n_c} \exp\left\{ -\frac{h_t^{(i,j)}(x_t)\left[h_t^{(i,j)}(x_t) - 2 z_t^{(i,j)}\right]}{2\varepsilon^2} \right\}. $ | (11) |

The variables nl and nc are the numbers of pixels, along the i and j directions, in the observation cells affected by the space-debris intensity.
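To show how the pieces fit together, here is a compact sketch of one bootstrap particle-filter TBD step: existence and kinematic prediction (Eq. (5)), PSF intensities (Eq. (7)) on a small window of cells, the log of the likelihood ratio (Eq. (11)) as the particle weight, and systematic resampling. The unit cell size, the blur Σ = 0.7, the 5 × 5 window, and the uniform birth proposal are hypothetical choices, and estimating the existence probability as the total weight of existing particles follows the usual PF-TBD construction rather than a detail stated in the text.

```python
import numpy as np

def psf_patch(state, shape, dx=1.0, dy=1.0, sigma=0.7, half=2):
    """Eq. (7) on the (2*half+1)^2 cells nearest the target.

    Returns (rows, cols, h): row index j, column index i, and h_t^{(i,j)}(x_t).
    Cells outside the image are dropped.
    """
    px, _, py, _, omega = state
    ci, cj = int(round(px / dx)), int(round(py / dy))
    I, J = np.meshgrid(np.arange(ci - half, ci + half + 1),
                       np.arange(cj - half, cj + half + 1))
    keep = (I >= 0) & (I < shape[1]) & (J >= 0) & (J < shape[0])
    I, J = I[keep], J[keep]
    h = (dx * dy * omega / (2 * np.pi * sigma**2)) * np.exp(
        -((px - I * dx) ** 2 + (py - J * dy) ** 2) / (2 * sigma**2))
    return J, I, h

def log_lr(frame, state, eps2):
    """log of Eq. (11): sum of -h (h - 2z) / (2 eps^2) over the affected cells."""
    rows, cols, h = psf_patch(state, frame.shape)
    z = frame[rows, cols]
    return float(np.sum(-h * (h - 2 * z) / (2 * eps2)))

def uniform_birth(m, rng, shape=(32, 32)):
    """Hypothetical birth proposal: uniform position, velocity and intensity."""
    return np.column_stack([
        rng.uniform(0, shape[1], m), rng.uniform(-1, 1, m),
        rng.uniform(0, shape[0], m), rng.uniform(-1, 1, m),
        rng.uniform(5, 15, m)])

def pf_tbd_step(particles, exists, frame, A, Q, eps2, p_birth, p_death, rng,
                birth=uniform_birth):
    """One prediction/update/resampling cycle; returns (particles, exists, P(E=e))."""
    n = len(particles)
    # Predict E_t: absent particles are born with p_birth, present ones die with p_death.
    u = rng.random(n)
    born = ~exists & (u < p_birth)
    exists = (exists & (u >= p_death)) | born
    # Predict x_t with Eq. (5); newborn particles are redrawn from the birth proposal.
    particles = particles @ A.T + rng.multivariate_normal(np.zeros(A.shape[0]), Q, size=n)
    particles[born] = birth(int(born.sum()), rng)
    # Update: weight = likelihood ratio of Eq. (10); its log is 0 when E_t = ebar.
    logw = np.zeros(n)
    for k in np.flatnonzero(exists):
        logw[k] = log_lr(frame, particles[k], eps2)
    w = np.exp(logw - logw.max())
    w /= w.sum()
    p_exist = float(w[exists].sum())
    # Systematic resampling.
    positions = (rng.random() + np.arange(n)) / n
    idx = np.minimum(np.searchsorted(np.cumsum(w), positions), n - 1)
    return particles[idx], exists[idx], p_exist
```

Calling `pf_tbd_step` once per frame and recording `p_exist` gives a probability-of-existence curve of the kind plotted in Fig. 4.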

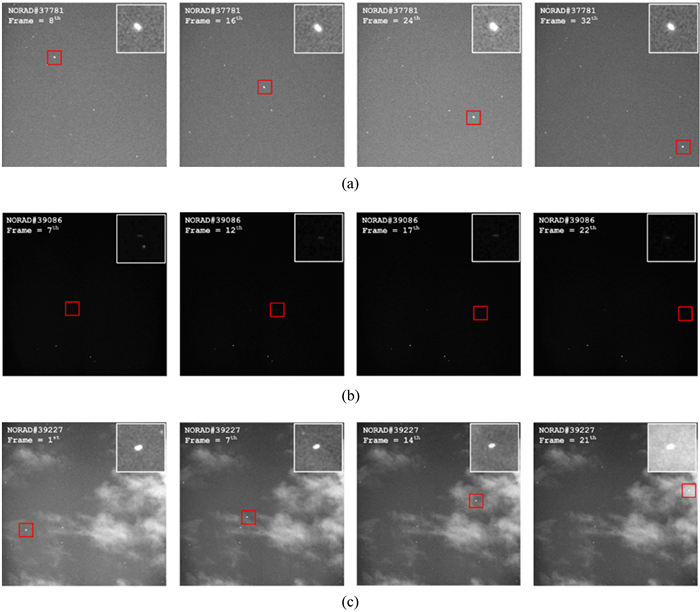

3 Specification of APOSOS telescope and image datasets

In our experiment, we used three datasets of real astronomical background images obtained by an azimuthal-mounted APOSOS telescope[11] equipped with a high-resolution complementary metal-oxide semiconductor (CMOS) sensor. The telescope has a 150-mm aperture, a 300-mm focal length, and a 3° × 3° field of view (FoV); the images were stored as gray-scale Flexible Image Transport System (FITS) files with intensity range [0, 255]. The detailed specifications are listed in Table 1. The observation site (WGS84) was located at the National Astronomical Observatories, CAS, Beijing, China (Lat. 43.845 00° and Long. 125.400 80°). For telescope guidance, the APOSOS telescope used two-line element (TLE) orbital parameters provided by the North American Aerospace Defense Command (NORAD) to calculate the orbital prediction. With an exposure time of ≈50 ms, the datasets cover three primary situations in space observation: (i) normal-sky tracking of 32 frames, (ii) small and faint space-object tracking of 25 frames, and (iii) tracking over a critically dynamic background of 25 frames. These are labelled datasets A, B, and C, respectively, as shown in Fig. 2.

Table 1 Specifications of the APOSOS telescope

| Optical system | | Mechanical tracking system | |
|---|---|---|---|
| Aperture | 150 mm | Angular velocity | 3°/s |
| Focal length | 300 mm | Tracking acceleration | 1-2°/s² |
| Spectral range | 500-850 nm | Pointing accuracy | 5″ (RMS) |
| Pixel size (radius) | < 6 µm | Elevation angle range | 0-90° |
| CMOS image sensor | | Timing system | |
| Resolution | 2 048 × 2 048 pixels | Frequency | 10 MHz, 1 s |
| Array size | 13.3 mm × 13.3 mm | Frequency precision | 10e-9 |
| Maximum frame rate | 20 frames/s | | |
| Field of view | > 3.0° × 3.0° | | |


| Fig. 2 (a)-(c) Tracking datasets A, B, and C of space objects with known orbital parameters. The red rectangles mark the observations, with a 64 × 64 pixel ROI around the space object |

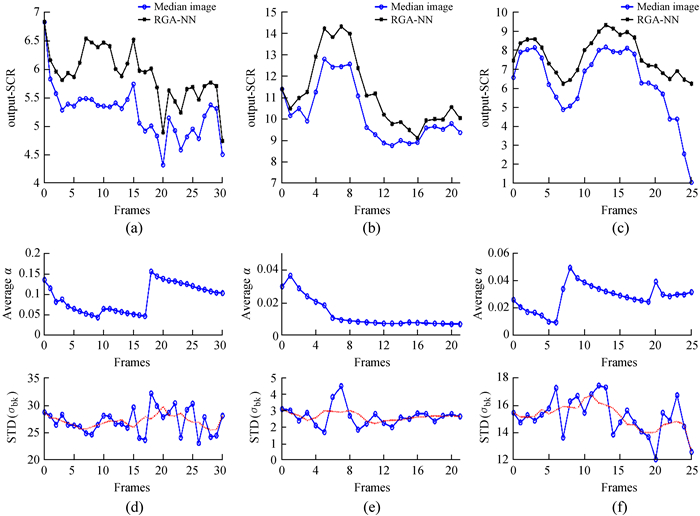

The background clutter is typically non-Gaussian and independent, both from pixel to pixel and from frame to frame, as can be seen from σbkROI at the bottom of Figs. 3(d), (e), and (f). A larger σbkROI degrades extraction performance, since by Eq. (4) the SCR is inversely proportional to it. In this experiment, the traditional median image subtraction technique[12] was compared with the proposed RGA-NN method in terms of background suppression on three real astronomical image sequences covering the three primary interference conditions during observation. Because the energy received from a long-distance target is very weak, the target occupies only one or a few pixels on the image and has a low dynamic range, as shown by the red rectangles in Figs. 2(a)-(c). The first experiment concerns the moving-object extraction procedure. Fig. 3(e) shows NN-α (top) and σbkROI (bottom) for dataset B, whose background is relatively stable (clear sky). In this case, NN-α is applied according to the conditions of Eq. (2).


| Fig. 3 (a)-(c) Comparison of extraction performance on datasets A, B, and C; (d)-(f) average NN-α under the second condition of Eq. (2) and STD within the specified ROI for datasets A, B, and C, respectively |

In contrast, for datasets A and C (Figs. 2(a) and 2(c)), normal and critical atmospheric dynamics, respectively, cause NN-α to be applied to the incoming frame It(x, y). Figs. 3(a)-(c) compare the extraction algorithms in terms of output SCR and show that the proposed RGA-NN approach outperforms the median technique under all three categories of background interference, especially with a dynamic background. The average output SCRs of the median and RGA-NN approaches are 5.245 and 6.324 for dataset A, 9.991 and 11.130 for dataset B, and 7.105 and 7.863 for dataset C, respectively. Extraction performance is further quantified in Table 2 by two factors: the improved SCR (iSCR), which is the ratio of the output SCR after processing to the input SCRi before processing, and the background suppression factor (BSF).

Table 2 Extraction performance of median image subtraction and RGA-NN on datasets A, B, and C

Dataset A

| Index | SCRi | Median SCR | Median iSCR | Median BSF | RGA-NN SCR | RGA-NN iSCR | RGA-NN BSF |
|---|---|---|---|---|---|---|---|
| 1 | 4.214 | 6.822 | 1.618 | 0.979 | 6.829 | 1.620 | 0.985 |
| 5 | 5.473 | 5.447 | 0.995 | 0.894 | 6.059 | 1.107 | 0.869 |
| 10 | 5.598 | 5.512 | 0.984 | 0.919 | 8.174 | 1.460 | 1.274 |
| 15 | 5.381 | 5.429 | 1.009 | 0.913 | 6.211 | 1.154 | 1.037 |
| 20 | 0.273 | 2.034 | 7.457 | 1.064 | 3.531 | 1.294 | 1.834 |
| 25 | 5.890 | 6.146 | 1.043 | 0.859 | 7.203 | 1.223 | 0.898 |
| 30 | 5.233 | 5.326 | 1.017 | 0.844 | 6.124 | 1.170 | 0.831 |
| Average | 4.618 | 5.245 | 2.017 | 0.924 | 6.324 | 2.953 | 1.100 |

Dataset B

| Index | SCRi | Median SCR | Median iSCR | Median BSF | RGA-NN SCR | RGA-NN iSCR | RGA-NN BSF |
|---|---|---|---|---|---|---|---|
| 1 | 8.824 | 11.40 | 1.292 | 1.135 | 11.40 | 1.292 | 1.135 |
| 5 | 9.653 | 9.718 | 1.006 | 0.824 | 10.65 | 1.104 | 1.002 |
| 10 | 11.44 | 9.824 | 0.858 | 0.671 | 13.13 | 1.147 | 0.964 |
| 15 | 7.189 | 7.807 | 1.086 | 1.088 | 8.224 | 1.144 | 1.081 |
| 20 | 10.30 | 11.20 | 1.087 | 0.981 | 12.24 | 1.188 | 1.033 |
| Average | 9.484 | 9.991 | 1.065 | 0.939 | 11.13 | 1.175 | 1.043 |

Dataset C

| Index | SCRi | Median SCR | Median iSCR | Median BSF | RGA-NN SCR | RGA-NN iSCR | RGA-NN BSF |
|---|---|---|---|---|---|---|---|
| 1 | 5.640 | 6.549 | 1.161 | 1.036 | 7.445 | 1.320 | 1.053 |
| 5 | 7.856 | 8.228 | 1.047 | 1.005 | 8.732 | 1.111 | 0.963 |
| 10 | 4.784 | 4.949 | 1.034 | 0.969 | 6.248 | 1.306 | 0.913 |
| 15 | 8.559 | 8.675 | 1.013 | 0.974 | 9.181 | 1.072 | 0.819 |
| 20 | 6.682 | 7.124 | 1.066 | 1.005 | 7.710 | 1.153 | 0.920 |
| Average | 6.704 | 7.105 | 1.064 | 0.997 | 7.863 | 1.192 | 0.920 |
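The extraction metrics can be computed as in the following sketch. SCR follows Eq. (4) and iSCR is the ratio of output to input SCR (consistent with the rows of Table 2); the text does not define BSF, so the common ratio of background STD before and after processing is assumed here. The masks and function names are illustrative.

```python
import numpy as np

def scr(image, obj_mask, roi_mask):
    """Eq. (4): |mu_ob - mu_bk| / sigma_bk, background taken in the ROI minus the object."""
    bk = roi_mask & ~obj_mask
    mu_ob = image[obj_mask].mean()
    mu_bk = image[bk].mean()
    return abs(mu_ob - mu_bk) / image[bk].std()

def iscr(scr_out, scr_in):
    """Improved SCR: output SCR after processing divided by input SCR_i."""
    return scr_out / scr_in

def bsf(image_in, image_out, obj_mask, roi_mask):
    """Assumed BSF definition: background STD before processing / after processing."""
    bk = roi_mask & ~obj_mask
    return image_in[bk].std() / image_out[bk].std()
```

For example, a one-pixel object of intensity 5 on a background alternating between 0 and 2 (mean 1, STD 1) has SCR = 4; halving the background raises the SCR to 9, so iSCR = 2.25 and, under the assumed definition, BSF = 2.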

To evaluate the tracking performance, the kinematics of the moving space target in spatial coordinates were designed using a dead-reckoning approach. We assumed that a single moving space-debris object appeared throughout the whole image sequence with constant velocity and crossed the critically dynamic background area, as shown for dataset C in Figs. 2(c-2)-(c-4). For star image registration, we used the USNO-B 1.0 star catalog to identify fixed stars as reference positions and transformed the estimated positions from 2D spatial coordinates to celestial coordinates by the least-squares method. The reference fixed stars were then recognized using δN(x, y). The tracking performance of the proposed method was evaluated in terms of accuracy and robustness by comparing two combined algorithms: median-TBD and RGA-NN TBD. Although tracking accuracy improves with the number of particles, the computation time also increases linearly. In the detection and tracking process, the TBD framework operated with an optimal number of particles np ≈ 750, giving an average processing time of about 40 ms. The uniform particle proposal densities were set as xpt(x, y) ~ ρ(-5, 5), xvt(x, y) ~ ρ(-3, 3), and xτt ~ ρ(-5, 5), and the target birth and death probabilities were Pb = Pd = 0.01. The observation cell size was Δx = Δy = 5. Tracking accuracy was computed as the root mean square error (RMSE).

In the first tracking experiment, we used datasets A and B, which represent a common low-clutter background and a faint space target, respectively. Dataset A includes a partly Gaussian interference background whose variance changes in the last fifteen frames, as illustrated by the STD clutter level in Fig. 3(d). The tracking performance on both datasets is shown in Figs. 4(a)-(b). Averaged over the frames in which the target is present, the RMSEs of median-TBD and RGA-NN TBD are 0.120 0° and 0.052 2° for dataset A, and 0.307 6° and 0.033 2° for dataset B, respectively. The second experiment focuses on dataset C. As shown in Fig. 3(f), the fitted data (red dashed line) show an increasing trend and a change in the STD clutter level. The object path is shown in Fig. 4(c). The non-Gaussian interference clearly affects position accuracy. The static background model of the median technique can initially approximate the position and orientation of the space target; however, once the clutter rises, the SCR declines, so the velocity bias of median-TBD grows continuously in both directions. In contrast, RGA-NN TBD keeps tracking under this condition with an average RMSE of 0.149 7°. Note that we did not adjust the TBD parameters for the new environment, in order to assess robustness.
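The least-squares registration step and the position RMSE can be sketched as an affine fit from pixel coordinates to sky coordinates using reference stars. The affine plate model, the use of already-projected (tangent-plane) sky coordinates in degrees, and the RMSE form sqrt(mean(Δx² + Δy²)) are assumptions; the text does not state which plate model or RMSE definition was used.

```python
import numpy as np

def fit_affine_plate(pixel_xy, sky_xy):
    """Least-squares affine map from pixel (x, y) to sky (xi, eta) coordinates.

    pixel_xy, sky_xy: (n, 2) arrays of reference-star positions (n >= 3).
    Returns a (3, 2) matrix C such that [x, y, 1] @ C ~= [xi, eta].
    """
    design = np.column_stack([pixel_xy, np.ones(len(pixel_xy))])
    coeffs, *_ = np.linalg.lstsq(design, sky_xy, rcond=None)
    return coeffs

def apply_plate(coeffs, pixel_xy):
    """Map pixel positions to sky coordinates with the fitted affine model."""
    pixel_xy = np.atleast_2d(pixel_xy)
    return np.column_stack([pixel_xy, np.ones(len(pixel_xy))]) @ coeffs

def position_rmse(estimated, truth):
    """Root mean square of the 2D position error over frames."""
    err = np.asarray(estimated, dtype=float) - np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean(np.sum(err**2, axis=1))))
```

With at least three non-collinear reference stars the affine fit is determined; more stars average out centroiding noise.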


| Fig. 4 (a)-(c) Position estimates for datasets A, B, and C given by the two methods; (d)-(f) probability of existence (bottom) and position RMSE (top) for datasets A, B, and C, respectively |

In summary, we presented a strategy for detecting and tracking small and faint space targets with EO-based telescopes. The proposed method combines two main stages. The first stage is an extraction approach in which an artificial NN automatically estimates the optimal empirical weight of the traditional RGA. The NN was trained on two primary parameters: the input SCR of the present image and the absolute difference in the stochastic RGA process. According to the results, this approach not only reduces the effect of a dynamic background but also improves extraction performance and robustness over the traditional median technique, as shown in Table 2: the iSCR of datasets A, B, and C obtained with RGA-NN improves by 23.2%, 9.6%, and 11.3%, respectively. The second stage integrates this extraction procedure with a TBD approach. The results demonstrate that the proposed combination provides a considerable improvement in tracking accuracy, precision, and robustness over median-TBD when the real background noise covariance changes.

Acknowledgements: The authors are thankful for the support from The World Academy of Sciences (TWAS), the Chinese Academy of Sciences (CAS), and Asia-Pacific Space Cooperation Organization (APSCO).

| [1] | WOODS D, SHAH R, JOHNSON J, et al. Asteroid detection with the Space Surveillance Telescope[C]//Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference. 2013. |

| [2] | SHIVITZ R, KENDRICK R, MASON J, et al. Space Object Tracking (SPOT) facility[C]//Proceedings of SPIE. 2014. |

| [3] | UETSUHARA M, IKOMA N. Faint debris detection by particle based track-before-detect method[C]//Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference. 2014. |

| [4] | DAO P, RAST R, SCHLAEGEL W, et al. Track-before-detect algorithm for faint moving objects based on random sampling and consensus[C]//Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference. 2014. |

| [5] | JOHNSTON L A, KRISHNAMURTHY V. Performance analysis of a dynamic programming track before detect algorithm[J]. IEEE Transactions on Aerospace and Electronic Systems, 2002, 38(1): 228–242. DOI: 10.1109/7.993242 |

| [6] | GARVANOV I, KABAKCHIEV C. Radar detection and track in presence of impulse interference by using the polar hough transform[C]//Proceedings of the European Microwave Association. 2007: 170-175. |

| [7] | YANAGISAWA T, NAKAJIMA A, KADOTA K, et al. Automatic detection algorithm for small moving objects[J]. Publications of the Astronomical Society of Japan, 2005, 57(2): 399–408. DOI: 10.1093/pasj/57.2.399 |

| [8] | TORTEEKA P, GAO P Q, SHEN M, et al. Space debris tracking based on fuzzy running Gaussian average adaptive particle filter track-before-detect algorithm[J]. Research in Astronomy and Astrophysics, 2017, 17(2): 51–62. |

| [9] | SALMOND D J, BIRCH H. A particle filter for track-before-detect[C]//Proceedings of the American Control Conference. 2001: 3755-3760. |

| [10] | PAPOULIS A. Probability, random variables, and stochastic processes[M]. China Machine Press, 1965. |

| [11] | YU H H, GAO P Q, SHEN M, et al. Analysis of the detection capability of space debris laser ranging[J]. Astronomical Research & Technology, 2016, 13(4): 416-421. DOI: 10.3969/j.issn.1672-7673.2016.04.005 |

| [12] | ODA H, YANAGISAWA T, KUROSAKI H, et al. Optical observation, image-processing, and detection of space debris in geosynchronous Earth orbit[C]//Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference. 2014. |